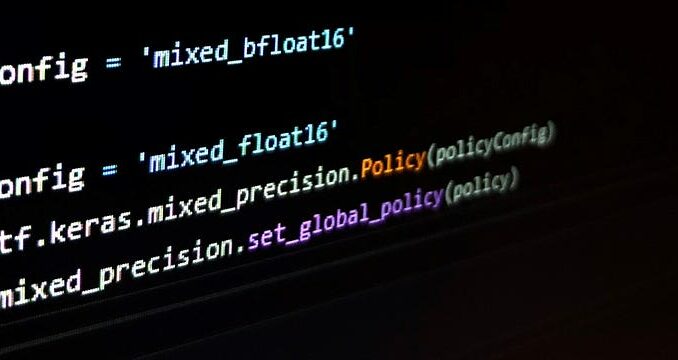

In this post, I will show you how to speed up your training on a suitable GPU or TPU using mixed precision. First, I will briefly introduce the different floating-point formats. Second, I will show you step by step how to implement this speed-up yourself using TensorFlow. Detailed documentation can be found in [1].

Outline

1. Introduction

The more bits are spent to represent a value, the more memory it occupies. Consequently, computations on these values take more time, since more bits must be read from and written to memory and moved around within registers.
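As a rough illustration of the memory side of this argument, the sketch below uses Python's standard `struct` module to report the size in bytes of the IEEE 754 half, single, and double precision formats, together with the raw storage needed for one million values. The count of one million and the helper names `bytes_per_value` and `tensor_bytes` are assumptions made for illustration; they are not part of TensorFlow.

```python
import struct

# struct format codes for the IEEE 754 binary floating-point formats
FORMATS = {"float16": "<e", "float32": "<f", "float64": "<d"}


def bytes_per_value(name):
    """Size in bytes of a single value stored in the given format."""
    return struct.calcsize(FORMATS[name])


def tensor_bytes(name, num_values):
    """Raw storage for num_values values (hypothetical helper, ignores any overhead)."""
    return bytes_per_value(name) * num_values


if __name__ == "__main__":
    for name in FORMATS:
        print(
            f"{name}: {bytes_per_value(name)} bytes per value, "
            f"{tensor_bytes(name, 1_000_000)} bytes for one million values"
        )
```

Halving the number of bits per value halves the amount of data that has to be moved, which is where the potential speed-up comes from.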

Mixed precision is a technique in which both 16-bit and 32-bit floating-point values are used to represent your variables, reducing the required memory and speeding up training. It relies on the fact that modern hardware accelerators, such as GPUs and TPUs, can run computations faster in 16-bit.
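One way to see why 16-bit values are combined with 32-bit ones rather than used on their own is to look at what happens to a small update in each format. The following is a minimal sketch in plain Python, not TensorFlow code: it rounds values to 16-bit and 32-bit floats with the standard `struct` module. The weight `1.0`, the update `1e-4`, and the helper names are hypothetical and chosen only for illustration.

```python
import struct


def to_float16(x):
    """Round a Python float to the nearest 16-bit float and convert it back."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_float32(x):
    """Round a Python float to the nearest 32-bit float and convert it back."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


weight = 1.0   # hypothetical variable value
update = 1e-4  # hypothetical small update

# Add the update with every value rounded to 16 bits, then to 32 bits.
w16 = to_float16(to_float16(weight) + to_float16(update))
w32 = to_float32(to_float32(weight) + to_float32(update))

if __name__ == "__main__":
    print("16-bit result:", w16)
    print("32-bit result:", w32)
```

In this sketch the 16-bit result is still exactly `1.0`, because the update is smaller than half the gap between neighbouring 16-bit values near 1, while the 32-bit result retains it. Small changes of this kind are one reason for keeping some values in 32-bit.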
