Data comes in many forms and shapes, and the ability to transform, enrich, and classify it into the appropriate categories is important to most data scientists. In this article, we will fine-tune a DistilBERT model for multiclass text classification on custom BBC data obtained from GitHub, using the Hugging Face Transformers library.

This article is based on a real work project in which you are expected to classify BBC text data into multiple categories using an appropriate model, run quality tests, and assess the accuracy of the resulting model.

To follow along with this project, you will need:

● A basic understanding of Python.

● Anaconda installed on your PC, or access to Google Colab.

● All required libraries installed.

● An open mind.

BERT stands for Bidirectional Encoder Representations from Transformers. It is a pre-trained language model that is extremely helpful for natural language processing (NLP) tasks.

Here are some key points on how BERT works:

● Bidirectional: Unlike conventional models that read text in only one direction (left to right or right to left), BERT takes in the whole sequence at once and uses the words on both sides of each word as context, much as people use the whole sentence to understand a word.

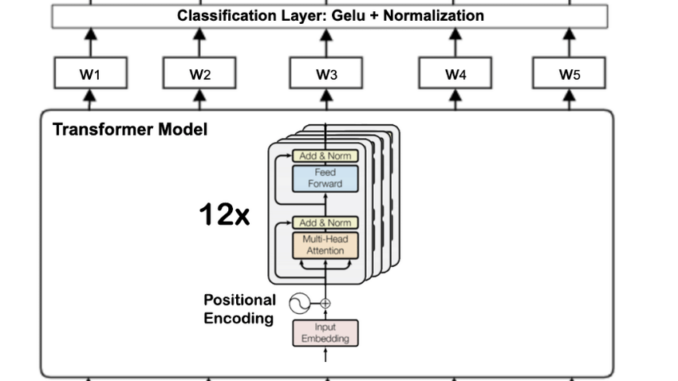

● Transformer architecture: This neural network architecture, built around self-attention, helps BERT determine how the words in a sentence relate to one another.

● Pre-trained: Because it has been pre-trained on a vast amount of text, BERT captures the subtleties of language and how words are used in different contexts.

By combining these techniques, BERT achieved state-of-the-art results on a range of natural language processing tasks, such as sentiment analysis, named entity recognition, and question answering.

This article is broken down into sections, from installing the necessary libraries and cleaning the data to training and evaluating the model. We will use Google Colab for this project because its free GPU access makes training much faster.

Let's import the libraries needed for this project.
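A minimal import cell for this walkthrough might look like the following. It is a sketch rather than the original notebook's exact cell: it assumes a Transformers 4.x release that still ships the TensorFlow model classes (TensorFlow support is being phased out of newer releases), and it also imports pandas, NumPy, and scikit-learn, which the later steps rely on.

```python
# Data handling
import numpy as np
import pandas as pd

# Deep learning framework used for training
import tensorflow as tf

# Hugging Face Transformers classes for DistilBERT (TensorFlow variants)
from transformers import DistilBertTokenizer, TFDistilBertForSequenceClassification

# scikit-learn helpers for splitting the data and evaluating the model
from sklearn.model_selection import train_test_split
from sklearn.metrics import classification_report, confusion_matrix
```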

The classes imported above come from Transformers, a popular natural language processing library developed by Hugging Face.

● DistilBertTokenizer: Tokenizes text into the input format expected by DistilBERT, a smaller and faster distilled version of BERT (Bidirectional Encoder Representations from Transformers).

● TFDistilBertForSequenceClassification: A TensorFlow-compatible implementation of DistilBERT with a classification head on top, intended for sequence classification tasks.

We will use the BBC multiclass data stored in our GitHub repo. The code below pulls the CSV file from the repo and reads it into a pandas DataFrame.
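As a sketch of this step, the helper below reads the CSV with `pandas.read_csv()`, which accepts a URL, a file path, or a file-like object. `CSV_URL` is a hypothetical placeholder because the repo's actual URL is not given here; point it at the raw version of the file in your own repo. The check for the `data` and `labels` columns is an addition for this sketch, based on the column names used later in the article.

```python
import io

import pandas as pd

# Hypothetical placeholder: replace with the raw URL of the CSV in your repo
CSV_URL = "https://raw.githubusercontent.com/<user>/<repo>/main/<file>.csv"


def load_bbc_dataframe(source=CSV_URL):
    """Read the BBC CSV (URL, file path or file-like object) into a DataFrame."""
    df = pd.read_csv(source)
    # Assumed column names, matching the 'data' and 'labels' columns used later
    missing = {"data", "labels"} - set(df.columns)
    if missing:
        raise ValueError(f"CSV is missing expected columns: {sorted(missing)}")
    return df


# Offline usage example with an in-memory CSV instead of the real URL
example = load_bbc_dataframe(io.StringIO("data,labels\nShares rose,business\nNew phone,tech\n"))
print(example.head())
```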

Let’s standardize the data with the following steps and make it ready for training.

The data contains both features and labels, but not every row has a proper label: some rows are marked unknown. We will build a model to assign categories to these unlabeled rows.

Use the unique() method to list all the values in the labels column. You will notice that every row belongs to a proper category except those marked unknown.

```python
# Get the unique items from the labels column
df['labels'].unique()
```

The following code filters out the unknown category from the labels column.

```python
# Filter out rows where the label is 'unknown'
df = df[df['labels'] != 'unknown']

# Print the filtered DataFrame
print(df.head())
```

Label encoding is important because most machine learning models cannot directly interpret text labels or raw categorical data, so we convert each category to an integer code before building the model.

```python
# Encode each label as an integer code
df['encoded_cat'] = df['labels'].astype('category').cat.codes
print(df.head())
```

Next, determine which label each encoded value corresponds to. This mapping will guide us when we interpret the model's output. For example, the code below shows the rows encoded as 4:

```python
# Show the rows whose encoded category is 4
filtered_df = df[df['encoded_cat'] == 4]

# Print the filtered DataFrame
print(filtered_df)
```

At this point, we can start building the model that will categorize the labels.

Building machine learning models starts with dividing data into features and labels.

● Features: The traits or qualities of your data that the model uses to learn and make predictions.

● Labels: The target values you want the model to predict. Separating them from the features makes it clear what the model is trying to learn.

```python
data_texts = df["data"].to_list()  # Features (not tokenized yet)
data_labels = df["encoded_cat"].to_list()  # Labels
```

We use scikit-learn to split the data so that, besides the training set, we keep separate validation and test sets for evaluating the model.
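A minimal sketch of a three-way split with scikit-learn's `train_test_split` is shown below. The 80/20 proportions, the fixed `random_state`, and the use of `stratify` (to keep class proportions similar across splits) are assumptions for illustration, not necessarily the article's original settings. The toy data stands in for `data_texts` and `data_labels`.

```python
from sklearn.model_selection import train_test_split


def split_data(texts, labels, test_size=0.2, val_size=0.2, seed=42):
    """Split into train/validation/test sets, stratified by label.

    test_size is a fraction of the full data; val_size is a fraction of
    what remains after the test set has been removed.
    """
    train_val_texts, test_texts, train_val_labels, test_labels = train_test_split(
        texts, labels, test_size=test_size, random_state=seed, stratify=labels
    )
    train_texts, val_texts, train_labels, val_labels = train_test_split(
        train_val_texts, train_val_labels, test_size=val_size,
        random_state=seed, stratify=train_val_labels,
    )
    return (train_texts, train_labels), (val_texts, val_labels), (test_texts, test_labels)


# Toy stand-in for data_texts / data_labels: 50 texts, 5 balanced classes
texts = [f"document {i}" for i in range(50)]
labels = [i % 5 for i in range(50)]
train, val, test = split_data(texts, labels)
print(len(train[0]), len(val[0]), len(test[0]))  # 32 8 10
```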

Next, we initialize a tokenizer for the DistilBERT model using the DistilBertTokenizer class from the Hugging Face Transformers library.
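A sketch of this step follows. It assumes the `distilbert-base-uncased` checkpoint, which may differ from the one used in the original notebook; loading it downloads the vocabulary from the Hugging Face Hub on first use. `load_tokenizer()` and `encode()` are hypothetical helper names: `encode()` truncates texts to DistilBERT's 512-token limit and pads them to a common length so they can be turned into tensors.

```python
from transformers import DistilBertTokenizer

# Assumed checkpoint; the original notebook may use a different one
MODEL_NAME = "distilbert-base-uncased"


def load_tokenizer(model_name=MODEL_NAME):
    """Load (downloading on first use) the tokenizer for a DistilBERT checkpoint."""
    return DistilBertTokenizer.from_pretrained(model_name)


def encode(tokenizer, texts, max_length=512):
    """Tokenize a list of texts into input_ids and attention_mask lists."""
    # Truncate long articles to DistilBERT's 512-token limit and pad every
    # text to the longest one in the list so the results are rectangular.
    return tokenizer(list(texts), truncation=True, padding=True, max_length=max_length)


# Usage, with train_texts/val_texts from the split step:
# tokenizer = load_tokenizer()
# train_encodings = encode(tokenizer, train_texts)
# val_encodings = encode(tokenizer, val_texts)
```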

We then create TensorFlow Dataset objects from the tokenized tensors using the tf.data.Dataset.from_tensor_slices() method, for both the training and validation sets.
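The helper below sketches this step. It pairs the tokenizer's output (converted to a plain dict of `input_ids` and `attention_mask`) with the integer labels, so each dataset element is a `(features, label)` tuple that Keras can consume. `make_dataset()` is a hypothetical name, and the tiny hand-written encodings only stand in for real tokenizer output.

```python
import tensorflow as tf


def make_dataset(encodings, labels):
    """Pair tokenizer output with labels as a tf.data.Dataset.

    `encodings` is a dict-like object (e.g. the tokenizer's BatchEncoding)
    mapping input names such as 'input_ids' and 'attention_mask' to
    equal-length padded lists.
    """
    return tf.data.Dataset.from_tensor_slices((dict(encodings), list(labels)))


# Tiny stand-in for real tokenizer output, for illustration only
fake_encodings = {
    "input_ids": [[101, 7592, 102], [101, 2088, 102]],
    "attention_mask": [[1, 1, 1], [1, 1, 1]],
}
ds = make_dataset(fake_encodings, [4, 3])
for features, label in ds.take(1):
    print(features["input_ids"].numpy(), label.numpy())
```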

The code below uses TensorFlow to initialize and compile a sequence classification model based on the DistilBERT architecture.

Let's train the model in TensorFlow with early stopping, setting the number of epochs to 2. For better accuracy, you may need to increase the number of epochs and tune other hyperparameters.

In machine learning, early stopping is a technique used to improve a model’s generalization and performance during training by preventing overfitting.

In the code above, the EarlyStopping callback in TensorFlow Keras stops training once the validation loss has failed to improve for a set number of consecutive epochs (the patience).
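The sketch below wraps `model.fit()` with an `EarlyStopping` callback that monitors `val_loss`. The `patience=1`, batch size of 16, and `restore_best_weights=True` settings are assumptions. So that the example runs without downloading DistilBERT, it is exercised on a tiny Dense model with random data; in the article's workflow you would pass the compiled DistilBERT model and the training and validation datasets instead.

```python
import numpy as np
import tensorflow as tf


def train_with_early_stopping(model, train_ds, val_ds, epochs=2, patience=1, batch_size=16):
    """Fit a compiled Keras model, stopping early if validation loss stalls."""
    early_stop = tf.keras.callbacks.EarlyStopping(
        monitor="val_loss",         # watch the loss on the validation set
        patience=patience,          # epochs without improvement before stopping
        restore_best_weights=True,  # roll back to the best epoch's weights
    )
    return model.fit(
        train_ds.shuffle(1000).batch(batch_size),
        validation_data=val_ds.batch(batch_size),
        epochs=epochs,
        callbacks=[early_stop],
    )


# Toy stand-in for DistilBERT so the sketch runs without downloading weights
x = np.random.rand(64, 8).astype("float32")
y = np.random.randint(0, 5, size=64)
toy = tf.keras.Sequential([tf.keras.Input(shape=(8,)), tf.keras.layers.Dense(5)])
toy.compile(
    optimizer="adam",
    loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
    metrics=["accuracy"],
)
data = tf.data.Dataset.from_tensor_slices((x, y))
history = train_with_early_stopping(toy, data.take(48), data.skip(48), epochs=3)
```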

model.summary() displays a summary of the neural network's architecture, including its layers and parameter counts.

For reusability, we can save the model with the following code. This writes the model, including an .h5 weights file, to a directory of your choice.

We can reload the saved model later with the from_pretrained() method of TFDistilBertForSequenceClassification, and the tokenizer with DistilBertTokenizer. The code below loads the .h5 file from the saved directory so it can be reused in future use cases.
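A sketch of saving and reloading with the Transformers `save_pretrained()` and `from_pretrained()` methods is below. `SAVE_DIR`, `save_artifacts()`, and `load_artifacts()` are hypothetical names; in Colab you could point `SAVE_DIR` at a folder on a mounted Google Drive. The model and tokenizer are saved side by side so both can be restored from the same directory.

```python
from transformers import DistilBertTokenizer, TFDistilBertForSequenceClassification

# Hypothetical directory name; in Colab this could be a folder on Google Drive
SAVE_DIR = "saved_distilbert_bbc"


def save_artifacts(model, tokenizer, save_dir=SAVE_DIR):
    """Save the fine-tuned model and its tokenizer to the same directory."""
    # Writes config.json and the TensorFlow weights (typically tf_model.h5)
    model.save_pretrained(save_dir)
    # Writes the vocabulary and tokenizer configuration files
    tokenizer.save_pretrained(save_dir)


def load_artifacts(save_dir=SAVE_DIR):
    """Reload the tokenizer and model saved by save_artifacts()."""
    tokenizer = DistilBertTokenizer.from_pretrained(save_dir)
    model = TFDistilBertForSequenceClassification.from_pretrained(save_dir)
    return tokenizer, model


# Usage (requires the trained model and tokenizer from the earlier steps):
# save_artifacts(model, tokenizer)
# tokenizer, model = load_artifacts()
```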

Now that training is done, we need to evaluate the model on real data to see how well it performs. The code below picks a single example from the held-out test texts.

```python
# Pick one example from the held-out test texts
test_text = test_texts[10]
test_text
```

With the variable set, let's make a prediction with the code below. The output is a numerical class code.
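The prediction step can be sketched as below: tokenize the text, run it through the model, and take the index of the largest logit with `np.argmax`. `predict_one()` is a hypothetical helper name. The fake tokenizer and model are stand-ins so the logic can be checked without downloading weights; with the real objects from the earlier steps you would call `predict_one(test_text, tokenizer, model)`.

```python
from types import SimpleNamespace

import numpy as np


def predict_one(text, tokenizer, model):
    """Return the predicted integer class code for a single text."""
    inputs = tokenizer(text, truncation=True, padding=True, return_tensors="tf")
    logits = model(inputs).logits  # shape (1, num_labels)
    return int(np.argmax(logits, axis=-1)[0])


# Stand-ins for the real tokenizer and model, for illustration only
def fake_tokenizer(text, **kwargs):
    return {"input_ids": np.array([[101, 102]])}


def fake_model(inputs):
    # Pretend logits for five classes; the largest value is at index 4
    return SimpleNamespace(logits=np.array([[0.1, 0.2, 0.0, 0.3, 2.5]]))


print(predict_one("Some headline", fake_tokenizer, fake_model))  # 4
```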

As the image above shows, the output is 4, which represents tech. Reading raw codes one text at a time is not practical, though: we need to predict every text and map each output code to its category name.

To measure the model's accuracy, we need to run it on the test set and see how accurately it predicts.

In machine learning, a confusion matrix is a tool for assessing how well a classification algorithm performs. It gives a more detailed picture of a model's accuracy than a single overall percentage, because it shows which classes are mistaken for which.
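To make the idea concrete, here is a small pure-Python sketch that counts a confusion matrix. Rows are true classes and columns are predicted classes (the same convention as scikit-learn's `confusion_matrix`), so correct predictions sit on the diagonal. The labels are made up for illustration.

```python
def confusion_matrix_counts(y_true, y_pred, num_classes):
    """Row i, column j counts items whose true class is i and predicted class is j."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for true_class, predicted_class in zip(y_true, y_pred):
        matrix[true_class][predicted_class] += 1
    return matrix


y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
cm = confusion_matrix_counts(y_true, y_pred, 3)
for row in cm:
    print(row)
# [1, 1, 0]
# [0, 2, 0]
# [1, 0, 1]
```

Here 4 of the 6 predictions are on the diagonal (accuracy 4/6), but the off-diagonal cells also show that a class 2 item was predicted as class 0, something a single accuracy figure would hide.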

Let's start by creating a function that makes predictions on text data using the loaded tokenizer and model.
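A sketch of such a function is shown below. It predicts in batches (`batch_size=32` is an assumed value) to keep memory use manageable on long lists of articles. `predict_labels()` is a hypothetical name, and the fake tokenizer and model exist only so the example runs without the fine-tuned weights; in practice you would pass the loaded tokenizer and model, e.g. `y_pred = predict_labels(test_texts, tokenizer, model)`.

```python
from types import SimpleNamespace

import numpy as np


def predict_labels(texts, tokenizer, model, batch_size=32):
    """Predict integer class codes for a list of texts, one batch at a time."""
    texts = list(texts)
    predictions = []
    for start in range(0, len(texts), batch_size):
        batch = texts[start:start + batch_size]
        inputs = tokenizer(batch, truncation=True, padding=True, return_tensors="tf")
        logits = model(inputs).logits  # shape (len(batch), num_labels)
        predictions.extend(np.argmax(logits, axis=-1).tolist())
    return predictions


# Stand-ins for the real tokenizer and model, for illustration only
def fake_tokenizer(batch, **kwargs):
    return {"batch_size": len(batch)}


def fake_model(inputs):
    # Three-class logits that always favour class 2
    return SimpleNamespace(logits=np.tile([0.0, 0.1, 3.0], (inputs["batch_size"], 1)))


print(predict_labels(["a", "b", "c"], fake_tokenizer, fake_model, batch_size=2))  # [2, 2, 2]
```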

Now, let's create a confusion matrix visualization to evaluate the model's performance on the classification task.

The classification report is a collection of metrics that details how well a model performs. Beyond overall accuracy, it gives a fuller view by breaking performance down by class into precision, recall, F1-score, and support.

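```python
from sklearn.metrics import classification_report

# y_pred holds the model's predicted codes for test_texts
print(classification_report(test_labels, y_pred))
```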
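To show what each row of the report contains, the sketch below computes per-class precision, recall, F1-score, and support by hand for a small set of made-up labels. It illustrates the definitions and is not a replacement for `classification_report`, which also adds averaged scores.

```python
def per_class_scores(y_true, y_pred, num_classes):
    """Precision, recall, F1 and support for each class (one report row each)."""
    pairs = list(zip(y_true, y_pred))
    scores = {}
    for c in range(num_classes):
        tp = sum(1 for t, p in pairs if t == c and p == c)  # correctly predicted as c
        fp = sum(1 for t, p in pairs if t != c and p == c)  # wrongly predicted as c
        fn = sum(1 for t, p in pairs if t == c and p != c)  # c predicted as something else
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        scores[c] = {"precision": precision, "recall": recall, "f1": f1, "support": tp + fn}
    return scores


y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
report = per_class_scores(y_true, y_pred, 3)
for c, s in report.items():
    print(c, s)
```

For class 1, both of its items are found (recall 1.0) but one class 0 item is also labelled 1 (precision 2/3), giving an F1-score of 0.8.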

Recall that we filtered out the unknown category from the labels column before training. Let's now use the trained model to make predictions on those unknown rows.

First, select the rows labeled unknown. Here, df_unknown is assumed to be a copy of the original DataFrame taken before the unknown rows were filtered out.

```python
unlabelled_df = df_unknown[df_unknown['labels'] == 'unknown']
print(unlabelled_df.head())
```

Converting the column to a list makes the data easier to work with.

```python
unlabelled_data = unlabelled_df['data'].to_list()
len(unlabelled_data)
```

The code below loops through the unlabeled texts, stores each prediction in a new column, and then saves the result as a CSV file on Google Drive.
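The sketch below shows one way to do this with pandas. `predict_fn` stands for any function that maps a single text to an integer code (for example, a wrapper around the prediction code from earlier), and `predicted_code` is a hypothetical column name. The default path matches the `model_prediction.csv` file loaded in the next step; to save to Google Drive in Colab, you would first mount it with `google.colab.drive.mount()` and pass a path under the mounted folder. The demo uses a dummy predictor and a temporary directory.

```python
import os
import tempfile

import pandas as pd


def add_predictions_and_save(unlabelled_df, predict_fn, path="model_prediction.csv"):
    """Predict a code for each row's 'data' text, store it, and write a CSV."""
    out = unlabelled_df.copy()
    # 'predicted_code' is a hypothetical column name chosen for this sketch
    out["predicted_code"] = [predict_fn(text) for text in out["data"]]
    out.to_csv(path, index=False)
    return out


# Demo with a dummy predictor that always returns code 0
demo = pd.DataFrame({"data": ["Shares fell sharply", "Cup final tonight"],
                     "labels": ["unknown", "unknown"]})
demo_path = os.path.join(tempfile.mkdtemp(), "model_prediction.csv")
result = add_predictions_and_save(demo, lambda text: 0, demo_path)
print(result)
```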

Let's create a new column that gives a more descriptive name for each category label.

```python
import pandas as pd

# Load your CSV file into a DataFrame
df = pd.read_csv("model_prediction.csv")
```

Use the pandas map() method on the prediction column to convert the numeric codes into category names. This makes the labels much easier to read.

Lastly, add a new column called Predictions.
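As a sketch of the last two steps, the code below converts codes to readable names with `Series.map()` and stores them in the `Predictions` column. The code-to-label dictionary assumes the five BBC categories in alphabetical order, matching how pandas assigned the codes earlier and the article's observation that 4 is tech; if your labels differ, build the mapping from the training data instead. `predicted_code` and `add_prediction_names()` are hypothetical names.

```python
import pandas as pd

# Assumed mapping: alphabetical BBC categories, as produced by .cat.codes earlier
CODE_TO_LABEL = {0: "business", 1: "entertainment", 2: "politics", 3: "sport", 4: "tech"}


def add_prediction_names(df, code_column="predicted_code"):
    """Map integer codes to readable category names in a new 'Predictions' column."""
    out = df.copy()
    out["Predictions"] = out[code_column].map(CODE_TO_LABEL)
    return out


preds = pd.DataFrame({"data": ["Phone launch", "Election news"], "predicted_code": [4, 2]})
named = add_prediction_names(preds)
print(named)
```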

In this article, we learned how to build a multiclass text classifier using the DistilBERT transformer model. We covered data preprocessing, training, and measuring the accuracy of the trained model. All the code is available on GitHub. For a smooth coding experience, I suggest using Google Colab, which saves you the burden of creating a virtual environment and installing the many libraries this project needs.

