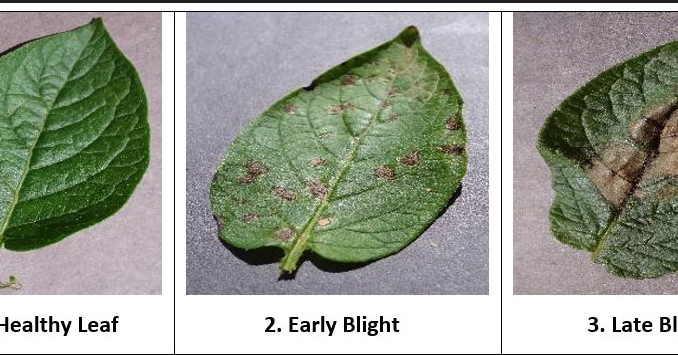

This is a simple image classification project that uses the pretrained VGG16 architecture to classify potato leaf diseases.

This project builds an image classification model on the VGG16 architecture to classify images into three distinct classes. The dataset is divided into training, validation, and test sets, and the images are loaded and rescaled with ImageDataGenerator. The VGG16 base, pre-trained on ImageNet, is kept frozen while custom dense layers added on top of it are trained, with early stopping to limit overfitting. The model’s performance is evaluated on the test set, achieving high accuracy, and a short script predicts the class of a new image from its file path. The project demonstrates transfer learning for image classification tasks.

Folder structure
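`flow_from_directory` infers labels from one subfolder per class and assigns class indices in alphanumeric order of the folder names. The sketch below builds an empty layout of that shape in a temporary directory. The class folder names are placeholders, not the dataset's actual names, and the split names simply mirror the `path/to/train`, `path/to/val`, and `path/to/test` paths used later.

```python
import os
import tempfile

# Hypothetical class folder names; substitute the ones in your dataset.
CLASS_NAMES = ["class_a", "class_b", "class_c"]
SPLITS = ["train", "val", "test"]


def make_layout(root):
    """Create <root>/<split>/<class>/ for every split and class."""
    for split in SPLITS:
        for name in CLASS_NAMES:
            os.makedirs(os.path.join(root, split, name), exist_ok=True)


def list_classes(split_dir):
    """Return the class folder names of one split in sorted order."""
    return sorted(
        d for d in os.listdir(split_dir)
        if os.path.isdir(os.path.join(split_dir, d))
    )


root = tempfile.mkdtemp()
make_layout(root)
for split in SPLITS:
    print(split, list_classes(os.path.join(root, split)))
```

Each split should contain the same set of class folders so that the label indices agree across training, validation, and testing.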

Import Libraries

import tensorflow as tf
from tensorflow.keras.preprocessing.image import ImageDataGenerator
from tensorflow.keras.applications import VGG16
from tensorflow.keras.layers import Dense, Flatten, Dropout
from tensorflow.keras.models import Model
from tensorflow.keras.optimizers import Adam
from tensorflow.keras.callbacks import EarlyStopping

Data Preprocessing and Augmentation

train_dir = 'path/to/train'
val_dir = 'path/to/val'
test_dir = 'path/to/test'

# Image Data Generators
train_datagen = ImageDataGenerator(rescale=1.0/255.0)
val_datagen = ImageDataGenerator(rescale=1.0/255.0)
test_datagen = ImageDataGenerator(rescale=1.0/255.0)

train_generator = train_datagen.flow_from_directory(
    train_dir,
    target_size=(224, 224),
    batch_size=32,
    class_mode='categorical'
)

val_generator = val_datagen.flow_from_directory(
    val_dir,
    target_size=(224, 224),
    batch_size=32,
    class_mode='categorical'
)

test_generator = test_datagen.flow_from_directory(
    test_dir,
    target_size=(224, 224),
    batch_size=32,
    class_mode='categorical'
)
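The generators above only rescale pixel values. If augmentation is wanted for the training split, one option is to pass transformation arguments to `ImageDataGenerator`. The values below are illustrative assumptions rather than settings from this project, and `augmentation_kwargs` and `build_train_datagen` are hypothetical names. The validation and test generators would stay rescale-only so that evaluation sees unmodified images.

```python
# Illustrative augmentation settings (assumed values, not from the original project).
augmentation_kwargs = {
    "rescale": 1.0 / 255.0,      # same scaling as the other generators
    "rotation_range": 20,        # random rotations of up to 20 degrees
    "width_shift_range": 0.1,    # horizontal shift, as a fraction of the width
    "height_shift_range": 0.1,   # vertical shift, as a fraction of the height
    "zoom_range": 0.2,           # random zoom factor in [0.8, 1.2]
    "horizontal_flip": True,     # random left-right flips
    "fill_mode": "nearest",      # how pixels exposed by a transform are filled
}


def build_train_datagen():
    """Build an augmenting generator intended for the training split only."""
    # Imported here so the settings above can be inspected without TensorFlow.
    from tensorflow.keras.preprocessing.image import ImageDataGenerator
    return ImageDataGenerator(**augmentation_kwargs)
```

The result of `build_train_datagen()` could then replace `train_datagen` in the `flow_from_directory` call for the training directory.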

Load VGG16 Model and Add Custom Layers

# Load the VGG16 model pre-trained on ImageNet, excluding the top (fully connected) layers.
base_model = VGG16(weights='imagenet', include_top=False, input_shape=(224, 224, 3))

# Add custom fully connected layers on top of the VGG16 base.
x = base_model.output
x = Flatten()(x)
x = Dense(512, activation='relu')(x)
x = Dropout(0.5)(x)
predictions = Dense(3, activation='softmax')(x)  # Assuming 3 classes
model = Model(inputs=base_model.input, outputs=predictions)
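To get a feel for the size of the custom head, the parameter counts can be worked out by hand. With a 224x224 input, the five pooling stages of VGG16 reduce the spatial size to 7x7 with 512 channels, so `Flatten` yields 25,088 values. The helper `dense_params` below is a hypothetical name used only for this arithmetic sketch.

```python
# Feature map produced by VGG16 (include_top=False) for a 224x224x3 input:
# five 2x2 poolings give 224 / 32 = 7 per side, with 512 channels.
feature_h, feature_w, feature_c = 7, 7, 512
flat_units = feature_h * feature_w * feature_c


def dense_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


hidden = dense_params(flat_units, 512)  # Dense(512) after Flatten
output = dense_params(512, 3)           # Dense(3) softmax layer
head_total = hidden + output            # Flatten and Dropout add no parameters

print(flat_units)   # 25088
print(hidden)       # 12845568
print(output)       # 1539
print(head_total)   # 12847107
```

Almost all of the head's parameters sit in the first dense layer, which is one reason dropout is applied right after it.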

Freeze Base Layers: Freeze all layers in the VGG16 base model so that their weights are not updated during training.

for layer in base_model.layers:
    layer.trainable = False

Compile the model

model.compile(optimizer=Adam(learning_rate=1e-4),
              loss='categorical_crossentropy',
              metrics=['accuracy'])

Add an early stopping callback that halts training once validation accuracy stops improving. This helps prevent overfitting, and restoring the best weights keeps the model from the best-performing epoch.

early_stopping = EarlyStopping(
    monitor='val_accuracy',
    patience=5,  # Stop if validation accuracy has not improved for 5 consecutive epochs
    verbose=1,
    mode='max',
    restore_best_weights=True
)
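The effect of `patience` can be illustrated with a small pure-Python simulation. This is a simplified sketch of the idea, not Keras's implementation: it ignores options such as `min_delta`, and both the function name and the accuracy values are made up for the example.

```python
def simulate_early_stopping(val_accuracies, patience=5):
    """Return (stop_epoch, best_epoch) for a list of per-epoch accuracies.

    stop_epoch is None if training would run through every epoch.
    Epochs are counted from 0.
    """
    best = float("-inf")
    best_epoch = None
    wait = 0  # epochs since the last improvement
    for epoch, acc in enumerate(val_accuracies):
        if acc > best:
            best, best_epoch, wait = acc, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch
    return None, best_epoch


# Hypothetical validation accuracies, one per epoch.
history = [0.70, 0.80, 0.85, 0.84, 0.85, 0.83, 0.84, 0.82]
print(simulate_early_stopping(history, patience=5))  # (7, 2)
```

Here the best value first appears at epoch 2. Five epochs pass without beating it, so training stops at epoch 7, and with `restore_best_weights=True` the weights from epoch 2 would be the ones kept.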

Train the model

history = model.fit(
    train_generator,
    steps_per_epoch=train_generator.samples // train_generator.batch_size,
    validation_data=val_generator,
    validation_steps=val_generator.samples // val_generator.batch_size,
    epochs=100,  # Set a high number for epochs; early stopping will handle stopping early.
    callbacks=[early_stopping]
)
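The step counts above use floor division, so when the number of images is not a multiple of the batch size, the final partial batch is left out of a pass. The same expression is used for the evaluation step later on. The sketch below shows the arithmetic for an assumed dataset size; the figure of 1000 images is hypothetical, and `steps_and_leftover` is a name introduced only for this example.

```python
import math


def steps_and_leftover(num_samples, batch_size):
    """Compare floor and ceiling step counts for one pass over the data."""
    floor_steps = num_samples // batch_size
    ceil_steps = math.ceil(num_samples / batch_size)
    skipped = num_samples - floor_steps * batch_size  # images not visited with floor_steps
    return floor_steps, ceil_steps, skipped


# Hypothetical dataset of 1000 images with the batch size of 32 used above.
print(steps_and_leftover(1000, 32))  # (31, 32, 8)
```

If covering every image matters, for example when reporting test accuracy, one option is to leave the step argument unset so the generator's own length determines the number of batches.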

Evaluate and Save the model

# Evaluate the model
test_loss, test_accuracy = model.evaluate(test_generator, steps=test_generator.samples // test_generator.batch_size)
print(f'Test accuracy: {test_accuracy}')

# Save the model
model.save('vgg16_image_classification_model.h5')

Testing The model

Create Class label dictionary

import json

class_indices = {v: k for k, v in train_generator.class_indices.items()}  # Map class index -> class label

with open("class_names.json", "w") as f:
    json.dump(class_indices, f, indent=4)
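One detail worth knowing about this file: JSON object keys are always strings, so the integer indices are stored as `"0"`, `"1"`, and so on. The round trip below uses a made-up mapping with placeholder class names to show why a lookup after loading needs `str(...)` around the predicted index.

```python
import json

# Hypothetical mapping in the shape of `train_generator.class_indices` (name -> index).
class_indices = {"class_a": 0, "class_b": 1, "class_c": 2}

# Invert it so a predicted index can be turned back into a label.
index_to_name = {v: k for k, v in class_indices.items()}

# Simulate writing to and reading from class_names.json.
restored = json.loads(json.dumps(index_to_name))

print(index_to_name)  # {0: 'class_a', 1: 'class_b', 2: 'class_c'}
print(restored)       # {'0': 'class_a', '1': 'class_b', '2': 'class_c'}

predicted_index = 1
print(restored[str(predicted_index)])  # class_b
```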

Test Code

import cv2
import json
import numpy as np
from tensorflow.keras.preprocessing.image import img_to_array
from tensorflow.keras.models import load_model

model_path = "vgg16_image_classification_model.h5"
model = load_model(model_path)

with open("class_names.json") as f:
    class_dict = json.load(f)

test_image_path = r"Data"  # Add your image path here
image_obj = cv2.imread(test_image_path)
if image_obj is None:
    raise FileNotFoundError(f"Could not read image: {test_image_path}")

# OpenCV loads images in BGR order; the training generators loaded RGB, so convert.
image_obj = cv2.cvtColor(image_obj, cv2.COLOR_BGR2RGB)
test_image = cv2.resize(image_obj, (224, 224))

# Convert the image to a float array and scale it to [0, 1], matching the training rescale
test_image = img_to_array(test_image) / 255

# Add a batch dimension: 3D (224, 224, 3) -> 4D (1, 224, 224, 3)
test_image = np.expand_dims(test_image, axis=0)

result = model.predict(test_image)
pred = np.argmax(result, axis=1)
predicted_val = int(pred[0])

# The keys loaded from JSON are strings, so look up with str()
predicted_disease = class_dict[str(predicted_val)]
print("Disease : ", predicted_disease)
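The array handling in the test code can be checked without a model or an image file. The sketch below substitutes a random array for the resized image and a made-up probability vector for the model output. It also includes a BGR to RGB channel swap, since OpenCV reads images in BGR order while the Keras directory generators used for training load RGB.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in for a resized OpenCV image: height x width x channels, BGR order, uint8.
bgr = rng.integers(0, 256, size=(224, 224, 3), dtype=np.uint8)

# Reverse the channel axis to go from BGR to RGB.
rgb = bgr[..., ::-1]

# Scale to [0, 1] as floats, matching the rescale=1/255 used in training.
x = rgb.astype("float32") / 255.0

# Add the batch dimension the model expects.
batch = np.expand_dims(x, axis=0)
print(batch.shape)  # (1, 224, 224, 3)

# Hypothetical softmax output for one image and three classes.
probs = np.array([[0.1, 0.7, 0.2]])
pred_index = int(np.argmax(probs, axis=1)[0])
print(pred_index)  # 1
```

Indexing the `argmax` result with `[0]` before calling `int` avoids converting a one-element array to a scalar, which recent NumPy releases deprecate.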
