Training a machine learning model often involves monitoring metrics such as loss and accuracy to evaluate performance. In machine learning, and particularly with gradient descent-based models, the training loss is usually lower than the validation loss. This is because the model has been trained on the training data, while the validation data is unseen. But there are situations where a model’s training loss is higher than its validation loss. I know this is counterintuitive, but it happens.

Surprisingly, the inferences drawn from the epoch vs. loss plot can also be misleading. The discrepancy arises because frameworks like TensorFlow and PyTorch calculate the loss differently during training than during testing, so the true training loss can be noticeably lower than the reported training loss.

To understand this better, let’s explore each factor influencing this outcome step by step.

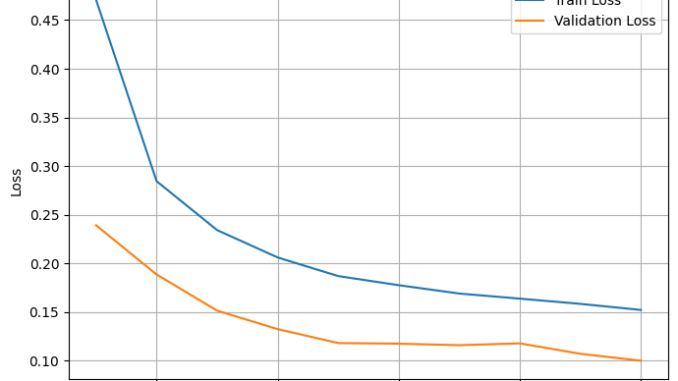

A loss curve plots the training loss (and usually the validation loss) on the y-axis against the number of iterations or epochs on the x-axis. Loss curves provide critical insight into the model’s learning process and performance. By examining the loss curve, we can make several key inferences:

1. Model Convergence: Steadily decreasing training and validation loss indicates learning and performance improvement. When both losses level off, the model has likely converged, at least for the current configuration.

2. Model Fit: The gap between the training loss and the validation loss indicates how well the model fits the data. Ideally, the gap stays small and the two curves do not diverge; a validation loss that rises while the training loss keeps falling is the classic sign of overfitting.

3. Learning Rate Issues: Significant fluctuations in both losses suggest a too-high learning rate, causing overshooting. A very slow decrease suggests a too-low learning rate, making training inefficient.

4. Early Stopping: If the validation loss stops improving (or starts to rise) for several epochs, it might be time to stop training to prevent overfitting and save resources.

5. Model Capacity: Persistent high losses indicate the model may lack capacity (e.g., insufficient parameters or layers) to capture data patterns.

6. Training Quality: A smooth curve for both losses indicates stable training, while noisy curves suggest issues with the data (e.g., noise or inconsistencies) or the model’s robustness.
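Inference 4 is usually automated with a "patience" rule. The sketch below is a minimal, framework-free illustration, assuming the validation losses are collected in a list after each epoch. The helper `should_stop` and its parameters are hypothetical names; Keras’s `EarlyStopping` callback exposes similarly named `patience` and `min_delta` arguments, but this is not its implementation.

```python
def should_stop(val_losses, patience=3, min_delta=0.0):
    """Hypothetical patience rule: True if none of the last `patience` epochs
    improved on the earlier best validation loss by more than `min_delta`."""
    if len(val_losses) <= patience:
        return False  # not enough history yet to call it a plateau
    best_before = min(val_losses[:-patience])   # best loss before the window
    recent_best = min(val_losses[-patience:])   # best loss inside the window
    return recent_best >= best_before - min_delta


history = [0.92, 0.71, 0.64, 0.65, 0.66, 0.67]
print(should_stop(history, patience=3))  # True: three epochs without beating 0.64
```

Comparing against the best value seen before the window, rather than the previous epoch, keeps a single noisy epoch from triggering or resetting the rule.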

While the epoch vs. loss plot is a valuable tool, it’s important to understand that the inferences drawn from it may sometimes be misleading due to differences in how loss is calculated during training and testing in frameworks like TensorFlow and PyTorch. The reported training loss is often higher than the true training loss (the base loss alone), primarily because the training-time calculation includes additional factors such as regularization. Let’s look at how models behave differently in train and test mode.

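Training Loss: When the model is in train mode, the loss includes the base loss (such as mean squared error for regression or cross-entropy for classification) plus any regularization terms. Mathematically, it can be expressed as:

$$L_{\text{train}} = L_{\text{base}}(y, \hat{y}) + \lambda \, R(\mathbf{w})$$

where $y$ and $\hat{y}$ are the targets and predictions, $R(\mathbf{w})$ is the regularization penalty on the weights $\mathbf{w}$, and $\lambda$ controls its strength.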

Additionally, the reported training loss is the average of the loss over all the batches within each epoch. Because stochastic optimizers update the parameters after every batch, each batch’s loss is computed with a slightly different set of weights, which makes the training loss fluctuate and appear less stable. It also means that losses from early batches, computed with older and usually worse weights, are averaged together with later ones, so the epoch average tends to overstate the loss of the model as it stands at the end of the epoch.
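To see how averaging over a moving model inflates the reported number, here is a minimal pure-Python sketch. It assumes a one-parameter linear model `y_hat = w * x` trained with plain mini-batch SGD on synthetic data generated from `y = 3x`; the function names, data, and learning rate are all illustrative. It logs the mean of the per-batch losses for one epoch and compares it with the loss of the final weights on the same data.

```python
def mse(w, batch):
    # Mean squared error of the model y_hat = w * x on one batch of (x, y) pairs.
    return sum((w * x - y) ** 2 for x, y in batch) / len(batch)


def run_epoch(w, batches, lr):
    batch_losses = []
    for batch in batches:
        batch_losses.append(mse(w, batch))  # loss is logged before the update
        # Gradient of the batch MSE with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in batch) / len(batch)
        w -= lr * grad  # the weights change in the middle of the epoch
    reported = sum(batch_losses) / len(batch_losses)  # epoch-level average
    return w, reported


# Synthetic data from y = 3x, split into four batches of two points each.
data = [(x / 4, 3 * x / 4) for x in range(1, 9)]
batches = [data[i:i + 2] for i in range(0, len(data), 2)]

w_final, reported = run_epoch(0.0, batches, lr=0.1)
end_of_epoch = mse(w_final, data)  # loss of the weights the epoch ended with
print(f"reported: {reported:.4f}, end-of-epoch: {end_of_epoch:.4f}")
```

No regularization is involved here, so the gap between the two numbers comes purely from averaging losses that were computed with earlier, worse weights.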

Testing (Validation) Loss: When the model is in test/eval mode, its weights are already fixed, so the final loss is only the base loss. Regularization is not enabled, as it is only part of the training process. Mathematically, it can be written as:

$$L_{\text{test}} = L_{\text{base}}(y, \hat{y})$$

But you might wonder why, in most loss curves, the validation loss is still higher even though the differences in calculation method should push the training loss up. The reason is twofold:

- The gap between the true training loss and the validation loss is often large enough that, even after regularization adds to the training loss, the training loss remains lower than the validation loss.

- Each problem has its own unique set of loss and regularization characteristics, so the effects of these differences cannot be generalized across all scenarios.

Dropout: During training, dropout randomly disables a certain percentage of neurons, effectively creating a different, “thinned” network at each training step. The loss calculated during these steps reflects the performance of this regularized network, so the displayed loss is generally higher because the network isn’t using its full capacity.

When the model is in test mode, dropout is not applied. The network uses all its neurons, thus operating at full capacity. This typically results in a lower loss and higher accuracy compared to those reported during training epochs, as the network can now leverage all available resources and information.
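The train/eval asymmetry of dropout fits in a few lines of pure Python. This sketch assumes *inverted* dropout, the variant used by PyTorch’s `nn.Dropout` and the Keras `Dropout` layer, where surviving activations are scaled by `1 / (1 - p)` during training so that evaluation is a plain identity. The `dropout` function itself is a hypothetical helper, not either library’s code.

```python
import random


def dropout(activations, p, training, rng=random):
    """Hypothetical inverted-dropout helper on a list of activations."""
    if not training or p == 0.0:
        return list(activations)  # eval mode: identity, full network is used
    keep = 1.0 - p
    # Each unit is zeroed with probability p; survivors are scaled by 1/keep
    # so the expected activation matches what eval mode produces.
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


acts = [0.5, 1.0, 1.5, 2.0]
rng = random.Random(0)
print(dropout(acts, p=0.5, training=True, rng=rng))  # each unit zeroed or doubled
print(dropout(acts, p=0.5, training=False))          # unchanged activations
```

Because the training-mode output changes from step to step while the eval-mode output does not, any loss computed from it carries the extra noise described above.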

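L1 and L2 Regularization: Both L1 (Lasso) and L2 (Ridge) regularization add penalties to the loss function to prevent overfitting by penalizing the magnitude of the model’s coefficients. L1 regularization adds a penalty proportional to the sum of the absolute values of the coefficients, while L2 regularization adds a penalty proportional to the sum of their squares. Mathematically, this can be expressed as:

$$L_{\text{L1}} = L_{\text{base}}(y, \hat{y}) + \lambda \sum_{i} |w_i| \qquad L_{\text{L2}} = L_{\text{base}}(y, \hat{y}) + \lambda \sum_{i} w_i^2$$

where $w_i$ are the model’s weights and $\lambda$ sets the penalty strength.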

Both types of regularization increase the training loss by adding these penalties to the base loss, but they are not applied during testing, leading to different calculations.
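A minimal sketch of the two formulas, assuming a linear model with mean squared error as the base loss and a single penalty strength `lam`. All function names here are hypothetical, and real frameworks attach penalties in their own ways; the point is only that the train-mode value adds the penalty while the eval-mode value does not.

```python
def base_loss(w, X, y):
    # Mean squared error of a linear model y_hat = X @ w, using plain lists.
    preds = [sum(wi * xi for wi, xi in zip(w, row)) for row in X]
    return sum((p - t) ** 2 for p, t in zip(preds, y)) / len(y)


def l1_penalty(w, lam):
    return lam * sum(abs(wi) for wi in w)  # lambda * sum |w_i|


def l2_penalty(w, lam):
    return lam * sum(wi ** 2 for wi in w)  # lambda * sum w_i^2


def reported_loss(w, X, y, lam, kind, training):
    """Train mode adds the chosen penalty; eval mode returns the base loss only."""
    loss = base_loss(w, X, y)
    if training:
        loss += l1_penalty(w, lam) if kind == "l1" else l2_penalty(w, lam)
    return loss


w = [2.0, -1.0]
X = [[1.0, 0.0], [0.0, 1.0]]
y = [1.5, -1.0]
print(f"{reported_loss(w, X, y, 0.1, 'l1', training=False):.3f}")  # 0.125
print(f"{reported_loss(w, X, y, 0.1, 'l1', training=True):.3f}")   # 0.425
print(f"{reported_loss(w, X, y, 0.1, 'l2', training=True):.3f}")   # 0.625
```

Same weights, same data: only the mode changes, yet the training-mode numbers are three to five times the eval-mode value here.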

Understanding these nuances is crucial for correctly interpreting the loss curves. While a higher training loss might initially seem concerning, recognizing the role of regularization helps contextualize these values and leads to more accurate inferences about model performance.

Although regularization is the primary reason behind the increase in training loss, other factors can also contribute, including:

- Batch Normalization: During training, batch normalization normalizes with the statistics of the current batch, which can introduce noise and raise the loss. During validation, it uses the moving averages accumulated during training, which typically yields a lower loss.

- Data Augmentation: Techniques like random cropping, flipping, and rotation are applied during training, making the data more challenging and increasing training loss. These are not applied to the validation set, leading to lower validation loss.

- Shuffling of Training Data: Shuffling training data can cause variations in loss calculations, as the model sees different batches in each epoch, potentially resulting in higher training loss compared to the validation loss.

- Representativeness of Data: Discrepancies can occur if the training or validation dataset does not adequately capture the full complexity of the data domain. For example, if the validation set is small or happens to contain easier examples than the training set, the validation loss can come out lower than the training loss.

- Stochastic Training Variability: The random nature of model initialization and optimization can introduce variability in training loss, affecting model stability and convergence.
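For batch normalization, the train/eval switch is easiest to see on a single feature. This is a minimal pure-Python sketch, not a library implementation: it omits the learnable scale and shift parameters, uses the biased batch variance, and assumes a running-average update of the form `running = (1 - momentum) * running + momentum * batch_stat`, which mirrors PyTorch’s convention (Keras defines its `momentum` argument the other way around).

```python
import math


class BatchNorm1D:
    """Hypothetical batch norm for one scalar feature, no learnable scale/shift."""

    def __init__(self, momentum=0.1, eps=1e-5):
        self.momentum, self.eps = momentum, eps
        self.running_mean, self.running_var = 0.0, 1.0

    def __call__(self, batch, training):
        if training:
            # Training: normalize with this batch's own statistics...
            mean = sum(batch) / len(batch)
            var = sum((v - mean) ** 2 for v in batch) / len(batch)
            # ...and fold them into the running averages used at eval time.
            m = self.momentum
            self.running_mean = (1 - m) * self.running_mean + m * mean
            self.running_var = (1 - m) * self.running_var + m * var
        else:
            # Evaluation: use the accumulated running statistics instead.
            mean, var = self.running_mean, self.running_var
        return [(v - mean) / math.sqrt(var + self.eps) for v in batch]


bn = BatchNorm1D()
batch = [1.0, 2.0, 3.0, 4.0]
train_out = bn(batch, training=True)   # depends only on this batch
eval_out = bn(batch, training=False)   # depends on the running averages
```

The same inputs produce different outputs, and therefore different losses, depending only on the mode flag.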

Why do frameworks report the loss this way? The answer lies in the balance between computational efficiency and practical utility. The reported loss value is straightforward to obtain after a forward pass, which streamlines the training process. If frameworks were to separate the regularization components from the total training loss, it would introduce significant computational overhead. For example, recalculating each batch’s loss without regularization would slow down training significantly.

Additionally, frameworks often assume that the impact of regularization terms is relatively small and thus negligible. However, this assumption is subjective and varies depending on the specific problem and dataset. The trade-off between simplicity and computational expense, along with the variability in the impact of regularization, is why this discrepancy is accepted.

To address the discrepancy between training and testing loss calculations, you can switch the model to test mode and pass the training data through it after each step (batch or epoch) to obtain the true loss, free of regularization effects. This adds significant computational overhead, but it is a viable solution. While this is quite straightforward in PyTorch, the implementation is not as simple in TensorFlow Keras.
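The procedure can be sketched without any framework. The example below is a minimal illustration and not the trueloss implementation. It assumes a one-weight linear model trained by SGD on an L2-penalized loss. For each epoch it logs the average of the penalized batch losses (the train-mode number) and then, with the weights frozen, re-evaluates the plain base loss on the full training set (the eval-mode number). `train_with_true_loss` and its arguments are hypothetical.

```python
def train_with_true_loss(data, batch_size=2, lr=0.05, lam=0.1, epochs=3):
    """Return per-epoch (reported, true) training losses for y_hat = w * x."""
    w = 0.0
    history = []
    for _ in range(epochs):
        batch_losses = []
        for i in range(0, len(data), batch_size):
            batch = data[i:i + batch_size]
            n = len(batch)
            base = sum((w * x - y) ** 2 for x, y in batch) / n
            batch_losses.append(base + lam * w ** 2)  # train mode: base + L2
            grad = sum(2 * (w * x - y) * x for x, y in batch) / n + 2 * lam * w
            w -= lr * grad
        reported = sum(batch_losses) / len(batch_losses)
        # Eval mode: frozen weights, whole training set, base loss only.
        true_loss = sum((w * x - y) ** 2 for x, y in data) / len(data)
        history.append((reported, true_loss))
    return history


data = [(x / 4, 3 * x / 4) for x in range(1, 9)]  # synthetic y = 3x
history = train_with_true_loss(data)
for epoch, (reported, true_loss) in enumerate(history, start=1):
    print(f"epoch {epoch}: reported={reported:.4f} true={true_loss:.4f}")
```

The extra pass over the training data is exactly the overhead mentioned above; in a real framework it would be one additional evaluation call per epoch with dropout and batch-norm updates disabled.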

To simplify this process in TensorFlow Keras, I created a library called trueloss. This library is specifically designed for Keras and helps to compute and plot the true training loss and accuracy of a model without the influence of regularization.

Key Features of trueloss:

- Computes true training loss and accuracy without regularization effects.

- Seamlessly integrates with Keras, using the same fit method parameters and defaults.

- Returns the exact same `History` instance as Keras’ `model.fit()`, ensuring compatibility with existing workflows and codebases.

By using trueloss, you can obtain a clearer picture of your model’s true performance on the training data. This helps you make more informed decisions during the training process.

You can view the trueloss PyPI page here. Here is an example of how to use the trueloss library:

To visually compare the loss over epochs with and without using trueloss, see the following plots:

So, is the loss vs. epoch plot flawed? Not completely. It remains one of the most widely used plots in machine learning for monitoring model performance. However, adding an epoch vs. true loss plot can provide a more accurate understanding of the model’s behavior. It’s an additional metric that complements the traditional loss plot, offering deeper insights into the model’s training dynamics.

Understanding the nuances of training and validation loss is crucial for interpreting your model’s performance correctly. By recognizing the impact of regularization and using tools like trueloss, you can gain deeper insights into your model’s behavior and make more informed adjustments during training. This approach ensures that your model not only performs well on training data but also generalizes effectively to new, unseen data.

