Downloading Scanner API data using a Microsoft Fabric Notebook

I was recently working with a customer where they had more then 100 app workspaces and I was running into some challenges when using the Scanner API in Power Automate.

I then discovered this blog post where they detailed how to download the Scanner API data (DataXbi – admin-scan.py), it was not quite in the format that I needed, so below is my modified code.

The reason that I am downloading the Scanner API into a JSON file is that I find it easier to extract the data that I need using Power BI Desktop.

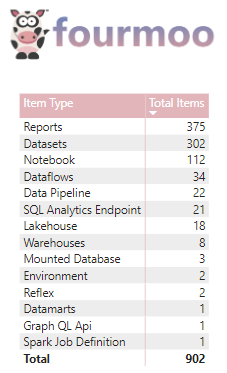

As you can see below, there have been some additions to the Power BI Scanner API where I can now see all my Fabric Items (That is a future blog post)

The first code cell is where I am authorizing getting the values I need from my Azure Key Vault (Using Sempy to Authenticate to Fabric/Power BI APIs)

Please note that you will have to update the following line numbers below with your respective information:

- This will be your Azure Key Vault Name.

- This will be your tenant ID

- This will be your Application ID in your Azure Key Vault.

- This will be your Client Secret in your Azure Key Vault.

- This will be your domain name.

The section below is where it has the definitions created based on the code from DataXbi.

All that I did was to only use the definitions I needed to extract the data.

- def get_access_token():

- def scan_worspaces(access_token, excludePersonalWorkspaces=True):

- def get_workspace_info(access_token, workspaces):

- def get_scan_status(access_token, api_url):

- def get_scan_result(access_token, api_url):

The final section is where I get the access token and then run the scanner API to get all the workspaces that need to be scanned.

In my example I always scan all workspaces every day.

The next code cell is where it then scans and downloads all the workspace data.

As in the code supplied if there are more than 100 app workspaces it will load them 100 workspaces at a time.

The final section of notebook is where I am then saving the data from the Scanner API to a JSON file in my Lakehouse.

I save it with the current date’s filename so that when I later use this file in Power BI Desktop, I am always getting the latest version.

Once the notebook has completed, I can see it in my Lakehouse view as shown below.

In this blog post I have shown you how I use a Microsoft Fabric Notebook to download the Scanner API data and store it in a JSON File.

You can get a copy of the Notebook here: Fabric/Blog-Scanner API.ipynb at main · GilbertQue/Fabric (github.com)

Thanks for reading and any comments or suggestions are always welcome 😊

If you do not authenticate using an Azure Key Vault, you can use the code below to authenticate.

NOTE: You will need to have the appropriate permissions in order to use the Scanner API which can be found here: Admin – WorkspaceInfo GetModifiedWorkspaces – REST API (Power BI Power BI REST APIs) | Microsoft Learn

import requests, os, datetime

############################################################

# Authentication - Using owner authentication

############################################################

access_token = mssparkutils.credentials.getToken("https://analysis.windows.net/powerbi/api")

print('Successfully authenticated.')

############################################################

# Get Refreshables

############################################################

base_url="https://api.powerbi.com/v1.0/myorg/"

header = {'Authorization': f'Bearer {access_token}'}

Be the first to comment