Something that is not very popular in communities, and it’s useful, is the possibility of building real-time tiles in Power BI. Nowadays, the final user always asks for real-time BI because they don’t understand how BI works very well, and, after you explain, they change their mind, letting you configure just N refreshes a day. The eight times a day refresh usually wins the fight. However, there are real projects for streaming like checking a small piece of actual specific data. It’s usually when they are thinking of a TV show state for a process or entity. The real streaming is for that kind of data, for what is happening right now. Let’s call Streaming Dataset in Power BI to all data that is mandatory to have a refresh of less than 15 minutes (Direct Query cache time).

Power BI will only show real time on dashboards. This board that captures on tiles has specific data already filtered. I won’t explain all types of streaming possibilities that you can check on this doc. I’ll focus in the solution that I think is the most useful and I recommend. I’m talking about the Push Data, also known as a Hybrid dataset. The best advantage of this method is the opportunity of building a report and DAX measures against a streaming dataset, giving us strength over Data Visualization.

Let’s get started. In order to go through these steps, you need a Power BI account (free or pro) and a place to run Python code (local, VM, runbook or Azure function).

The first step is creating the streaming dataset. This will be a Live Connection. This means that in Power BI desktop we will only be able to create visualizations and measures. We won’t have Power Query and the rest of the DAX code features.

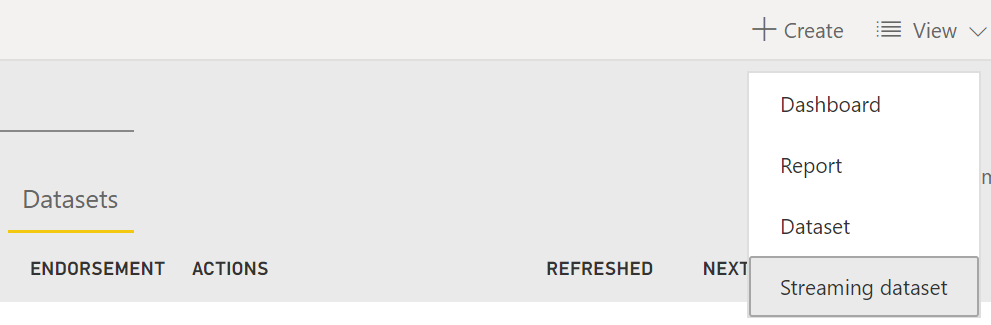

Under create menu over a Dataset tab in a workspace, we can see the streaming option:

There are three methods. Let’s pick API.

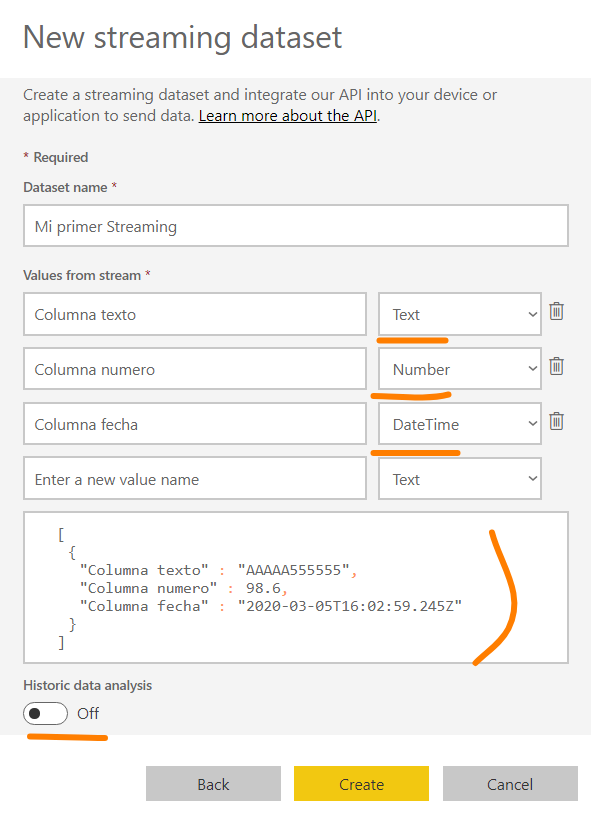

Before finishing the creation, Power BI will help us creating an API to receive a Post request with the columns, we need to see what the body will look like.

Like the picture shows, you must write a name and the column definition. This definition will be the only table available in the live connection of Power BI. We must think carefully if we have all the data we need in this only table. We must solve this. The data merges in one table. Let’s also define data types (number, date and string).

Before creating the HTTP POST, we can see an example of the body and a check with “Historic data analysis” on it. That will open two scenarios for us:

- Off: Generate only one entry that will replace the data on each HTTP POST that our code pushes to Power BI. This is great for actual states that are previously calculated.

- On: With this option, each HTTP POST will insert rows on the unique table, letting us create new kinds of calculations or check not only my last entry but also the rest of the day or week. It is important to know that it only allows 1 million rows. When you get to that number of rows, the report dies until you reset the data. In order to reset the date, you can edit the streaming data set and switch off the historic data to switch it on again later.

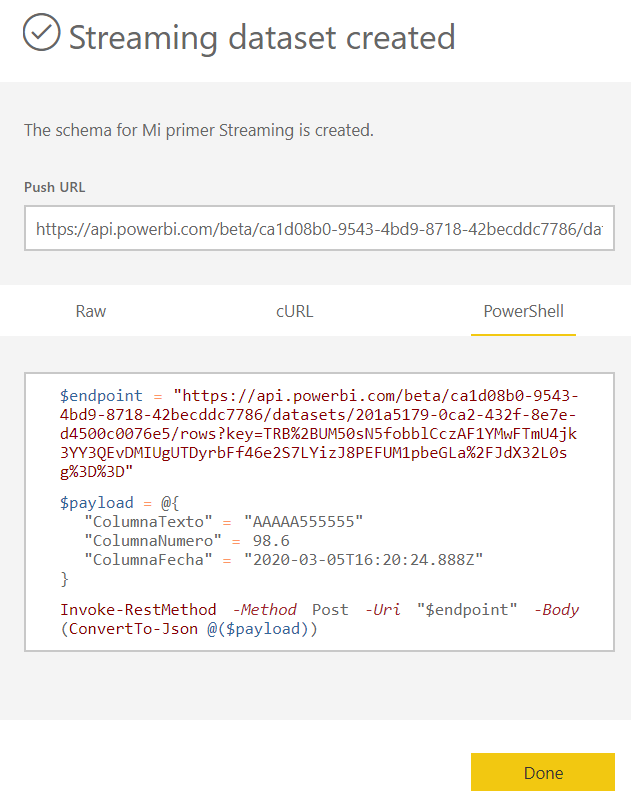

Turn Historic Data on and continue. Now that the streaming dataflow is created, Power BI will show us the API URL to push our data.

From this time on, our workspace contains a streaming dataset that we can connect with Power BI Desktop. The picture shows us an example of the code in PowerShell. In our case, the code will be in Python and not just for one row but for a complete pandas DataFrame.

You can check the Python script on my GitHub right here. The script contains a place to do anything we want with pandas (connect to a source or apply any transformation). Then it shows two very important details like formatting the date columns and how to parse the data frame body as byte to complete the request as Power BI API accepts. It also has a small error handling.

If you want to run the script locally, you can use the last lines of the code, but if you plan to use it on Azure, make sure to remove that last part. I hope you know a bit of Python, in order to know this change, depending on where you run it.

Now that we have our streaming dataset receiving data every N seconds/minutes, we can build our Power BI report obtaining data from service like the doc shows. This way, we can start building visualizations and calculating measures. Everything we pin later to our dashboard will automatically change its data on each push.

Remember that only the dashboards will refresh its data on each push. The report won’t, you must click on refresh button if you want new data. You can check my original post in LaDataWeb here.

You are ready to go! hope you can build a streaming dataset and report with Python pandas now!

Be the first to comment