Creating a deployable model, like a chatbot, where raw data is fed directly to the model requires preprocessing inside the model layers. In a previous post, I worked with feature column transformations outside the model. However, feature column transformations should also be used inside the model layers. Feature column transformations and tf.keras.layers are great ways to do preprocessing inside the model, hiding the data preprocessing step from the user.

In this post, I do the preprocessing with tf.keras.layers completely inside the model, using the TensorFlow Functional API. The dataset and inspiration come from the TensorFlow on Google Cloud Training lab.

# import necessary libraries

import numpy as np

import pandas as pd

import tensorflow as tfimport pathlib

dataset_url = 'http://storage.googleapis.com/download.tensorflow.org/data/petfinder-mini.zip'

csv_file = 'gs://cloud-training/mlongcp/v3.0_MLonGC/toy_data/petfinder-mini_toy.csv'

tf.keras.utils.get_file('petfinder_mini.zip', dataset_url, extract=True, cache_dir='.')

df = pd.read_csv('datasets/petfinder-mini/petfinder-mini.csv')# Only look at data rows for two AdoptionSpeed types: 4 means NOT adopted,

# <2 means quickly adopted

df = df[(df['AdoptionSpeed']==4) | (df['AdoptionSpeed'] < 2)]

df = df.reset_index(drop=True, inplace=False)

# Change AdoptionSpeed

df['target'] = [1 if df['AdoptionSpeed'].iloc[i] == 4 else 0 for i in range(len(df))]

df = df.drop(['Description', 'AdoptionSpeed'], axis=1)

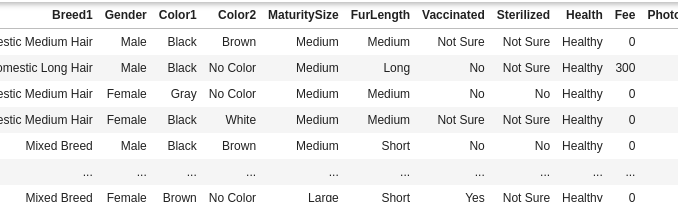

df

The goal of the modeling is to predict if a pet will be adopted or not, based on its type (cat or dog), age, breed, color, size, fur length, vaccination, health, etc.
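The filtering and labelling step in the code above can be sketched in NumPy on a few made-up AdoptionSpeed values (illustrative only, not taken from the dataset): rows with AdoptionSpeed 2 or 3 are dropped, 4 becomes target 1 (not adopted), and 0 or 1 become target 0 (quickly adopted). The helper name `make_binary_target` is hypothetical.

```python
import numpy as np

def make_binary_target(adoption_speed):
    """Keep AdoptionSpeed 4 (not adopted) or < 2 (quickly adopted) and label them 1 / 0."""
    speed = np.asarray(adoption_speed)
    keep = (speed == 4) | (speed < 2)          # same row filter as the DataFrame code
    target = np.where(speed[keep] == 4, 1, 0)  # 1 = not adopted, 0 = quickly adopted
    return keep, target

# Hypothetical AdoptionSpeed values for six pets
keep, target = make_binary_target([4, 0, 2, 1, 3, 4])
print(keep)    # [ True  True False  True False  True]
print(target)  # [1 0 0 1]
```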

```python
# Prepare X and Y
X_df = df.drop(['target'], axis=1)
Y = df['target'].tolist()

# Create the dataset from the feature DataFrame wrapped in a dict, and the Y list
ds_X_df_dict = tf.data.Dataset.from_tensor_slices((dict(X_df), Y))

batch_size = 5
ds = ds_X_df_dict.batch(batch_size, drop_remainder=True)

# Prefetch one batch so that input preparation overlaps with model execution
ds = ds.prefetch(1)

# Reshape each data column to (batch_size, 1) per batch
# (transpose_ds_per_batch is defined in the SUBFUNCTIONS section below)
ds_transposed_dict, ds_transposed_array = transpose_ds_per_batch(ds)

list(ds_transposed_dict.take(1).as_numpy_iterator())
```
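What transpose_ds_per_batch does to each batch can be sketched with NumPy on a hypothetical batch of three rows: every feature column of shape (batch_size,) is reshaped to (batch_size, 1), the shape expected by a tf.keras.Input(shape=(1,)), and the labels get the same treatment. The helper `reshape_batch` is a hypothetical stand-in, not part of TensorFlow.

```python
import numpy as np

def reshape_batch(features, labels):
    """Reshape every column of one batch from (batch_size,) to (batch_size, 1)."""
    batch_size = len(labels)
    reshaped = {name: np.reshape(col, (batch_size, 1)) for name, col in features.items()}
    return reshaped, np.reshape(labels, (batch_size, 1))

# Hypothetical batch of three pets
batch_x = {'Type': np.array(['Cat', 'Dog', 'Cat']), 'Age': np.array([3, 1, 12])}
batch_y = np.array([1, 0, 0])

x, y = reshape_batch(batch_x, batch_y)
print(x['Age'].shape, x['Type'].shape, y.shape)  # (3, 1) (3, 1) (3, 1)
```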

In the dataset, we can see that there is a mix of text and integer columns. tf.keras.layers can be used to convert all the text data to numbers so that all the data can be used to predict whether a pet will be adopted.
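To make the text-to-number step concrete, here is a pure NumPy sketch of what StringLookup computes with its default settings in recent TensorFlow 2.x versions (one out-of-vocabulary token at index 0, no mask token), followed by the output_mode='count' encoding used in this post. It only mimics the layer's behaviour for illustration; it is not the TensorFlow implementation, and the function names are hypothetical.

```python
import numpy as np

def lookup_indices(values, vocabulary):
    """Map strings to indices: vocabulary terms get 1..len(vocabulary), unknown strings get 0 (OOV)."""
    index = {term: i + 1 for i, term in enumerate(vocabulary)}
    return np.array([index.get(v, 0) for v in values])

def count_encode(values, vocabulary):
    """One row per input value, one column per token (OOV first), like output_mode='count' on a (batch, 1) input."""
    idx = lookup_indices(values, vocabulary)
    out = np.zeros((len(values), len(vocabulary) + 1), dtype=np.float32)
    out[np.arange(len(values)), idx] += 1.0
    return out

vocab = ['Healthy', 'Minor Injury', 'Serious Injury']
batch = ['Healthy', 'Serious Injury', 'Unknown']
print(lookup_indices(batch, vocab))  # [1 3 0]
print(count_encode(batch, vocab))
```

With a single value per row, the count encoding and a one-hot encoding of the indices coincide, which is why both settings add one column per token.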

We create a separate model for preprocessing and a separate model for prediction.

Preprocessing Model

Make a list of the desired tf.keras.layers transformation for each dataset column.

```python
# Get feature column names from the DataFrame (every column except 'target')
feature_col_names = df.columns[0:-1]
feature_col_names

# Help users to select a specific feature processing per DataFrame column
default_selection_list = {}
for i in feature_col_names:
    if df[i].dtype != object:
        print(f'feature {i} is numerical, recommendations are: num_Normalization, num_Discretization, num_IntegerLookup')
        default_selection_list[i] = 'num_Normalization'
    else:
        print(f'feature {i} is object/string, recommendations are: cat_StringLookup')
        print(df[i].value_counts().index.to_numpy())
        default_selection_list[i] = 'cat_StringLookup'
    print('\n')

# Recommended selection per column
default_selection_list

# Let's change one to one-hot just to test the tf.keras.layers!
default_selection_list['Health'] = 'cat_Onehot'
```
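The default recommendation for numeric columns is num_Normalization. As a rough NumPy sketch of what a Normalization layer with axis=None does once it has been adapted: it learns one scalar mean and variance from the data and maps x to (x - mean) / sqrt(variance). The Fee values are made up, the helper names are hypothetical, and the epsilon guard against a zero variance is an assumption added for illustration.

```python
import numpy as np

def adapt_scalar_normalization(data):
    """Learn a single mean and variance over all elements (like axis=None)."""
    data = np.asarray(data, dtype=np.float32)
    return data.mean(), data.var()  # population variance (ddof=0)

def normalize(x, mean, variance, eps=1e-7):
    """Standardize x with the learned statistics; eps avoids dividing by zero."""
    x = np.asarray(x, dtype=np.float32)
    return (x - mean) / np.maximum(np.sqrt(variance), eps)

fees = [0, 50, 100, 150]  # hypothetical Fee values
mean, var = adapt_scalar_normalization(fees)
print(mean, var)          # 75.0 3125.0
z = normalize(fees, mean, var)
print(z.mean(), z.std())  # approximately 0 and 1
```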

```python
# MULTIPLE inputs!
batch_size = 5
column_schema = list(df.columns[0:-1])
out = [str(i) for i in df.dtypes[0:-1]]
# Map pandas 'object' columns to tf 'string'; numeric dtypes (e.g. 'int64') are kept as-is
column_schema_dtype = ['string' if i.find('object') != -1 else i for i in out]

# ----------------------------
# Preprocessing model
# ----------------------------

# Create one tf.keras.Input per column
inputs = generate_inputs(column_schema, column_schema_dtype)

# Perform transformations per input using the [default_selection_list]
layerlist = []
for ind, val in enumerate(list(default_selection_list.keys())):
    selected_transformation = default_selection_list[val]
    input_num = inputs[ind]

    if selected_transformation == 'cat_StringLookup':
        unique_str = np.unique(df[val])
        layer = string_2_factorization(unique_str, input_num)
        # outputs a float32 of shape (-1, 2 or more)
    elif selected_transformation == 'cat_Onehot':
        unique_str = np.unique(df[val])
        layer = string_2_onehot_layer(unique_str, input_num)
        # outputs a float32 of shape (-1, 2 or more)
    elif selected_transformation == 'num_Normalization':
        norm_layer = tf.keras.layers.Normalization(axis=None)
        # Learn the mean and variance from this column; without adapt() the layer is not fit to the data
        norm_layer.adapt(df[[val]].to_numpy().astype('float32'))
        layer = norm_layer(input_num)
        # output of Normalization layer is a float32 of shape (-1, 1)
    else:
        raise ValueError(f'Unsupported transformation: {selected_transformation}')
    layerlist.append(layer)

# ----------------------------
# Arrange each layer to have a single column, such that the layers can be stacked into a large X matrix
# ----------------------------
# Slice the layers into single columns
# https://www.tensorflow.org/guide/tensor_slicing
tot = []
for a in layerlist:
    r, c = a.shape
    for i in range(c):
        tot.append(a[:, i:i+1])

# Layers must have the same dtype (float32 here) and the same shape (-1, 1) to be in tf.stack
x = tf.stack(tot, axis=1)  # shape (-1, num_of_final_cols, 1)

# Drops the last dimension so that x is (batch_size, num_of_final_cols); this value varies depending on the preprocessing
outputs = tf.keras.layers.Flatten()(x)

preprocessing_model = tf.keras.Model(inputs, outputs)
preprocessing_model.summary()
```

The tf.keras.layers created many columns for the 'X matrix' because StringLookup is set to output_mode='count', which outputs one column per vocabulary token (including the out-of-vocabulary token), much like a one-hot transformation. Similarly, CategoryEncoding uses output_mode="one_hot" for the Health column. See the SUBFUNCTIONS below for the tf.keras.layers settings.
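The slice-stack-flatten step of the preprocessing model can be checked with NumPy on hypothetical layer outputs: slicing every (batch, c) output into (batch, 1) columns, stacking them on axis=1 and flattening gives the same matrix as concatenating the outputs along axis 1, and its width is the sum of the per-layer widths. The widths used here (a 5-column count encoding, a 4-column one-hot encoding and a 1-column normalized feature) are illustrative, and `slice_stack_flatten` is a hypothetical helper.

```python
import numpy as np

def slice_stack_flatten(layer_outputs):
    """Mimic the preprocessing model: slice into single columns, stack on axis=1, then flatten."""
    columns = [a[:, i:i + 1] for a in layer_outputs for i in range(a.shape[1])]
    stacked = np.stack(columns, axis=1)            # shape (batch, total_cols, 1)
    return stacked.reshape(stacked.shape[0], -1)   # shape (batch, total_cols)

rng = np.random.default_rng(0)
batch = 5
outputs = [rng.random((batch, 5)), rng.random((batch, 4)), rng.random((batch, 1))]

x = slice_stack_flatten(outputs)
print(x.shape)  # (5, 10)
print(np.array_equal(x, np.concatenate(outputs, axis=1)))  # True
```

This suggests that tf.keras.layers.Concatenate(axis=1) applied to the layer list would likely build the same X matrix in fewer lines.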

We can even run the preprocessing model with the compile and fit functions to check that it works; its outputs are the transformed columns.

```python
# Run preprocessing_model: the outputs are the transformed columns
preprocessing_model.compile(optimizer='sgd', loss='mse', metrics=['mse'])
preprocessing_model.fit(ds_transposed_dict, epochs=2)
```

Training Model

```python
# Number of output columns of the preprocessing model
num_of_cols = preprocessing_model.output_shape[-1]
print('num_of_cols: ', num_of_cols)

training_model = training_model_arch_pandas_numpy(num_of_cols, batch_size)
```

End-to-end Model 🐱🐈

```python
inputs = generate_inputs(column_schema, column_schema_dtype)
outputs = training_model(preprocessing_model(inputs))
inference_model = tf.keras.Model(inputs, outputs)

# Run the end-to-end model
inference_model.compile(optimizer='adam',
                        loss='sparse_categorical_crossentropy',
                        metrics=["accuracy"])
inference_model.fit(ds_transposed_dict, epochs=10)
```
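The end-to-end model pairs a two-unit softmax output with sparse_categorical_crossentropy, so the labels can stay as the integers 0 and 1 instead of one-hot vectors. A NumPy sketch of that loss on hypothetical predictions: for each row it takes minus the log of the probability assigned to the true class, then averages over the batch. The clipping constant is an assumption for numerical safety, and the function is a stand-in, not the Keras implementation.

```python
import numpy as np

def sparse_categorical_crossentropy(y_true, y_prob, eps=1e-7):
    """Mean of -log(probability of the true class); y_true holds integer class ids."""
    y_true = np.asarray(y_true).reshape(-1)           # (batch, 1) -> (batch,)
    y_prob = np.clip(np.asarray(y_prob, dtype=np.float64), eps, 1.0)
    picked = y_prob[np.arange(len(y_true)), y_true]   # probability of the true class per row
    return float(np.mean(-np.log(picked)))

# Hypothetical softmax outputs for 2 pets: column 0 = quickly adopted, column 1 = not adopted
probs = [[0.9, 0.1], [0.2, 0.8]]
labels = [[0], [1]]
loss = sparse_categorical_crossentropy(labels, probs)
print(round(loss, 4))  # 0.1643
```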

Predictions!

```python
sample = {
    'Type': 'Cat',
    'Age': 3,
    'Breed1': 'Tabby',
    'Gender': 'Male',
    'Color1': 'Black',
    'Color2': 'White',
    'MaturitySize': 'Small',
    'FurLength': 'Short',
    'Vaccinated': 'No',
    'Sterilized': 'No',
    'Health': 'Healthy',
    'Fee': 100,
    'PhotoAmt': 2,
}

# Wrap each value in a batch of one
input_dict = {name: tf.convert_to_tensor([value]) for name, value in sample.items()}

# Give the input to the model
predictions = inference_model.predict(input_dict)
print('predictions: ', predictions)

# Class 0 = quickly adopted, class 1 = not adopted (see how 'target' was built)
index = np.argmax(predictions[0])
choices = ["quickly adopted", "not adopted"]
result = choices[index]
print(f'This pet will be {result}')

# The probability of getting adopted is the probability of class 0
print("This particular pet had a %.1f percent probability "
      "of getting adopted." % (100 * predictions[0][0]))
```

SUBFUNCTIONS

The helper functions used above are defined below; in a notebook, run these cells before the code that calls them.

```python
def transpose_ds_per_batch(ds):
    syntax = 0
    Xory = 0  # X=0, y=1

    # Get column_schema and batch_size from the first batch of ds
    first_batch = list(ds.take(1).as_numpy_iterator())[syntax]
    column_schema = list(first_batch[Xory].keys())
    batch_size = len(first_batch[Xory][column_schema[0]])

    # ------------------------------
    # Transpose ds per batch
    # ------------------------------
    # Reshape each column from (batch_size,) to (batch_size, 1) so it fits the model inputs
    ds_Y = ds.map(lambda x, y: tf.reshape(y, [batch_size, 1]))

    ds_X_dict = {}
    for i, val in enumerate(column_schema):
        col_ds = ds.map(lambda x, y: tf.reshape(x[val], [batch_size, 1])).cache()

        # Making a Dataset from a dictionary
        ds_X_dict[val] = col_ds

        # OR
        # Making a Dataset from array columns
        if i == 0:
            ds_X = col_ds
        else:
            ds_X = tf.data.Dataset.zip((ds_X, col_ds))

    ds_transposed_dict = tf.data.Dataset.zip((ds_X_dict, ds_Y))
    ds_transposed_array = tf.data.Dataset.zip((ds_X, ds_Y))

    return ds_transposed_dict, ds_transposed_array
```

```python
def string_2_factorization(*args):
    # String data --> factorization
    # This is factorization: converts size strings to indices; e.g. ['small'] -> [1]
    # https://www.tensorflow.org/api_docs/python/tf/keras/layers/StringLookup

    # Maximum size of the vocabulary for this layer (includes the OOV token)
    max_tokens = 5
    vocabulary = args[0]  # unique_str

    if len(args) > 1:
        input_col = args[1]
        # Creating a lookup layer with a known vocabulary
        # layer_factorization = tf.keras.layers.StringLookup(max_tokens=max_tokens, vocabulary=vocabulary)(input_col)
        # layer_factorization = tf.keras.layers.StringLookup(max_tokens=max_tokens, vocabulary=vocabulary[0:max_tokens-1])(input_col)
        # output of StringLookup layer is a int64

        # Token count output: keeps the first max_tokens-1 values; all others map to the OOV token
        layer_factorization = tf.keras.layers.StringLookup(max_tokens=max_tokens, vocabulary=vocabulary[0:max_tokens-1], output_mode='count')(input_col)
        # output of StringLookup layer is a float32
    else:
        # Truncate the vocabulary here too, so it fits within max_tokens
        layer_factorization = tf.keras.layers.StringLookup(max_tokens=max_tokens, vocabulary=vocabulary[0:max_tokens-1])

    return layer_factorization
```
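Note that string_2_factorization keeps only vocabulary[0:max_tokens-1], i.e. the first four values of the sorted np.unique output, plus one OOV token. A small sketch with made-up breed names shows which values keep their own column and which collapse into the OOV column:

```python
import numpy as np

max_tokens = 5  # same setting as string_2_factorization

# Hypothetical column values; np.unique returns them sorted
breeds = np.array(['Tabby', 'Mixed Breed', 'Domestic Short Hair', 'Siamese', 'Beagle', 'Tabby'])
vocabulary = np.unique(breeds)
kept_list = vocabulary[0:max_tokens - 1].tolist()

print(kept_list)           # ['Beagle', 'Domestic Short Hair', 'Mixed Breed', 'Siamese']
print(len(kept_list) + 1)  # 5 output columns: 4 kept values + 1 OOV
oov = [b for b in vocabulary.tolist() if b not in kept_list]
print(oov)                 # ['Tabby']
```

For a column with many distinct values, such as Breed1, many values therefore share the OOV column; raising max_tokens would keep more of them, at the cost of a wider X matrix.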

```python
def string_2_onehot_layer(*args):
    # String data --> factorization --> one-hot
    # This is factorization: converts size strings to indices; e.g. ['small'] -> [1]

    # Maximum size of the vocabulary for this layer
    max_tokens = 5

    # vocabulary needs to be set or use adapt, it is the unique values in the text:
    # an array of strings or a string path to a text file
    vocabulary = args[0]  # unique_str
    print('vocabulary: ', vocabulary)

    if len(args) > 1:
        input_col = args[1]
        # Turns string categorical values into an encoded representation that can be read by an Embedding or Dense layer
        layer_strlookup = tf.keras.layers.StringLookup(max_tokens=max_tokens, vocabulary=vocabulary[0:max_tokens-1])
        layer_factorization = layer_strlookup(input_col)
        # output of StringLookup layer is a int64

        # CategoryEncoding turns integer categorical features into one-hot, multi-hot, or count dense representations
        # Apply one-hot encoding to the indices; vocabulary_size() includes the OOV token
        num_tokens = layer_strlookup.vocabulary_size()
        print('num_tokens: ', num_tokens)
        layer_onehot = tf.keras.layers.CategoryEncoding(num_tokens=num_tokens, output_mode="one_hot")(layer_factorization)
    else:
        # Need to call string_2_factorization in addition to this function;
        # match its truncated vocabulary plus one OOV token
        num_tokens = min(len(vocabulary), max_tokens - 1) + 1
        print('num_tokens: ', num_tokens)
        layer_onehot = tf.keras.layers.CategoryEncoding(num_tokens=num_tokens, output_mode="one_hot")

    return layer_onehot
```

```python
def stringORfloat_2_int_layer(*args):
    # Turns integer categorical values into an encoded representation
    vocabulary = args[0]  # unique values
    max_tokens = len(vocabulary)+1  # want each unique value to be a token (+1 for OOV)

    if len(args) > 1:
        input_col = args[1]
        # Creating a lookup layer with a known vocabulary
        layer_int64 = tf.keras.layers.IntegerLookup(max_tokens=max_tokens, vocabulary=vocabulary)(input_col)
        # Creating a lookup layer with an adapted vocabulary
        # layer_int64 = tf.keras.layers.IntegerLookup()(input_col)
    else:
        layer_int64 = tf.keras.layers.IntegerLookup(max_tokens=max_tokens, vocabulary=vocabulary)

    return layer_int64
```

```python
def training_model_arch_pandas_numpy(num_of_cols, batch_size):
    # batch_size is not needed to build the layers; Keras infers the batch dimension
    inputs = tf.keras.Input(shape=(num_of_cols,))
    x = tf.keras.layers.Flatten()(inputs)
    x = tf.keras.layers.Dense(32, activation='relu', name='h1')(x)
    x = tf.keras.layers.Dropout(0.5)(x)
    outputs = tf.keras.layers.Dense(2, activation='softmax', name='outputs')(x)

    training_model = tf.keras.Model(inputs, outputs)
    return training_model
```

```python
def generate_inputs(column_schema, column_schema_dtype):
    num_of_cols_per_input_col = 1

    # Create one tf.keras.Input per column, named after the column so that
    # dictionary inputs are matched to the right input by name
    inputs = [
        tf.keras.Input(shape=(num_of_cols_per_input_col,), dtype=column_schema_dtype[ind], name=val)
        for ind, val in enumerate(column_schema)
    ]
    return inputs
```

I hope this blog post helps someone. I found tf.keras.layers really difficult to use with this dataset: it takes practice to know how to feed each dictionary data column into the model, and then how to restack the transformed layers.

- Inspiration for working with tf.keras.layers in the model: https://github.com/GoogleCloudPlatform/training-data-analyst/blob/master/courses/machine_learning/deepdive2/introduction_to_tensorflow/labs/preprocessing_layers.ipynb

- https://www.coursera.org/learn/intro-tensorflow

- TensorFlow Functional API: https://keras.io/guides/functional_api/

Happy Practicing! 👋
