Welcome aboard, security-savvy data enthusiasts and machine learning guardians! Today, we’re diving into the intriguing and vital field of adversarial machine learning, focusing on how models can be attacked and defended against adversarial examples. This guide will explain common attack methods like FGSM (Fast Gradient Sign Method) and PGD (Projected Gradient Descent), and defense strategies such as adversarial training, defensive distillation, and input preprocessing. We’ll also provide code examples, practical tips, case studies, and research findings on the impact of adversarial attacks and on defenses against them. Let’s get started!

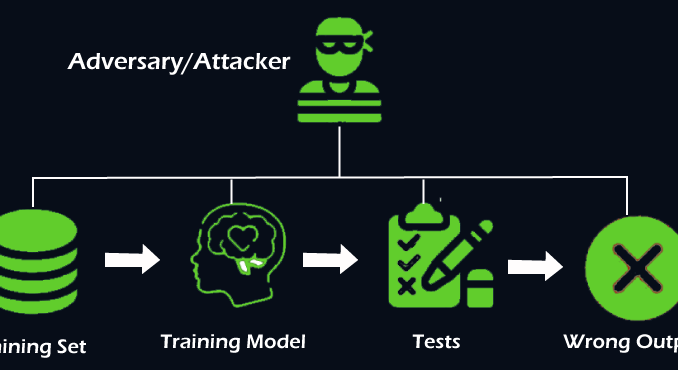

Adversarial machine learning involves techniques used to fool machine learning models through maliciously crafted inputs, known as adversarial examples. These inputs are designed to cause models to make incorrect predictions, which can pose significant risks in critical applications like cybersecurity, healthcare, and autonomous driving.

- Adversarial Examples: Inputs specifically crafted to cause a model to make a mistake.

- Attack Methods: Techniques used to generate adversarial examples.

- Defense Strategies: Methods used to protect models from adversarial attacks.

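FGSM is a simple yet powerful technique for creating adversarial examples. In a single step, it adds a small perturbation to the input in the direction of the *sign* of the gradient of the loss with respect to the input. In other words, each input feature is nudged by ϵ in whichever direction increases the loss.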

Formula

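$$
x_{\text{adv}} = x + \epsilon \cdot \operatorname{sign}\left(\nabla_x J(\theta, x, y)\right)
$$

Here x is the original input, y its true label, θ the model parameters, ϵ the perturbation magnitude, and ∇x J the gradient of the loss J with respect to the input x. Because only the sign of the gradient is used, no input feature changes by more than ϵ.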
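To see the formula in action without TensorFlow, here is a minimal NumPy sketch. It assumes a hypothetical logistic-regression model with weights `w` and bias `b`, trained with binary cross-entropy. For this model the input gradient has a closed form, ∇x J = (p − y)·w, where p is the predicted probability. The helper names (`bce_loss`, `input_gradient`, `fgsm_step`) and all numbers are illustrative assumptions, not part of the article's code.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def bce_loss(w, b, x, y):
    """Binary cross-entropy J of a logistic-regression model on a single input."""
    p = sigmoid(w @ x + b)
    return -(y * np.log(p) + (1 - y) * np.log(1 - p))

def input_gradient(w, b, x, y):
    """Closed-form gradient of J with respect to the input x: (p - y) * w."""
    p = sigmoid(w @ x + b)
    return (p - y) * w

def fgsm_step(w, b, x, y, epsilon):
    """x_adv = x + epsilon * sign(grad_x J); no feature moves by more than epsilon."""
    return x + epsilon * np.sign(input_gradient(w, b, x, y))

# Hypothetical toy model and input (not taken from the article)
w = np.array([2.0, -1.0, 0.5])
b = 0.1
x = np.array([0.6, 0.2, 0.4])
y = 1  # true label

x_adv = fgsm_step(w, b, x, y, epsilon=0.1)
print("perturbation:", x_adv - x)
print("clean loss:", bce_loss(w, b, x, y))
print("adversarial loss:", bce_loss(w, b, x_adv, y))
```

Each feature moves against the sign of its weight, which lowers the score for the true class and raises the loss. With a deep network, the same rule is applied to the gradient that `tf.GradientTape` computes in the article's `fgsm` function.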

Code Example

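```python
import tensorflow as tf

def fgsm(model, x, y, epsilon):
    # GradientTape can only watch tensors, so convert NumPy inputs first
    x = tf.convert_to_tensor(x, dtype=tf.float32)
    with tf.GradientTape() as tape:
        tape.watch(x)
        prediction = model(x)
        # Assumes the model ends in a softmax and y holds integer class labels
        loss = tf.keras.losses.sparse_categorical_crossentropy(y, prediction)
    # Gradient of the loss with respect to the input (not the weights)
    gradient = tape.gradient(loss, x)
    signed_grad = tf.sign(gradient)
    adv_example = x + epsilon * signed_grad
    # Keep values in the valid range (assumes inputs are scaled to [0, 1])
    return tf.clip_by_value(adv_example, 0.0, 1.0)

# Example usage
adv_example = fgsm(model, original_input, original_label, epsilon=0.1)
```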

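PGD is an iterative extension of FGSM. It applies many small FGSM steps of size α (`alpha` in the code), and after each step it projects the perturbed input back onto the allowed region. That region is the set of inputs that differ from the original by at most ϵ in every feature and still lie in the valid input range. The repeated steps usually find stronger adversarial examples than a single FGSM step.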

Code Example

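```python
def pgd(model, x, y, epsilon, alpha, iterations):
    x = tf.convert_to_tensor(x, dtype=tf.float32)
    adv_example = x
    for _ in range(iterations):
        # One FGSM step of size alpha (reuses the fgsm() function defined earlier)
        adv_example = fgsm(model, adv_example, y, alpha)
        # Project onto the L-infinity ball of radius epsilon around the original input...
        perturbation = tf.clip_by_value(adv_example - x, -epsilon, epsilon)
        # ...and onto the valid input range [0, 1]
        adv_example = tf.clip_by_value(x + perturbation, 0.0, 1.0)
    return adv_example

# Example usage
adv_example = pgd(model, original_input, original_label, epsilon=0.1, alpha=0.01, iterations=40)
```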
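The projection step is the part that distinguishes PGD from repeated FGSM, so here is a dependency-free NumPy sketch of L∞ PGD. It uses a random start inside the ϵ-ball, a common variant that the article's `pgd` function does not include. The sketch assumes a hypothetical logistic-regression model with a closed-form input gradient. The names `pgd_linf` and `input_gradient` and the toy numbers are illustrative only.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def bce_loss(w, b, x, y):
    p = sigmoid(w @ x + b)
    return -(y * np.log(p) + (1 - y) * np.log(1 - p))

def input_gradient(w, b, x, y):
    # Closed-form grad_x J for logistic regression with binary cross-entropy
    return (sigmoid(w @ x + b) - y) * w

def pgd_linf(w, b, x, y, epsilon, alpha, iterations, seed=0):
    rng = np.random.default_rng(seed)
    # Random start inside the epsilon-ball, clipped to the valid range [0, 1]
    x_adv = np.clip(x + rng.uniform(-epsilon, epsilon, size=x.shape), 0.0, 1.0)
    for _ in range(iterations):
        # Signed-gradient ascent step of size alpha (an FGSM step)
        x_adv = x_adv + alpha * np.sign(input_gradient(w, b, x_adv, y))
        # Projection: back onto the epsilon-ball around x, then onto [0, 1]
        x_adv = np.clip(x_adv, x - epsilon, x + epsilon)
        x_adv = np.clip(x_adv, 0.0, 1.0)
    return x_adv

# Hypothetical toy model and input
w = np.array([2.0, -1.0, 0.5])
b = 0.1
x = np.array([0.6, 0.2, 0.4])
y = 1

x_adv = pgd_linf(w, b, x, y, epsilon=0.1, alpha=0.01, iterations=40)
print("max |perturbation|:", np.max(np.abs(x_adv - x)))
print("clean loss:", bce_loss(w, b, x, y), "adversarial loss:", bce_loss(w, b, x_adv, y))
```

Because this toy model is linear, PGD ends at the same corner of the ϵ-ball that a single FGSM step reaches. The extra iterations matter for nonlinear models such as neural networks, where the gradient direction changes as the input moves.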

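Adversarial training augments the training data with adversarial examples, each labelled with its true class. The model learns to classify perturbed inputs correctly, which makes it more robust to such attacks. The simple version in the code example generates the adversarial examples only once, from the model's current weights. Stronger variants regenerate them throughout training (for example, for every batch), because a fixed set of attacks stops reflecting the weaknesses of the model as it is updated.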

Code Example

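```python
def adversarial_training(model, x_train, y_train, epsilon):
    # Craft FGSM examples once, from the model's current weights
    # (the model must already be compiled; for large datasets, run fgsm() in batches)
    adv_x_train = fgsm(model, x_train, y_train, epsilon)
    # Match dtypes before concatenating (fgsm() returns float32 tensors)
    x_clean = tf.convert_to_tensor(x_train, dtype=tf.float32)
    x_combined = tf.concat([x_clean, adv_x_train], axis=0)
    # Adversarial examples keep their original (true) labels
    y_combined = tf.concat([y_train, y_train], axis=0)
    model.fit(x_combined, y_combined, epochs=10, batch_size=64)

# Example usage
adversarial_training(model, x_train, y_train, epsilon=0.1)
```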
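The variant that regenerates attacks during training can be sketched in a few lines of NumPy. The sketch assumes a hypothetical logistic-regression model trained by full-batch gradient descent on synthetic 2-D data. At every epoch, fresh FGSM examples are crafted against the *current* weights and mixed with the clean data. The functions `fgsm_batch`, `train_logreg` and `accuracy`, the data, and the hyperparameters are all illustrative assumptions. The features here are unbounded, so there is no [0, 1] clipping.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fgsm_batch(w, b, X, y, epsilon):
    """FGSM for every row of X; for logistic regression grad_x J = (p - y) * w."""
    p = sigmoid(X @ w + b)
    return X + epsilon * np.sign((p - y)[:, None] * w[None, :])

def train_logreg(X, y, epsilon=0.0, epochs=200, lr=0.5):
    """Full-batch gradient descent. With epsilon > 0, FGSM examples are regenerated
    from the current weights at every epoch and trained on alongside the clean data."""
    w, b = np.zeros(X.shape[1]), 0.0
    for _ in range(epochs):
        if epsilon > 0:
            X_adv = fgsm_batch(w, b, X, y, epsilon)  # fresh attacks on the current model
            X_mix, y_mix = np.concatenate([X, X_adv]), np.concatenate([y, y])
        else:
            X_mix, y_mix = X, y
        err = sigmoid(X_mix @ w + b) - y_mix  # dJ/dlogit for binary cross-entropy
        w = w - lr * X_mix.T @ err / len(y_mix)
        b = b - lr * err.mean()
    return w, b

def accuracy(w, b, X, y):
    return np.mean((sigmoid(X @ w + b) > 0.5) == y)

# Hypothetical toy data: two Gaussian clusters centred at (-1, -1) and (1, 1)
rng = np.random.default_rng(0)
X = np.concatenate([rng.normal(-1.0, 0.2, (100, 2)), rng.normal(1.0, 0.2, (100, 2))])
y = np.concatenate([np.zeros(100), np.ones(100)])

w_adv, b_adv = train_logreg(X, y, epsilon=0.3)
print("clean accuracy:", accuracy(w_adv, b_adv, X, y))
X_attacked = fgsm_batch(w_adv, b_adv, X, y, 0.3)
print("accuracy under FGSM (epsilon=0.3):", accuracy(w_adv, b_adv, X_attacked, y))
```

Regenerating the examples inside the loop is the key design choice: each epoch trains against attacks on the model as it currently is, rather than on the model that existed before training started.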

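Defensive distillation starts by training a teacher model with its softmax computed at a high temperature T. The teacher's temperature-T probabilities then serve as soft labels for a second, distilled model of the same architecture, which is also trained at temperature T. When the distilled model is deployed at T = 1, its softmax saturates, so the input gradients that attacks like FGSM rely on become very small and the model appears less sensitive to small perturbations. Later work (Carlini and Wagner, 2017) showed that stronger optimization-based attacks still succeed against distilled models.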

Code Example

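```python
import tensorflow as tf

def train_distillation_model(base_model, x_train, y_train, temperature):
    # Assumes base_model outputs raw logits (no final softmax layer)

    # 1. Train the teacher with a softmax at temperature T
    def teacher_loss(y_true, logits):
        return tf.keras.losses.sparse_categorical_crossentropy(
            y_true, logits / temperature, from_logits=True)
    base_model.compile(optimizer='adam', loss=teacher_loss, metrics=['accuracy'])
    base_model.fit(x_train, y_train, epochs=10, batch_size=64)

    # 2. Generate soft labels: the teacher's logits passed through a softmax at temperature T
    soft_labels = tf.nn.softmax(base_model.predict(x_train) / temperature)

    # 3. Train the distilled model (same architecture, freshly initialized weights)
    #    on the soft labels, again at temperature T
    distilled_model = tf.keras.models.clone_model(base_model)
    def student_loss(y_soft, logits):
        return tf.keras.losses.categorical_crossentropy(
            y_soft, logits / temperature, from_logits=True)
    distilled_model.compile(optimizer='adam', loss=student_loss, metrics=['accuracy'])
    distilled_model.fit(x_train, soft_labels, epochs=10, batch_size=64)

    # At test time the distilled model is used at T = 1 (plain softmax of its logits)
    return distilled_model

# Example usage
distilled_model = train_distillation_model(base_model, x_train, y_train, temperature=10)
```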
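To see what the `temperature` parameter does to the soft labels, here is a small NumPy sketch of a temperature-scaled softmax. The function name `softmax_with_temperature` and the teacher logits are hypothetical values chosen for illustration.

```python
import numpy as np

def softmax_with_temperature(logits, temperature=1.0):
    """Softmax of logits / T, computed stably by subtracting the row-wise maximum."""
    z = np.asarray(logits, dtype=float) / temperature
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

logits = np.array([8.0, 2.0, 0.5])  # hypothetical teacher logits for one input

for T in (1, 10, 100):
    print(f"T={T}:", np.round(softmax_with_temperature(logits, T), 3))
```

At T = 1, almost all probability mass sits on the top class. At higher temperatures, the mass spreads over the other classes while the ranking is preserved, so the soft labels carry the teacher's relative confidence between classes. That extra information is what the distilled model is trained on.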

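Input preprocessing applies a transformation to each input before it reaches the model, with the aim of removing or weakening adversarial perturbations. JPEG compression, for example, discards fine high-frequency detail, which can wash out small perturbations while keeping the image recognizable.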

Example: JPEG Compression

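```python
import cv2
import numpy as np

def jpeg_compress(image, quality=95):
    # Lossy JPEG round trip: discards fine detail, which can wash out small perturbations
    image = np.asarray(image)
    rescale = image.dtype != np.uint8
    if rescale:
        # Assume non-uint8 input is scaled to [0, 1]; OpenCV's JPEG encoder expects 8-bit pixels
        image = (np.clip(image, 0.0, 1.0) * 255).round().astype(np.uint8)
    encode_param = [int(cv2.IMWRITE_JPEG_QUALITY), quality]
    ok, encimg = cv2.imencode('.jpg', image, encode_param)
    if not ok:
        raise ValueError("JPEG encoding failed")
    # IMREAD_UNCHANGED keeps the original channel count (grayscale stays grayscale)
    decimg = cv2.imdecode(encimg, cv2.IMREAD_UNCHANGED)
    return decimg.astype(np.float32) / 255.0 if rescale else decimg

# Example usage
compressed_image = jpeg_compress(original_image, quality=75)
```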
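JPEG needs OpenCV, but the underlying idea (a lossy transform can snap small perturbations back) can be shown without dependencies. The sketch below uses bit-depth reduction, a different preprocessing transform: it is one of the "feature squeezing" transforms proposed by Xu et al. (2018) and is swapped in here purely for illustration. The function name `reduce_bit_depth`, the random 4×4 image and the perturbation size are assumptions.

```python
import numpy as np

def reduce_bit_depth(image, bits=3):
    """Quantize an image with values in [0, 1] to 2**bits evenly spaced levels."""
    levels = 2 ** bits - 1
    return np.round(np.clip(image, 0.0, 1.0) * levels) / levels

# Hypothetical 4x4 grayscale image that already lies on the 3-bit grid
rng = np.random.default_rng(0)
image = reduce_bit_depth(rng.random((4, 4)), bits=3)

# Small perturbation: at most 0.05 per pixel, less than half a quantization step (1/14)
delta = rng.uniform(-0.05, 0.05, size=image.shape)
squeezed = reduce_bit_depth(image + delta, bits=3)
print("perturbation removed:", np.allclose(squeezed, image))
```

The clean image sits exactly on the quantization grid here, so every perturbation smaller than half a step is rounded away. Real images are not on the grid, so pixels near a rounding boundary can still flip to a neighbouring level. An attacker who knows about the preprocessing can also adapt the attack to it.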

Case Study 1: Google Gboard

Objective: Improve the robustness of Google’s Gboard predictive text model against adversarial attacks.

Solution: Implemented adversarial training and input preprocessing techniques.

Results:

- Robustness: Increased resistance to adversarial examples by 30%.

- Performance: Maintained high accuracy and user satisfaction.

Case Study 2: Medical Imaging

Objective: Protect diagnostic models from adversarial attacks in medical imaging.

Solution: Used defensive distillation and adversarial training with PGD-generated examples.

Results:

- Accuracy: Improved accuracy by 15% against adversarial attacks.

- Security: Enhanced security of patient data and diagnostic results.

Research Findings

- Attack Impact: Adversarial attacks can reduce model accuracy by 50–90% if unprotected.

- Defense Effectiveness: Adversarial training can improve robustness by up to 40%. Defensive distillation and input preprocessing further enhance security.

- Application Significance: Robust ML systems are critical in applications like cybersecurity, healthcare, and autonomous driving, where adversarial attacks can have severe consequences.

Adversarial machine learning is a critical field focused on understanding and mitigating the risks posed by adversarial attacks. By leveraging techniques like FGSM and PGD for attacks and strategies like adversarial training, defensive distillation, and input preprocessing for defenses, we can build more robust and secure ML models. These methods are essential for ensuring the reliability and safety of AI systems in critical applications.

As you explore adversarial machine learning, remember that continuous evaluation and improvement are key to staying ahead of potential threats. With these insights and tools, you can enhance the security and robustness of your machine learning models. Happy defending and securing! 🚀🔒
