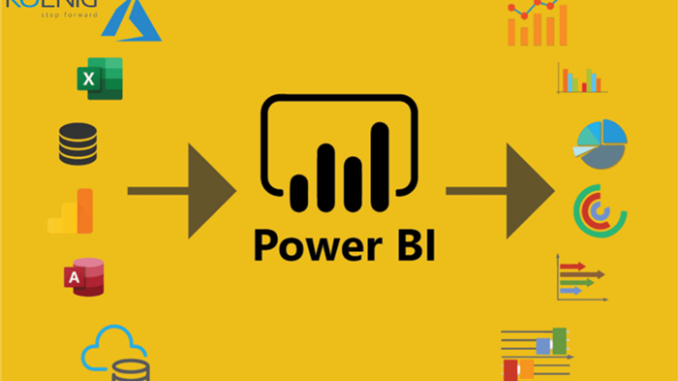

Unleashing the Power of Data: An Introduction to Power BI

In today’s digital world, data is king. Whether it’s sales figures, customer behavior, or operational metrics, businesses are drowning in a sea of information. But […]