In the last article, we learned about residual connections and why they matter in neural networks: they help prevent vanishing gradients. In this article, we will learn how to build a residual module and how to implement a full convolutional neural network with residual connections, and we will compare its performance with that of a sequential convolutional neural network. Keep reading!

The first step in this article is to build a residual module. If you haven’t read my past articles on convolutional networks, I strongly recommend that you do so and get familiar with convolutional layers, batch normalization, and activation functions.

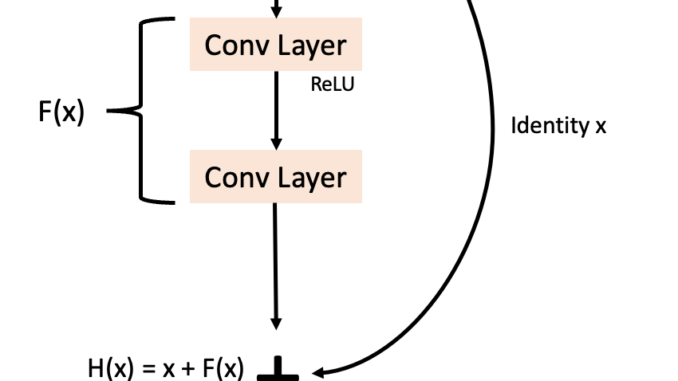

Now that you have it all, let’s start building a residual module. Remember the following image, where we have an input X, a first convolutional layer followed by an activation function, and a second convolutional layer without an activation function. In the next step, the input X and the output of the two convolutional layers are added together element by element, and the sum is passed through an activation function.
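Before bringing in a deep learning framework, it can help to see the data flow of the module in plain Python. The snippet below is only a minimal sketch, not the implementation used later in this series. It assumes a 1D input instead of an image, ReLU as the activation function, odd-length kernels with zero padding so the output keeps the input length, and no batch normalization. The names `relu`, `conv1d_same`, and `residual_module` are made up for this illustration.

```python
def relu(values):
    """Element-wise ReLU activation (assumed here as the activation function)."""
    return [max(0.0, v) for v in values]


def conv1d_same(signal, kernel):
    """1D convolution (cross-correlation, as in deep learning libraries).

    Assumes an odd-length kernel and pads with zeros so that the output
    has the same length as the input, which the skip connection requires.
    """
    pad = len(kernel) // 2
    padded = [0.0] * pad + list(signal) + [0.0] * pad
    return [
        sum(k * padded[i + j] for j, k in enumerate(kernel))
        for i in range(len(signal))
    ]


def residual_module(x, kernel1, kernel2):
    """Compute relu(x + conv2(relu(conv1(x))))."""
    out = relu(conv1d_same(x, kernel1))       # first convolution + activation
    out = conv1d_same(out, kernel2)           # second convolution, no activation
    summed = [a + b for a, b in zip(x, out)]  # skip connection: add the input X
    return relu(summed)                       # activation after the sum


if __name__ == "__main__":
    x = [1.0, -2.0, 3.0]
    zero = [0.0, 0.0, 0.0]
    # With all-zero kernels the convolution branch contributes nothing,
    # so only the skip connection carries the signal to the output.
    print(residual_module(x, zero, zero))
```

Notice that when both kernels are zero, the module simply returns the activated input. That is the intuition behind the skip connection: the input always has a direct path to the output, no matter what the convolutional layers have learned so far.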
