The backbone of this application is the set of agents and their interactions. Overall, we had two types of agents:

- User Agents: Agents attached to each user, primarily tasked with translating incoming messages into the user's preferred language.

- Aya Agents: Various agents associated with Aya, each with its own specific role.

User Agents

The UserAgent class defines an agent that is associated with every user in the chat room. Its main responsibilities are:

1. Translate incoming messages into the user’s preferred language

2. Invoke the graph when a user sends a message

3. Maintain a chat history that provides context for the translation task, enabling 'context-aware' translation
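The class below keeps a `chat_history` list, but the prompt that consumes it is not shown. As a rough illustration of how recent history could feed a context-aware translation request, here is a minimal plain-Python sketch. `Msg`, `build_translation_input`, and the `window` parameter are hypothetical names, and the prompt wording is an assumption, not the post's `USER_SYSTEM_PROMPT2`.

```python
from dataclasses import dataclass


@dataclass
class Msg:
    """Hypothetical stand-in for the post's chat message type (sender + content)."""
    sender: str
    content: str


def build_translation_input(history: list[Msg], new_text: str,
                            target_language: str, window: int = 5) -> str:
    """Build a translation request that includes the last `window` messages
    as context (hypothetical helper; the real prompt is not shown in the post)."""
    # Guard against window == 0: history[-0:] would return the whole list.
    recent = history[-window:] if window > 0 else []
    context = "\n".join(f"{m.sender}: {m.content}" for m in recent)
    return (
        f"Conversation so far:\n{context or '(none)'}\n\n"
        f"Translate the following message into {target_language}, "
        f"using the conversation above only as context:\n{new_text}"
    )
```

The `window > 0` guard matters because `history[-0:]` returns the entire list in Python rather than an empty one.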

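```python
from langchain_core.messages import AIMessage, BaseMessage, HumanMessage
from langchain_core.prompts import ChatPromptTemplate

# ChatMessage (which appears to be a project-specific wrapper that records the
# sender) and USER_SYSTEM_PROMPT2 are defined elsewhere and not shown here.


class UserAgent(object):
    def __init__(self, llm, userid, user_language):
        self.llm = llm
        self.userid = userid
        self.user_language = user_language
        # Messages seen by this user, kept as context for translation.
        self.chat_history = []
        prompt = ChatPromptTemplate.from_template(USER_SYSTEM_PROMPT2)
        # Translation chain: prompt template piped into the LLM.
        self.chain = prompt | llm

    def set_graph(self, graph):
        # The compiled graph shared by all agents in the chat room.
        self.graph = graph

    def send_text(self, text: str, debug=False):
        # Wrap the user's text with the sender id and run it through the graph.
        message = ChatMessage(message=HumanMessage(content=text), sender=self.userid)
        inputs = {"messages": [message]}
        output = self.graph.invoke(inputs, debug=debug)
        return output

    def display_chat_history(self, content_only=False):
        for i in self.chat_history:
            if content_only:
                print(f"{i.sender} : {i.content}")
            else:
                print(i)

    def invoke(self, message: BaseMessage) -> AIMessage:
        # Translate a single message into this user's preferred language.
        output = self.chain.invoke({'message': message.content, 'user_language': self.user_language})
        return output
```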

For the most part, the implementation of UserAgent is fairly standard LangChain/LangGraph code:

- Define a LangChain chain (a prompt template + LLM) that does the actual translation.

- Define a send_text function that invokes the graph whenever a user wants to send a new message.
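The fan-out that `send_text` triggers can be approximated without LangGraph. This sketch replaces the graph with a plain loop and the LLM chain with a `translate` callable. `SketchUserAgent`, `SketchRoom`, and `receive` are hypothetical names, and delivering the message to the sender's own agent as well is an assumption about how the graph's user nodes behave.

```python
from typing import Callable

# Hypothetical translator signature: (text, target_language) -> translated text.
Translator = Callable[[str, str], str]


class SketchUserAgent:
    """Stripped-down stand-in for UserAgent: a translate callable instead of an LLM chain."""

    def __init__(self, userid: str, user_language: str, translate: Translator):
        self.userid = userid
        self.user_language = user_language
        self.translate = translate
        self.chat_history: list[tuple[str, str]] = []  # (sender, translated text)

    def receive(self, sender: str, text: str) -> None:
        # Translate into this user's language and keep it for later context.
        self.chat_history.append((sender, self.translate(text, self.user_language)))


class SketchRoom:
    """Plain-Python stand-in for the graph: fans a message out to every user node."""

    def __init__(self, agents: list[SketchUserAgent]):
        self.agents = agents

    def send_text(self, sender: str, text: str) -> None:
        # Assumption: every agent, including the sender's, receives the message.
        for agent in self.agents:
            agent.receive(sender, text)
```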

The performance of this agent depends mostly on the translation quality of the LLM, since translation is its primary objective. LLM translation quality can vary significantly depending on the languages involved: certain low-resource languages are poorly represented in the training data of some models, which degrades translation quality for those languages.

Aya Agents

For Aya, we have a system of separate agents that all contribute to the overall assistant. Specifically, we have:

- AyaSupervisor: Control agent that supervises the operation of the other Aya agents.

- AyaQuery: Agent for running RAG-based question answering.

- AyaSummarizer: Agent for generating chat summaries and identifying tasks.

- AyaTranslator: Agent for translating messages into English.

```python
from langchain_core.messages import AIMessage
from langchain_core.prompts import ChatPromptTemplate

# The AYA_* prompt strings and the format_docs helper are defined elsewhere in
# the project; UserAgent is the class shown earlier.


class AyaTranslator(object):
    def __init__(self, llm) -> None:
        self.llm = llm
        prompt = ChatPromptTemplate.from_template(AYA_TRANSLATE_PROMPT)
        self.chain = prompt | llm

    def invoke(self, message: str) -> AIMessage:
        # Translate an incoming message into English.
        output = self.chain.invoke({'message': message})
        return output


class AyaQuery(object):
    def __init__(self, llm, store, retriever) -> None:
        self.llm = llm
        self.retriever = retriever
        self.store = store
        qa_prompt = ChatPromptTemplate.from_template(AYA_AGENT_PROMPT)
        self.chain = qa_prompt | llm

    def invoke(self, question: str) -> AIMessage:
        # Retrieve relevant documents, then answer using them as context (RAG).
        context = format_docs(self.retriever.invoke(question))
        rag_output = self.chain.invoke({'question': question, 'context': context})
        return rag_output


class AyaSupervisor(object):
    def __init__(self, llm):
        prompt = ChatPromptTemplate.from_template(AYA_SUPERVISOR_PROMPT)
        self.chain = prompt | llm

    def invoke(self, message: str) -> str:
        # Passing a plain string assumes the prompt has exactly one input variable.
        output = self.chain.invoke(message)
        return output.content


class AyaSummarizer(object):
    def __init__(self, llm):
        # Step 1: decide how many messages the request refers to.
        message_length_prompt = ChatPromptTemplate.from_template(AYA_SUMMARIZE_LENGTH_PROMPT)
        self.length_chain = message_length_prompt | llm
        # Step 2: summarize those messages and list any action items.
        prompt = ChatPromptTemplate.from_template(AYA_SUMMARIZER_PROMPT)
        self.chain = prompt | llm

    def invoke(self, message: str, agent: UserAgent) -> str:
        length = self.length_chain.invoke(message)
        try:
            length = int(length.content.strip())
        except ValueError:
            # Unparseable reply: fall back to summarizing the whole history.
            length = 0

        chat_history = agent.chat_history
        if length <= 0:
            messages_to_summarize = [m.content for m in chat_history]
        else:
            # Take the most recent `length` messages, assuming oldest-first order.
            messages_to_summarize = [m.content for m in chat_history[-length:]]

        messages_to_summarize = "\n ".join(messages_to_summarize)
        output = self.chain.invoke(messages_to_summarize)
        return output.content
```

Most of these agents share a similar structure: a LangChain chain built from a custom prompt and an LLM. The exceptions are AyaQuery, which adds a vector database retriever to implement RAG, and AyaSummarizer, which chains two separate LLM calls.
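The shared shape described above, a prompt template piped into an LLM with AyaQuery adding a retrieval step, can be sketched in plain Python. This is not LangChain: `string.Template` stands in for `ChatPromptTemplate`, the LLM is any callable from prompt text to reply text, and `PromptAgent`, `RagAgent`, and `retrieve` are hypothetical names.

```python
from string import Template
from typing import Callable

LLM = Callable[[str], str]  # hypothetical: prompt text in, reply text out


class PromptAgent:
    """Prompt template + LLM: the shape shared by most Aya agents."""

    def __init__(self, template: str, llm: LLM):
        self.template = Template(template)
        self.llm = llm

    def invoke(self, **variables: str) -> str:
        # Fill the template, then send the finished prompt to the LLM.
        return self.llm(self.template.substitute(**variables))


class RagAgent:
    """Adds a retrieval step in front of a PromptAgent, like AyaQuery."""

    def __init__(self, template: str, llm: LLM, retrieve: Callable[[str], list[str]]):
        self.agent = PromptAgent(template, llm)
        self.retrieve = retrieve

    def invoke(self, question: str) -> str:
        # Join retrieved documents into one context string for the prompt.
        context = "\n\n".join(self.retrieve(question))
        return self.agent.invoke(question=question, context=context)
```

Joining retrieved documents with blank lines is one common way to build the context string; the post's own `format_docs` helper is not shown.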

Design considerations

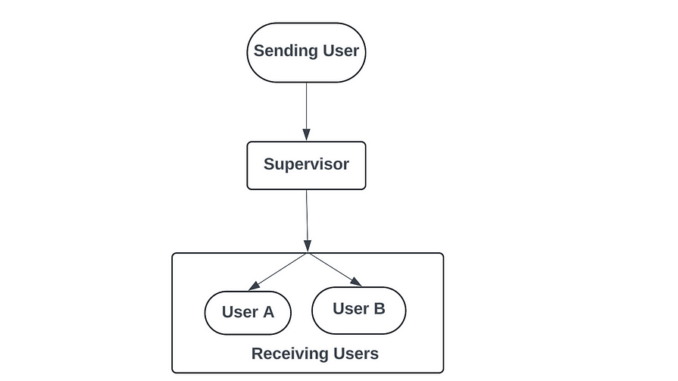

Role of AyaSupervisor Agent: In the design of the graph, we had a fixed edge going from the Supervisor node to the user nodes, which meant that every message reaching the Supervisor node was pushed on to the user nodes. Therefore, when Aya was being addressed, we had to ensure that only a single final output from Aya was pushed to the users, and that intermediate messages, if any, did not reach them. The AyaSupervisor agent acts as that single point of contact for Aya: it interprets the intent of the incoming message, directs the message to the appropriate task-specific agent, and then outputs the final message to be shared with the users.
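One way to picture the supervisor's job is a classify-then-dispatch step whose return value is the only thing that leaves the Aya subgraph. In this sketch the supervisor's LLM call is replaced by a `classify` callable that returns an intent label; `SketchSupervisor`, the intent labels, and the fallback to `query` are assumptions for illustration.

```python
from typing import Callable

Handler = Callable[[str], str]  # a task-specific agent: message in, final text out


class SketchSupervisor:
    """Single point of contact: classify intent, dispatch, return one final message.

    `classify` stands in for the supervisor's LLM call (hypothetical).
    """

    def __init__(self, classify: Callable[[str], str], handlers: dict[str, Handler],
                 fallback: str = "query"):
        self.classify = classify
        self.handlers = handlers
        self.fallback = fallback

    def invoke(self, message: str) -> str:
        intent = self.classify(message).strip().lower()
        # Unknown intents go to the fallback handler instead of failing.
        handler = self.handlers.get(intent, self.handlers[self.fallback])
        # Only this return value reaches the user nodes; anything the handler
        # did internally stays inside the Aya subgraph.
        return handler(message)
```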

Design of AyaSummarizer: The AyaSummarizer agent is slightly more complex than the other Aya agents because it carries out a two-step process. In the first step, the agent determines the number of messages that need to be summarized, using an LLM call with its own prompt. In the second step, once we know how many messages to summarize, we collate those messages and pass them to the LLM to generate the actual summary. In this same step, the LLM also identifies any action items present in the messages and lists them separately.

So broadly there were three tasks: determining how many messages to summarize, summarizing the messages, and identifying action items. Because the first task proved difficult for the LLM without explicit examples, I made it a separate LLM call and combined the last two tasks into a second LLM call.
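The two-step flow can be sketched with stand-in callables for the two LLM calls. `parse_length`, `select_messages`, and `summarize` are hypothetical helpers. The sketch assumes the chat history is ordered oldest to newest and that the requested count refers to the most recent messages, with 0 or an unparseable reply meaning "summarize everything", as in the class above.

```python
from typing import Callable


def parse_length(raw: str) -> int:
    """Parse the step-1 LLM reply; 0 (meaning 'everything') on failure."""
    try:
        return max(int(raw.strip()), 0)
    except ValueError:
        return 0


def select_messages(history: list[str], length: int) -> list[str]:
    """All messages for 0, otherwise the last `length` (history is oldest-first)."""
    return list(history) if length == 0 else history[-length:]


def summarize(history: list[str], length_llm: Callable[[str], str],
              summary_llm: Callable[[str], str], request: str) -> str:
    # Step 1: one LLM call decides how many messages the request covers.
    length = parse_length(length_llm(request))
    # Step 2: a second call summarizes them (and would list action items).
    return summary_llm("\n".join(select_messages(history, length)))
```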

It may be possible to eliminate the additional LLM call and combine all three tasks into one. Potential options include:

- Providing very detailed examples that cover all three tasks in one step

- Generating a large set of examples to fine-tune an LLM to perform well on this task
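For the first option, a single call could carry worked examples for all three tasks and ask for structured output. The sketch below only builds such a few-shot prompt and parses a JSON reply. The JSON field names (`num_messages`, `summary`, `action_items`), `Example`, and both helpers are hypothetical, and whether an LLM handles the combined task reliably is exactly the open question raised above.

```python
import json
from dataclasses import dataclass


@dataclass
class Example:
    """One hypothetical worked example: request, chat, and the expected JSON reply."""
    request: str
    chat: list[str]
    reply: dict


def build_few_shot_prompt(examples: list[Example], request: str, chat: list[str]) -> str:
    parts = ["Decide how many recent messages the request refers to, summarize them, "
             "and list any action items. Reply with JSON only."]
    # Each example shows the full input and the exact JSON we expect back.
    for ex in examples:
        parts.append(f"Request: {ex.request}\nChat:\n" + "\n".join(ex.chat)
                     + f"\nReply: {json.dumps(ex.reply)}")
    parts.append(f"Request: {request}\nChat:\n" + "\n".join(chat) + "\nReply:")
    return "\n\n".join(parts)


def parse_reply(raw: str) -> tuple[str, list[str]]:
    """Extract summary and action items; fall back to the raw text if not a JSON object."""
    try:
        data = json.loads(raw)
        return str(data.get("summary", "")), [str(a) for a in data.get("action_items", [])]
    except (json.JSONDecodeError, AttributeError):
        return raw.strip(), []
```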

Role of AyaTranslator: One of our goals was to make Aya a multilingual AI assistant that can communicate in the user's preferred language. However, handling different languages internally within the Aya agents would be difficult: if the Aya agents' prompts are in English and the user message is in a different language, this could cause issues. To avoid such situations, we added a filtering step that translated any incoming user message addressed to Aya into English. As a result, all of the internal work within the Aya group of agents, including the output, was done in English. We didn't have to translate Aya's output back to the original language, because when the message reaches the users, each User agent translates it into its assigned language.
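The language flow described above reduces to two steps: normalize the incoming message to English before any Aya agent sees it, then let each user agent localize the English reply. In this sketch `to_english`, `supervisor`, and `translate` are stand-in callables for the real LLM chains, and `aya_pipeline` and `deliver` are hypothetical names.

```python
from typing import Callable

Translate = Callable[[str, str], str]  # hypothetical: (text, target_language) -> text


def aya_pipeline(message: str, to_english: Callable[[str], str],
                 supervisor: Callable[[str], str]) -> str:
    """Translate the incoming message to English, then run the English-only agents."""
    return supervisor(to_english(message))


def deliver(aya_reply: str, users: dict[str, str], translate: Translate) -> dict[str, str]:
    """Each user agent translates Aya's English reply into its own language."""
    return {user: translate(aya_reply, lang) for user, lang in users.items()}
```

Because localization already happens at the user nodes, the Aya side never needs a translate-back step.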
