There are tons of ways to jump into Machine Learning and AI. In this post, I’ll share how I got my server set up for ML/AI fun, including Python, GPUs, CUDA, and all that good stuff.

· Server Selection

· Server Configuration

I’m one of those people who used to build their own hardware and tinker with Linux back in the day, before package managers made life easier. If you’re like me, you probably remember the days of configure, make, sudo make install. But I digress.

Don’t get me wrong: I love cloud services, and I know AWS, GCP, and Microsoft Azure offer a ton of options for ML/AI. But honestly, just figuring out what each service does can be a challenge. Sometimes I just want good old-fashioned bare metal to get the job done, so I went with a dedicated GPU server. After a bit of searching, I found that https://www.gpu-mart.com/ had some solid options at decent prices, and ChatGPT helped me pick out a spec that seemed just right for what I needed:

- Dual 18-core Intel Xeon E5-2697 v4 CPUs

- 256GB RAM

- 240GB SSD + 2TB NVMe + 8TB SATA

- GPU: 3 × NVIDIA Tesla V100

Operating System

I went with my trusty go-to Linux server distro, Ubuntu 22.04. To be honest, I initially started with 24.04 because, hey, who doesn’t like the latest and greatest? But I quickly found out that CUDA and the NVIDIA drivers weren’t quite there yet on 24.04 at the time. It’s not that I’m against other distros; Ubuntu is just where I’ve felt at home for decades, and it has always met my needs.

I start by making sure the server is up to date with all packages, then reboot in case the kernel was updated:

```bash
sudo apt update
sudo apt full-upgrade -y
sudo apt autoremove -y
sudo apt autoclean -y
sudo reboot
```
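If you'd rather not reboot blindly, Ubuntu leaves a hint: when an upgrade (typically a new kernel) needs a restart, Ubuntu's update tooling creates /var/run/reboot-required and lists the packages responsible in /var/run/reboot-required.pkgs. The Python sketch below checks for those files. It assumes a stock Ubuntu install where that mechanism is active, and the function names are illustrative, not part of any tool.

```python
from pathlib import Path

# Assumption: stock Ubuntu, where the update tooling creates these files
# when an upgrade needs a restart to take effect.
REBOOT_FLAG = Path("/var/run/reboot-required")
REBOOT_PKGS = Path("/var/run/reboot-required.pkgs")


def parse_reboot_pkgs(text: str) -> list[str]:
    """Return the unique package names listed in reboot-required.pkgs, sorted."""
    return sorted(set(text.split()))


def reboot_required(flag: Path = REBOOT_FLAG, pkgs: Path = REBOOT_PKGS) -> tuple[bool, list[str]]:
    """Return (needs_reboot, packages_that_asked_for_it)."""
    if not flag.exists():
        return False, []
    names = parse_reboot_pkgs(pkgs.read_text()) if pkgs.exists() else []
    return True, names


if __name__ == "__main__":
    needed, names = reboot_required()
    print("Reboot required:" if needed else "No reboot needed.", " ".join(names))
```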

Next, we need to make sure we’ve got the basic packages needed for compiling and building software on Linux.

- build-essential: This is your go-to package that pulls in all the basic tools like GCC, g++, and make — everything you need to compile stuff from source.

- pkg-config: It helps you manage library flags so you don’t have to manually hunt them down when you’re compiling software.

- cmake: This one’s a build system generator — it’s like a smart assistant that figures out how to build your project across different environments.

- ninja-build: Think of this as a super-fast alternative to make. It speeds up the build process, especially for larger projects.

```bash
sudo apt install build-essential pkg-config cmake ninja-build
```
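To confirm the install worked, you can check that the executables these four packages provide are on your PATH. This Python sketch uses shutil.which; the package-to-executable mapping (build-essential providing gcc, g++ and make, ninja-build providing ninja) is my assumption about the usual Ubuntu layout, and missing_build_tools is a hypothetical helper name.

```python
import shutil

# Assumed mapping from the apt packages above to the executables they install.
BUILD_TOOLS = {
    "build-essential": ["gcc", "g++", "make"],
    "pkg-config": ["pkg-config"],
    "cmake": ["cmake"],
    "ninja-build": ["ninja"],
}


def missing_build_tools(tools: dict[str, list[str]] = BUILD_TOOLS, which=shutil.which) -> dict[str, list[str]]:
    """Map each apt package to the executables from it that are not on PATH."""
    missing = {}
    for package, executables in tools.items():
        absent = [exe for exe in executables if which(exe) is None]
        if absent:
            missing[package] = absent
    return missing


if __name__ == "__main__":
    problems = missing_build_tools()
    print("All build tools found." if not problems else f"Missing: {problems}")
```

Passing `which` as a parameter is only there so the check can be exercised without touching the real PATH.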

Python

Alright, I’ll be honest — I’m no Python guru. I actually much prefer working with Go. But if you want to dive into Machine Learning and AI these days, Python is kind of a necessary evil. Okay, maybe it’s not that evil — it’s just not Go.

The reality is that while there are some ML efforts in Go (and I honestly think Go would be way better for training and testing models), Python still has the most robust and mature ML/AI ecosystem right now. As tempting as it is to think about porting everything to Go, it’s probably faster to just switch languages and, you know, use the right tool for the job.

I installed Miniconda, which is a lightweight version of the full Anaconda. For a quick background, Anaconda is a popular distribution for Python and R, packed with tons of data science and machine learning tools. Miniconda, on the other hand, just includes the essentials — Python and Conda, a package manager that I find better at handling dependencies than pip. Plus, managing environments with it is a bit easier too.

```bash
wget https://repo.anaconda.com/miniconda/Miniconda3-latest-Linux-x86_64.sh
bash Miniconda3-latest-Linux-x86_64.sh
```

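The installer walks you through a few prep tasks: accepting the license, confirming the install location, and deciding whether to initialize conda in your shell profile. Once it finishes, open a new shell (or run source ~/.bashrc) so the conda command is available.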

NVIDIA Drivers

Now hang tight, because this part gets a little tricky, and honestly, I’ve never quite mastered it. There seem to be plenty of ways to either fail or succeed here. As you’ll remember, I’m setting up a server with three NVIDIA Tesla V100 GPUs, so getting the right drivers installed is crucial. This is key for CUDA (which we’ll get to soon) to properly tap into the GPUs, and in ML/AI, speed is everything. My server came with the GPUs already configured, but it didn’t have the NVIDIA drivers I needed. So, I went ahead and installed the latest server edition of the NVIDIA drivers to keep things running smoothly. Spoiler alert: it failed miserably the first few (okay, many) times.

After some frustrating web surfing, I came across a guide that made me realize my kernel version was too old for the drivers and CUDA version I wanted to install. It turned out that installing linux-generic-hwe-22.04 might solve the issue. According to ChatGPT, here’s the deal with that package:

The linux-generic-hwe-22.04 package is part of Ubuntu’s Hardware Enablement (HWE) stack for the 22.04 LTS release. Basically, it gives you a newer kernel than the one that originally came with Ubuntu 22.04, which means better hardware support, performance boosts, and security updates. By installing this package, you get the latest kernel improvements while still keeping the stability of the LTS version. It’s perfect if you’ve got newer hardware or just want the latest optimizations without jumping to a newer Ubuntu version.

```bash
sudo apt install linux-generic-hwe-22.04
sudo reboot

# After the reboot, install headers for the kernel that is now running
sudo apt-get install linux-headers-$(uname -r)
```
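Since the whole problem here was an outdated kernel, it helps to check which kernel you're actually running after the reboot and whether matching headers were installed, because the driver's kernel modules are built against them. This sketch parses the release string from platform.release() (the same value uname -r prints) and looks for /usr/src/linux-headers-<release>, which is where Ubuntu's linux-headers packages install. The helper names and example release strings are illustrative.

```python
import platform
import re
from pathlib import Path


def kernel_version(release: str) -> tuple[int, ...]:
    """Turn a release string like '6.8.0-40-generic' into (6, 8, 0)."""
    match = re.match(r"(\d+)\.(\d+)(?:\.(\d+))?", release)
    if not match:
        raise ValueError(f"unrecognized kernel release: {release!r}")
    # A missing patch level (e.g. '6.5') is treated as 0.
    return tuple(int(part or 0) for part in match.groups())


def headers_dir(release: str, root: Path = Path("/")) -> Path:
    """Directory that Ubuntu's linux-headers-<release> package installs."""
    return root / "usr" / "src" / f"linux-headers-{release}"


if __name__ == "__main__":
    release = platform.release()
    print("Running kernel:", release, kernel_version(release))
    print("Headers installed:", headers_dir(release).is_dir())
```

Returning a tuple makes "is this kernel new enough?" a plain comparison, for example `kernel_version(release) >= (6, 5, 0)` against whatever minimum your driver's release notes require.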

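Then I added NVIDIA’s CUDA repository and installed the CUDA 12.2 toolkit. Note that the cuda-toolkit-12-2 package does not include the driver itself; the same repository also provides driver packages (such as cuda-drivers) if you need them.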

```bash
wget https://developer.download.nvidia.com/compute/cuda/repos/ubuntu2204/x86_64/cuda-keyring_1.1-1_all.deb
sudo dpkg -i cuda-keyring_1.1-1_all.deb
sudo apt update
sudo apt -y install cuda-toolkit-12-2
```

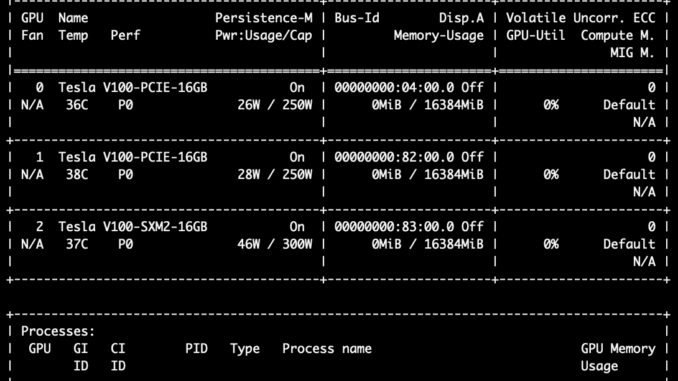

And voilà! I ran the nvidia-smi command, and this is the output you want to see:

The server is now all set and ready to start using GPU acceleration for all your ML/AI tasks.
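Beyond eyeballing the table, nvidia-smi can emit machine-readable output with --query-gpu and --format=csv, which is handy for scripting a quick check that all three V100s are visible. This is a sketch: the query fields (index, name, driver_version, memory.total) and the helper names are my own picks, not something from the setup above.

```python
import subprocess

FIELDS = ["index", "name", "driver_version", "memory.total"]


def parse_gpu_csv(csv_text: str) -> list[dict]:
    """Parse `nvidia-smi --format=csv,noheader,nounits` rows into dicts."""
    gpus = []
    for line in csv_text.strip().splitlines():
        index, name, driver, memory_mib = (field.strip() for field in line.split(","))
        gpus.append({"index": int(index), "name": name, "driver": driver, "memory_mib": int(memory_mib)})
    return gpus


def query_gpus() -> list[dict]:
    """Run nvidia-smi and return one dict per visible GPU."""
    out = subprocess.run(
        ["nvidia-smi", f"--query-gpu={','.join(FIELDS)}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_gpu_csv(out)


if __name__ == "__main__":
    gpus = query_gpus()
    print(f"{len(gpus)} GPU(s) visible")
    for gpu in gpus:
        print(gpu)
```

The nounits option drops the "MiB" suffix so memory can be parsed as an integer.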

A quick word of caution, especially if you’re not familiar with this territory: the NVIDIA drivers and firmware come with kernel modules, which can be tricky to handle. If you’re upgrading or reinstalling the drivers, or doing anything else on a system that already has NVIDIA kernel modules installed, unload those modules before making changes and reboot afterward. You can check whether any NVIDIA kernel modules are loaded with this command:

```bash
lsmod | grep nvidia
```

If the output lists any nvidia modules (for example nvidia, nvidia_uvm, nvidia_modeset, or nvidia_drm), kernel modules are loaded and you’ll need to unload them.

You can unload the kernel modules with the following commands, and you need to do it in this sequence because the first three modules depend on nvidia, so it should be the last one to unload:

```bash
sudo rmmod nvidia_drm
sudo rmmod nvidia_modeset
sudo rmmod nvidia_uvm
sudo rmmod nvidia
```
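The required order follows from the "Used by" column of lsmod: a module can only be removed once nothing still loaded uses it. The Python sketch below parses lsmod output and derives a valid removal order for the nvidia* modules. It may print a different but equally valid order than the one above (for example nvidia_uvm before nvidia_modeset). It's an illustration, not a replacement for checking lsmod yourself: a module whose use count is non-zero but lists no users is held by a running process, and rmmod will still refuse to unload it.

```python
import subprocess


def parse_lsmod(text: str) -> dict[str, set[str]]:
    """Map module name -> set of modules listed in lsmod's "Used by" column."""
    deps = {}
    for line in text.strip().splitlines()[1:]:  # first line is the header
        parts = line.split()
        if not parts:
            continue
        users = parts[3].split(",") if len(parts) > 3 else []
        # Skip placeholders such as "-" or "[permanent]".
        deps[parts[0]] = {u for u in users if u and u != "-" and not u.startswith("[")}
    return deps


def unload_order(deps: dict[str, set[str]], prefix: str = "nvidia") -> list[str]:
    """Order modules so each one is removed after every module that uses it."""
    pending = {name: users for name, users in deps.items() if name.startswith(prefix)}
    removed: set[str] = set()
    order: list[str] = []
    while pending:
        # Ready = every user of this module has already been removed.
        ready = sorted(name for name, users in pending.items() if users <= removed)
        if not ready:
            raise RuntimeError(f"modules still in use by others: {sorted(pending)}")
        for name in ready:
            order.append(name)
            removed.add(name)
            del pending[name]
    return order


if __name__ == "__main__":
    lsmod = subprocess.run(["lsmod"], capture_output=True, text=True, check=True).stdout
    for module in unload_order(parse_lsmod(lsmod)):
        print(f"sudo rmmod {module}")
```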

You might also see something like:

```
Failed to initialize NVML: Driver/library version mismatch
NVML library version: 560.28
```

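This happens when the user-space driver libraries have been upgraded but the old kernel module is still loaded. The fix is to unload the kernel modules and then run nvidia-smi to load the updated ones (a reboot also works).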

Remember when I mentioned that I’m no Python guru? Well, I’m not going to pretend otherwise. So, to give credit where it’s due, a lot of what I’m going to share here is inspired by the brilliant post by Amir Saniyan, which you can check out here: https://gist.github.com/amir-saniyan/b3d8e06145a8569c0d0e030af6d60bea

First, let’s dive into virtual environments. A virtual environment is an isolated Python setup with its own interpreter version and packages, and you can switch between them to test, say, a different version of Python or of a specific library. Because each environment is configured independently, you can experiment with different module versions or development and testing strategies, and quickly run the same code in several setups. Pretty neat if you ask me. I’ll walk you through a few environments I set up for myself, but there are plenty more suggestions in the post I mentioned above, so definitely check it out. Note that I’m using Conda environments rather than Python’s built-in venv; they serve the same purpose but differ in some details, for example Conda can also install non-Python dependencies such as CUDA libraries.

Machine Learning Environment

The first environment is my basic machine learning setup, with all the essential modules I use:

- NumPy: Handles arrays and math operations — perfect for crunching numbers.

- SciPy: Builds on NumPy with extra tools for scientific and technical computing.

- Matplotlib: The go-to for creating all kinds of plots and charts.

- IPython: An upgraded Python shell with cool features like tab completion and syntax highlighting.

- Jupyter: A web app for creating and sharing notebooks with live code, visualizations, and notes.

- Pandas: Makes data manipulation a breeze with its powerful DataFrame structure.

- SymPy: Lets you do algebra and calculus symbolically, not just with numbers.

- scikit-learn: Simplifies machine learning with tools for building and testing models.

- pip: Pip is the default package manager for Python. It’s what you use to install and manage Python libraries and packages, making it easy to add new tools to your projects.

- ipykernel: IPykernel is a package that provides the Python backend for Jupyter notebooks. It allows you to run Python code in a Jupyter notebook, enabling you to interactively write and test your code.

```bash
conda create -n ml -c conda-forge pip numpy scipy matplotlib ipython jupyter pandas sympy scikit-learn ipykernel
conda activate ml
```

RAPIDS

RAPIDS is a blazing fast suite of GPU-accelerated data science libraries from NVIDIA, and I highly recommend setting up an environment with them. The install process can be a bit tricky to figure out, so here’s the short version: head over to the RAPIDS installation page (https://rapids.ai), use the form under “Install Rapids” to configure what you need, and click “copy command.” You’ll get something like the command below. But first, let me explain what I’m installing in my environment.

- cudf: A GPU-accelerated DataFrame library that works like Pandas but faster, designed for processing large datasets on GPUs.

- cuml: A machine learning library that runs on GPUs, offering fast implementations of algorithms like those in scikit-learn.

- cugraph: A GPU-accelerated graph analytics library, great for running complex graph algorithms quickly.

- cuxfilter: A GPU-accelerated dashboarding library for interactive data visualization, helping you create fast, responsive visualizations.

- cucim: A GPU-accelerated library for image processing, particularly useful in medical imaging and other large-scale image analysis tasks.

- cuspatial: A library for spatial and geographic data processing on GPUs, enabling faster analysis of large spatial datasets.

- cuproj: A GPU-accelerated version of the PROJ library for coordinate transformations, making geospatial computations faster.

- pylibraft: A Python library that provides foundational components for building GPU-accelerated machine learning algorithms.

- raft-dask: A Dask-based library that enables scalable and distributed GPU-accelerated machine learning using RAFT components.

- cuvs: A library for GPU-accelerated vector search and clustering, such as approximate nearest-neighbor search over embeddings.

- python=3.11: Pins the environment to Python 3.11, a version this RAPIDS release supports (not the newest Python overall).

- 'cuda-version>=12.0': Constrains the installed packages to CUDA 12.0 or newer, the platform that enables GPU acceleration, so they stay compatible with the CUDA 12 setup on the server.

- 'pytorch=*=*cuda*': Installs any PyTorch version whose build string contains "cuda", i.e. a CUDA-enabled build of the deep learning framework, so it can use the GPUs for faster model training.

- tensorflow: Another popular deep learning framework, designed for building and training neural networks, also supports GPU acceleration.

- xarray-spatial: A library for fast spatial analysis on large datasets, working seamlessly with xarray for processing raster data.

- graphistry: A visual graph analytics tool that helps you explore and visualize large graphs quickly and interactively.

- dash: A web application framework designed for building analytical web apps in Python, perfect for creating interactive dashboards.

```bash
conda create -n rapids -c rapidsai -c conda-forge -c nvidia \
    cudf=24.08 cuml=24.08 cugraph=24.08 cuxfilter=24.08 cucim=24.08 cuspatial=24.08 \
    cuproj=24.08 pylibraft=24.08 raft-dask=24.08 cuvs=24.08 python=3.11 \
    'cuda-version>=12.0' 'pytorch=*=*cuda*' tensorflow xarray-spatial graphistry dash
conda activate rapids
```
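With the rapids environment active, it's worth confirming that the GPU-enabled frameworks actually see the three V100s. The sketch below probes PyTorch (torch.cuda.device_count()), TensorFlow (tf.config.list_physical_devices("GPU")) and CuPy (cupy.cuda.runtime.getDeviceCount()). CuPy isn't in the command above; I'm assuming it comes along as a cuDF dependency. A library that isn't installed is reported as None rather than crashing the check.

```python
import importlib

# How each library reports the GPUs it can see.
PROBES = {
    "torch": lambda mod: mod.cuda.device_count(),
    "tensorflow": lambda mod: len(mod.config.list_physical_devices("GPU")),
    "cupy": lambda mod: mod.cuda.runtime.getDeviceCount(),
}


def visible_gpus(probes=PROBES) -> dict:
    """Return {library: GPU count}, None if not installed, or an error string."""
    results = {}
    for name, probe in probes.items():
        try:
            module = importlib.import_module(name)
        except ImportError:
            results[name] = None  # library not installed in this environment
            continue
        try:
            results[name] = probe(module)
        except Exception as exc:  # e.g. driver or CUDA runtime problems
            results[name] = f"error: {exc}"
    return results


if __name__ == "__main__":
    for name, count in visible_gpus().items():
        print(f"{name:10} {count}")
```

On a healthy setup, each installed library should report the same GPU count; a mismatch usually points at a CPU-only build slipping into the environment.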

With everything set up, you’re now ready to dive into Machine Learning and Artificial Intelligence using your GPU-accelerated server. One tool that will be especially helpful in this context is Jupyter Notebook, which can seamlessly take advantage of the various environments you’ve configured. In the next article on this subject, I’ll walk you through how to set up a Jupyter Notebook server that’s fully integrated with your different virtual environments. Stay tuned, and happy coding!
