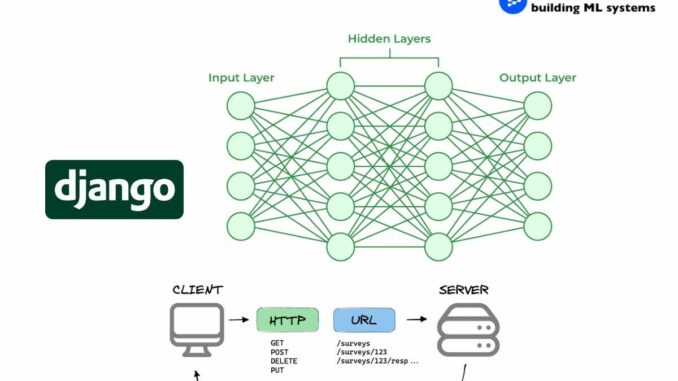

In today’s data-driven world, integrating machine learning and deep learning capabilities into web applications has become increasingly popular. Django, a powerful Python web framework, provides an excellent foundation for building APIs that leverage the power of deep learning models.

In this guide, we’ll explore the process of developing a Deep Learning API using the Django REST Framework. We’ll cover the necessary steps and best practices, and provide detailed code examples to help you create a robust and scalable API.

The original & complete content is at UnfoldAI blog: https://unfoldai.com/developing-deep-learning-api-django/

Django offers several advantages that make it a strong choice for developing machine learning applications:

- Rapid development: Django’s “batteries-included” philosophy means it comes with a wide range of built-in features and tools, allowing you to focus on writing the core logic of your application rather than spending time on boilerplate code.

- Security: Django takes security seriously and provides built-in protection against common web vulnerabilities such as cross-site scripting (XSS), cross-site request forgery (CSRF), and SQL injection. It ensures that your application and sensitive data remain secure.

- Scalability: Django’s architecture is designed to handle high traffic and scale well. It follows the Model-View-Template (MVT) pattern, Django’s variant of Model-View-Controller (MVC), which promotes a clean separation of concerns and makes it easier to scale your application as it grows.

- Extensive ecosystem: Django has a thriving community and a vast ecosystem of packages and extensions. This means you can leverage existing libraries and tools to speed up development and add additional functionality to your application.

You may also want to look at the most recent Django Developers Survey.

Before we start building our Deep Learning API, let’s make sure the necessary prerequisites are in place:

1. Python: Make sure you have Python 3.9 or above installed on your system. TensorFlow 2.16 supports Python 3.9 through 3.12, so choose a version in that range rather than simply the newest Python release.

2. Deep Learning libraries: Install TensorFlow 2.16.2 and Keras 3.4.1, which are powerful deep learning frameworks that we’ll utilize in our API. You can install them using pip:

pip install tensorflow==2.16.2 keras==3.4.1

3. Django and Django REST framework: Install Django and the Django REST Framework using pip:

pip install django djangorestframework

4. IDE: Choose an Integrated Development Environment (IDE) that you’re comfortable with. PyCharm, Visual Studio Code, and JetBrains’ DataSpell all provide excellent support for Python and Django development.
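To confirm that the environment matches the packages listed above, a quick check along these lines can help. This is a minimal sketch using only the standard library; the helper name `installed_versions` is made up for illustration, and the package list simply mirrors the pip commands in this section.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_versions(packages):
    """Return {package: version string, or None if it is not installed}."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


if __name__ == "__main__":
    # Package names as used in the pip install commands above
    wanted = ["tensorflow", "keras", "django", "djangorestframework"]
    for name, ver in installed_versions(wanted).items():
        print(f"{name}: {ver or 'not installed'}")
```

Any package reported as not installed needs to be added with pip before continuing.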

Now that our environment is set up, let’s create a new Django project and app for our Deep Learning API:

1. Open a terminal or command prompt and navigate to the directory where you want to create your project.

2. Create a new directory for your project and navigate into it:

mkdir deep_learning_api

cd deep_learning_api

3. Create a new Django project using the django-admin command:

django-admin startproject api_project .

This will create a new Django project named “api_project” in the current directory.

4. Create a new Django app within the project for our deep learning functionality:

python manage.py startapp deep_learning_app

This will create a new app named deep_learning_app within our project.

With our project structure in place, let’s configure our deep learning app:

Open the settings.py file located in the project directory (api_project/settings.py) and add the following lines to the INSTALLED_APPS list:

    INSTALLED_APPS = [
        # ...
        'rest_framework',
        'deep_learning_app',
    ]

This tells Django to include the Django REST Framework and our deep learning app in the project.

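In the same settings.py file, specify the directory where we’ll store our deep learning models. Projects generated by recent Django versions do not import os in settings.py, so add the import at the top of the file if it is missing:

    import os

    MODELS = os.path.join(BASE_DIR, 'models')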

This sets the MODELS variable to the path of the “models” directory within our project.
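If the model file is missing from that directory, the problem only surfaces later, when Keras tries to load it. A small helper can fail earlier with a clearer message. The sketch below is illustrative only: `resolve_model_path` is a hypothetical name, and the default filename is the placeholder used elsewhere in this guide.

```python
from pathlib import Path


def resolve_model_path(models_dir, filename="your_model.keras"):
    """Return the full path to a model file, failing early if it is absent."""
    path = Path(models_dir) / filename
    if not path.is_file():
        raise FileNotFoundError(f"Model file not found: {path}")
    return path
```

In the project, this could be called as `resolve_model_path(settings.MODELS)` before handing the result to the model loader.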

Open the apps.py file that startapp generated in the deep_learning_app directory and replace its contents with the following code:

    import os

    import keras
    from django.apps import AppConfig
    from django.conf import settings


    class DeepLearningAppConfig(AppConfig):
        default_auto_field = 'django.db.models.BigAutoField'
        name = 'deep_learning_app'

        # Loaded once, when this module is first imported
        model_path = os.path.join(settings.MODELS, 'your_model.keras')
        model = keras.models.load_model(model_path)

This code defines a configuration class for our deep learning app. It loads a pre-trained deep learning model from the specified file path (your_model.keras) when the app configuration module is imported, so the model is read from disk once per process rather than on every request. Note that we’re using the .keras format, which is the recommended format for Keras 3+.
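One consequence of loading the model in the class body is that it also loads for every management command, such as migrate, because Django imports the app configuration at startup. A possible alternative is to defer loading until the first prediction and cache the result. The following is a minimal sketch of that pattern, not the approach used in this guide: `make_cached_loader` and `fake_load` are hypothetical names, and `fake_load` stands in for `keras.models.load_model` so the example runs without TensorFlow installed.

```python
from functools import lru_cache


def make_cached_loader(load_fn):
    """Wrap a loader so each distinct path is loaded at most once per process."""

    @lru_cache(maxsize=None)
    def get_model(path):
        return load_fn(path)

    return get_model


# Stand-in loader for demonstration only. In the Django app this role
# would be played by keras.models.load_model (an assumption about wiring).
calls = []


def fake_load(path):
    calls.append(path)
    return {"loaded_from": path}


get_model = make_cached_loader(fake_load)
first = get_model("models/your_model.keras")   # triggers the load
second = get_model("models/your_model.keras")  # served from the cache
```

With this pattern, a view would call `get_model(path)` instead of reading a class attribute, and only the first request in each process pays the loading cost.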

Open the views.py file in the deep_learning_app directory and add the following code:

    import numpy as np
    from rest_framework.decorators import api_view
    from rest_framework.response import Response

    from .apps import DeepLearningAppConfig


    @api_view(['POST'])
    def predict(request):
        # Get input data from request
        input_data = request.data['input']

        # Preprocess input data
        preprocessed_data = preprocess(input_data)

        # Make predictions using loaded model
        prediction = DeepLearningAppConfig.model.predict(preprocessed_data)

        # Postprocess and format the prediction
        result = postprocess(prediction)

        return Response({'prediction': result})


    def preprocess(input_data):
        # Implement your preprocessing logic here
        # For example, converting input to numpy array and normalizing
        return np.array(input_data).astype('float32') / 255.0


    def postprocess(prediction):
        # Implement your postprocessing logic here
        # For example, converting prediction probabilities to class labels
        return np.argmax(prediction, axis=1).tolist()


This code defines a view function named predict that handles POST requests to our API endpoint. It retrieves the input data from the request, preprocesses it, makes predictions using the loaded deep learning model, postprocesses the predictions, and returns the result as a JSON response. We’ve also added simple preprocess and postprocess functions as examples.
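The view reads `request.data['input']` directly, so a request without that field would fail with a server error rather than a helpful message. One way to guard against this is to validate the payload before preprocessing. The sketch below is an assumption about what such a check could look like: `validate_input` is a hypothetical helper, and it assumes the input is a flat list of numbers, as in the cURL example in this guide. Models that expect nested arrays would need a different check.

```python
def validate_input(payload):
    """Check a request payload and return (values, error_message).

    Exactly one of the two returned items is None.
    """
    if not isinstance(payload, dict) or "input" not in payload:
        return None, "Missing 'input' field."
    values = payload["input"]
    if not isinstance(values, list) or not values:
        return None, "'input' must be a non-empty list."
    for value in values:
        # bool is a subclass of int, so exclude it explicitly
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            return None, "'input' must contain only numbers."
    return [float(value) for value in values], None
```

Inside the view, an error message returned by this helper could be sent back with a 400 status instead of letting the exception propagate.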

Create a new file named urls.py in the deep_learning_app directory and add the following code:

    from django.urls import path

    from . import views

    urlpatterns = [
        path('predict/', views.predict, name='predict'),
    ]

This code defines the URL patterns for our deep learning app. It maps the /predict/ endpoint to the predict view function we defined earlier.

Update the project-level urls.py file (api_project/urls.py) to include the URL patterns from our deep learning app:

    from django.urls import include, path

    urlpatterns = [
        # ...
        path('api/', include('deep_learning_app.urls')),
    ]

This tells Django to include the URL patterns from our deep learning app under the /api/ prefix, so the predict view is served at /api/predict/.

With our Deep Learning API set up, let’s test it to ensure it’s working as expected:

1. Make sure you have your pre-trained deep learning model file (your_model.keras) placed in the models directory within your project.

2. Open a terminal or command prompt and navigate to the project directory.

3. Run the following commands to apply database migrations and start the development server:

python manage.py makemigrations

python manage.py migrate

python manage.py runserver

Use a tool like Postman or cURL to send a POST request to http://localhost:8000/api/predict/ with the necessary input data. For example, using cURL:

curl -X POST -H "Content-Type: application/json" -d '{"input": [0, 1, 2, 3, 4]}' http://localhost:8000/api/predict/

Replace the input data with actual data required by your deep learning model.

The API should respond with the predicted output in JSON format.
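The same request can be sent from Python without extra dependencies. The sketch below uses only the standard library; the function names `build_predict_request` and `send` are illustrative, and the default base URL assumes the development server is running locally on port 8000 as described above.

```python
import json
import urllib.request


def build_predict_request(values, base_url="http://localhost:8000"):
    """Build (but do not send) a JSON POST request for the predict endpoint."""
    body = json.dumps({"input": values}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/api/predict/",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request, timeout=10):
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

With the server running, `send(build_predict_request([0, 1, 2, 3, 4]))` should return a dictionary containing the `prediction` key produced by the view.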

When it comes to deploying your Deep Learning API to production, there are several factors to consider:

- Web server: Use a production-grade ASGI server like Uvicorn or Hypercorn to serve your Django application. These servers are designed to handle high traffic and provide better performance compared to the development server.

- Reverse proxy: Configure a reverse proxy server like Nginx in front of your web server. Nginx can handle tasks such as load balancing, SSL termination, and serving static files efficiently.

- Containerization: Consider using containerization technologies like Docker to package your application along with its dependencies. Containerization provides a consistent and reproducible environment across different deployment platforms.

- Scalability: Assess the scalability requirements of your API based on the expected traffic and computational resources needed. You may need to deploy your application on multiple servers or use cloud platforms that provide auto-scaling capabilities.

- Monitoring and logging: Implement monitoring and logging mechanisms to keep track of your API’s performance, errors, and usage. Tools like Prometheus, Grafana, and the ELK stack can help you gain insights into your application’s behavior.

- Security: Ensure that your API is secure by following best practices such as using HTTPS, implementing authentication and authorization mechanisms, and regularly updating your dependencies to address any security vulnerabilities.

- Model versioning: Implement a system for versioning your machine learning models. This allows you to easily roll back to previous versions if needed and maintain multiple versions of your model in production.
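As an illustration of the model versioning point, one simple approach is to encode the version in the filename and select a file at load time. This is only a sketch under an assumed naming scheme (`model_v<number>.keras`), which is not something this guide prescribes; `list_versions` and `pick_model` are hypothetical helpers.

```python
import re
from pathlib import Path

# Hypothetical naming scheme: model_v<integer>.keras
_VERSION_PATTERN = re.compile(r"^model_v(\d+)\.keras$")


def list_versions(models_dir):
    """Return {version number: path} for every matching file in models_dir."""
    versions = {}
    for path in Path(models_dir).iterdir():
        match = _VERSION_PATTERN.match(path.name)
        if match and path.is_file():
            versions[int(match.group(1))] = path
    return versions


def pick_model(models_dir, version=None):
    """Return the path for a given version, or the highest one if None."""
    versions = list_versions(models_dir)
    if not versions:
        raise FileNotFoundError(f"No versioned models in {models_dir}")
    if version is None:
        version = max(versions)
    if version not in versions:
        raise KeyError(f"Model version {version} not found")
    return versions[version]
```

Rolling back then amounts to passing an earlier version number, for example from an environment variable or a setting, instead of relying on the default of the highest version.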


Developing a Deep Learning API with Django continues to provide a powerful and flexible way to integrate machine learning capabilities into your web applications. By following the steps outlined in this comprehensive guide and leveraging the Django REST Framework, you can create a robust and scalable API that serves predictions from your deep learning models.

Remember to handle data preprocessing, model loading, and postprocessing efficiently to ensure optimal performance. Additionally, consider the deployment aspects, such as using production-grade ASGI servers, containerization, and monitoring, to ensure your API is reliable and can handle the desired traffic.

With Django’s extensive features and the integration of modern deep learning libraries like Keras 3, you have the tools to build cutting-edge applications that harness the power of artificial intelligence. So go ahead, experiment with different models and architectures, and create innovative solutions that solve real-world problems.
