If you’re a machine learning enthusiast, you have probably come across the term logits. It shows up constantly around loss functions such as Binary Cross-Entropy and Categorical Cross-Entropy, for example in their from_logits argument. But what does it actually mean?

The term has slightly different meanings in different domains.

In statistics, the logit of a probability p is its log-odds: log(p / (1 - p)).
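To make the statistical definition concrete, here is a small plain-Python sketch. The helper names logit and sigmoid are my own choices, not taken from any library. It shows that the sigmoid is the inverse of the logit: it maps a log-odds value back to the original probability.

```python
import math

def logit(p: float) -> float:
    """Log-odds of a probability p, which must lie strictly between 0 and 1."""
    if not 0.0 < p < 1.0:
        raise ValueError("p must lie strictly between 0 and 1")
    return math.log(p / (1.0 - p))

def sigmoid(z: float) -> float:
    """Inverse of logit: maps any real number back into (0, 1)."""
    # Branch on the sign so math.exp never receives a large positive argument
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

for p in (0.1, 0.5, 0.8):
    z = logit(p)
    print(f"p={p:.1f}  logit={z:+.3f}  sigmoid(logit)={sigmoid(z):.1f}")
```

A probability of 0.5 (even odds) gives a logit of 0. Probabilities above 0.5 give positive logits and probabilities below 0.5 give negative ones.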

In logistic regression, the logits are the linear outputs Wx + b (you know these), which a sigmoid then turns into probabilities.
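The following plain-Python sketch computes a single logistic-regression prediction to show how the two definitions line up. The weights, bias and input are hypothetical values picked only for illustration. The linear score z = w·x + b is the logit, the sigmoid turns it into a probability, and taking the log-odds of that probability gives z back.

```python
import math

# Hypothetical weights, bias and input, chosen only for illustration
w = [0.5, -1.0, 2.0]
b = 0.1
x = [1.0, 2.0, 0.5]

# The logit is the linear part of the model: z = w·x + b
z = sum(wi * xi for wi, xi in zip(w, x)) + b

# The sigmoid turns the logit into a probability in (0, 1)
p = 1.0 / (1.0 + math.exp(-z))

# The log-odds of p recover the logit, matching the statistical definition
recovered = math.log(p / (1.0 - p))

print(f"logit z = {z:.3f}, probability p = {p:.3f}, log-odds of p = {recovered:.3f}")
```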

In deep learning, logits are the raw outputs of the last layer, i.e. the values that would be fed into a final softmax or sigmoid activation.

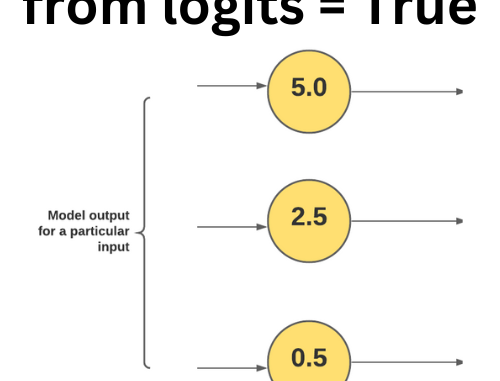

In other words, logits are the unnormalized outputs of a model before they are transformed into probabilities. They can be any real number, positive or negative.
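The softmax function performs that transformation for multi-class outputs. Here is a minimal plain-Python sketch of it. The name softmax and the example logits are illustrative assumptions, not code from a specific library. A Keras layer with activation='softmax' applies the same mathematical function.

```python
import math

def softmax(logits):
    """Turn a list of raw logits into probabilities that sum to 1."""
    # Subtracting the max leaves the result unchanged but keeps exp() from overflowing
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, -1.0]  # raw scores: any real numbers, negatives allowed
probs = softmax(logits)
print([round(p, 3) for p in probs])  # [0.705, 0.259, 0.035]
```

The ordering of the logits is preserved (the largest logit gets the largest probability), but only the outputs of softmax are valid probabilities.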

```python
import tensorflow as tf
tf.keras.layers.Dense(10, activation=None)  # no activation: the 10 outputs are raw logits
```

If the final layer does not apply a softmax, its outputs are logits, so we should set from_logits=True when defining the loss function:

```python
from tensorflow.keras.losses import CategoricalCrossentropy
loss_fn = CategoricalCrossentropy(from_logits=True)
```
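Conceptually, from_logits=True tells the loss to convert the logits into (log-)probabilities itself before computing cross-entropy. The plain-Python sketch below illustrates that idea. It is not Keras’s actual implementation, and the helper names are hypothetical. It computes the loss once from raw logits through a log-softmax and once from softmax probabilities, and both paths give the same value.

```python
import math

def log_softmax(logits):
    """log(softmax(logits)) computed with the log-sum-exp trick for stability."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(v - m) for v in logits))
    return [v - log_sum for v in logits]

def cce_from_logits(y_true, logits):
    """Categorical cross-entropy when the model outputs raw logits."""
    return -sum(t * lp for t, lp in zip(y_true, log_softmax(logits)))

def cce_from_probs(y_true, probs):
    """Categorical cross-entropy when the model already outputs probabilities."""
    return -sum(t * math.log(p) for t, p in zip(y_true, probs))

y_true = [0.0, 1.0, 0.0]   # one-hot label: class 1 is the correct class
logits = [2.0, 1.0, -1.0]  # e.g. the output of a Dense layer with no activation
probs = [math.exp(lp) for lp in log_softmax(logits)]  # what a softmax layer would output

print(f"{cce_from_logits(y_true, logits):.3f}")  # 1.349
print(f"{cce_from_probs(y_true, probs):.3f}")    # 1.349, the same loss
```

Working in log space has a practical benefit. For logits such as [1000.0, -1000.0], computing math.exp(1000.0) directly raises an OverflowError, while log_softmax still returns finite values. Numerical stability of this kind is one commonly cited reason to let the loss handle the logits.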

```python
tf.keras.layers.Dense(10, activation='softmax')  # outputs are probabilities that sum to 1
```

If the final layer already applies a softmax (or a sigmoid, for binary classification), its outputs are probabilities, so we should set from_logits=False:

```python
loss_fn = CategoricalCrossentropy(from_logits=False)
```

Since from_logits defaults to False, we don’t have to set it explicitly in this case. We can also write:

```python
loss_fn = CategoricalCrossentropy()  # from_logits defaults to False
```

Keep in mind that, by default, these loss functions expect the model’s outputs to be probabilities, not raw logits. If you pass raw logits without setting from_logits=True, the loss is computed on values that are not a valid probability distribution, so the reported loss can be silently wrong and training can suffer.
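The plain-Python sketch below shows why this goes wrong. It applies a cross-entropy formula that assumes probabilities to raw logits. It does not reproduce what Keras does internally. It only demonstrates the mathematical problem, and the helper names are hypothetical.

```python
import math

def cce_from_probs(y_true, probs):
    """Cross-entropy that ASSUMES probs is a valid probability distribution."""
    # Only the true class contributes for a one-hot label
    return -sum(t * math.log(p) for t, p in zip(y_true, probs) if t > 0)

def softmax(logits):
    """Turn raw logits into probabilities that sum to 1."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

y_true = [1.0, 0.0, 0.0]   # one-hot label: class 0 is the correct class
logits = [2.0, 1.0, -1.0]  # raw model outputs

right = cce_from_probs(y_true, softmax(logits))  # logits converted to probabilities first
wrong = cce_from_probs(y_true, logits)           # raw logits passed in by mistake

print(f"correct loss: {right:.3f}")   # 0.349
print(f"mistaken loss: {wrong:.3f}")  # -0.693
```

The mistaken loss is negative, which is impossible for a true cross-entropy. If the correct class had a negative logit, math.log would raise a ValueError instead. Either way, the number no longer measures how well the model fits the label.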

Hope you understood 🙂
