TensorFlow GNN (TF-GNN) is a library designed to simplify building and working with Graph Neural Networks (GNNs) on the TensorFlow platform. GNNs are a type of neural network designed specifically for data structured as graphs: networks of nodes connected by edges.

What is a GNN?

A graph neural network (GNN) is a type of artificial neural network that processes data represented as graphs. A graph is a data structure made of nodes and edges: nodes are entities such as people, places, or things, and edges define the relationships between them. GNNs rely on a technique called message passing, in which each node updates its representation by exchanging information with its neighbors. GNNs are used for tasks such as classifying nodes, predicting links, and predicting properties of whole graphs.

A GNN consists of several key components:

1. Data Representation:

- Graph: The core data structure is a graph, consisting of nodes (also called vertices) representing entities and edges (links) connecting them.

- Features: Each node (and optionally each edge) can carry a feature vector with additional information (e.g., numerical attributes or numerically encoded text descriptions).
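As a minimal sketch in plain Python (no GNN library assumed), the seven-node example graph used in the code later in this article can be stored as an edge list, an adjacency dictionary, and one feature vector per node. The two features chosen here (degree and a leaf flag) are illustrative assumptions, not part of the original example.

```python
# Hypothetical example: a small undirected graph stored as an edge list,
# an adjacency dictionary, and one feature vector per node.
edges = [("A", "B"), ("A", "C"), ("B", "D"), ("B", "E"), ("C", "F"), ("C", "G")]

def build_adjacency(edge_list):
    """Return {node: sorted list of neighbors}, treating edges as undirected."""
    adj = {}
    for u, v in edge_list:
        adj.setdefault(u, []).append(v)
        adj.setdefault(v, []).append(u)
    return {node: sorted(nbrs) for node, nbrs in adj.items()}

adjacency = build_adjacency(edges)

# Illustrative node features: [degree, is_leaf] for each node.
features = {
    node: [float(len(nbrs)), 1.0 if len(nbrs) == 1 else 0.0]
    for node, nbrs in adjacency.items()
}

print(adjacency["A"])  # ['B', 'C']
print(features["B"])   # [3.0, 0.0]
```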

2. Message Passing Mechanism:

- This is the heart of GNNs. Nodes iteratively exchange information with their neighbors through edges.

- At each step, a message is computed along every edge from the neighboring node's features, and an aggregation function (such as sum, mean, or max) combines all incoming messages for each node.

- The aggregated message is then transformed by a neural network layer, capturing the node's local context.
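The message-passing step can be sketched in plain Python as follows. This assumes sum aggregation and a hand-picked linear layer with ReLU (`W` and `b` are made-up weights); real GNN layers learn these weights and may use other aggregators such as mean or max.

```python
# A minimal message-passing step in plain Python (no GNN library assumed).
# Neighbor states are aggregated by summation, then passed through a small,
# hand-picked linear layer with ReLU.

def aggregate(adjacency, states):
    """Sum the state vectors of each node's neighbors."""
    out = {}
    for node, nbrs in adjacency.items():
        total = [0.0] * len(states[node])
        for nbr in nbrs:
            total = [t + s for t, s in zip(total, states[nbr])]
        out[node] = total
    return out

def dense_relu(vec, weights, bias):
    """y = relu(W x + b) for a weight matrix given as a list of rows."""
    return [max(0.0, sum(w * x for w, x in zip(row, vec)) + b)
            for row, b in zip(weights, bias)]

adjacency = {"A": ["B", "C"], "B": ["A", "D", "E"], "C": ["A", "F", "G"],
             "D": ["B"], "E": ["B"], "F": ["C"], "G": ["C"]}
states = {n: [1.0] for n in adjacency}  # every node starts with feature 1.0

aggregated = aggregate(adjacency, states)
# Hypothetical weights: a 2x1 matrix mapping the 1-d sum to a 2-d message.
W, b = [[1.0], [-1.0]], [0.0, 0.0]
messages = {n: dense_relu(v, W, b) for n, v in aggregated.items()}
print(messages["B"])  # [3.0, 0.0]
```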

3. Node Update Function:

- This function combines the aggregated message with the node’s current state, producing a new updated state.

- This new state represents the node’s updated understanding of itself and its surroundings.
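A node update can be sketched the same way. Here, as an assumption, the update concatenates the node's current state with its aggregated message and applies a linear layer with ReLU; the weights are illustrative, not learned.

```python
# Node update sketch: concatenate a node's current state with its aggregated
# message and apply a linear layer + ReLU. Weights are illustrative only.

def relu_linear(vec, weights):
    """y = relu(W x) for a weight matrix given as a list of rows."""
    return [max(0.0, sum(w * x for w, x in zip(row, vec))) for row in weights]

def update(states, messages, weights):
    """Return new states: relu(W [h_v ; m_v]) for every node v."""
    return {v: relu_linear(states[v] + messages[v], weights) for v in states}

states = {"A": [1.0], "B": [2.0]}
messages = {"A": [3.0], "B": [0.5]}
W = [[0.5, 0.5]]  # averages the node's own state and its incoming message
new_states = update(states, messages, W)
print(new_states)  # {'A': [2.0], 'B': [1.25]}
```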

4. Layers and Propagation:

- Multiple layers of message passing and node updates are stacked, allowing information to propagate across the graph.

- Each layer builds on the previous one: after k layers, a node's state depends on its k-hop neighborhood, so deeper stacks capture increasingly long-range relationships.
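To see why stacking layers matters, the sketch below uses a weight-free propagation rule (each node adds its neighbors' states to its own, an illustrative simplification of a real layer) and places a signal on node D only. After k layers, exactly the nodes within k hops of D have received it.

```python
# Sketch: stacking layers widens each node's receptive field by one hop.
# Each "layer" is h_v <- h_v + sum of neighbor states (no learned weights),
# which is enough to show how far information from one node travels.

adjacency = {"A": ["B", "C"], "B": ["A", "D", "E"], "C": ["A", "F", "G"],
             "D": ["B"], "E": ["B"], "F": ["C"], "G": ["C"]}

def propagate(states):
    return {v: states[v] + sum(states[u] for u in adjacency[v]) for v in states}

# Put a signal only on node D and record which nodes have received it.
states = {v: (1.0 if v == "D" else 0.0) for v in adjacency}
reached = []
for _ in range(3):
    states = propagate(states)
    reached.append(sorted(v for v, h in states.items() if h > 0))

for k, nodes in enumerate(reached, start=1):
    print(k, nodes)
# 1 ['B', 'D']
# 2 ['A', 'B', 'D', 'E']
# 3 ['A', 'B', 'C', 'D', 'E']
```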

5. Output Generation:

Depending on the task, the output can be:

- Node-level: Classification (e.g., predicting node category), regression (e.g., predicting node property value).

- Edge-level: Predicting edge existence or attributes.

- Graph-level: Predicting properties of the entire graph (e.g., molecule stability).
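The three kinds of output can be sketched as simple readouts over final node embeddings. The embeddings, the node-level weight vector, the dot-product edge scorer, and the mean pooling used for the graph-level vector are all illustrative choices: one common option each, not the only one.

```python
import math

# Sketch of the three readout types, given final node embeddings.
# The embeddings and weights below are made up for illustration.
embeddings = {"A": [1.0, 0.0], "B": [0.5, 0.5], "C": [0.0, 1.0]}

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

# Node-level: a linear score per node (e.g. a logit for a binary class).
w_node = [2.0, -1.0]
node_scores = {v: sum(w * x for w, x in zip(w_node, h))
               for v, h in embeddings.items()}

# Edge-level: probability that edge (u, v) exists, from a dot product.
def edge_prob(u, v):
    return sigmoid(sum(a * b for a, b in zip(embeddings[u], embeddings[v])))

# Graph-level: mean-pool all node embeddings into one graph vector.
n = len(embeddings)
graph_vector = [sum(h[i] for h in embeddings.values()) / n for i in range(2)]

print(node_scores)          # {'A': 2.0, 'B': 0.5, 'C': -1.0}
print(edge_prob("A", "C"))  # 0.5 (orthogonal embeddings, dot product 0)
print(graph_vector)         # [0.5, 0.5]
```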

TensorFlow GNN (TF-GNN) is a set of tools, modules, and Keras layers built on TensorFlow specifically for building and training GNNs on graph-structured data, where nodes represent entities and edges represent the relationships between them.

Together with core TensorFlow, TF-GNN provides a variety of functionality for working with graphs and building GNNs, including:

1: Graph Construction:

TF-GNN's `tfgnn.GraphTensor` is used to construct and manipulate graphs, including heterogeneous graphs with several node sets and edge sets, and to represent them in a format suitable for neural network processing.
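In TF-GNN, a `tfgnn.GraphTensor` stores features per node set and edge set and records each edge set's connectivity as source and target indices into node sets. The plain-Python sketch below mimics that index-based layout without importing TF-GNN; the dictionary keys are hypothetical and are not the library's API. Sorting the node names keeps the index assignment deterministic, which an unordered `set` would not guarantee.

```python
# Plain-Python sketch of an index-based graph layout similar in spirit to
# TF-GNN's GraphTensor: node data in one table, edges as two index lists.
# The dictionary structure here is hypothetical, not the TF-GNN API.

edges = [("A", "B"), ("A", "C"), ("B", "D"), ("B", "E"), ("C", "F"), ("C", "G")]

node_ids = sorted({n for edge in edges for n in edge})  # stable ordering
index = {name: i for i, name in enumerate(node_ids)}     # name -> row index

graph = {
    "node_sets": {
        "nodes": {"sizes": [len(node_ids)], "features": {"ids": node_ids}},
    },
    "edge_sets": {
        "edges": {
            "sizes": [len(edges)],
            "source": [index[u] for u, _ in edges],
            "target": [index[v] for _, v in edges],
        },
    },
}

print(graph["edge_sets"]["edges"]["source"])  # [0, 0, 1, 1, 2, 2]
print(graph["edge_sets"]["edges"]["target"])  # [1, 2, 3, 4, 5, 6]
```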

2: Graph Neural Network Layers:

TF-GNN includes general building blocks such as `tfgnn.keras.layers.SimpleConv`, and its models collection provides implementations of well-known layers such as Graph Convolutional Networks (GCN), Graph Attention Networks (in the GATv2 variant), and GraphSAGE. These layers apply neural network operations to graph-structured data.
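As an illustration of what a GCN-style layer computes, the sketch below performs one propagation step with the symmetric degree normalization used by GCNs: each contribution from a node's neighborhood (including the node itself via an added self-loop) is divided by the square root of the product of the two nodes' degrees. Weights and the activation are omitted to keep the arithmetic visible, so this is a simplified sketch, not TF-GNN's own implementation.

```python
import math

# One GCN-style propagation step on scalar features, weights and activation
# omitted:  h_v' = sum over u in N(v) plus v itself of h_u / sqrt(deg(u) * deg(v)),
# where deg counts the added self-loop.

adjacency = {"A": ["B", "C"], "B": ["A", "D", "E"], "C": ["A", "F", "G"],
             "D": ["B"], "E": ["B"], "F": ["C"], "G": ["C"]}

def gcn_step(adjacency, h):
    deg = {v: len(nbrs) + 1 for v, nbrs in adjacency.items()}  # +1 self-loop
    return {
        v: sum(h[u] / math.sqrt(deg[u] * deg[v]) for u in adjacency[v] + [v])
        for v in adjacency
    }

h = {v: 1.0 for v in adjacency}
h1 = gcn_step(adjacency, h)
print(round(h1["D"], 4))  # 0.8536  (0.5 from itself + 1/sqrt(8) from B)
```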

3: Training and Optimization:

GNNs are trained with standard TensorFlow tooling: backpropagation computes the gradients of the loss, and optimizers such as stochastic gradient descent or Adam update the parameters.
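The training idea can be shown on a toy model with a single shared weight `w`, where each node's prediction is `w` times the sum of its neighbors' features. The synthetic targets (generated with `w = 2`), the learning rate, and the hand-derived gradient are assumptions for illustration; TensorFlow would compute the gradient automatically by backpropagation.

```python
# Toy training loop: fit one shared weight w in the model
#   y_hat_v = w * (sum of neighbor features of v)
# by gradient descent on mean squared error. The gradient is derived by hand
# here; TensorFlow would obtain it automatically via backpropagation.

adjacency = {"A": ["B", "C"], "B": ["A", "D", "E"], "C": ["A", "F", "G"],
             "D": ["B"], "E": ["B"], "F": ["C"], "G": ["C"]}

x = {v: 1.0 for v in adjacency}                            # input features
agg = {v: sum(x[u] for u in adjacency[v]) for v in adjacency}
y = {v: 2.0 * agg[v] for v in adjacency}                    # synthetic targets

w, lr, n = 0.0, 0.05, len(agg)
for step in range(100):
    # dL/dw for L = mean((w * agg_v - y_v)^2)
    grad = sum(2 * (w * agg[v] - y[v]) * agg[v] for v in agg) / n
    w -= lr * grad

print(round(w, 3))  # 2.0
```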

4: Graph Representation Learning:

TF-GNN supports learning representations (embeddings) of nodes, edges, and entire graphs, which can then feed node-level, edge-level, or graph-level predictions.

5: Integration with Deep Learning Pipelines:

TF-GNN models are Keras models, so they fit into existing deep learning pipelines and can combine graph data with other types of data and models.

6: Scalability and Performance:

TensorFlow can distribute computation across multiple accelerators, and TF-GNN supports training on graphs too large to process at once by sampling subgraphs around the nodes of interest.
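A common way to train on very large graphs is to sample a small subgraph around each seed node with a fixed fan-out per hop, the approach popularized by GraphSAGE. The sketch below is a generic plain-Python version of that idea, not TF-GNN's sampler API; `sample_subgraph` and its parameters are hypothetical.

```python
import random

# Sketch of fixed-fanout neighbor sampling: starting from seed nodes, keep at
# most `fanout` randomly chosen neighbors per node for each hop, so the
# sampled subgraph stays small even when the full graph is huge.

adjacency = {"A": ["B", "C"], "B": ["A", "D", "E"], "C": ["A", "F", "G"],
             "D": ["B"], "E": ["B"], "F": ["C"], "G": ["C"]}

def sample_subgraph(adjacency, seeds, fanouts, rng):
    """Return the set of sampled directed edges (u, v) for per-hop fanouts."""
    frontier, edges = list(seeds), set()
    for fanout in fanouts:
        next_frontier = []
        for u in frontier:
            nbrs = adjacency[u]
            for v in rng.sample(nbrs, min(fanout, len(nbrs))):
                edges.add((u, v))
                next_frontier.append(v)
        frontier = next_frontier
    return edges

rng = random.Random(0)  # seeded for reproducibility
sub = sample_subgraph(adjacency, seeds=["A"], fanouts=[2, 1], rng=rng)
print(len(sub))  # at most 2 + 2 * 1 = 4 edges
```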

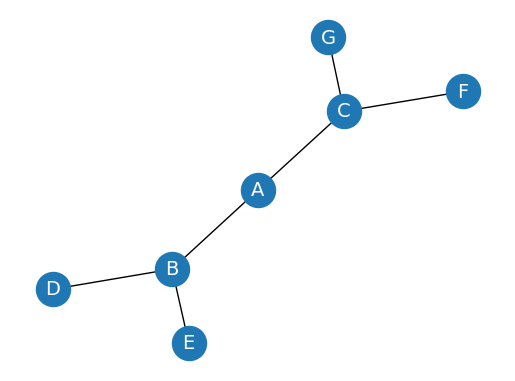

```python
import networkx as nx
import matplotlib.pyplot as plt

# Build the example graph: a small tree rooted at 'A'
G = nx.Graph()
G.add_edges_from([('A', 'B'), ('A', 'C'), ('B', 'D'), ('B', 'E'), ('C', 'F'), ('C', 'G')])

# Draw it without axes; the fixed seed makes the layout reproducible
plt.axis('off')
nx.draw_networkx(G,
                 pos=nx.spring_layout(G, seed=0),
                 node_size=600,
                 font_size=14,
                 font_color='white')
plt.show()
```

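Output: (figure: drawing of the seven-node example graph)

The same graph can also be loaded into TF-GNN and passed through a small GNN model: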

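```python
import tensorflow as tf
import tensorflow_gnn as tfgnn  # TF-GNN uses Keras 2 (tf_keras on TF 2.16+)

# Define the graph: give each node an integer index (sorted for a stable order)
edges = [('A', 'B'), ('A', 'C'), ('B', 'D'), ('B', 'E'), ('C', 'F'), ('C', 'G')]
node_names = sorted({node for edge in edges for node in edge})
index = {name: i for i, name in enumerate(node_names)}
num_nodes = len(node_names)

# Store each undirected edge in both directions so messages flow both ways
source = [index[u] for u, v in edges] + [index[v] for u, v in edges]
target = [index[v] for u, v in edges] + [index[u] for u, v in edges]

# Define the node features: a constant 1.0 per node
node_features = tf.ones([num_nodes, 1])

graph = tfgnn.GraphTensor.from_pieces(
    node_sets={'all_nodes': tfgnn.NodeSet.from_fields(
        sizes=tf.constant([num_nodes]),
        features={tfgnn.HIDDEN_STATE: node_features})},
    edge_sets={'links': tfgnn.EdgeSet.from_fields(
        sizes=tf.constant([len(source)]),
        adjacency=tfgnn.Adjacency.from_indices(
            source=('all_nodes', tf.constant(source)),
            target=('all_nodes', tf.constant(target))))})

# Create the GNN model: one round of message passing with summed
# Dense(16, relu) messages, then a per-node Dense(1) output
inputs = tf.keras.layers.Input(type_spec=graph.spec)
hidden = tfgnn.keras.layers.GraphUpdate(node_sets={
    'all_nodes': tfgnn.keras.layers.NodeSetUpdate(
        {'links': tfgnn.keras.layers.SimpleConv(
            tf.keras.layers.Dense(16, activation='relu'),
            reduce_type='sum', receiver_tag=tfgnn.TARGET)},
        tfgnn.keras.layers.NextStateFromConcat(
            tf.keras.layers.Dense(16, activation='relu')))})(inputs)
node_states = tfgnn.keras.layers.Readout(node_set_name='all_nodes')(hidden)
model = tf.keras.Model(inputs, tf.keras.layers.Dense(1)(node_states))

# Compile and train the model (toy target: reproduce each node's feature)
model.compile(optimizer='adam', loss='mse')
model.fit(tf.data.Dataset.from_tensors((graph, node_features)), epochs=5)

# Make predictions on the graph
predictions = model(graph)

# Print the predictions
print(predictions)
```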
