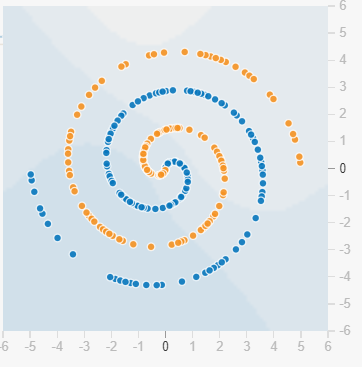

For this example, we will use one of the more complex datasets offered by the playground: the spiral dataset.

The goal here is to build an optimal neural network capable of detecting the pattern in this dataset. To find it, we evaluated several metrics: the size of the network (how many layers and neurons it has), the loss value (how far the model's predictions are from the expected results), and the number of epochs (how quickly the model learns the pattern). We want each of these to be as small as possible.

We will perform what is called hyperparameter tuning: adjusting several parameters in the playground to find which model gives the best results on these key metrics.

Some of the parameters that we adjusted for this model include:

- Activation Function

- Learning Rate

- Regularization

- Regularization Rate

- Dropout

We have also adjusted the size of the network by adding/removing neurons and hidden layers.

From our experimentation, we were able to find a good model that gives satisfactory results:

With this model, we were able to obtain a loss value of less than 0.04 in under 300 epochs, using a neural network with 4 input features and two hidden layers of 6 neurons each. This was achieved by choosing the right training parameters. The parameters that were adjusted are as follows:

Activation Function: A function applied to the output of each neuron. It adds complexity to our model, allowing it to recognize more complex patterns in our dataset. Some of these functions are simply linear, while others are more complex. In this solution, we chose the Swish function, which is non-linear and more complex than functions such as ReLU.
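To make the difference concrete, here is a minimal sketch comparing ReLU and Swish. It assumes the commonly used definition of Swish, x multiplied by sigmoid(x); whether the playground variant used here computes exactly this form is an assumption, and the sample inputs are made up for illustration.

```python
import numpy as np

def relu(x):
    # Returns x for positive inputs and 0 otherwise.
    return np.maximum(0.0, x)

def swish(x):
    # Assumed definition: x * sigmoid(x) = x / (1 + e^-x).
    return x / (1.0 + np.exp(-x))

# Illustrative inputs, not values taken from the playground.
xs = np.array([-2.0, -0.5, 0.0, 0.5, 2.0])
relu_out = relu(xs)
swish_out = swish(xs)

print("ReLU :", relu_out)
print("Swish:", swish_out)
```

Unlike ReLU, Swish is smooth and lets small negative values pass through instead of cutting them to exactly 0.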

Learning Rate: This value determines how aggressively our model changes its parameters (each weight and bias). It is important to find the proper balance for the learning rate. A value that is too high may cause the model to overshoot and miss the optimal solution, while a value that is too low may take unnecessarily long to reach the optimal parameters. In this case, we went with the default value of 0.03.
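The effect of the learning rate can be sketched on a toy problem. The example below does not use the playground: it minimizes the made-up function f(x) = x², whose gradient is 2x, with three illustrative learning rates chosen only to show the behavior.

```python
def descend(learning_rate, steps=20, start=5.0):
    # Minimize f(x) = x**2 by repeatedly stepping against its gradient, 2 * x.
    x = start
    for _ in range(steps):
        x = x - learning_rate * 2 * x
    return x

too_low = descend(0.001)   # barely moves away from the starting point
moderate = descend(0.1)    # gets close to the minimum at x = 0
too_high = descend(1.1)    # overshoots further on every step and diverges

print(too_low, moderate, too_high)
```

After the same number of steps, only the moderate rate ends up near the minimum.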

Network Size: This refers to the number of neurons and hidden layers in our model. Each increase in size increases the complexity of our model by adding more parameters for the model to adjust during training. The size of this network is 4,6,6: 4 input features followed by two hidden layers of 6 neurons each.
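As a rough illustration of how size translates into trainable parameters, the sketch below counts the weights and biases of a fully connected network. The helper `count_parameters` is hypothetical, and the single output neuron appended to the 4,6,6 network is an assumption.

```python
def count_parameters(layer_sizes):
    # Each layer has (inputs * neurons) weights plus one bias per neuron.
    total = 0
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        total += n_in * n_out + n_out
    return total

# 4 input features, two hidden layers of 6 neurons,
# and an assumed single output neuron.
spiral_params = count_parameters([4, 6, 6, 1])
print(spiral_params)
```

Adding one more hidden layer of 6 neurons would add another 42 parameters (36 weights and 6 biases) for the model to adjust.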

Regularization and Regularization Rate: To prevent overfitting, we use this option to arrive at a model that performs well in general rather than on just one specific dataset. We added L2 regularization with a rate of 0.001 in this solution.
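One common way to write L2 regularization is to add the sum of squared weights, scaled by the regularization rate, to the loss. The sketch below follows that form; the playground's exact formula (for example, any constant factor) is not confirmed here, and the weights and data loss are made-up values.

```python
import numpy as np

def l2_penalty(weights, rate):
    # Sum of squared weights across all layers, scaled by the regularization rate.
    return rate * sum(float(np.sum(w ** 2)) for w in weights)

def regularized_loss(data_loss, weights, rate=0.001):
    # Total loss = error on the data + penalty for large weights.
    return data_loss + l2_penalty(weights, rate)

# Illustrative weight matrices for two layers.
weights = [np.array([[0.5, -1.0], [2.0, 0.0]]), np.array([[1.5], [-0.5]])]
total = regularized_loss(0.04, weights)
print(total)
```

Because large weights raise the total loss, training is nudged toward smaller weights, which tends to give smoother decision boundaries.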

(Warning: This section will include some math)

In order to understand how the training process happened, we have to go deeper into the fundamentals of neural networks and the underlying mathematical concepts involved.

To make things simpler and shorter, we will create another model on the playground but for a simpler dataset. Let’s go with the 4 squares dataset.

We have built a very simple neural network with 2 hidden layers containing 3 neurons each. We used the default 2 input features and the other parameters were kept at their default settings.

In neural networks, we can treat each neuron as a function that takes multiple inputs and returns one output. We can represent this function with the basic equation below:

$$Z_j = \sum_i w_{ij} a_i + b_j$$

Here $Z_j$, the output of neuron j, is the sum over every neuron i connected to neuron j of that neuron's output $a_i$ multiplied by the connection weight $w_{ij}$, plus the bias $b_j$.

If you know basic algebra, you can see that if we break down this equation, we get the familiar linear function:

$$y = mx + b$$

However, if you look at the datasets from the playground, you can see that most of them are non-linear. This is where our Activation Function comes in. Generally, these activation functions are introduced to our neurons to address this non-linearity and make our network capable of learning more complex patterns.

For simplicity, we can start with one of the most basic activation functions, Rectified Linear Unit or “ReLU”. This activation function is represented by:

$$f(x) = \max(0, x)$$

Based on the equation, this function returns the maximum of 0 and x. This simply means that if the value of x is greater than 0 (positive), it returns x. Otherwise, it returns 0.

Going back to the basic function for the neurons, we can apply this activation function to the weighted sum $Z_j$ to get the actual output of neuron j:

$$a_j = \max(0, Z_j)$$
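A single neuron with ReLU can be sketched in a few lines. The function name `neuron_output` and all of the numbers below are made up for illustration; they are not taken from the playground model.

```python
import numpy as np

def neuron_output(inputs, weights, bias):
    # Weighted sum of the incoming values plus the bias.
    z = float(np.dot(weights, inputs) + bias)
    # ReLU keeps positive sums and replaces negative ones with 0.
    return max(0.0, z)

inputs = np.array([1.0, -2.0, 0.5])
weights = np.array([0.4, 0.1, -0.6])

active = neuron_output(inputs, weights, bias=0.2)     # weighted sum is positive
inactive = neuron_output(inputs, weights, bias=-0.5)  # weighted sum is negative

print(active, inactive)
```

With the same inputs and weights, changing only the bias is enough to switch the neuron between passing its sum through and outputting 0.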

We repeat this process for each and every neuron in our network until we get to the last layer which will determine the output of our network.
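Repeating the neuron computation layer by layer is usually called a forward pass. The sketch below assumes a network shaped like the simple one described earlier (2 inputs, two hidden layers of 3 neurons) with randomly initialized weights, plus a single output neuron that is left linear. The output layer's size and activation are assumptions, not the playground's confirmed behavior.

```python
import numpy as np

def relu(z):
    return np.maximum(0.0, z)

def forward(x, layers):
    # layers is a list of (weight_matrix, bias_vector) pairs, one per layer.
    a = x
    for i, (W, b) in enumerate(layers):
        z = W @ a + b  # weighted sums for every neuron in this layer
        # ReLU on hidden layers; the last layer stays linear (an assumption).
        a = relu(z) if i < len(layers) - 1 else z
    return a

rng = np.random.default_rng(0)
sizes = [2, 3, 3, 1]
layers = [(rng.normal(size=(n_out, n_in)), np.zeros(n_out))
          for n_in, n_out in zip(sizes[:-1], sizes[1:])]

output = forward(np.array([0.5, -1.0]), layers)
print(output)
```

Each layer's outputs become the next layer's inputs, so the whole network is just the single-neuron formula applied repeatedly.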

During the training process of our model, these weights and biases are adjusted to come up with an ideal solution capable of accurately predicting output that matches our dataset.

However, these weights and biases are not simply chosen at random by our machine. For our training to be effective, we must introduce a way to guide our model toward the right solution. First, we need a mechanism that rewards or punishes our model depending on whether it predicted correctly or incorrectly on the data we used to train it. To implement this mechanism, we introduce something called a Cost (or Loss) Function. These functions measure how much error our model produces compared to the expected results. As our training progresses, we should expect the value of this function to decrease over the iterations.
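As one example of a loss function, the sketch below uses mean squared error. This is a common choice, not necessarily the exact function the playground uses, and the predictions are made-up values standing in for an untrained and a trained model.

```python
import numpy as np

def mean_squared_error(predictions, targets):
    # Average of the squared differences between predictions and expected values.
    predictions = np.asarray(predictions, dtype=float)
    targets = np.asarray(targets, dtype=float)
    return float(np.mean((predictions - targets) ** 2))

targets = [1, -1, 1, -1]
early = mean_squared_error([0.1, 0.2, -0.3, 0.0], targets)    # untrained guesses
late = mean_squared_error([0.9, -0.8, 0.95, -0.9], targets)   # after training

print(early, late)
```

The closer the predictions get to the targets, the smaller the loss becomes.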

Imagine you are walking on a terrain full of hills and valleys, and your goal is to find the lowest point in this terrain while blindfolded, with only your feet to guide you. Which direction you should go and how big a step you should take are determined by some mechanisms. In the context of neural networks, this is where Gradient Descent and Backpropagation come in.

To decrease the loss/cost values, we implement what is called Gradient Descent. This adjusts each weight and bias in our network according to the loss/cost function at each iteration. Think of this as our legs choosing a direction and taking steps to reach a lower point in our terrain.

How much Gradient Descent should adjust each weight and bias is determined through Backpropagation, which works backward from the loss to measure how much each parameter contributed to the error. Going back to our imaginary terrain, we realize that we can actually use our feet to get a feel of the ground before taking a step. We can feel whether the step is going to take us lower or higher and whether we can take a bigger step to reach our lowest point faster.
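The two ideas can be sketched together on a deliberately tiny network: one hidden ReLU neuron feeding one linear output neuron, trained on a single made-up sample. The starting values, learning rate, and squared-error loss are all assumptions chosen for illustration.

```python
def train_step(params, x, target, learning_rate):
    w1, b1, w2, b2 = params

    # Forward pass: hidden ReLU neuron, then a linear output neuron.
    z1 = w1 * x + b1
    h = max(0.0, z1)
    y = w2 * h + b2
    loss = (y - target) ** 2

    # Backpropagation: apply the chain rule from the loss back to each parameter.
    dy = 2.0 * (y - target)
    dw2, db2 = dy * h, dy
    dz1 = dy * w2 * (1.0 if z1 > 0 else 0.0)  # ReLU passes gradient only when active
    dw1, db1 = dz1 * x, dz1

    # Gradient descent: step each parameter against its gradient.
    new_params = (w1 - learning_rate * dw1, b1 - learning_rate * db1,
                  w2 - learning_rate * dw2, b2 - learning_rate * db2)
    return new_params, loss

params = (0.5, 0.1, 0.5, 0.0)
losses = []
for _ in range(20):
    params, loss = train_step(params, x=1.0, target=2.0, learning_rate=0.1)
    losses.append(loss)

print(losses[0], losses[-1])
```

Backpropagation supplies the gradients (the feel of the slope under our feet), and gradient descent uses them to take the actual step.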

Both of these mechanisms come with their own rabbit holes, which we will not delve into here. This only serves to give you a basic idea of how the training works.

Neural networks are some of the most powerful implementations of machine learning. They are capable of learning a wide variety of datasets. To understand how they work, we started from the basics with a much simpler model and tried to gain a basic understanding of the underlying mathematical concepts through simple examples.

For the next parts of this series, we will go deeper into neural networks with more complex datasets. Eventually, we will create a model with a real-life practical application, specifically computer vision.
