Google’s TensorFlow is a second-generation machine learning system and a popular open source library, written in C++, Python and CUDA, that expresses computations as data flow graphs. Developed by the Google Brain Team, it is a math library used for machine learning applications such as neural networks.

The data flow graphs consist of mathematical operations (the nodes) and the multi-dimensional data arrays, called ‘tensors’, that flow between them (the edges). This flexible architecture lets you deploy computations to one or more Central Processing Units (CPUs) or Graphics Processing Units (GPUs) in a desktop, server, or mobile device without rewriting code.

This open source library has improved steadily over its past releases. Google’s latest release, TensorFlow 1.5, was made public with several new features and improvements in speed and ease of execution. Here are the major changes in this release:

Created by NVIDIA, CUDA (Compute Unified Device Architecture) is a parallel computing platform with its own application programming interface (API). It lets software use CUDA-enabled GPUs for general-purpose processing, so that large data sets and complex models can be processed in parallel.

Google’s TensorFlow team now builds the prebuilt binaries against CUDA 9 and cuDNN 7 (CUDA Deep Neural Network library), which makes installation more convenient. The Linux binaries are now built in Ubuntu 16 containers, which may introduce incompatibility issues on Ubuntu 14.

Eager Execution:

In earlier versions of TensorFlow, one had to create a computational graph with placeholders and variables, and then create a session to run the model.
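The two-step pattern described above (build a graph, then run it in a session) can be sketched without TensorFlow at all. The following is a toy model in plain Python, not TensorFlow's API: `Node`, `Placeholder`, and `Session` are hypothetical stand-ins chosen only to mirror the vocabulary of the paragraph above.

```python
# Toy illustration of deferred (graph-then-session) execution.
# Node, Placeholder, and Session are hypothetical names, not TensorFlow classes.
import operator


class Node:
    """A graph node: an operation plus the nodes that feed it."""

    def __init__(self, op=None, inputs=()):
        self.op = op
        self.inputs = tuple(inputs)

    def __add__(self, other):
        # Building a new node records the operation; nothing is computed yet.
        return Node(operator.add, (self, other))

    def __mul__(self, other):
        return Node(operator.mul, (self, other))


class Placeholder(Node):
    """A node that has no value until one is fed at run time."""


class Session:
    """Evaluates a node by recursively evaluating its inputs."""

    def run(self, node, feed_dict):
        if isinstance(node, Placeholder):
            return feed_dict[node]
        args = [self.run(i, feed_dict) for i in node.inputs]
        return node.op(*args)


# Step 1: build the graph. No arithmetic happens here.
x = Placeholder()
y = Placeholder()
z = (x + y) * x

# Step 2: run the graph in a session, feeding concrete values.
result = Session().run(z, feed_dict={x: 3.0, y: 4.0})
print(result)  # 21.0
```

Note that `z` is only a description of the computation until `run` is called, which is why errors in such a design tend to surface late, at session time.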

Eager Execution removes this two-step pattern: operations run immediately and return concrete values, much as they do in NumPy. This makes TensorFlow a lot less bulky and a lot easier to use.

This also helps developers with:

- Faster debugging, with immediate runtime errors and integration with Python tools.

- Dynamic models, whose control flow is written with ordinary, easy-to-use Python statements.

- Strong support for custom and higher-order gradients.
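To make the points above concrete, here is a minimal sketch of eager-style computation with gradients, written in plain Python. It is not TensorFlow code: `Var`, `backward`, and `power` are hypothetical names, and the sketch covers first-order gradients only. It shows values being available immediately and an ordinary Python loop deciding the shape of the computation at run time.

```python
# Toy eager-style automatic differentiation (reverse mode).
# Var, backward, and power are hypothetical names, not TensorFlow APIs.


class Var:
    """A value that remembers how it was computed."""

    def __init__(self, value, parents=()):
        self.value = value
        self.parents = parents  # pairs of (parent Var, local derivative)
        self.grad = 0.0

    def __add__(self, other):
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other):
        return Var(self.value * other.value,
                   ((self, other.value), (other, self.value)))


def backward(out):
    """Accumulate d(out)/d(node) into .grad for every node reachable from out."""
    order, seen = [], set()

    def visit(v):
        if id(v) in seen:
            return
        seen.add(id(v))
        for parent, _ in v.parents:
            visit(parent)
        order.append(v)  # post-order: parents come before their consumers

    visit(out)
    out.grad = 1.0
    # Walk consumers before parents so each grad is complete when it is used.
    for v in reversed(order):
        for parent, local in v.parents:
            parent.grad += local * v.grad


def power(x, n):
    """x**n via a plain Python loop; the loop count is decided at run time."""
    result = Var(1.0)
    for _ in range(n):
        result = result * x
    return result


x = Var(2.0)
y = power(x, 3)
print(y.value)  # 8.0, available immediately, no session needed
backward(y)
print(x.grad)   # 12.0, which equals 3 * 2.0**2
```

Because every intermediate value exists as soon as its line runs, a debugger or a `print` call can inspect it directly.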

TensorFlow Lite:

TensorFlow Lite is included in TensorFlow 1.5 to support mobile and embedded devices. You can train a model, save it in the .tflite format, and execute it on the mobile device with low latency. Inference runs on the device itself, so no training happens there and the data does not need to be uploaded to the cloud.

If an image has to be classified, the classification runs on the mobile phone and the result is returned right there.

TensorFlow Lite was designed from the ground up for running deep neural networks on mobile platforms. To understand how machine learning can work on a mobile platform and how it can be implemented productively, go here.

TensorFlow Lite deployment will include:

- Java API: a convenience wrapper around the C++ API on Android.

- C++ API: loads the TensorFlow Lite model file (.tflite) and invokes the Interpreter. The same library is available on both iOS and Android.

- Interpreter: executes the model using a set of kernels. It supports selective kernel loading: without kernels it is only about 100 KB, and about 300 KB with all the kernels loaded. This is a significant reduction from the 1.5 MB required by TensorFlow Mobile.
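The idea of an interpreter that loads only the kernels a model needs can be sketched in a few lines of plain Python. This is an illustration of the concept only: `KERNEL_LIBRARY`, `ToyInterpreter`, and the list-of-tuples model format are all hypothetical and bear no relation to the real TensorFlow Lite API or file format.

```python
# Toy interpreter with selective kernel loading.
# KERNEL_LIBRARY, ToyInterpreter, and the model format are hypothetical.
import math

# Every kernel the "library" could provide: (values, argument) -> values.
KERNEL_LIBRARY = {
    "ADD": lambda values, arg: [v + arg for v in values],
    "MUL": lambda values, arg: [v * arg for v in values],
    "RELU": lambda values, arg: [max(v, 0.0) for v in values],
    "TANH": lambda values, arg: [math.tanh(v) for v in values],
}


class ToyInterpreter:
    def __init__(self, model):
        self.model = model
        # Load only the kernels this particular model refers to.
        needed = {op for op, _ in model}
        self.kernels = {op: KERNEL_LIBRARY[op] for op in needed}

    def invoke(self, inputs):
        values = list(inputs)
        for op, arg in self.model:
            values = self.kernels[op](values, arg)
        return values


# A "model" is just an ordered list of (operation, argument) steps here.
model = [("MUL", 2.0), ("ADD", -3.0), ("RELU", None)]
interpreter = ToyInterpreter(model)

print(sorted(interpreter.kernels))          # ['ADD', 'MUL', 'RELU']
print(interpreter.invoke([1.0, 2.0, 0.5]))  # [0.0, 1.0, 0.0]
```

The `TANH` kernel is never loaded for this model, which is the same trade-off the size figures above describe: a smaller footprint when fewer kernels are needed.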

Enhanced GPU updates:

Windows and Linux users with GPUs now get prebuilt binaries with CUDA 9 and cuDNN 7 support. NVIDIA’s CUDA 9.1 includes new algorithms and optimizations that speed up AI and HPC applications on Volta GPUs, along with compiler optimizations, support for new developer tools, and bug fixes. cuDNN offers the following:

- Forward and backward propagation paths for many layer types, such as pooling, batch normalisation, dropout, ReLU (Rectified Linear Unit), Sigmoid, softmax and Tanh.

- Forward and backward convolution routines, including cross-correlation, designed for convolutional neural nets, plus support for LSTM Recurrent Neural Networks (RNN).

- Arbitrary dimension ordering, striding, and sub-regions for 4D tensors, which allow easy integration into any neural network implementation.

- A context-based API that permits easy multi-threading.

- Support for Windows, MacOS and Linux systems with Pascal, Volta, Kepler, Maxwell, Tegra K1, Tegra X1 and Tegra X2 GPUs.
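The point about arbitrary dimension ordering and striding for 4D tensors comes down to simple arithmetic: an element's position in a flat buffer is the dot product of its index with a per-dimension stride. The sketch below shows this in plain Python for two common layouts. The helper names `packed_strides` and `offset` are illustrative only and are not cuDNN functions.

```python
# Strided indexing into a flat buffer for a 4D tensor.
# packed_strides and offset are illustrative helpers, not cuDNN functions.


def packed_strides(dims):
    """Strides (in elements) for a densely packed layout, last dim fastest."""
    strides = [1] * len(dims)
    for i in range(len(dims) - 2, -1, -1):
        strides[i] = strides[i + 1] * dims[i + 1]
    return strides


def offset(index, strides):
    """Flat buffer position of a multi-dimensional index."""
    return sum(i * s for i, s in zip(index, strides))


N, C, H, W = 2, 3, 4, 5  # batch, channels, height, width

# NCHW layout: memory order matches the logical (n, c, h, w) order.
nchw = packed_strides([N, C, H, W])

# NHWC layout: memory order is (n, h, w, c), but the strides are still
# listed in logical (n, c, h, w) order so the same index tuple works.
s_n, s_h, s_w, s_c = packed_strides([N, H, W, C])
nhwc = [s_n, s_c, s_h, s_w]

print(nchw)  # [60, 20, 5, 1]
print(nhwc)  # [60, 1, 15, 3]

idx = (1, 0, 2, 3)  # (n, c, h, w)
print(offset(idx, nchw))  # 73
print(offset(idx, nhwc))  # 99
```

A sub-region works the same way: it keeps the parent tensor's strides and simply starts from the offset of its first element.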

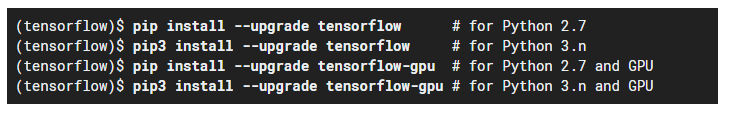

To get TensorFlow 1.5 on Windows, you can use the standard pip installation (or pip3 if you use Python 3).

In Linux, do it as follows:
