When loading data, it is always important to load the data with performance and scalability in mind.

For lakehouse tables to return queries quickly and to scale it is essential to load your lakehouse tables with partitions.

What I am going to show you in my blog post today is how to load data into a Lakehouse table where the table will be automatically partitioned by Year/Month/Day.

NOTE: To partition the table, I always need to find a column which has got the Date or Date Time which describes the transaction of the table.

Below are the advantages of creating Lakehouse tables with partitions (which I got from Bing Co-Pilot)

Certainly! Partitioning Lakehouse tables offers several advantages:

- Improved Query Performance:

- Partitioning allows you to divide large tables into smaller, manageable partitions based on specific criteria (e.g., date ranges, regions, or categories).

- Queries can then target specific partitions, reducing the amount of data scanned and improving query performance.

- Efficient Data Loading:

- When loading data into a partitioned table, you only need to update the relevant partition(s) rather than the entire table.

- This reduces the time and resources required for data ingestion.

- Easy Roll-in and Roll-out of Data:

- Adding new data to a partitioned table is straightforward. You can easily incorporate new ranges or categories as additional partitions.

- Similarly, removing old or obsolete data involves dropping specific partitions.

- Easier Administration of Large Tables:

- Managing large tables becomes more manageable with partitioning.

- Maintenance tasks like backups, indexing, and statistics can be performed on individual partitions.

- Flexible Index Placement:

- You can create indexes on specific partitions, optimizing query performance for specific subsets of data.

- This flexibility allows you to fine-tune indexing strategies.

- Avoiding File Listing Operations:

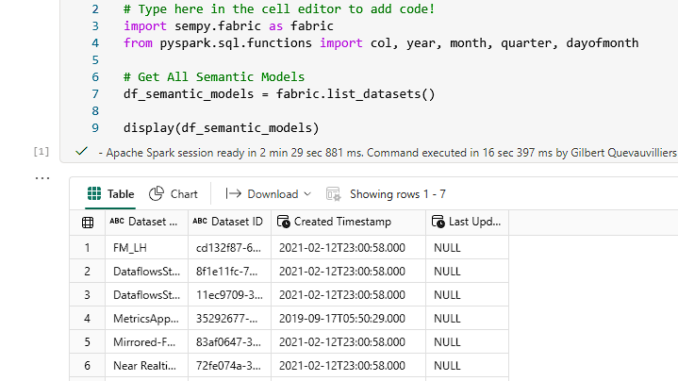

In my example I am going to be querying the Fabric Datasets.

In this dataset that gets returned it has got a column called Created Timestamp. This is the column I am going to use to partition my table with.

The next code cell is where I am going to create my new columns which will be used to partition the table.

NOTE: I have given my Partitioned columns a suffix of “_p” so that I always know that these columns are used for partitioning. I also know when I am querying this table to use these columns to assist with query performance.

# Create Spark dataframe

sparkDF=spark.createDataFrame(df_semantic_models)

# Create the Columns to Partition By

sparkDF = sparkDF.withColumn('Year_p', year(col("Created Timestamp")))

sparkDF = sparkDF.withColumn('Month_p', month(col("Created Timestamp")))

sparkDF = sparkDF.withColumn('Day_p', dayofmonth(col("Created Timestamp")))

display(sparkDF)

As shown in the output below I can now see my columns created.

One additional step I did was to remove the spaces from any of the column names so that it will load successfully into the Delta table.

The final step is to now load into the Lakehouse table where I am specifying the partition columns to be used.

# Create Table with partitions using columns

# Write Data to Table

sparkDF.write.mode("append").format("delta").partitionBy("Year_p","Month_p","Day_p").save("Tables/Blog_PartitionBy")

A quick way for me to validate that the table is partitioned was to go into the Lakehouse table, then right click and select View Files

I could then see the folders created, which match my partitioning from my notebook.

In this blog post I have shown you how to create a Lakehouse table with partitions.

If you have any questions or comments, please let me know.

I plan on creating another blog post in future where I will partition files that are being written to the Lakehouse files.

Be the first to comment