Hey there, fellow data enthusiasts! Are you ready to harness the power of BERT for sentiment analysis? Well, you’ve come to the right place. I’ve crafted a walkthrough to help you build a sentiment classifier using the mighty BERT, TensorFlow, and the IMDB movie reviews dataset. So grab your favorite beverage, and let’s dive into the world of Natural Language Processing (NLP) together.

Decoding BERT: Your AI Sidekick for Understanding Human Language

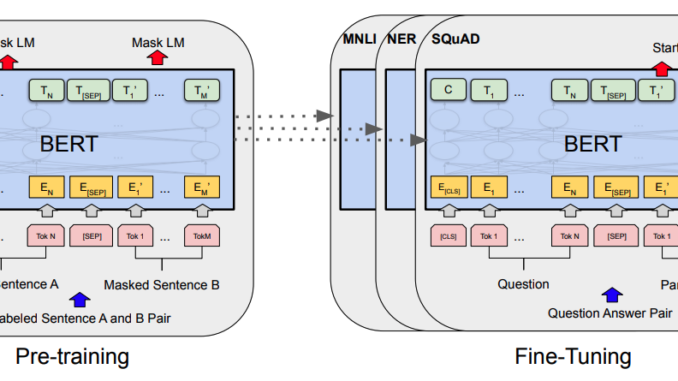

Let’s unwrap the mystery that is BERT. BERT, or Bidirectional Encoder Representations from Transformers, is a groundbreaking model that Google introduced to the NLP landscape in 2018. It’s designed to capture the nuances of human language by reading the context of each word from both directions, left and right. Picture BERT as a highly attentive reader that takes in every word of a sentence at once. On its own, pre-trained BERT produces context-aware representations of text; fine-tuning it on labeled examples is what teaches it to recognize sentiment.
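To make "both directions" concrete, here’s a toy sketch of scaled dot-product self-attention, the mechanism inside BERT’s Transformer layers. It is not BERT’s actual implementation: the three 2-dimensional vectors are made up, each vector serves directly as its own query and key (real BERT applies learned projections and uses many attention heads), and the `causal` flag is a hypothetical switch that mimics a left-to-right model such as GPT by hiding tokens to the right.

```python
import math

def softmax(xs):
    """Numerically stable softmax; -inf entries get probability 0."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(vectors, causal=False):
    """Scaled dot-product self-attention weights for a list of token vectors.

    For simplicity each vector is used directly as its own query and key
    (real BERT first applies learned projections and uses many heads).
    causal=False: every token sees all tokens (BERT-style, both directions).
    causal=True:  tokens to the right are masked out (GPT-style, left to right).
    """
    scale = math.sqrt(len(vectors[0]))
    weights = []
    for i, q in enumerate(vectors):
        scores = []
        for j, k in enumerate(vectors):
            if causal and j > i:
                scores.append(float("-inf"))  # hide future tokens
            else:
                scores.append(sum(a * b for a, b in zip(q, k)) / scale)
        weights.append(softmax(scores))
    return weights

vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy embeddings for 3 tokens
bidirectional = attention_weights(vectors)
left_to_right = attention_weights(vectors, causal=True)
print(left_to_right[0])                         # [1.0, 0.0, 0.0]: first token sees only itself
print([round(w, 2) for w in bidirectional[0]])  # [0.4, 0.2, 0.4]: it also sees tokens 2 and 3
```

In the bidirectional case, the first word already "knows about" the words after it, which is exactly what a left-to-right model cannot do.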

BERT comes in two flavors: BERT-Base, a lean yet powerful version (12 Transformer layers, a hidden size of 768, about 110 million parameters), and BERT-Large, the heavyweight champion (24 layers, a hidden size of 1,024, about 340 million parameters). Check out this cool visual from the creators of BERT to get a sense of how it works:
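Where do those parameter counts come from? Here’s a back-of-the-envelope calculation, assuming the `bert-base-uncased` vocabulary of 30,522 WordPiece tokens, 512 positions, and 2 segment types, and counting the encoder plus its pooler but not a classification head. The function `bert_param_count` is a hypothetical helper written for this illustration.

```python
def bert_param_count(hidden, layers, intermediate, vocab=30522, max_positions=512, segment_types=2):
    """Count the weights and biases in a BERT encoder plus its pooler.

    Assumes the standard layout: token/position/segment embeddings followed
    by LayerNorm, then `layers` identical Transformer blocks, then a dense
    pooler applied to the [CLS] position.
    """
    layer_norm = 2 * hidden  # scale and shift vectors
    embeddings = (vocab + max_positions + segment_types) * hidden + layer_norm
    attention = 4 * (hidden * hidden + hidden)  # query, key, value, output projections
    feed_forward = (hidden * intermediate + intermediate) + (intermediate * hidden + hidden)
    per_layer = attention + layer_norm + feed_forward + layer_norm
    pooler = hidden * hidden + hidden
    return embeddings + layers * per_layer + pooler

base = bert_param_count(hidden=768, layers=12, intermediate=3072)
large = bert_param_count(hidden=1024, layers=24, intermediate=4096)
print(f"BERT-Base:  {base:,}")   # BERT-Base:  109,482,240
print(f"BERT-Large: {large:,}")  # BERT-Large: 335,141,888
```

These land in the same ballpark as the rounded 110M and 340M figures from the paper; exact totals depend on the vocabulary and on which parts you count. Note that the number of attention heads doesn’t appear at all: splitting the hidden size across more heads changes how the projections are used, not how many weights they have.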

Figure 2. Peek into the BERT network (Image from the BERT paper)

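While OpenAI’s GPT-3 might have stolen some of the limelight, BERT remains a go-to choice for tasks like classification: its pre-trained weights are freely available to download and fine-tune, and it performs strongly on language-understanding benchmarks. Here’s a snapshot comparing BERT with two earlier models, OpenAI GPT and ELMo: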

Figure 3. BERT vs. GPT vs. ELMo (Image from the BERT paper)

Embracing the Hugging Face: The NLP Swiss Army Knife

When it comes to implementing BERT, the Hugging Face Transformers library is your best friend. It’s a treasure trove of pre-trained models ready to tackle a wide array of text-based tasks. And the best part? It offers TensorFlow versions of many of its models, such as TFBertForSequenceClassification, making it a breeze to integrate them into your projects.

Crafting a Sentiment Analysis Model with BERT and TensorFlow

Let’s get into the nuts and bolts of building our sentiment analysis model. We’ll start by installing the Hugging Face Transformers library to get our hands on the pre-trained BERT model. Then, we’ll prepare our dataset, which involves downloading the IMDB Reviews Dataset, cleaning it up, and splitting it into training and test sets.
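"Cleaning it up" mostly means dealing with leftover HTML: IMDB reviews contain line breaks encoded as `<br />` tags. Here’s a minimal sketch of the cleaning and splitting steps in plain Python. The regex-based cleaner, the 80/20 split, and the seed are assumptions for illustration, not necessarily the choices made in the accompanying notebook.

```python
import random
import re

_TAG = re.compile(r"<[^>]+>")  # HTML tags such as <br />
_SPACES = re.compile(r"\s+")

def clean_review(text):
    """Replace HTML tags with spaces and collapse runs of whitespace."""
    text = _TAG.sub(" ", text)
    return _SPACES.sub(" ", text).strip()

def train_test_split(examples, test_fraction=0.2, seed=42):
    """Shuffle a copy of `examples` and hold out the last `test_fraction` as test data."""
    items = list(examples)
    random.Random(seed).shuffle(items)  # fixed seed keeps the split reproducible
    cut = len(items) - int(len(items) * test_fraction)
    return items[:cut], items[cut:]

reviews = [("A great film.<br /><br />Loved it!", 1), ("Dull   and   too long.", 0)] * 5
cleaned = [(clean_review(text), label) for text, label in reviews]
train, test = train_test_split(cleaned, test_fraction=0.2)
print(cleaned[0][0])          # A great film. Loved it!
print(len(train), len(test))  # 8 2
```

Shuffling before splitting matters here because the raw files are grouped by label; slicing an unshuffled list could put all the negative reviews on one side of the split.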

Once we have our data in order, we’ll craft input sequences that BERT can understand using the Transformers library’s handy utilities. With our sequences ready, we’ll configure and fine-tune the BERT model, teaching it to differentiate between positive and negative movie reviews.
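What does "an input sequence BERT can understand" look like? For a single review, BERT expects three aligned lists: `input_ids` (token IDs wrapped in `[CLS]` ... `[SEP]` and padded to a fixed length), `attention_mask` (1 for real tokens, 0 for padding), and `token_type_ids` (all 0 when there is only one segment). The Hugging Face tokenizer builds these for you; the sketch below, with a hypothetical `encode_for_bert` helper, just shows the idea by hand. The special-token IDs are the ones assumed from the `bert-base-uncased` vocabulary, and the review token IDs are made up.

```python
CLS_ID, SEP_ID, PAD_ID = 101, 102, 0  # assumed bert-base-uncased special-token IDs

def encode_for_bert(token_ids, max_length=8):
    """Turn one tokenized review into BERT's three input lists.

    Truncates so [CLS] + tokens + [SEP] fits in max_length, then pads with [PAD].
    """
    body = token_ids[: max_length - 2]      # leave room for [CLS] and [SEP]
    input_ids = [CLS_ID] + body + [SEP_ID]
    attention_mask = [1] * len(input_ids)   # 1 = real token
    padding = max_length - len(input_ids)
    input_ids += [PAD_ID] * padding
    attention_mask += [0] * padding         # 0 = padding, ignored by attention
    token_type_ids = [0] * max_length       # single segment, so all zeros
    return {
        "input_ids": input_ids,
        "attention_mask": attention_mask,
        "token_type_ids": token_type_ids,
    }

encoded = encode_for_bert([7, 8, 9, 10], max_length=8)  # made-up token IDs
print(encoded["input_ids"])       # [101, 7, 8, 9, 10, 102, 0, 0]
print(encoded["attention_mask"])  # [1, 1, 1, 1, 1, 1, 0, 0]
```

A `max_length` of 8 keeps the printout short; real reviews are usually encoded with a much longer limit, up to BERT’s maximum of 512 tokens.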

A Sneak Peek at Our BERT Model for Sentiment Classification

Here’s a glimpse of what our BERT model looks like under the hood:

Figure 4. Inside our BERT sentiment classifier
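Since the diagram isn’t reproduced here, here’s one common way such a classifier is wired, stated as an assumption: BERT’s pooled `[CLS]` vector feeds a single dense layer with one output (logit) per class, and a softmax turns the logits into probabilities. (Hugging Face’s TFBertForSequenceClassification follows this pattern, with dropout before the dense layer.) The sketch below uses a made-up 3-dimensional pooled vector and made-up weights instead of BERT’s 768 dimensions and learned values.

```python
import math

def classify(pooled, weights, bias, labels=("negative", "positive")):
    """Dense layer on the pooled [CLS] vector, then softmax over the classes.

    pooled:  list of floats (768 values in BERT-Base; tiny here for illustration)
    weights: one row of floats per label
    bias:    one float per label
    """
    logits = [sum(w * h for w, h in zip(row, pooled)) + b for row, b in zip(weights, bias)]
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]  # subtract max for numerical stability
    total = sum(exps)
    probs = [e / total for e in exps]
    best = max(range(len(probs)), key=lambda i: probs[i])
    return labels[best], probs

pooled = [0.5, -1.0, 2.0]     # stand-in for BERT's pooled [CLS] vector
weights = [[0.1, 0.2, -0.3],  # row for "negative"
           [-0.1, 0.0, 0.4]]  # row for "positive"
bias = [0.0, 0.0]
label, probs = classify(pooled, weights, bias)
print(label, [round(p, 3) for p in probs])  # positive [0.182, 0.818]
```

During fine-tuning, both this small head and BERT’s own weights are updated, which is why the whole model adapts to the sentiment task rather than just the last layer.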

IMDB Dataset: Your Training Ground for Sentiment Analysis

The IMDB Reviews Dataset is a collection of movie reviews used for binary sentiment classification. It’s perfect for training models to detect whether a review is a thumbs-up or a thumbs-down. The dataset comes with 25,000 labeled training reviews, 25,000 labeled test reviews, and 50,000 additional unlabeled reviews; we’ll use only the labeled training set for our model.
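If you download and extract the original archive, the reviews arrive as one text file per review, sorted into `pos` and `neg` folders. Here’s a minimal loader sketch, assuming the extracted `aclImdb/<split>/pos/*.txt` and `aclImdb/<split>/neg/*.txt` layout; `load_imdb_split` is a hypothetical helper, and the notebook may well load the data through TensorFlow utilities instead. To keep the example runnable anywhere, it builds a tiny fake copy of the folder structure first.

```python
import tempfile
from pathlib import Path

def load_imdb_split(root, split="train"):
    """Read labeled reviews from an extracted aclImdb/ folder.

    Assumes the layout <root>/<split>/pos/*.txt and <root>/<split>/neg/*.txt;
    the unlabeled train/unsup/ folder is ignored.
    Returns (text, label) pairs with 1 = positive and 0 = negative.
    """
    examples = []
    for label_name, label in (("neg", 0), ("pos", 1)):
        for path in sorted((Path(root) / split / label_name).glob("*.txt")):
            examples.append((path.read_text(encoding="utf-8"), label))
    return examples

with tempfile.TemporaryDirectory() as tmp:
    # Fake mini-dataset that mimics the real folder layout.
    for name, filename, text in (("pos", "0_9.txt", "Loved it."), ("neg", "0_2.txt", "Fell asleep.")):
        folder = Path(tmp) / "train" / name
        folder.mkdir(parents=True)
        (folder / filename).write_text(text, encoding="utf-8")
    demo = load_imdb_split(tmp, "train")

print(demo)  # [('Fell asleep.', 0), ('Loved it.', 1)]
```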

From Reviews to Input Sequences: Prepping Data for BERT

After getting the IMDB dataset, we’ll transform the reviews into a format that BERT can digest. We’ll wrap each review in an InputExample (a small container class that holds the review text and its label), then convert the text into token IDs with BERT’s tokenizer.
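Under the hood, BERT’s tokenizer splits each word into WordPiece sub-words using a greedy longest-match-first search: take the longest prefix that exists in the vocabulary, then repeat on the remainder, marking continuation pieces with `##`. Here’s a simplified sketch of that idea. The seven-entry vocabulary is invented for illustration, so these splits are not what the real tokenizer (with its roughly 30,000-entry vocabulary, punctuation splitting, and accent handling) would produce.

```python
def wordpiece(word, vocab, unk="[UNK]"):
    """Greedy longest-match-first WordPiece split of a single lowercase word.

    Pieces after the first carry the '##' continuation prefix, as in BERT.
    If any part of the word cannot be matched, the whole word becomes [UNK].
    """
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        match = None
        while start < end:  # shrink the candidate until it is in the vocabulary
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate
            if candidate in vocab:
                match = candidate
                break
            end -= 1
        if match is None:
            return [unk]
        pieces.append(match)
        start = end
    return pieces

def tokenize(text, vocab):
    """Lowercase, split on whitespace, then WordPiece each word (punctuation handling omitted)."""
    return [piece for word in text.lower().split() for piece in wordpiece(word, vocab)]

toy_vocab = {"the", "movie", "was", "un", "##believ", "##able", "good"}  # invented vocabulary
print(tokenize("The movie was unbelievable", toy_vocab))
# ['the', 'movie', 'was', 'un', '##believ', '##able']
```

Breaking rare words into familiar pieces is what lets BERT handle words it never saw whole during pre-training, instead of mapping them all to `[UNK]`.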

Training and Prediction: Bringing Your Model to Life

With our input sequences ready, we’ll fine-tune the BERT model for sentiment analysis. This involves choosing an optimizer, a loss function, and an accuracy metric. Once the model is trained, we’ll feed it new movie reviews and check how well it predicts their sentiment.
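A typical setup for this kind of two-class model (an assumption here, since the exact choices live in the notebook) is an Adam-style optimizer with a small learning rate (the BERT paper suggests trying 5e-5, 3e-5, or 2e-5), sparse categorical cross-entropy computed from raw logits, and sparse categorical accuracy. The sketch below shows in plain Python what that loss and metric compute on a batch of made-up logits, roughly mirroring Keras’s `SparseCategoricalCrossentropy(from_logits=True)` and `SparseCategoricalAccuracy`.

```python
import math

def sparse_categorical_crossentropy_from_logits(logits_batch, labels):
    """Mean cross-entropy between integer labels and raw logits."""
    total = 0.0
    for logits, label in zip(logits_batch, labels):
        m = max(logits)
        log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))  # stable log-sum-exp
        total += log_sum_exp - logits[label]  # equals -log(softmax(logits)[label])
    return total / len(labels)

def sparse_categorical_accuracy(logits_batch, labels):
    """Fraction of examples whose highest logit matches the integer label."""
    hits = sum(
        max(range(len(logits)), key=lambda i: logits[i]) == label
        for logits, label in zip(logits_batch, labels)
    )
    return hits / len(labels)

logits_batch = [[2.0, -1.0], [0.5, 1.5], [0.0, 3.0]]  # made-up model outputs
labels = [0, 1, 0]                                     # 0 = negative, 1 = positive
print(sparse_categorical_accuracy(logits_batch, labels))  # 0.6666666666666666
print(sparse_categorical_crossentropy_from_logits(logits_batch, labels))
```

Working from logits rather than probabilities lets the loss use the log-sum-exp trick, which avoids overflow and the precision loss of taking the log of a tiny softmax output. The third example is confidently wrong, so it dominates the loss even though accuracy only drops by one example.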

Celebrate Your Achievement and Keep Learning

Congratulations on reaching the end of this journey! You’ve now got a sentiment analysis model powered by BERT, ready to tackle real-world data. But don’t stop here; continue exploring the endless possibilities of NLP and machine learning.

Engage with the Community and Share the Knowledge

If you’ve enjoyed this guide and found it helpful, why not spread the joy? I’d love for you to give this article 50 claps (yes, you can clap up to 50 times on Medium!) and follow me for more insights into the world of NLP and AI.

Ready to Predict Sentiment with Your Own BERT Model?

If you’re excited to try out sentiment analysis with BERT, I’ve got a treat for you. My Google Colab notebooks are packed with the full code for this project and more. Subscribe to my mailing list, and let’s continue this exciting journey together.

Remember, the world of NLP is vast and ever-evolving. Stay curious, keep experimenting, and let’s connect the dots in this fascinating AI landscape. Happy modeling!
