I’ve wanted to learn more about neural nets, and TensorFlow in particular, for a while now. I recently had a bit more time to dedicate to it, so I began to write about what I had learned and some of the basic examples I had run through.

Even though this information has been covered before, I decided to post it since it could help other beginners like myself. I’ve focused on the parts which I thought were essential, hopefully making the subject matter as clear as possible without losing substance.

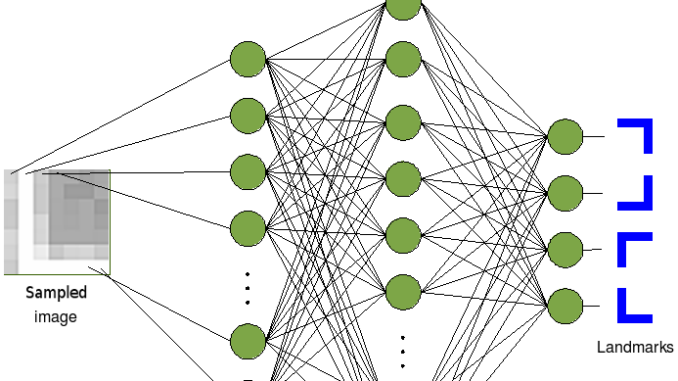

The usual picture of a neural net as a bunch of nodes connected by lines can make the subject matter appear somewhat esoteric.

Then you realise that the picture you have in your head barely scratches the surface of the neural nets used in the wild, which adds further to the mystery.

This impression is compounded when you realise the subject is a mixture of computer science, mathematics, statistics and neurology, which are already difficult subjects in their own right. All this can seem overwhelming, but when you break the concepts into parts it starts to make sense.

Objective and Approximation

To start breaking this down, let’s focus on two concepts which I thought cut through the noise:

- A neural net, no matter the complexity, is nothing more than a very complex function.

- We approximate this very complex function using multiple simple non-linear functions in combination.

The objective of training a neural net is to find a very complex function which maps one set to another; the sets and the mapping depend entirely on the problem you are trying to solve. Sets have a long history in mathematics which I could spend this whole article getting into. Rather than getting into that, you should see it as nothing more than mapping one series of numbers to another series of numbers. This might seem strange at first, but it is less strange when you realise that almost everything can be represented by numbers: an image is nothing more than a series of numbers representing pixels; sentences can be represented by numbers, with each word being a number or a vector of numbers depending on how you want to represent the information. Most problems can be represented, in some way, by numbers.

We approximate this complex function by combining lots of non-linear functions. The result that almost any function can be approximated by a combination of non-linear functions is known as the universal approximation theorem. For anyone familiar with Taylor or Fourier series this will make some sense: sometimes it is easier to break a function into simple parts which, added together, approximate the original function.
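To make this concrete, here is a minimal sketch that approximates sin(x) on [0, π] using nothing but a weighted sum of shifted ReLU functions. The helper name `build_relu_approximation` and the choice of 20 pieces are assumptions made for illustration; a real network would learn the weights rather than compute them directly like this.

```python
import math

def relu(z):
    """Rectified linear unit: a simple non-linear function."""
    return max(0.0, z)

def build_relu_approximation(f, start, stop, pieces):
    """Return a function that approximates f on [start, stop] as a
    weighted sum of shifted ReLUs (a piecewise-linear fit)."""
    step = (stop - start) / pieces
    knots = [start + i * step for i in range(pieces + 1)]
    # Slope of the straight line joining f at neighbouring knots.
    slopes = [(f(knots[i + 1]) - f(knots[i])) / step for i in range(pieces)]
    # Each ReLU switches on at its knot and adds the change in slope there.
    weights = [slopes[0]] + [slopes[i] - slopes[i - 1] for i in range(1, pieces)]

    def approximation(x):
        return f(start) + sum(w * relu(x - k) for w, k in zip(weights, knots))

    return approximation

approx_sin = build_relu_approximation(math.sin, 0.0, math.pi, pieces=20)
max_error = max(abs(approx_sin(x) - math.sin(x))
                for x in [i * math.pi / 1000 for i in range(1001)])
print(f"largest error with 20 ReLUs: {max_error:.4f}")
```

Increasing `pieces` shrinks the error, which is the intuition behind using many simple non-linear units to build one complicated function.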

So we have the objective of finding a complex function, which we will approximate using multiple non-linear functions.

Activation Functions

These non-linear functions are known as activation functions.

A node built around an activation function involves three types of parameters:

- Inputs (X).

- Weights (W).

- Bias (B).

The inputs come from the data or from the previous layer. The weights and biases are what do the “learning”: they are the parameters we will change to produce the complex function which solves our problem.

There are many different functions which can take these weights and biases as input.
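As a rough sketch, a single node can be written as `activation(W · X + B)`, which is the same form as the Dense layer documentation quoted later in this post. The function name `neuron` and the example numbers are assumptions for illustration only.

```python
import math

def sigmoid(z):
    """Squashes any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    """Passes positive numbers through and clips negatives to 0."""
    return max(0.0, z)

def neuron(inputs, weights, bias, activation):
    """Compute activation(W . X + B) for one node."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(weighted_sum)

x = [1.0, 2.0]      # inputs (X)
w = [0.5, -0.25]    # weights (W)
b = 0.0             # bias (B)

# The same inputs, weights and bias give different outputs per activation.
for name, fn in [("sigmoid", sigmoid), ("tanh", math.tanh), ("relu", relu)]:
    print(name, neuron(x, w, b, fn))
```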

There are advantages and disadvantages to each function, but before getting into that we need to understand how we will learn the correct weights and biases.

Loss Function

The term “learning” is a really abstract term which completely misses the point of what you are doing. When you “learn” you are setting all the weights and biases to values which map the inputs as close as possible to the known outputs. The difference between the calculated outputs and the known outputs, which are available in supervised learning, is measured using a loss function.

The best known loss function is quadratic loss which is used in least squares fitting. With this loss it is clear you are trying to get your fitting function as close to the data points as possible. However, more elaborate functions might be more difficult to visualise.
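A minimal sketch of quadratic loss, assuming the mean of the squared differences (some texts use the sum, or include a factor of one half). The example numbers are made up.

```python
def quadratic_loss(predicted, known):
    """Mean of the squared differences between predictions and known outputs."""
    return sum((p - k) ** 2 for p, k in zip(predicted, known)) / len(known)

known = [1.0, 2.0, 3.0]
good_fit = [1.1, 1.9, 3.0]   # close to the known outputs
poor_fit = [0.0, 4.0, 1.0]   # far from the known outputs

print(quadratic_loss(good_fit, known))
print(quadratic_loss(poor_fit, known))
```

The closer the predictions are to the known outputs, the smaller the loss, which is exactly what the fit tries to achieve.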

A plot of a loss function against two parameters looks like a landscape of hills and valleys. This image is incredibly useful; however, you need to keep a few things in mind when your models get more complex.

- The number of dimensions increases with each weight and bias parameter.

- Understand that the node types (activation functions) and how you connect them change the shape of the loss landscape.

- The shape of the landscape is not known to you.

The last point is important. A diagram like this makes you think that you could easily find the smallest value of the loss function; however, since you cannot see the whole landscape, you need to find it iteratively.

Keeping this point in mind, we then need to find a method for descending the unknown loss landscape. This method follows a set procedure.

- Initialise weights.

- Propagate inputs through weights to get value of output.

- Find value of loss function.

- Back propagate the error to update the weights, trying to minimise the loss.

- Repeat.
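The steps above can be sketched on a deliberately tiny model: one weight and one bias fitting a straight line. The toy data, learning rate and step count are assumptions for illustration, and the gradient is written out by hand rather than back propagated through layers.

```python
# Toy data generated from y = 2x + 1 (an assumption made for this sketch).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]

def train(xs, ys, learning_rate=0.05, steps=2000):
    # 1. Initialise the weight and bias.
    w, b = 0.0, 0.0
    n = len(xs)
    losses = []
    for _ in range(steps):
        # 2. Propagate the inputs through the weights to get the outputs.
        preds = [w * x + b for x in xs]
        # 3. Find the value of the (quadratic) loss.
        loss = sum((p - y) ** 2 for p, y in zip(preds, ys)) / n
        losses.append(loss)
        # 4. Work out how the loss changes with each parameter, then step downhill.
        grad_w = sum(2 * (p - y) * x for p, y, x in zip(preds, ys, xs)) / n
        grad_b = sum(2 * (p - y) for p, y in zip(preds, ys)) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
        # 5. Repeat.
    return w, b, losses

w, b, losses = train(xs, ys)
print(f"w={w:.3f}, b={b:.3f}, first loss={losses[0]:.3f}, last loss={losses[-1]:.2e}")
```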

The first three parts are quite obvious to follow. The fourth part, back propagation, is the part you need to go through in a bit more detail. To get a feel for how this is done there is a good tutorial which I followed and then summarised below.

Back Propagation

Back propagation uses the input data to iteratively update the weights. The process starts from the output layer and works backwards. To demonstrate this we will use an example activation and loss function.

First let’s define a few terms.

We are using a quadratic loss function and a sigmoid function as the activation; this would change depending on the problem.

Now we have defined our terms we can write down the update function which will change the weights iteratively:

The first term of the update equation is the change in the weights due to the change in loss, and the second is the momentum term. The only term we need to calculate is the first. The momentum is simply the change carried over from the last iteration; this term is added to help avoid getting stuck in local minima.
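A minimal sketch of an update rule with momentum, assuming the common form `change = -learning_rate * gradient + momentum * previous_change`. The function name, the coefficients and the one-parameter loss are all hypothetical choices for illustration.

```python
def minimise_with_momentum(gradient, w, learning_rate=0.1, momentum=0.9, steps=500):
    """Gradient descent where each step also carries over part of the last step."""
    previous_change = 0.0
    for _ in range(steps):
        # First term: step against the gradient of the loss.
        # Second term: momentum, a fraction of the previous change.
        change = -learning_rate * gradient(w) + momentum * previous_change
        w += change
        previous_change = change
    return w

# Hypothetical one-parameter loss (w - 3)^2, whose gradient is 2 * (w - 3).
w_final = minimise_with_momentum(lambda w: 2.0 * (w - 3.0), w=0.0)
print(w_final)
```

Because part of the previous step is carried over, the weight keeps moving through small bumps in the landscape instead of stopping at the first flat spot.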

Now we have the update rule, and have broken it into parts using the chain rule, we need to work out the functions for each part. One of the terms is the partial derivative of the activation, the sigmoid function, with respect to its input.
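The sigmoid has a convenient derivative, σ'(z) = σ(z)(1 − σ(z)). The sketch below checks that closed form against a numerical estimate; the finite-difference helper is only there as a sanity check.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_derivative(z):
    """Closed form: sigma'(z) = sigma(z) * (1 - sigma(z))."""
    s = sigmoid(z)
    return s * (1.0 - s)

def numerical_derivative(f, z, eps=1e-6):
    """Central finite difference, used only as a sanity check."""
    return (f(z + eps) - f(z - eps)) / (2.0 * eps)

for z in (-2.0, 0.0, 1.5):
    print(z, sigmoid_derivative(z), numerical_derivative(sigmoid, z))
```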

Other terms, which are easier to determine, are the derivatives of a node’s input with respect to the weights and to the previous layer’s activations.

Finally, we put all these partial derivatives together and determine how the loss changes relative to the weights.

Now we need to find how the loss changes with activation.
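As a sketch, the whole derivation can be written out in one common notation. The symbols are assumptions chosen for this sketch and may differ from the tutorial: $a^{l}_{j}$ is the activation of node $j$ in layer $l$, $z^{l}_{j}$ is its weighted input, $w^{l}_{jk}$ is the weight from node $k$ in layer $l-1$, $y_j$ is the known output, $\eta$ is the learning rate and $\alpha$ is the momentum coefficient.

```latex
% Definitions: weighted input, sigmoid activation, quadratic loss
\[
z^{l}_{j} = \sum_{k} w^{l}_{jk}\, a^{l-1}_{k} + b^{l}_{j}, \qquad
a^{l}_{j} = \sigma(z^{l}_{j}) = \frac{1}{1 + e^{-z^{l}_{j}}}, \qquad
L = \frac{1}{2} \sum_{j} \left( a^{\text{out}}_{j} - y_{j} \right)^{2}
\]

% Update rule: gradient term plus momentum term
\[
\Delta w^{l}_{jk}(t) = -\eta\, \frac{\partial L}{\partial w^{l}_{jk}}
  + \alpha\, \Delta w^{l}_{jk}(t-1)
\]

% Chain rule split of the gradient term
\[
\frac{\partial L}{\partial w^{l}_{jk}}
  = \frac{\partial L}{\partial a^{l}_{j}}\,
    \frac{\partial a^{l}_{j}}{\partial z^{l}_{j}}\,
    \frac{\partial z^{l}_{j}}{\partial w^{l}_{jk}}
\]

% The individual pieces
\[
\frac{\partial a^{l}_{j}}{\partial z^{l}_{j}} = a^{l}_{j}\left(1 - a^{l}_{j}\right), \qquad
\frac{\partial z^{l}_{j}}{\partial w^{l}_{jk}} = a^{l-1}_{k}, \qquad
\frac{\partial z^{l}_{j}}{\partial a^{l-1}_{k}} = w^{l}_{jk}
\]

% Putting the pieces together
\[
\frac{\partial L}{\partial w^{l}_{jk}}
  = \frac{\partial L}{\partial a^{l}_{j}}\; a^{l}_{j}\left(1 - a^{l}_{j}\right) a^{l-1}_{k}
\]

% How the loss changes with activation: output layer, then any earlier layer
\[
\frac{\partial L}{\partial a^{\text{out}}_{j}} = a^{\text{out}}_{j} - y_{j}, \qquad
\frac{\partial L}{\partial a^{l-1}_{k}}
  = \sum_{j} \frac{\partial L}{\partial a^{l}_{j}}\; a^{l}_{j}\left(1 - a^{l}_{j}\right) w^{l}_{jk}
\]
```

The last expression is what lets the calculation move backwards: once the change in loss with the activations of layer $l$ is known, the same quantity for layer $l-1$ follows from it.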

Using all of this we can move back, layer after layer, determining how the weights should change. Let’s now use what we have learned to understand some typical examples.

Using the concepts above we can begin our example fits, starting with a fully connected network. Each of the examples uses the same Docker image to create the environment required to run TensorFlow. I start this container with my code mounted from my local machine and expose port 6006 so that TensorBoard can be reached.

docker run -p 6006:6006 -v `pwd`:/mnt/ml-mnist-examples -it tensorflow/tensorflow bash

Now we have the environment we need to consider the dataset we will be playing with. The dataset I used to learn was MNIST: small square 28×28 pixel grayscale images of handwritten single digits between 0 and 9, with 60,000 images for training and a further 10,000 for testing.

The code I used was the same as the tutorial which you can find online.

When I first saw this I was perplexed. Even with the basic reading I had done, I still had to work out what the code was doing. So I went through it step by step.

The data is loaded from the normal training set. The input is a 28×28 array with each point having a number from 0 to 255. We divide by 255 so that each entry is between 0 and 1.

import tensorflow as tf

mnist = tf.keras.datasets.mnist

(x_train, y_train), (x_test, y_test) = mnist.load_data()

x_train, x_test = x_train / 255.0, x_test / 255.0

Looking at the structure of the inputs we can see it is a large 3D array.

x_train[entry][x_pos][y_pos]

entry runs from 0 to 59,999

x_pos/y_pos going up to 27 in each direction

The output is nothing but a one dimensional vector specifying the value of the digit between 0 and 9.

y_train[entry]

entry runs from 0 to 59,999

Now we get to the setup of the neural network. The first layer is the input layer, which takes the 28×28 input image and flattens it into a single vector.

tf.keras.layers.Flatten(input_shape=(28, 28))

Next we have the hidden layer using the ReLU activation function.

tf.keras.layers.Dense(512, activation='relu')

The documentation describes this part quite well:

Dense implements the operation: output = activation(dot(input, kernel) + bias) where activation is the element-wise activation function passed as the activation argument, kernel is a weights matrix created by the layer, and bias is a bias vector created by the layer (only applicable if use_bias is True).

So we are linking all the input pixels to 512 hidden layer nodes which will begin to work out features of the digits.
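The documented operation can be sketched in plain Python for a single input vector. This is not how TensorFlow implements it internally; the function `dense` and the tiny 3-input, 2-node layer are hypothetical stand-ins for the 784 inputs and 512 nodes used here.

```python
def relu(z):
    return max(0.0, z)

def dense(inputs, kernel, bias, activation):
    """output = activation(dot(input, kernel) + bias) for one input vector.

    kernel[i][j] is the weight from input i to node j, so it has
    len(inputs) rows and len(bias) columns.
    """
    outputs = []
    for j in range(len(bias)):
        weighted_sum = sum(inputs[i] * kernel[i][j] for i in range(len(inputs)))
        outputs.append(activation(weighted_sum + bias[j]))
    return outputs

# Hypothetical tiny layer: 3 inputs feeding 2 nodes.
inputs = [1.0, 0.5, -1.0]
kernel = [[0.2, -0.4],
          [0.6, 0.1],
          [0.3, 0.8]]
bias = [0.1, 0.0]
print(dense(inputs, kernel, bias, relu))
```

The second node ends up with a negative weighted sum, so ReLU clips its output to zero.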

Dropout, which is used in this network, helps alleviate the problem of overfitting. Basically it sets the activations of some randomly chosen nodes to zero during training. This is done to ensure the net is generalising and not just “remembering” the input data. Here we set 20% of the nodes to 0 on each training step.

tf.keras.layers.Dropout(0.2)
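A rough sketch of what dropout does during training, in plain Python. Keras also scales the surviving values by 1 / (1 − rate) so that the expected sum is unchanged, and this sketch does the same; the function name and the fixed seed are assumptions for illustration.

```python
import random

def dropout(activations, rate, rng):
    """Zero each value with probability `rate` and scale the survivors
    by 1 / (1 - rate), as done during training."""
    keep_scale = 1.0 / (1.0 - rate)
    return [0.0 if rng.random() < rate else a * keep_scale for a in activations]

rng = random.Random(0)  # fixed seed so the sketch is repeatable
activations = [1.0] * 10000
dropped = dropout(activations, rate=0.2, rng=rng)
fraction_zeroed = dropped.count(0.0) / len(dropped)
print(f"fraction zeroed: {fraction_zeroed:.3f}")
```

At prediction time dropout is switched off and the activations pass through unchanged.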

Finally, we get to the output layer, which uses a softmax activation function; this is the usual choice for multi-class classification problems. It is used because all the outputs should be probabilities which sum to 100%.

tf.keras.layers.Dense(10, activation='softmax')
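A minimal softmax sketch in plain Python, assuming the standard definition of exponentiating each score and dividing by the total. The three example scores are made up; the real layer produces ten, one per digit.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    largest = max(scores)
    # Subtracting the largest score avoids overflow without changing the result.
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

scores = [2.0, 1.0, 0.1]
probabilities = softmax(scores)
print(probabilities, sum(probabilities))
```

The largest score gets the largest probability, and the predicted digit is simply the position of that largest value.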

Looking at the model summary we can see all the parameters we need to train.

To really understand what is going on we need to know where these ~407k parameters come from.

- Flatten takes a 2D matrix and creates a 1D output, giving 28×28=784 pixels.

- The first dense layer has 512 nodes. Each node has one weight for each of the 784 pixels plus one bias, giving a total=512×784+512=401920.

- Dropout has the same number of nodes as the previous layer, with no parameters to train.

- The second dense layer has one weight per connection into each of its 10 nodes plus one bias per node, giving a total=512×10+10=5130.

- Total=401920+5130=407050.
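The same arithmetic as a short check in Python. The helper `dense_parameters` is a hypothetical name used only for this sketch.

```python
def dense_parameters(inputs, nodes):
    """One weight per input-to-node connection plus one bias per node."""
    return inputs * nodes + nodes

flattened_pixels = 28 * 28                          # Flatten: 784 values, no parameters
hidden = dense_parameters(flattened_pixels, 512)    # first dense layer
output = dense_parameters(512, 10)                  # second dense layer
total = hidden + output
print(hidden, output, total)  # 401920 5130 407050
```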

Now we have the full network we want to do the iterative fit.

model.compile(optimizer='adam',loss='sparse_categorical_crossentropy',metrics=['accuracy'])

The Adam optimiser is a form of stochastic gradient descent which adapts the step size for each parameter as we move down the loss landscape. This particular loss function is used for classification when the labels are integers, as they are here.
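For a single example, this loss boils down to minus the log of the probability the network gave to the correct class. The sketch below assumes that definition and uses made-up probabilities; Keras then averages the value over each batch.

```python
import math

def sparse_categorical_crossentropy(label, probabilities):
    """Loss for one example: -log of the probability given to the true class.

    `label` is an integer class index, which is what "sparse" refers to.
    """
    return -math.log(probabilities[label])

true_label = 1
confident_right = [0.05, 0.9, 0.05]
confident_wrong = [0.9, 0.05, 0.05]

print(sparse_categorical_crossentropy(true_label, confident_right))
print(sparse_categorical_crossentropy(true_label, confident_wrong))
```

A confident correct prediction gives a small loss, while a confident wrong one is penalised heavily.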

Now specify how we want to store the output for TensorBoard which we will use later.

import datetime

log_dir = "logs/fit/" + datetime.datetime.now().strftime("%Y%m%d-%H%M%S")

tensorboard_callback = tf.keras.callbacks.TensorBoard(log_dir=log_dir, histogram_freq=1)

Finally, we can run through the full dataset 5 times, known as 5 epochs:

model.fit(x=x_train,y=y_train,epochs=5,validation_data=(x_test, y_test),callbacks=[tensorboard_callback])

We should now have output generated and stored in the logs directory. We can then look at that data using TensorBoard:

tensorboard --logdir logs --host 0.0.0.0
