As a software engineer specializing in mobile application development, I have noticed a gap when it comes to running AI models directly within mobile applications. The common approach to integrating AI is to rely on a backend, typically hosted on a cloud service such as AWS, to handle the heavy lifting. This works, but it depends on factors such as cost and manpower. TensorFlow.js offers a shortcut.

TensorFlow, developed by Google, is an end-to-end platform for machine learning. Its ecosystem includes TensorFlow.js, a library for JavaScript developers that runs AI models directly in the browser or on-device. TensorFlow.js can be used in three ways:

1. Running Existing Models: run pre-trained JavaScript models, or convert a Python model with the TensorFlow.js converter and integrate it into a JavaScript app.

2. Retraining Existing Models (Transfer Learning): retrain a pre-existing model on your own data. This capability becomes available once you install the required packages.

3. Developing Your Own ML Model in JavaScript: build and train models directly in JavaScript using the TensorFlow.js libraries.

STEP 1

This post focuses on the first capability of TensorFlow.js: running existing models. I had already trained an image classification model in Python in a Jupyter Notebook. For training data I used the CIFAR-10 dataset, which is available through TensorFlow's Keras datasets API (`tf.keras.datasets.cifar10`). CIFAR-10 contains 60,000 32x32 color images (50,000 for training and 10,000 for testing), split evenly across ten classes: airplane, automobile, bird, cat, deer, dog, frog, horse, ship, and truck.

The model is a simple one, and building it involved the following steps:

– Loading the CIFAR-10 dataset and splitting it into training and testing sets.

– Reshaping the dataset by flattening the images into one-dimensional arrays.

– Normalizing the pixel values to the range 0 to 1 by dividing them by 255.

– Building the model from two 2D convolutional blocks with ReLU activations, followed by a dense output layer with a softmax activation over the ten classes.

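After building and testing the model, the final step is to save it with the `save()` method. Depending on your preference and TensorFlow version, you can save it as a `.keras` file, a legacy `.h5` file, or a TensorFlow SavedModel directory. In older TensorFlow releases that use Keras 2, you select the SavedModel format by passing `save_format='tf'` to `save()`.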

After saving the model to the directory of your choice, open that directory and check that the file or folder was created. The next step is to convert the saved model to the TensorFlow.js format, which is a `model.json` file plus binary weight files. This is the format we will import into the React Native application.

Model Conversion

To convert the model, you need to install the `tensorflowjs` Python package. I ran into several problems during this process, so I've broken it down into the simplest steps. Here's how to do it in a Mac terminal:

1. Create a Virtual Environment: run `python3 -m venv .venv`.

2. Activate the Virtual Environment: run `source .venv/bin/activate`. Installing the packages after this step keeps them inside the environment.

3. Upgrade pip: run `pip install --upgrade pip` to make sure you have the latest version of pip.

4. Install TensorFlow.js: run `pip install tensorflowjs`.

5. Convert the Model: run the TensorFlow.js converter, for example `tensorflowjs_converter --input_format=keras imgClass.keras tfjs_model`. Set `--input_format` to match how you saved the model (for example, `tf_saved_model` for a SavedModel directory). Running `tensorflowjs_converter --help` lists the formats your version supports. Replace `imgClass.keras` with the path to your saved file or folder, and replace `tfjs_model` with the directory where the converted files should be written.

6. Deactivate the Virtual Environment: when you are done, run `deactivate`.

After following these steps, you should find a new folder at the output path. It contains a `model.json` file and one or more `.bin` weight files; larger models are split into several shards. If you run into issues, this link will help.
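Before moving the files into the app, it can help to confirm that every weight file listed in the generated `model.json` is actually present, because the app has to bundle each shard. The converter records the shard file names under `weightsManifest[].paths`. The Node.js sketch below reads that list. It assumes the converter's default `model.json` file name. The helper names `weightShardPaths` and `missingShards` are hypothetical.

```javascript
// Node.js script for checking the converter's output folder.
// Run it on your development machine, not inside the app.
const fs = require("fs");
const path = require("path");

// Returns every weight-shard file name listed in a parsed model.json.
// The converter stores them under weightsManifest[i].paths.
function weightShardPaths(modelJson) {
  const manifest = modelJson.weightsManifest || [];
  return manifest.flatMap((group) => group.paths || []);
}

// Lists the shards referenced by <modelDir>/model.json that are missing on disk.
// Hypothetical helper; assumes the default output file name, model.json.
function missingShards(modelDir) {
  const raw = fs.readFileSync(path.join(modelDir, "model.json"), "utf8");
  const modelJson = JSON.parse(raw);
  return weightShardPaths(modelJson).filter(
    (shard) => !fs.existsSync(path.join(modelDir, shard))
  );
}
```

For example, if `missingShards("tfjs_model")` returns an empty array, every `.bin` file that `model.json` refers to is in place. If the manifest lists several shards, each one has to be copied into the app.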

Congratulations, you have completed the first step.

STEP 2

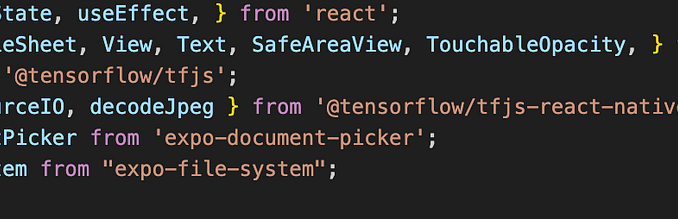

In this step, I'm using Expo for React Native, mainly because the `tfjs-react-native` library integrates well with Expo's ecosystem. `tfjs-react-native` can also work in a bare React Native project, but Expo simplifies the setup. We also use the library's `bundleResourceIO` function to load the bundled model files (the JSON and `.bin` files), and Expo manages these assets well.

Setting Up the Project

– Create a New React Native Expo Project

– Install TensorFlow Libraries: when installing the `@tensorflow/tfjs-react-native` package, follow the documentation linked here.

– Handle Dependencies: when installing `expo-camera` and the other required libraries, add the `--legacy-peer-deps` flag to the npm install command. This is necessary because `tfjs-react-native` relies on an older version of `expo-camera`, which conflicts with other packages. It is frustrating, but it is the only way I have found to make it work.

Configuring Your Project

Metro, the bundler React Native uses, does not treat the `.bin` weight file generated by the TensorFlow.js converter as an asset by default, so the project cannot import it. To fix this, create a `metro.config.js` file in the project root. Expo doesn't include this file by default, so you have to add it yourself. Configure it to register `bin` as an asset extension so the weights are bundled and read correctly.
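For an Expo project, a minimal `metro.config.js` along these lines should work. This sketch assumes an Expo SDK that exports `getDefaultConfig` from `expo/metro-config`. It keeps Expo's defaults and only adds `bin` to the list of asset extensions.

```javascript
// metro.config.js (in the project root)
const { getDefaultConfig } = require("expo/metro-config");

// Start from Expo's default Metro configuration.
const config = getDefaultConfig(__dirname);

// Let Metro bundle the converter's .bin weight files as assets,
// so they can be require()d the same way images are.
config.resolver.assetExts.push("bin");

module.exports = config;
```

After changing the Metro configuration, restart the dev server with its cache cleared (`npx expo start --clear`) so the new asset extension is picked up.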

Importing and Using the Model

Once the configuration is set up, you can import your model files. Move the JSON and `.bin` files into your project directory for easier access. After importing them, pass them to the `bundleResourceIO` function, which packages them so TensorFlow.js can read them from the app bundle. Then load the model. That's how you bring a model saved in Python directly into React Native and skip the backend entirely.
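Below is a sketch of the loading code. It assumes the converted files were copied to `assets/model/` and that the weights fit in a single shard named `group1-shard1of1.bin`, the converter's usual name for a one-shard model. Both paths are assumptions. The helper `loadBundledModel` is hypothetical and takes `tf` and `bundleResourceIO` as parameters only so that it can be exercised outside the app. In the app, you pass the real imports, as shown in the comment at the top.

```javascript
// In the app, wire it up roughly like this (asset paths are assumptions):
//   import * as tf from "@tensorflow/tfjs";
//   import { bundleResourceIO } from "@tensorflow/tfjs-react-native";
//   const modelJson = require("./assets/model/model.json");
//   const modelWeights = require("./assets/model/group1-shard1of1.bin");
//   const model = await loadBundledModel(tf, bundleResourceIO, modelJson, modelWeights);

// Loads a converted TensorFlow.js model that Metro has bundled with the app.
async function loadBundledModel(tf, bundleResourceIO, modelJson, modelWeights) {
  // Wait until TensorFlow.js has initialised its backend.
  await tf.ready();

  // bundleResourceIO wraps the bundled JSON and weight asset(s) in an IO handler.
  const handler = bundleResourceIO(modelJson, modelWeights);

  // Keras conversions (--input_format=keras) produce a "layers-model";
  // --input_format=tf_saved_model produces a "graph-model" instead.
  return modelJson.format === "graph-model"
    ? tf.loadGraphModel(handler)
    : tf.loadLayersModel(handler);
}
```

The returned model can then be used with `model.predict(...)`. Checking the `format` field in `model.json` avoids calling the wrong loader if you later convert a SavedModel instead of a Keras file.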

BONUS: Testing Your Model

The next step is to load some images to test the model's predictions. I saved the test images on my phone, so I only need to select one and start predicting. Here's how:

1. Import Images: use `expo-document-picker` to select an image from the device.

2. Convert and Decode Images: use the `expo-file-system` library to read the image as a Base64 string, decode it, and normalize the resulting tensor by dividing by 255, just as I did during training.

3. Reshape and Predict: after normalization, resize and reshape the image to match the model's input (a batch of one 32x32 RGB image for CIFAR-10), then call the `predict` method to get predictions.
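The normalization and reshaping in steps 2 and 3 must reproduce what the model saw during training. The plain-JavaScript sketch below makes the data layout explicit. It takes the decoded bytes of an image that has already been resized to 32x32, keeps the red, green, and blue channels, and divides them by 255. It then lays them out in the `[1, 32, 32, 3]` (batch, height, width, channel) order a Keras CIFAR-10 model expects. The function name `toModelInput` and the assumption that the decoder returns row-major RGB or RGBA bytes are hypothetical.

```javascript
// CIFAR-10 images are 32x32 RGB, so the model input shape is [1, 32, 32, 3].
const INPUT_SIZE = 32;

// Converts decoded, already-resized pixel bytes into the normalized,
// batched input the model expects. `channels` is 3 for RGB or 4 for RGBA;
// any alpha channel is dropped. (Hypothetical helper.)
function toModelInput(pixels, width, height, channels = 3) {
  if (width !== INPUT_SIZE || height !== INPUT_SIZE) {
    throw new Error(`expected ${INPUT_SIZE}x${INPUT_SIZE}, got ${width}x${height}`);
  }
  if (pixels.length !== width * height * channels) {
    throw new Error("pixel buffer length does not match width * height * channels");
  }

  const data = new Float32Array(width * height * 3);
  for (let p = 0; p < width * height; p++) {
    for (let c = 0; c < 3; c++) {
      // Same scaling as in training: 0..255 becomes 0..1.
      data[p * 3 + c] = pixels[p * channels + c] / 255;
    }
  }
  // Row-major NHWC layout: batch of 1, then height, width, RGB channels.
  return { data, shape: [1, height, width, 3] };
}
```

With TensorFlow.js, you can turn the result into a tensor with `tf.tensor4d(data, shape)`. If you decode the image with `decodeJpeg` from `tfjs-react-native`, which returns an RGB tensor, the equivalent tensor operations are roughly `tf.image.resizeBilinear(image, [32, 32]).div(255).expandDims(0)`.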

This approach allows you to test your model directly within your React Native app, ensuring it performs as expected.
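The model's softmax output is a list of ten probabilities, one per class, in the same order as the CIFAR-10 labels used in training. The sketch below turns that output into a readable label. The helper name `topPrediction` is hypothetical, and the code assumes the standard CIFAR-10 label order (0 = airplane through 9 = truck).

```javascript
// CIFAR-10 class names, indexed by label (0 = airplane, ..., 9 = truck).
const CIFAR10_CLASSES = [
  "airplane", "automobile", "bird", "cat", "deer",
  "dog", "frog", "horse", "ship", "truck",
];

// Picks the most likely class from one row of softmax probabilities.
// Works with plain arrays and typed arrays. (Hypothetical helper.)
function topPrediction(probabilities, classNames = CIFAR10_CLASSES) {
  if (probabilities.length !== classNames.length) {
    throw new Error(`expected ${classNames.length} scores, got ${probabilities.length}`);
  }
  let best = 0;
  for (let i = 1; i < probabilities.length; i++) {
    if (probabilities[i] > probabilities[best]) best = i;
  }
  return { index: best, label: classNames[best], confidence: probabilities[best] };
}
```

In the app, the scores come from the prediction tensor, for example `const scores = await model.predict(input).data();` followed by `topPrediction(scores)`.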

Congratulations, you made it to the end. There is a lot more you can do with TensorFlow.js. Have fun exploring!
