As this is an ongoing series, if you haven’t done so yet, you might want to consider starting at one of the previous sections: 1st, 2nd, and 3rd.

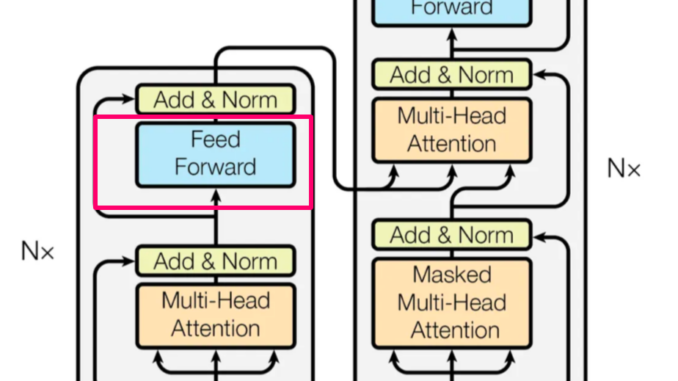

This fourth section will cover the essential Feed-Forward layer, a fundamental element found in most deep-learning architectures. While discussing vital topics common to deep learning, we’ll emphasize their significant role in shaping the Transformers architecture.

A feed-forward linear layer is basically a bunch of neurons, each of which is connected to a bunch of other neurons. Look at the image below. a, b, c, and d are neurons. They hold some input, some numbers representing some data we want to understand (pixels, word embeddings, etc.). They are connected to neuron number 1. Each connection has a different strength: a-1 is 0.12, b-1 is -0.3, etc. In reality, all the neurons in the left column are connected to all the neurons in the right column. Drawing every connection would make the image unclear, so I didn’t, but it’s important to note. Exactly in the same way we have a-1, we also have a-2, b-2, c-2, d-2, etc. Each of the connections between two neurons has a different “connection strength”.

There are two important things to note in that architecture:

1. As mentioned, every node (neuron) is connected to every node in the next layer. All four of a, b, c, d are connected to every neuron in the next column (1, 2, 3). Think of this image as a chain of command. 1, 2, 3 are commanders. They get reports from soldiers a, b, c, d. a knows something about something, but it doesn’t have a very wide view. 1 knows more, as it gets reports from a, b, c, and d. The same goes for 2 and 3, which are also commanders. These commanders (1, 2, 3) also pass reports to higher-up commanders. Those higher-up commanders get reports from 1, 2, 3, and through them indirectly from a, b, c, d, as the next layer (each column of neurons is a layer) is also fully connected in exactly the same way. So the first important thing to understand is that 1 has a broader view than a, and the commander in the next layer will have a broader view than 1. When you have more dots, you can make more interesting connections.

2. Each node has a different connection strength to every node in the next layer. a-1 is 0.12, b-1 is -0.3. The numbers I put here are obviously made up, but they are of reasonable scale, and they are learned parameters (i.e. they change during training). Think of these numbers as how much 1 counts on a, on b, etc. From the point of view of commander 1, a is a little bit trustworthy. You shouldn’t take everything he says for granted, but you can count on some of his words. b is very different. This node usually diminishes the importance of the input it gets. Like a laid-back person. Is this a tiger? Nah, just a big cat. This is an oversimplification of what happens, but the important thing to note is this: each neuron holds some input, whether raw or already processed, and passes it on with its own processing.
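To make the “connection strength” idea concrete, here is a minimal sketch of what commander 1 computes: a weighted sum of the reports it receives. Only a-1 (0.12) and b-1 (-0.3) come from the text above. The input values and the c-1 and d-1 strengths are invented for illustration, and the bias term that real layers usually add is left out.

```python
# Inputs held by neurons a, b, c, d (made-up values for illustration).
inputs = {"a": 0.5, "b": 1.0, "c": -2.0, "d": 0.25}

# Connection strengths into neuron 1. a-1 and b-1 come from the text;
# c-1 and d-1 are invented here to complete the example.
weights_to_1 = {"a": 0.12, "b": -0.3, "c": 0.4, "d": 0.05}


def neuron_output(inputs, weights):
    """Weighted sum: each input scaled by its connection strength."""
    return sum(inputs[name] * weights[name] for name in inputs)


neuron_1 = neuron_output(inputs, weights_to_1)
print(neuron_1)  # roughly -1.0275
```

During training, the numbers in `weights_to_1` are what gets adjusted; the inputs are whatever data arrives.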

Do you know the game Chinese Whispers? You sit in a row with 10 people and you whisper a word to the next person, say, “Pizza”. Person 2 has heard something like “Pazza”, so they pass on “Pazza” to person 3. Person 3 heard “Lassa” (it’s a whisper after all), so he passes on “Lassa”. The 4th person has heard “Batata”, so he passes on “Batata”, and so on. When you ask the 10th person what he heard, he says: Shambala! How did we get from Pizza to Shambala? Shit happens. The difference between that game and what a neural network does is that each person adds their own useful processing. Person 2 won’t say “Pazza”, he will say, “Pazza is an Italian dish, it’s great”. Person 3 will say “Lassa is an Italian dish, common all over the world”, etc. Each person (layer) adds something hopefully useful.

This is basically what happens. Each neuron gets an input, processes it, and moves it on. To match the fully connected layer, I suggest an upgrade to Chinese Whispers: from now on you play with multiple rows, and each person whispers to the next person in every row, not just their own. The people in position 2 onwards get whispers from many people and need to decide how much “weight” (importance) they give to each one. This is a Feed Forward Layer.
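The upgraded game can be sketched as a small table of weights, one row per listener. This is only an illustration under assumptions: `linear_layer` is a hypothetical helper, all numbers except 0.12 and -0.3 are invented, and the bias is omitted again.

```python
def linear_layer(inputs, weights):
    """Fully connected layer without bias.

    weights[j][i] is the connection strength from input i to output neuron j,
    so every output neuron listens to every input.
    """
    return [sum(w * x for w, x in zip(row, inputs)) for row in weights]


inputs = [0.5, 1.0, -2.0, 0.25]  # values held by a, b, c, d (made up)

weights = [
    [0.12, -0.30, 0.40, 0.05],   # connections into neuron 1
    [-0.20, 0.10, 0.30, 0.70],   # connections into neuron 2 (invented)
    [0.50, 0.25, -0.10, -0.40],  # connections into neuron 3 (invented)
]

outputs = linear_layer(inputs, weights)
print(outputs)  # three numbers, one per commander
```

Four inputs and three outputs means 4 × 3 = 12 connection strengths, which is why drawing all of them makes the picture messy.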

Why do we use such layers? Because they allow us to add useful calculations. Think of it a bit like the wisdom of the crowd. Do you know the story of guessing a steer’s weight? In 1906, someplace in England, someone brings a steer to an exhibition. The presenter asks 787 random people to guess its weight. What would you say? How much does the steer weigh?

The average of all their guesses was 1197 pounds (about 543 kg). These are guesses of random people. How far off were they? 1 pound, about 450 grams. The steer’s weight was 1198 pounds. The story is taken from here and I don’t know if the details are right or not, but back to our business, you can think of linear layers as doing something like that. You add more parameters, more calculations (more guesses), and you get a better result.

Let’s try to imagine a real scenario. We give the network an image and we want to decide whether it’s an apple or an orange. The architecture is based on CNN layers, which I won’t get into as they are beyond the scope of this series, but basically, it’s a computation layer that is able to recognize specific patterns in an image. Each layer is able to recognize increasingly complex patterns. For example, the first layer can hardly notice anything, it just passes the raw pixels. The second layer recognizes vertical lines. The next layer has heard there are vertical lines, and from other neurons, it has heard there are horizontal lines very near. It does 1+1 and thinks: Nice! It’s a corner. That is the benefit of getting inputs from multiple sources.

The more calculations we do, we might imagine, the better results we can get. In reality, it doesn’t really work this way, but it does have some truth in it. If I do more calculations and consult with more people (neurons), I can generally reach better results.

Activation Function

We will now stack on another vital building block, a basic and very important concept in deep learning as a whole, and then we’ll connect the dots to understand how this is related to Transformers.

Fully connected layers, great as they are, suffer from one big disadvantage. They are linear layers, they only do linear transformations, linear calculations. They add and multiply, but they can’t transform the input in “creative” ways. Sometimes adding more power doesn’t cut it, you need to think of the problem completely differently.

If I make $10 an hour, work 10 hours a day, and want to save $10k faster, I can either work more days every week or work additional hours every day. But there are other solutions out there, aren’t there? So many banks to be robbed, other people not needing their money (I can spend it better), getting better-paid jobs, etc. The solution is not always more of the same.

Activation functions to the rescue. An activation function allows us to make a non-linear transformation. For example, taking a list of numbers [1, 4, -3, 5.6] and transforming them into probabilities. This is exactly what the Softmax activation function does. It takes these numbers and transforms them to [8.29268754e-03, 1.66563082e-01, 1.51885870e-04, 8.24992345e-01]. These 4 numbers sum to 1. The notation looks a bit hectic, but e-03 means the decimal point moves 3 places to the left, so 8.29268754e-03 is 0.00829268754. This Softmax activation function has taken arbitrary numbers and turned them into floats between 0 and 1 in a way that preserves their order: bigger inputs get bigger probabilities. You might imagine how extremely useful this is when wanting to use statistical methods on such values.
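If you want to check those numbers yourself, here is a small sketch of Softmax using only Python’s standard library. Subtracting the largest value before exponentiating is a common numerical-stability trick I added; it doesn’t change the result.

```python
import math


def softmax(values):
    """Turn a list of numbers into positive values that sum to 1."""
    largest = max(values)  # subtracted only for numerical stability
    exps = [math.exp(v - largest) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


probs = softmax([1, 4, -3, 5.6])
print(probs)       # close to [0.00829, 0.16656, 0.00015, 0.82499]
print(sum(probs))  # 1, up to floating-point rounding
```

Notice that 5.6 is only a bit larger than 4, yet it takes over 80% of the probability. The exponent inside Softmax is what stretches the differences this way.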

There are other types of activation functions; one of the most used is ReLU (Rectified Linear Unit). It’s an extremely simple (yet extremely useful) activation function that takes any negative number and turns it to 0, and leaves any non-negative number as it is. Very simple, very useful. If I give the list [1, -3, 2] to ReLU, I get [1, 0, 2] back.
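In code, ReLU really is a one-liner. A minimal sketch, applied element by element to a list:

```python
def relu(values):
    """Replace every negative number with 0 and keep the rest unchanged."""
    return [max(0, v) for v in values]


print(relu([1, -3, 2]))  # [1, 0, 2]
```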

After scaring you with Softmax, you might have expected something more complicated, but as someone once told me “Luck is useful”. With this activation function, we got lucky.

The reason we need these activation functions is that a nonlinear relationship cannot be represented by linear calculations (fully connected layers). If for every hour I work I get $10, the amount of money I get is linear. If for every 5 hours of working straight, I get a 10% increase for the next 5 hours, the relationship is no longer linear. My salary won’t be the number of hours I work * fixed hourly salary. The reason we carry the burden of deep learning for more complicated tasks, such as text generation or computer vision, is that the relationships we are looking for are highly nonlinear. The word that comes after “I love” is not obvious and it’s not constant.
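Here is a tiny experiment showing why stacking linear layers alone isn’t enough. The two “layers” are one-number toy functions I made up for illustration. Stacked directly, they still draw a straight line; with ReLU in between, the line bends.

```python
def layer_1(x):
    return 2 * x + 1  # a toy linear layer (made-up numbers)


def layer_2(x):
    return -3 * x + 4  # another toy linear layer


def relu(x):
    return max(0, x)


def stacked_linear(x):
    return layer_2(layer_1(x))  # equals -6 * x + 1, still one straight line


def stacked_with_relu(x):
    return layer_2(relu(layer_1(x)))


def steps(ys):
    """Differences between neighbouring outputs; constant steps mean a line."""
    return [b - a for a, b in zip(ys, ys[1:])]


xs = [-2, -1, 0, 1, 2]
linear_outputs = [stacked_linear(x) for x in xs]
relu_outputs = [stacked_with_relu(x) for x in xs]

print(steps(linear_outputs))  # [-6, -6, -6, -6]
print(steps(relu_outputs))    # [0, -3, -6, -6]
```

No matter how many purely linear layers you stack, the result collapses into a single linear layer. The activation function in the middle is what lets the network represent something else.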

A great benefit of ReLU, perhaps what made it so commonly used, is that it’s very computationally cheap to calculate it on many numbers. When you have a small number of neurons (let’s say tens of thousands), computation isn’t super crucial. When you use hundreds of billions, like big LLMs do, a more computationally efficient way of crunching numbers might make the difference.

Regularization

The last concept we’ll introduce before explaining the (very simple) way it’s implemented in Transformers is dropout. Dropout is a regularization technique. Regu- what? Regularization. As algorithms are based on data and their task is to be as close to the training target as they can, it can sometimes be useful for someone with a big brain to just memorize stuff. As we are taught so skillfully in school, it isn’t always useful to learn complicated logic; we can sometimes just remember what we’ve seen, or remember something close to it. When was World War 2? Well… it was affected by World War 1, economic crises, angry people, etc…. which was around 1917 .. so let’s say 1928. Perhaps it’s just better to memorize the actual date.

As you might imagine, this isn’t good for Machine Learning. If we needed answers to questions we already had answers for, we wouldn’t need this crazy complicated field. We need a smart algorithm because we can’t memorize everything. We need it to make considerations on live inferences, we need it to kind of think. The general term for techniques used to make the algorithm learn, but not memorize, is Regularization. Out of these regularization techniques, one commonly used is dropout.

Dropout

What’s dropout? A rather simple (lucky us again) technique. Remember we said fully connected layers are, well, fully connected? Dropout shakes that logic. The dropout technique means turning the “connection strength” to 0, which means it won’t have any effect. Soldier “a” becomes completely useless for commander 1 as its input is turned to 0. No answer, not positive, not negative. On every layer where we add dropout, we randomly choose a number of neurons (how many is configured by the developer) and turn their connections to other neurons to 0. Each time, the commander is forced to ignore different soldiers, hence not being able to rely on memorizing any of them, as perhaps it won’t hear from them next time.
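A minimal sketch of dropout, under a few assumptions of mine. Common implementations zero a neuron’s output (which silences all of its outgoing connections at once) and scale the survivors up by 1 / (1 - drop probability) so the overall magnitude stays similar; that variant is often called inverted dropout. Dropout is normally switched off when the trained model is actually used. The function name and the numbers are illustrative only.

```python
import random


def dropout(values, drop_prob, rng):
    """Zero each value with probability drop_prob and rescale the survivors."""
    if not 0 <= drop_prob < 1:
        raise ValueError("drop_prob must be in [0, 1)")
    keep_prob = 1 - drop_prob
    return [
        v / keep_prob if rng.random() >= drop_prob else 0.0
        for v in values
    ]


rng = random.Random(0)            # seeded only to make the run repeatable
reports = [0.5, 1.0, -2.0, 0.25]  # what soldiers a, b, c, d report (made up)

# Each training step silences a different random subset of soldiers.
for step in range(3):
    print(dropout(reports, drop_prob=0.5, rng=rng))
```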

Back to Transformers!

We now have all the building blocks needed to understand what happens specifically in the Feed Forward layer. It will now be very simple.

This layer simply does 4 things:

1. Position-wise linear calculation: every position in the text (represented as vectors) is passed through a linear layer.

2. A ReLU calculation is done on the output of the linear calculation.

3. Another linear calculation is done on the output of the ReLU.

4. Finally, we apply dropout to the output of step 3.
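Putting the building blocks together, the whole layer can be sketched in plain Python. Treat this as an illustration under assumptions, not a reference implementation: the helper names, the tiny sizes (2 numbers per position, 3 hidden neurons), and all weights are made up, and I included bias terms because linear layers usually have them. Real models use far larger sizes and a tensor library.

```python
import random


def linear(x, weights, bias):
    """One linear layer: weighted sums of x plus a bias per output neuron."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def relu(x):
    return [max(0.0, v) for v in x]


def dropout(x, drop_prob, rng, training):
    """Inverted dropout; does nothing outside of training."""
    if not training or drop_prob == 0:
        return list(x)
    keep_prob = 1 - drop_prob
    return [v / keep_prob if rng.random() >= drop_prob else 0.0 for v in x]


def feed_forward(positions, w1, b1, w2, b2,
                 drop_prob=0.1, rng=None, training=False):
    """Apply linear -> ReLU -> linear -> dropout to each position separately."""
    rng = rng or random.Random()
    outputs = []
    for x in positions:  # the same weights are reused for every position
        hidden = relu(linear(x, w1, b1))         # steps 1 and 2
        projected = linear(hidden, w2, b2)       # step 3
        outputs.append(dropout(projected, drop_prob, rng, training))  # step 4
    return outputs


# Toy weights (hypothetical): 2 -> 3 -> 2
w1 = [[1.0, -1.0], [0.5, 0.5], [-1.0, 2.0]]
b1 = [0.0, 0.0, 0.0]
w2 = [[1.0, 0.0, -1.0], [0.0, 2.0, 1.0]]
b2 = [0.0, 0.0]

sentence = [[1.0, 2.0], [3.0, -1.0]]  # two positions, each a 2-number vector
result = feed_forward(sentence, w1, b1, w2, b2)
print(result)  # [[-3.0, 6.0], [4.0, 2.0]]
```

“Position-wise” shows up as the `for x in positions` loop: each position goes through the layer on its own, and positions never mix here.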

Boom. That is all there is to it. If you’re experienced with deep learning, this section was probably very easy for you. If you aren’t, you may have struggled a bit, but you came to understand an extremely important moving piece of deep learning.

In the next part, we’ll be speaking about the Decoder! Coming soon to a town near you.
