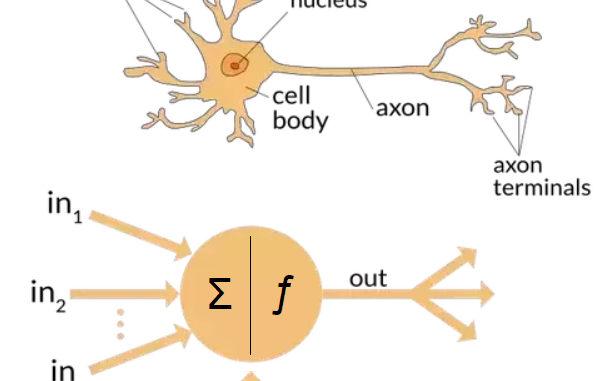

In simpler terms, a neural network (NN) is a type of artificial intelligence (AI) model loosely inspired by the structure and function of our brain.

In our brain, neurons receive signals through dendrites, process these signals in the cell body, and send outputs via axons. Similarly, in an NN, the input layer receives raw data, which is then processed through one or more hidden layers of nodes before the output layer produces the result.

Each node in a neural network acts like a brain cell. It combines the signals it receives and, based on an activation rule, decides how strongly to pass information on to the next layer. The connections between nodes are like pathways in the brain: each has its own strength (its weight), which affects how information flows.
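A single node can be sketched in a few lines. This is a minimal illustration, assuming the common formulation in which a node computes a weighted sum of its inputs plus a bias and passes it through a sigmoid function. The function name `neuron_output` and all numbers are made up for the example.

```python
import math

def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, squashed by a sigmoid into (0, 1)."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))

inputs = [0.5, -1.0, 2.0]  # signals arriving from the previous layer

# Same inputs, different connection strengths (illustrative values).
strong = neuron_output(inputs, weights=[0.8, 0.1, 1.5], bias=0.0)
weak = neuron_output(inputs, weights=[0.1, 0.1, 0.1], bias=0.0)

print(f"strong connections: {strong:.3f}, weak connections: {weak:.3f}")
```

With the same inputs, the node with stronger connections produces an output closer to 1, which is how connection strength shapes what gets passed on.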

Both brains and neural networks learn by changing these connections. In the brain, pathways become stronger or weaker. In neural networks, the strength of connections is adjusted during training.

Now that we understand the basic concept of neural networks, let’s dive deeper into their structure. A neural network (NN) is composed of several key components, organized into layers, that work together to process information and learn from data. Here’s an overview:

1. Layers:

Input Layer: This is the first layer that receives the raw data. Each node (or neuron) in this layer represents a feature of the input data.

Hidden Layers: These are intermediate layers between the input and output layers. They perform computations and transformations on the input data. There can be multiple hidden layers, and the network is termed “deep” when it has many of these layers. Each hidden layer consists of nodes that apply activation functions to the inputs they receive.

Output Layer: This is the final layer that produces the output of the network. The number of nodes in this layer corresponds to the number of prediction classes or regression outputs.

2. Nodes (Neurons):

Each layer consists of nodes, which are the basic units of computation. Nodes receive input, process it, and pass it to the next layer.

3. Connections (Weights):

Nodes in one layer are connected to nodes in the next layer through weights. These weights determine the strength and significance of the connections. During training, the network adjusts these weights to minimize the error in its predictions.
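As a rough sketch of how weights connect layers, the example below stores the connections between two layers as a matrix and pushes a small batch through a network with one hidden layer. The layer sizes, variable names, and random values are assumptions for illustration, and biases are left out for brevity.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

# Hypothetical layer sizes: 4 input features, 5 hidden nodes, 3 outputs.
n_inputs, n_hidden, n_outputs = 4, 5, 3

x = rng.normal(size=(2, n_inputs))                 # batch of 2 samples
W_hidden = rng.normal(size=(n_inputs, n_hidden))   # one weight per input-hidden connection
W_output = rng.normal(size=(n_hidden, n_outputs))  # one weight per hidden-output connection

hidden = np.maximum(0.0, x @ W_hidden)  # weighted sums followed by ReLU
output = hidden @ W_output              # weighted sums of the hidden activations

print("input -> hidden weights:", W_hidden.shape)
print("hidden -> output weights:", W_output.shape)
print("output for the batch:", output.shape)
```

Each entry of a weight matrix is the strength of one connection, so a fully connected layer with 4 inputs and 5 nodes has 4 × 5 = 20 weights.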

4. Bias:

Each node, except those in the input layer, has an associated bias. The bias allows the activation function to be shifted to the left or right, which can help the model fit the data better.
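The effect of the bias can be seen on a single sigmoid node. The sketch below assumes a node that computes `sigmoid(w * x + b)`; the weight, inputs, and bias values are illustrative.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

x = np.array([-2.0, 0.0, 2.0])
w = 1.0  # illustrative weight

for b in (-2.0, 0.0, 2.0):
    # The output crosses 0.5 where w * x + b == 0, i.e. at x = -b / w,
    # so a positive bias shifts the curve left and a negative bias shifts it right.
    outputs = sigmoid(w * x + b)
    print(f"bias={b:+.1f} -> outputs at x=-2, 0, 2: {np.round(outputs, 3)}")
```

Changing only the bias moves the point at which the node switches from a low to a high output, without changing the steepness set by the weight.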

5. Activation Functions:

Activation functions are applied to the weighted sum computed by each node to introduce non-linearity into the network. Without them, a stack of layers would behave like a single linear transformation. Common activation functions include ReLU (Rectified Linear Unit), sigmoid, and tanh.
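The three activation functions named above can be written directly in NumPy. The sketch also checks, on random illustrative matrices, that two layers with no activation between them are equivalent to one linear layer, which is why the non-linearity matters.

```python
import numpy as np

def relu(z):
    return np.maximum(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

z = np.array([-2.0, 0.0, 2.0])
print("relu:   ", relu(z))
print("sigmoid:", np.round(sigmoid(z), 3))
print("tanh:   ", np.round(np.tanh(z), 3))

# Two stacked linear layers without an activation collapse into a single one.
rng = np.random.default_rng(seed=0)
x = rng.normal(size=(5, 3))
W1 = rng.normal(size=(3, 4))
W2 = rng.normal(size=(4, 2))
collapsed = bool(np.allclose((x @ W1) @ W2, x @ (W1 @ W2)))
print("two linear layers equal one:", collapsed)
```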

6. Learning and Training:

During training, the network uses backpropagation to compute how much each weight and bias contributes to the error, and an optimization technique such as gradient descent to adjust them accordingly. The goal is to minimize the difference between the predicted outputs and the actual outputs (the error).
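To illustrate the training loop, here is a minimal sketch of gradient descent on the smallest possible "network": one node with one weight and one bias, fitted with a mean squared error loss. The toy data, learning rate, and step count are assumptions chosen for the example. In a multi-layer network, backpropagation would compute these gradients layer by layer using the chain rule; here they are simple enough to write by hand.

```python
import numpy as np

# Toy data generated from y = 2x + 1 (values chosen for illustration).
x = np.array([0.0, 1.0, 2.0, 3.0])
y = 2.0 * x + 1.0

w, b = 0.0, 0.0      # arbitrary starting parameters
learning_rate = 0.1

for step in range(500):
    pred = w * x + b                   # forward pass
    error = pred - y
    loss = np.mean(error ** 2)         # mean squared error
    grad_w = 2.0 * np.mean(error * x)  # d(loss)/dw
    grad_b = 2.0 * np.mean(error)      # d(loss)/db
    w -= learning_rate * grad_w        # step against the gradient
    b -= learning_rate * grad_b

print(f"w={w:.3f}, b={b:.3f}, loss={loss:.6f}")
```

After enough steps, `w` and `b` should approach the values 2 and 1 used to generate the data, and the loss should approach zero.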

Here is a basic Python example that shows how to load data (the MNIST handwritten digit dataset) and then build, train, and evaluate a neural network using TensorFlow and Keras.

```python
# Import libraries
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, Flatten
from tensorflow.keras.datasets import mnist
from tensorflow.keras.utils import to_categorical

# Load the MNIST dataset
(x_train, y_train), (x_test, y_test) = mnist.load_data()

# Normalize the images to values between 0 and 1
x_train, x_test = x_train / 255.0, x_test / 255.0

# One-hot encode the labels
y_train = to_categorical(y_train, 10)
y_test = to_categorical(y_test, 10)

# Build the neural network model
model = Sequential([
    Flatten(input_shape=(28, 28)),    # Flatten each 28x28 image into a 1D vector of 784 values
    Dense(128, activation='relu'),    # Hidden layer with 128 neurons and ReLU activation
    Dense(10, activation='softmax')   # Output layer with 10 neurons (one per digit class) and softmax activation
])

# Compile the model
model.compile(optimizer='adam',
              loss='categorical_crossentropy',
              metrics=['accuracy'])

# Train the model
model.fit(x_train, y_train, epochs=5)

# Evaluate the model on the test dataset
test_loss, test_acc = model.evaluate(x_test, y_test)
print(f'Test accuracy: {test_acc:.4f}')
```

While this example provides a solid foundation, there are many ways to extend and improve the model:

- Deeper Networks: Experiment with adding more hidden layers to create deeper networks that can learn more complex features.

- Regularization Techniques: Add dropout to reduce overfitting, and consider batch normalization, which mainly stabilizes training but can also have a mild regularizing effect.

- Advanced Architectures: Explore other architectures, such as Convolutional Neural Networks (CNNs) for image-related tasks or Recurrent Neural Networks (RNNs) for sequential data.
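To give a feel for what dropout, one of the regularization techniques listed above, does during training, here is a small NumPy sketch of the widely used "inverted dropout" variant. It is an illustration of the idea rather than the code a framework runs internally; the function name `dropout`, the rate of 0.2, and the stand-in activations are assumptions.

```python
import numpy as np

def dropout(activations, rate, rng):
    """Inverted dropout: zero a random fraction `rate` of units and rescale the rest."""
    keep_prob = 1.0 - rate
    mask = rng.random(activations.shape) < keep_prob  # True for units that are kept
    return activations * mask / keep_prob

rng = np.random.default_rng(seed=0)
hidden = np.ones((1000, 128))  # stand-in for the outputs of a 128-node hidden layer
dropped = dropout(hidden, rate=0.2, rng=rng)

print(f"fraction zeroed: {np.mean(dropped == 0):.3f}")
print(f"mean activation: {dropped.mean():.3f}")
```

Roughly a fifth of the units are silenced on each pass, while the rescaling keeps the average activation about the same. Dropout is applied only during training; at prediction time all units stay active.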

In this article, we have gone from the basic concepts of neural networks to a practical implementation. As the MNIST example shows, even a simple neural network can achieve impressive results. Keep experimenting and exploring more advanced techniques to enhance your models further.
