Imagine you’re building a model to predict whether a passenger on the Titanic survived. It’s a straightforward task — binary classification. You start with a few layers of neurons stacked on each other, one after the other, and connect them in a simple chain. Data flows from the input, through the hidden layers, and finally to the output, predicting survival or non-survival. You think, This is easy! The Sequential API is perfect for this kind of job.

So, you quickly create your model using the Keras Sequential API:

from keras.models import Sequential

from keras.layers import Dense, Input

# A simple binary classification model using the Sequential API

linear_model = Sequential([

Input((X_train.shape[1],)),

Dense(32, activation='relu'),

Dense(16, activation='relu'),

Dense(1, activation='sigmoid') # Output layer for survival prediction

])

linear_model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])

It works like a charm. The Sequential API is, by nature, linear. It lets you stack layers one after the other — no fuss, no confusion. You can think of it as building a simple ladder. Climb one rung, then the next, until you reach the top.

But as you get more curious, you realize that predicting survival isn’t your only interest. You’re also curious about age. Can the same model predict both survival and age? Now, things get complicated.

Suddenly, your Sequential model doesn’t cut it anymore. You need a model that can split into two paths: one predicting age as a continuous value and the other predicting survival as a binary classification. It’s like a road with a fork: the data travels down the same road at first but then splits into two paths. The Sequential API, however, only supports one road, so what now?

This is where the Keras Functional API comes in!

The Functional API doesn’t just build a straight-line ladder; it allows you to craft a network. It’s more like building a highway system — roads can merge, split, and reconnect. With the Functional API, your model can have multiple outputs, shared layers, and paths that don’t follow the usual linear structure. The main idea is that a deep learning model is usually a directed acyclic graph (DAG) of layers. So, the functional API is a way to build graphs of layers.

In simpler terms, a single model can predict a continuous value, a binary value, a multi-class value, or even several regression values at the same time. Your imagination is the limit after you’ve mastered the Functional API.

Coming back to our Titanic dataset, to focus on the topic, I have only chosen numerical columns and a “gender” column to make it easier to understand; the rest of the columns have been dropped. Our goal here is to predict whether the passenger survived and what the passenger’s age was. So, let’s dive in!

Let’s read the Titanic dataset and apply some pre-processing for the model to train, all mundane stuff; if you have reached this far, you probably know all this.

# import

from keras.layers import Dense, Input

from keras.models import Model

from keras import layers

import pandas as pd

import numpy as np

import keras

from sklearn.model_selection import train_test_split

# get data

titanic = pd.read_csv("https://raw.githubusercontent.com/datasciencedojo/datasets/master/titanic.csv")

# Dropping columns which are not required

train = titanic.drop(columns=["PassengerId","Cabin","Name","Ticket","Pclass","Embarked"])

train.dropna(inplace=True)

"""

A new family_size column is created, which adds up

siblings/spouses (SibSp) and parents/children (Parch).

This might feel logically incorrect to some readers,

but the goal here is to focus on the Functional API, not feature engineering.

"""

train['family_size'] = train['SibSp'] + train['Parch']

train = train.drop(columns=["SibSp","Parch"])

# One-Hot Encoding for Sex of the passenger

train = pd.get_dummies(train,drop_first=True,dtype='int')

train.head()

As per Figure 1, our target values are Survived and Age. So, let’s put them on y and the rest on X.

X = train[['Fare','family_size','Sex_male']]

y = train[['Age','Survived']]

Per standard data science practice, we will split the dataset for training and testing.

X_train,X_test,y_train,y_test = train_test_split(X,y,test_size=0.2)

"""

I have performed scaling as well, but have skipped the explanation

as the article was becoming too long; it is standard practice to

feed scaled data to deep learning models.

"""

# Scaling the values

from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()

# fit_transform learns the mean and standard deviation from X_train and

# returns the scaled values as a NumPy array, a format model.fit() accepts.

# The test set is transformed with the same training statistics.

X_train_scaled = scaler.fit_transform(X_train)

X_test_scaled = scaler.transform(X_test)
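Since the scaling step is only mentioned in passing, here is a minimal pure-Python sketch of what "fit on train, transform on test" means. The fare values are made up for illustration, and the code only mimics the idea behind StandardScaler (subtract the training mean, divide by the training standard deviation); it is not the library's implementation.

```python
from statistics import mean, pstdev

# Hypothetical fare values, split into a training part and a test part.
train_fares = [7.25, 71.28, 8.05, 53.10]
test_fares = [13.00, 30.00]

# "fit": learn the mean and standard deviation from the training data only.
mu = mean(train_fares)
sigma = pstdev(train_fares)  # population standard deviation (ddof=0)

# "transform": apply the same training statistics to both splits.
train_scaled = [(x - mu) / sigma for x in train_fares]
test_scaled = [(x - mu) / sigma for x in test_fares]

print("train mean after scaling:", round(mean(train_scaled), 6))
print("train std after scaling:", round(pstdev(train_scaled), 6))
print("scaled test values:", [round(x, 3) for x in test_scaled])
```

The test values are scaled with the training statistics on purpose, so no information from the test set leaks into preprocessing.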

X_train is similar to what you might have seen multiple times, but now things get pretty interesting, so stay focused! Notice how our y_train now has “Age” and “Survived”, not only one column but TWO. I was fascinated by this as well!

Now, let’s jump into the central part; we will create a model using Keras to help us predict survival and age AT THE SAME TIME!

# This is the input layer.

# here input shape will be 3, as we have three columns in X_train

# family_size, sex_male and Fare

input_layer = Input(shape=(X_train.shape[1],))

# We are using two stacked hidden layers with the standard ReLU activation

hidden1 = Dense(32,activation=keras.activations.relu)(input_layer)

hidden2= Dense(16,activation=keras.activations.relu)(hidden1)

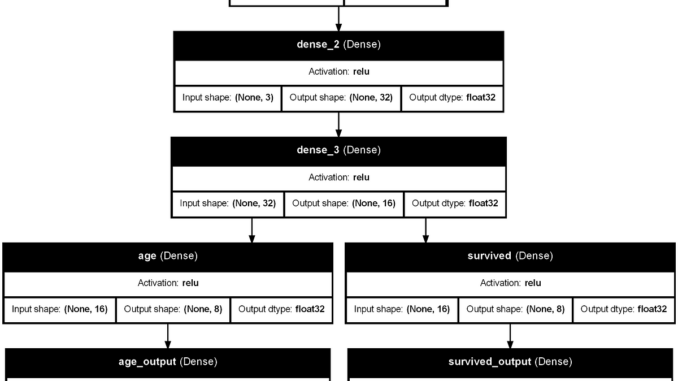

The input_layer is the input for the model. You can have multiple inputs as well. We have created two hidden layers, hidden1 and hidden2. Notice how input_layer is passed to hidden1, and hidden1 is passed to hidden2, creating a linear stack of layers. Our model currently looks something like this, as per Figure 4.

Now, let’s examine the output layers, two in this case: one for regression and one for binary classification; this is where it gets interesting!

# Output 1 (Age Regression)

output_layer_age = Dense(1,activation=keras.activations.linear,name="age_output")(hidden2)

# Output 2 (Survival Classification)

output_layer_survived = Dense(1,activation=keras.activations.sigmoid,name="survived_output")(hidden2)

Here we create two output layers. output_layer_age uses a linear activation, which passes the layer’s value through unchanged, so the model can output any real number for age. output_layer_survived uses a sigmoid activation, which outputs the probability of survival. Both layers are connected to the same hidden2 layer, so they share everything up to that point and then branch off as separate outputs. You can also give each output its own stack of layers; I have attached an example where I have created more layers to show a more complex example.
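To make the difference between the two output activations concrete, here is a small pure-Python sketch. The functions linear and sigmoid below are hand-written stand-ins for illustration, not the Keras implementations.

```python
import math


def linear(z):
    """Identity activation: the layer's weighted sum is returned unchanged."""
    return z


def sigmoid(z):
    """Squash any real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


# The same raw values pushed through both activations.
for z in (-4.0, 0.0, 4.0):
    print(f"z={z:+.1f}  linear={linear(z):+.1f}  sigmoid={sigmoid(z):.3f}")
```

That is why the age head can output something like 29.5, while the survival head always stays between 0 and 1 and can be read as a probability.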

Notice the name parameter given to the output layers. It’s crucial to name the output layers (age_output and survived_output in this example): the names are how we tell the model which loss, metric, and target belong to which output when we compile and fit the model, which is the next step.

The final model architecture is shown in Figure 5; notice the separation of layers.
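The idea of giving each output its own stack of layers can be sketched as follows. This is only an illustration, not the attached example: the layer sizes, the branch variable names, and the hard-coded input shape of 3 (the three feature columns used in this article) are my assumptions.

```python
import numpy as np
from keras.layers import Dense, Input
from keras.models import Model

# One shared trunk, assuming three input features as in this article.
inputs = Input(shape=(3,))
shared = Dense(32, activation="relu")(inputs)

# Branch 1: extra layer used only by the age (regression) output.
age_branch = Dense(16, activation="relu")(shared)
age_output = Dense(1, activation="linear", name="age_output")(age_branch)

# Branch 2: extra layer used only by the survival (classification) output.
survived_branch = Dense(16, activation="relu")(shared)
survived_output = Dense(1, activation="sigmoid", name="survived_output")(survived_branch)

branched_model = Model(inputs=inputs, outputs=[age_output, survived_output])

# Untrained forward pass on dummy data, just to check the wiring.
dummy_batch = np.zeros((4, 3), dtype="float32")
dummy_predictions = branched_model.predict(dummy_batch, verbose=0)
print([p.shape for p in dummy_predictions])
```

Everything before the fork is learned jointly for both tasks, while each branch is free to specialise for its own target.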

Now, we create our model.

# creating the model

model = Model(inputs=[input_layer],outputs=[output_layer_age,output_layer_survived])

Here, inputs is a list, so you can provide multiple inputs; for this example, we are using only one, input_layer. For outputs, we have passed a list of two values, output_layer_age and output_layer_survived. These are the two values our model will return at prediction time, in the same order as the outputs list; I have passed the age layer first, so the age prediction comes first.

Finally, we compile our model. Notice how loss and metrics are passed as dictionaries whose keys are the names we gave the output layers through the name parameter; that’s why naming them matters: it tells the model which loss and metric belong to which output.

# the dictionary keys are the same name of the output layers discussed above.

# For regression MAE is used

# For classification, since it is binary classification,

# we are using binary_crossentropy for loss and accuracy for metrics.

model.compile(

optimizer="adam",

loss={

"age_output":"mae",

"survived_output":"binary_crossentropy"

},

metrics={

"age_output":"mae",

"survived_output":"accuracy"

})
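With two losses, it helps to know what the optimizer actually minimises. As far as I know, Keras combines the per-output losses into one number by taking their weighted sum, with every weight defaulting to 1 unless you pass loss_weights to compile(). The pure-Python sketch below mimics that with made-up numbers; the helper functions are hand-written for illustration and are not the Keras implementations.

```python
import math


def mean_absolute_error(y_true, y_pred):
    """Average absolute difference between targets and predictions."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def binary_crossentropy(y_true, y_prob, eps=1e-7):
    """Average negative log-likelihood for 0/1 targets."""
    total = 0.0
    for t, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(y_true)


# Made-up targets and predictions for three passengers.
age_true, age_pred = [22.0, 38.0, 26.0], [30.0, 30.0, 30.0]
surv_true, surv_prob = [0, 1, 1], [0.4, 0.6, 0.5]

age_loss = mean_absolute_error(age_true, age_pred)
surv_loss = binary_crossentropy(surv_true, surv_prob)

# Illustrative weights; 1.0 for each output matches the default behaviour.
loss_weights = {"age_output": 1.0, "survived_output": 1.0}
total_loss = (loss_weights["age_output"] * age_loss
              + loss_weights["survived_output"] * surv_loss)

print("age loss:", round(age_loss, 3))
print("survival loss:", round(surv_loss, 3))
print("total loss:", round(total_loss, 3))
```

Because the age error is measured in years, it can easily dwarf the cross-entropy term. If survival accuracy stalls, lowering the weight of age_output through loss_weights is one thing to try.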

Alright, one more thing to do before we can finally run our beloved model.fit(). Traditionally, y_train has only one column, so it is pretty easy to use model.fit(X_train,y_train); in this case, however, y_train has multiple columns, so we need to restructure it so that our model understands which output each y_train column belongs to. Ah, well, it’s easy to do, so here it is!

# Notice again the keys of the dictionary are the same as

# the name attribute which we gave to the output layers

y_train_dict = {

"age_output": y_train['Age'], # Target data for age

"survived_output": y_train['Survived'] # Target data for survived

}

This mapping tells our model which column to use for which target while training; it works for any number of target columns!!

Isn’t it magical? One model to predict multiple target columns!!

Alright, let’s JUMP to the favourite part: TRAINING!!!

Fitting is as straightforward as you are used to. Just pass X_train_scaled and y_train_dict. Notice how the model knows exactly which column to use for which task. That is the magic of naming the output layers and using the same names consistently in the loss and metrics at compile time and in the targets at fit time. Pay attention to the names of your output layers!!

# Notice that X_train_scaled is passed and the dictionary we created

# for y_train called y_train_dict for target columns

model.fit(X_train_scaled,y_train_dict,epochs=20,validation_split=0.2)

All right, all right, all right, this is all great, but what about the prediction? How will I know what prediction the model gives, and how do I know what is for age and what is for surviving? Well, worry not; we will discuss that in detail now!

The Prediction Chapter of the Multi-Output Functional Model

Now that we have fitted our model, it’s time to take it for a test drive, eh!

Prediction works the way we are used to, with model.predict(). Traditionally, with a linear (Sequential) model, we get only one output, but here we get two: one for age and another for the survived target.

# Predictions

predictions = np.array(model.predict(X_test_scaled))

predictions.shape # (2,143,1)

One thing to note here is that model.predict(x_test) typically returns a numpy array (see https://keras.io/api/models/model_training_apis/), but with multiple outputs, it returns a list of arrays, one per output. We can easily convert that list to a single numpy array, as shown above; this works here because both outputs have the same shape.

This shape can be confusing the first time you see it, so let me clarify. Remember the output layers we defined earlier (see Figure 5)? Each of them produces a single value: one is the age and the other is the survival prediction. That’s what this shape is telling us.

The prediction shape of (2, 143, 1) in our predictions means the following:

- 2: This represents the two different outputs from our model — one for predicting age and the other for predicting survival.

- 143: This is the number of test samples; since we used a test_size of 0.2, the test data has 143 rows.

- 1: This indicates that each prediction for both outputs is a single value. For survival, it’s a probability (between 0 and 1), and for age, it’s a continuous value.

Now that we understand the shape, let’s look at the actual predictions.

We will get two arrays of predictions, in the order in which the outputs parameter of the model is defined, as I explained earlier.

So, in our case, predictions[0] will give us a numpy array of predicted ages, and predictions[1] will give us the probability of surviving, as this is the order of outputs we defined in our model (see Figure 8 and Figure 9).

Similarly, if you have more than two outputs, you will get arrays of predictions based on the number of target columns you have used.
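To turn the raw predictions into something readable, a sketch like the following can help. The raw_predictions list is a hand-made stand-in for what model.predict() returns here (one array of shape (n_samples, 1) per output, age first), and the 0.5 cut-off is a common convention I am assuming, not something the model dictates.

```python
import numpy as np

# Stand-in for model.predict(X_test_scaled): one (n_samples, 1) array per
# output, in the order [age_output, survived_output]. Values are made up.
raw_predictions = [
    np.array([[29.4], [41.0], [18.7]]),  # predicted ages
    np.array([[0.12], [0.83], [0.50]]),  # survival probabilities
]

# Flatten each (n_samples, 1) array into a plain 1-D vector.
predicted_age = raw_predictions[0].ravel()
survival_probability = raw_predictions[1].ravel()

# Turn probabilities into 0/1 labels; strictly above 0.5 counts as survived.
predicted_survived = (survival_probability > 0.5).astype(int)

for age, prob, label in zip(predicted_age, survival_probability, predicted_survived):
    print(f"age={age:.1f}  p(survived)={prob:.2f}  survived={label}")
```

The same pattern extends to more outputs: index the list by output position, flatten, and post-process each task in whatever way fits it.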

So that’s how you use Keras’s Functional API. I hope you enjoyed the read. Feel free to follow along. The whole code is linked below. Trust me, your imagination is the limit to the complexity and flexibility that you can achieve with this power.

Until next time, eh?
