“Our freedom of speech is freedom or death, we got to fight the powers that be!”-Chuck D

In the fervent anticipation of the official announcement of the president-elect by Indonesia’s General Elections Commission (KPU), social media, notably Twitter, has become a vital outlet for citizens to voice their opinions. The platform hosts critiques of the government alongside a diverse array of arguments from supporters of candidate pairs 01 (Anies Baswedan), 02 (Prabowo Subianto), and 03 (Ganjar Pranowo).

Amid this diversity, the sheer volume of heated expression risks shaping public perception. This project therefore builds an automated sentiment classification system. It uses Natural Language Processing (NLP) and the TensorFlow library to perform Twitter sentiment analysis.

Natural Language Processing (NLP) is a branch of artificial intelligence, closely tied to machine learning, that enables machines to interpret, manipulate, and understand human language. It is widely used to analyze the meaning or sentiment embedded in messages, which makes it possible to respond to human communication in real time.

The dataset used to train, test, and develop the model in this project was sourced from Twitter discussions about Joko Widodo (Jokowi), a candidate in the 2019 presidential election. The dataset can be accessed via the following link: Twitter Sentiment Data for Joko Widodo. It comprises three key columns: “score,” “text,” and “class.”

- Score: This column denotes the sentiment score assigned to each tweet, providing a quantitative measure of the sentiment expressed.

- Text: The “text” column contains the actual content of the tweets, serving as the textual input for the sentiment analysis.

- Class: The “class” column categorizes the sentiment expressed in each tweet, offering a categorical label such as positive, negative, or neutral.

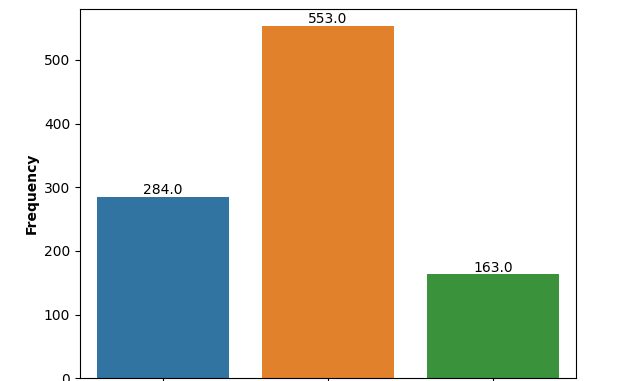

The dataset comprises 1,000 tweets and reflects the sentiment toward Jokowi on Twitter in 2019. Of these, 284 tweets are negative, 553 are neutral, and 163 are positive. The classes are therefore imbalanced, with neutral tweets making up more than half of the data.
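The short sketch below expresses the class balance as proportions. It is only arithmetic on the counts stated above. The majority-class share is a useful reference point: a classifier that always predicted "neutral" would already be right that often on this data.

```python
# Class counts reported above for the 1,000-tweet Jokowi dataset
counts = {"negative": 284, "neutral": 553, "positive": 163}

total = sum(counts.values())
shares = {label: n / total for label, n in counts.items()}

# Accuracy of a trivial baseline that always predicts the most frequent class
majority_label = max(counts, key=counts.get)
baseline_accuracy = shares[majority_label]

for label, share in shares.items():
    print(f"{label:>8}: {share:.1%}")
print(f"majority-class baseline ({majority_label}): {baseline_accuracy:.1%}")
```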

The first step in building an NLP sentiment analysis model is data preprocessing. Machine learning models operate on numbers, not raw text. Each sentence must therefore be split into tokens, and each token mapped to an integer. The Keras `Tokenizer` class, available in TensorFlow as `tf.keras.preprocessing.text.Tokenizer`, handles this conversion from text to numerical sequences.

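```python
from tensorflow.keras.preprocessing.text import Tokenizer

tokenizer = Tokenizer(
    num_words=1000,     # keep only the most frequent words when converting to sequences
    oov_token='<OOV>'   # placeholder for words outside the kept vocabulary
)
```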

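- `Tokenizer`: a Keras class, shipped with TensorFlow, for tokenizing text. By default it lowercases each sentence, removes most punctuation, and splits it into individual words (tokens).
- `num_words`: the maximum number of words to keep. Only words whose index is below `num_words` are used when texts are converted to sequences. That amounts to the `num_words - 1` most frequent entries, counting the OOV token, which bounds the vocabulary size the model has to handle.
- `oov_token`: a special token that stands in for out-of-vocabulary words, i.e. words not seen during fitting or outside the `num_words` limit, so they are not silently dropped.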
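To make `num_words` and `oov_token` concrete, here is a simplified pure-Python imitation of how the Keras `Tokenizer` assigns indices. It is an illustration, not the Keras source. The splitting rule (lowercase, keep runs of word characters) is simpler than Keras' default punctuation filters, and the example tweets are made up. As in Keras, index 0 is left free for padding and the OOV token takes index 1. Words are ranked by frequency, and any word whose index is not below `num_words` is replaced by the OOV index.

```python
import re
from collections import Counter

def simple_split(text):
    # Simplified tokenization: lowercase and keep runs of word characters
    return re.findall(r"\w+", text.lower())

def build_word_index(texts, oov_token="<OOV>"):
    counts = Counter()
    for text in texts:
        counts.update(simple_split(text))
    # Rank by frequency; sorted() is stable, so ties keep first-seen order
    ranked = sorted(counts, key=lambda w: counts[w], reverse=True)
    # Index 0 stays free for padding; the OOV token gets index 1
    return {word: i for i, word in enumerate([oov_token] + ranked, start=1)}

def texts_to_sequences(texts, word_index, num_words, oov_token="<OOV>"):
    oov_index = word_index[oov_token]
    sequences = []
    for text in texts:
        seq = []
        for word in simple_split(text):
            i = word_index.get(word)
            # Keep only indices below num_words; everything else becomes OOV
            seq.append(i if i is not None and i < num_words else oov_index)
        sequences.append(seq)
    return sequences

train_texts = ["jokowi menang lagi", "jokowi kalah"]  # made-up example tweets
word_index = build_word_index(train_texts)
print(word_index)  # {'<OOV>': 1, 'jokowi': 2, 'menang': 3, 'lagi': 4, 'kalah': 5}
print(texts_to_sequences(["jokowi kalah lagi", "prabowo menang"], word_index, num_words=4))
# [[2, 1, 1], [1, 3]]
```

With `num_words=4`, "lagi" and "kalah" are in the vocabulary but fall outside the limit, so they map to the OOV index. This is the same fate as the unseen word "prabowo".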

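```python
import numpy as np
from tensorflow.keras.preprocessing.sequence import pad_sequences

# Build the vocabulary from the training tweets only
tokenizer.fit_on_texts(sentences_train)
word_index = tokenizer.word_index

# Convert training tweets to integer sequences and pad/truncate them to 80 tokens
train_sequences = tokenizer.texts_to_sequences(sentences_train)
padded_sentences_train = pad_sequences(
    train_sequences,
    maxlen=80,
    truncating='post',
    padding='post'
)

# Apply the same (already fitted) tokenizer and padding to the test tweets
test_sequences = tokenizer.texts_to_sequences(sentences_test)
padded_sentences_test = pad_sequences(
    test_sequences,
    maxlen=80,
    truncating='post',
    padding='post'
)

# Encode the text labels as integers (Tokenizer indices start at 1)
label_tokenizer = Tokenizer()
label_tokenizer.fit_on_texts(labels)
labels_train = np.array(label_tokenizer.texts_to_sequences(labels_train))
labels_test = np.array(label_tokenizer.texts_to_sequences(labels_test))
```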

- `tokenizer`: the same tokenizer instance is used for both training and test data, so both are tokenized consistently. It is fitted on the training sentences only, so words that appear only in the test set map to the OOV token.
- `fit_on_texts`: builds the tokenizer's internal vocabulary from the given text data.
- `word_index`: a dictionary mapping each word to its index in the vocabulary.
- `texts_to_sequences`: converts text into sequences of integers using the vocabulary established by the tokenizer.
- `pad_sequences`: pads or truncates every sequence to the same length, here 80 tokens. This is required because the model expects inputs of uniform size.
- `label_tokenizer`: a separate tokenizer that converts the text labels into numerical representations.
- `texts_to_sequences` (for labels): converts each text label into a one-element integer sequence using the label tokenizer.

The resulting `padded_sentences_train`, `padded_sentences_test`, `labels_train`, and `labels_test` are now ready to be fed into the sentiment analysis model for training and evaluation.
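The effect of `padding='post'` and `truncating='post'` can be sketched in a few lines of plain Python. This is a simplified stand-in for `pad_sequences` that covers only these two settings and uses 0 as the padding value, which is the Keras default. It is not the library's implementation.

```python
def pad_post(sequences, maxlen, value=0):
    """Mimic pad_sequences(..., padding='post', truncating='post')."""
    padded = []
    for seq in sequences:
        # truncating='post': drop tokens beyond maxlen from the end
        seq = list(seq[:maxlen])
        # padding='post': append the padding value after the real tokens
        seq += [value] * (maxlen - len(seq))
        padded.append(seq)
    return padded

print(pad_post([[2, 1, 1], [1, 3], [5, 6, 7, 8, 9, 10]], maxlen=4))
# [[2, 1, 1, 0], [1, 3, 0, 0], [5, 6, 7, 8]]
```

With `'pre'` instead of `'post'`, the zeros would go in front and the truncation would remove tokens from the start of each tweet.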

The next step is building the sentiment analysis model itself. This is the core of the automated system, since it classifies whether each text leans toward positive, neutral, or negative sentiment. To do so, we construct a neural network that learns patterns from the text data.

```python
import tensorflow as tf

# vocab_size, embedding_dim and max_length are set earlier in the notebook (not shown here)
model = tf.keras.Sequential([
    tf.keras.layers.Embedding(vocab_size, embedding_dim, input_length=max_length),
    tf.keras.layers.GlobalAveragePooling1D(),
    tf.keras.layers.Dense(24, activation='relu'),
    tf.keras.layers.Dense(12, activation='relu'),
    tf.keras.layers.Dense(6, activation='sigmoid')
])
```

Embedding Layer:

- The first layer is the Embedding layer, which is crucial for converting words into dense vectors of fixed size.

GlobalAveragePooling1D Layer:

- Following the Embedding layer is the GlobalAveragePooling1D layer. It averages the embedding vectors across the sequence (time) dimension. Each padded tweet of shape (80, embedding_dim) becomes a single vector of length embedding_dim. This fixed-size summary simplifies the information passed to the downstream layers.

Dense Layers:

- The subsequent three Dense layers contribute to the neural network’s ability to learn intricate patterns and relationships in the data.

- The first Dense layer consists of 24 neurons with a rectified linear unit (ReLU) activation function, introducing non-linearity to the model.

- The second Dense layer comprises 12 neurons with ReLU activation, further enhancing the model’s capacity to capture complex features.

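- The third Dense layer, with 6 neurons and a sigmoid activation function, produces the final output scores. There are only three sentiment classes (positive, negative, neutral). Because the label tokenizer numbers the labels 1 to 3, the output layer needs at least four units, and the remaining units are never the target. Sigmoid outputs are not constrained to sum to 1. A three-unit softmax layer with labels shifted to 0 to 2 would therefore be the more conventional choice for single-label classification.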
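The layers listed above can be illustrated with a toy forward pass in plain Python. It shows what the Embedding and GlobalAveragePooling1D layers compute, and why softmax is the usual choice for a single-label, three-class output. The embedding table and logits are made-up numbers for illustration, whereas in the real model they are learned. The dense layers are skipped, so only the shape of the computation is shown.

```python
import math

# Hypothetical 2-dimensional embedding table: token index -> vector (index 0 = padding)
embedding_table = {0: [0.0, 0.0], 1: [0.5, -0.5], 2: [1.0, 0.0], 3: [0.0, 2.0]}

def embed(sequence):
    # Embedding layer: replace each token index with its vector
    return [embedding_table[i] for i in sequence]

def global_average_pool(vectors):
    # GlobalAveragePooling1D: average over the sequence (time) axis.
    # Padding positions are included here, as they are in Keras when no mask is used.
    n = len(vectors)
    return [sum(v[d] for v in vectors) / n for d in range(len(vectors[0]))]

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sigmoid(x):
    return 1 / (1 + math.exp(-x))

pooled = global_average_pool(embed([2, 3, 0, 0]))
print(pooled)  # [0.25, 0.5]

logits = [2.0, 1.0, 0.1]  # made-up scores for three classes
print(sum(softmax(logits)))             # sums to 1 (up to rounding): one distribution over classes
print(sum(sigmoid(x) for x in logits))  # greater than 1: independent per-unit scores
```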

```python
model.compile(
    loss='sparse_categorical_crossentropy',
    optimizer=tf.keras.optimizers.Adam(learning_rate=0.01),
    metrics=['accuracy']
)
```

The compile method configures the model for training. It requires three main parameters:

- `loss`: the loss function measures how far the model's predictions are from the true labels. Here `'sparse_categorical_crossentropy'` is used, which suits integer-encoded labels.
- `optimizer`: the optimizer updates the model weights during training. Adam is a popular optimization algorithm, and the learning rate is set to 0.01.
- `metrics`: accuracy is tracked to monitor the model's performance during training.
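For a single example, sparse categorical cross-entropy is the negative log of the probability the model assigns to the true class index. The labels must therefore be integers that index into the output vector. The plain-Python sketch below illustrates this with made-up probabilities and labels. Because a Keras `Tokenizer` numbers labels from 1, the last lines show the shift to 0-based indices that a three-unit output would require.

```python
import math

def sparse_categorical_crossentropy(label_index, probabilities):
    # Loss for one example: -log(probability assigned to the true class)
    return -math.log(probabilities[label_index])

probs = [0.7, 0.2, 0.1]  # made-up softmax output over three classes
print(sparse_categorical_crossentropy(0, probs))  # about 0.357: confident and correct
print(sparse_categorical_crossentropy(2, probs))  # about 2.303: low probability on the true class

# Labels produced by a Tokenizer start at 1; shift them to 0..2 for a 3-unit output
tokenizer_labels = [1, 3, 2, 1]
shifted = [label - 1 for label in tokenizer_labels]
print(shifted)  # [0, 2, 1, 0]
```

Keras averages this per-example loss over each batch. The loss is small when the true class gets high probability and grows quickly as that probability approaches zero.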

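```python
# callbacks is a list of Keras callbacks (e.g. early stopping) defined beforehand
model.fit(
    padded_sentences_train,
    labels_train,
    epochs=1000,
    validation_data=(padded_sentences_test, labels_test),
    callbacks=callbacks
)
```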

The fit method trains the model on the provided training data. Key parameters include:

- The padded training sequences and `labels_train`: the training data and their corresponding labels.
- `epochs`: the maximum number of passes over the entire training dataset. It is set to 1000 here, which allows a long training run, although an early-stopping callback can end training sooner.
- `validation_data`: data used to evaluate the model's performance on unseen examples after each epoch (here, the test split).
- `callbacks`: functions called at set points during training. They are useful for actions such as early stopping to prevent overfitting.
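With `epochs=1000`, early stopping decides when training actually ends. Below is a simplified pure-Python sketch of the rule applied by `tf.keras.callbacks.EarlyStopping(monitor='val_loss', patience=...)` with its default `min_delta=0`. The article does not list the callbacks it used, so the loss values and the patience of 3 are invented for illustration.

```python
def early_stopping_epoch(val_losses, patience):
    """Return (stop_epoch, best_epoch), 0-based, for a 'stop when val_loss stops improving' rule."""
    best_loss = float("inf")
    best_epoch = 0
    wait = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch, wait = loss, epoch, 0  # improvement: reset the counter
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch  # no improvement for `patience` epochs
    return len(val_losses) - 1, best_epoch  # ran out of epochs without stopping

val_losses = [0.90, 0.70, 0.60, 0.62, 0.61, 0.65, 0.58]  # made-up validation losses
print(early_stopping_epoch(val_losses, patience=3))  # (5, 2)
```

Training stops at epoch 5 even though epoch 6 would have improved. With `restore_best_weights=True`, Keras would also roll the model back to the weights from the best epoch (epoch 2 here).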

After training, the model reaches 96% accuracy on the training data and 86% on the validation data. The 10-point gap between the two suggests some overfitting. Even so, 86% on held-out data is well above the roughly 55% share of the majority (neutral) class in the dataset, so the model classifies text sentiment effectively.

With the model trained, let's apply it to our main problem. To classify tweets about the 2024 Indonesian general election, we first need to gather a raw dataset. For this, we use the ntscraper library to scrape the tweet data.

```python
import pandas as pd
from ntscraper import Nitter

scraper = Nitter()

def get_tweets(query, modes, limit):
    # Fetch up to `limit` tweets matching `query` in the given mode ('term', 'hashtag' or 'user')
    tweets = scraper.get_tweets(query, mode=modes, number=limit)

    final_tweets = []
    for tweet in tweets['tweets']:
        # Keep link, text, date, like count and comment count for each tweet
        row = [tweet['link'], tweet['text'], tweet['date'],
               tweet['stats']['likes'], tweet['stats']['comments']]
        final_tweets.append(row)

    data = pd.DataFrame(final_tweets, columns=['Link', 'Tweet', 'Tanggal', 'Like', 'Comment'])
    return data
```

Library Import:

- Imports the `Nitter` scraper from the `ntscraper` library, which interacts with Nitter, an alternative front end for Twitter.

Scraper Initialization:

- An instance of the Nitter scraper is created with `scraper = Nitter()`.

Function Definition (`get_tweets`):

- This function retrieves tweet data based on three parameters: `query` (the search query), `modes` (the search mode: `'user'`, `'hashtag'`, or `'term'`), and `limit` (the number of tweets to retrieve).

Data Extraction Loop:

- The function uses the scraper to obtain tweets and iterates through them. For each tweet it extracts the link, text, date, number of likes, and number of comments.

DataFrame Creation:

- The extracted information is then organized into a pandas DataFrame named `data`, with one column per tweet attribute (`Tanggal` is Indonesian for "date").
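Scraping depends on live Nitter instances, so it can help to check the row-extraction logic offline. The sketch below applies the same field extraction as `get_tweets` to a hand-made dictionary shaped like the scraper result used above: a `'tweets'` list whose items have `'link'`, `'text'`, `'date'` and a `'stats'` dict. The sample values are invented. The sketch uses plain lists instead of a DataFrame so it runs without pandas, and `extract_rows` is a hypothetical helper name.

```python
def extract_rows(result):
    # Same fields, in the same order, as the DataFrame columns in get_tweets
    rows = []
    for tweet in result["tweets"]:
        rows.append([
            tweet["link"],
            tweet["text"],
            tweet["date"],
            tweet["stats"]["likes"],
            tweet["stats"]["comments"],
        ])
    return rows

# Invented sample shaped like the scraper result consumed by get_tweets
sample_result = {
    "tweets": [
        {
            "link": "https://example.com/status/1",
            "text": "contoh tweet",
            "date": "2024-02-15",
            "stats": {"comments": 2, "retweets": 0, "quotes": 0, "likes": 10},
        }
    ]
}

columns = ["Link", "Tweet", "Tanggal", "Like", "Comment"]
print(dict(zip(columns, extract_rows(sample_result)[0])))
```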

After scraping, we have three datasets, one per candidate. Before the model can classify them, the tweets must go through the same preprocessing as the training data. They are converted to sequences with the tokenizer already fitted on the training tweets and padded to the same length of 80 tokens, so the inputs match what the model was trained on.

Once the candidate datasets are preprocessed, we can run the model on them:

```python
# Each input is a padded sequence array prepared as described above
predictions_anies = model.predict(sentences_predict_anies)
predictions_prabowo = model.predict(sentences_predict_prabowo)
predictions_ganjar = model.predict(sentences_predict_ganjar)
```
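`model.predict` returns one row of output scores per tweet. Turning these into sentiment counts per candidate therefore takes an argmax and a tally, shown below in plain Python. The `index_word` mapping is hypothetical. In the real pipeline it would come from `label_tokenizer.index_word`, and its order depends on how the labels were written in the training data. The score rows are made up, and `summarize` is an illustrative helper name.

```python
from collections import Counter

# Hypothetical label mapping; in practice read it from label_tokenizer.index_word
index_word = {1: "neutral", 2: "negative", 3: "positive"}

def summarize(predictions):
    # Pick the highest-scoring output unit for each tweet, then count labels
    labels = []
    for row in predictions:
        best_index = max(range(len(row)), key=lambda i: row[i])
        labels.append(index_word.get(best_index, "unknown"))
    return Counter(labels)

# Made-up scores from a 6-unit output layer (units 0, 4 and 5 are not label indices)
sample_predictions = [
    [0.01, 0.80, 0.10, 0.05, 0.02, 0.02],
    [0.01, 0.10, 0.70, 0.15, 0.02, 0.02],
    [0.01, 0.20, 0.05, 0.70, 0.02, 0.02],
    [0.01, 0.60, 0.30, 0.05, 0.02, 0.02],
]
print(summarize(sample_predictions))
```

Applied to `predictions_anies`, `predictions_prabowo` and `predictions_ganjar`, the same tally gives the positive, neutral and negative counts for each candidate.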

Presented here are the outcomes of the sentiment predictions for the three presidential candidates, based on tweets from the aftermath of an extensive series of quick counts in Indonesia.

For a deeper look at the project, I invite you to explore the full documentation in my GitHub repository.
