Today, we’ll be using the MNIST (Modified National Institute of Standards and Technology) dataset to build an ML model that classifies handwritten digits. The MNIST dataset consists of images of handwritten digits from 0–9 and is widely used for training image processing systems. We’ll be using TensorFlow to build the model. The documentation for the dataset can be found here: https://www.tensorflow.org/datasets/catalog/mnist

1. Importing the needed libraries:

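```python
import numpy as np
import pandas as pd          # not used in the steps below
import matplotlib.pyplot as plt
import random                # not used in the steps below
import tensorflow as tf
from tensorflow.keras import datasets, layers, models
import tensorflow_datasets as tfds  # separate package (pip install tensorflow-datasets); not used below
```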

2. Loading the dataset, which comes already split into training and testing sets:

In case you’re wondering how I figured out the structure of the returned tuples, I referred to the official documentation for `load_data()`. It can be found here: https://www.tensorflow.org/api_docs/python/tf/keras/datasets/mnist/load_data

```python
# Returns two tuples of NumPy arrays: (images, labels) for training and for testing
(xtrain, ytrain), (xtest, ytest) = tf.keras.datasets.mnist.load_data()
```

3. Displaying the images present in the dataset:

```python
for i in range(9):
    ax = plt.subplot(3, 3, i + 1)  # rows, columns, and 1-based subplot index
    plt.imshow(xtrain[i], cmap=plt.get_cmap('gray'))
    plt.title("Number {}".format(ytrain[i]))
    plt.axis('off')
plt.show()
```

We display 9 images from the dataset. I’m going to briefly mention the parameters present in the code above:

- plt.subplot() takes 3 parameters: the number of rows, the number of columns, and the index of the current subplot (starting at 1, which is why we pass i+1).

- The images are stored in the xtrain array we created in step 2.

- ‘cmap’ stands for colormap. The ‘gray’ colormap displays the digits as grayscale images.

- We give each image a title, which is the digit shown in it. These labels are stored in the ytrain array we created in step 2.

- Finally, we don’t want the axes to be shown when our images are displayed, so we turn them ‘off’.

Before we move to step 4, let us talk about ‘One Hot Encoding’.

Most machine learning models work with numerical data, not categorical data. Consider a dataset with a Gender attribute that has 2 categories, Male and Female. Instead of passing the string labels, we create an array with 2 columns. If a person is male, the first column is 1 and the second column is 0; if a person is female, the first column is 0 and the second column is 1.

Gender = [<Male>,<Female>]

Karan : [1,0]

Sonakshi: [0,1]

In any such array, only one column holds the value 1, and the rest of the entries are 0.
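The Gender example above can be reproduced in a few lines of NumPy. This is a minimal sketch: the `categories` list and the `people` dictionary are hypothetical names chosen for illustration, and the trick it relies on is that row i of an identity matrix has a single 1 in column i.

```python
import numpy as np

# Categories in a fixed order: column 0 = Male, column 1 = Female
categories = ["Male", "Female"]
people = {"Karan": "Male", "Sonakshi": "Female"}  # hypothetical example data

# Map each string label to its column index
indices = np.array([categories.index(g) for g in people.values()])

# Row i of the identity matrix has a single 1 in column i,
# so indexing np.eye with the label indices yields one-hot rows
one_hot = np.eye(len(categories), dtype=int)[indices]

for name, row in zip(people, one_hot):
    print(name, row.tolist())
# Karan [1, 0]
# Sonakshi [0, 1]
```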

4. One-Hot Encoding the labels:

Instead of keeping the labels as integers from 0 to 9, we one-hot encode them into vectors of length 10. Remember that the labels are stored in the ytrain and ytest arrays created in step 2.

```python
ytrain = tf.keras.utils.to_categorical(ytrain)
ytest = tf.keras.utils.to_categorical(ytest)
```
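To see what `to_categorical` does to the digit labels, here is a rough NumPy stand-in. The function name `to_one_hot` and the sample labels are hypothetical; the sketch mimics the documented behaviour that, when `num_classes` is not given, the number of classes is inferred as the largest label plus one (10 for the digits 0 to 9), and that the result is a float32 array.

```python
import numpy as np

def to_one_hot(labels, num_classes=None):
    """Hypothetical NumPy stand-in for tf.keras.utils.to_categorical."""
    labels = np.asarray(labels, dtype=int)
    if num_classes is None:
        # Like to_categorical, infer the class count from the largest label
        num_classes = labels.max() + 1
    one_hot = np.zeros((labels.size, num_classes), dtype="float32")
    # Put a 1 in each row at the column given by that row's label
    one_hot[np.arange(labels.size), labels] = 1.0
    return one_hot

labels = np.array([5, 0, 4, 1, 9])  # hypothetical digit labels
encoded = to_one_hot(labels)
print(encoded.shape)            # (5, 10)
print(encoded[0])               # 1.0 in column 5, zeros elsewhere
# argmax recovers the original digit from each one-hot row
print(encoded.argmax(axis=1))   # [5 0 4 1 9]
```

Because the class count is inferred from the data, a label array that happened to contain no 9s would produce only 9 columns; passing `num_classes=10` explicitly to `to_categorical` avoids that.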

5. Reshaping the images, and typecasting them:

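```python
rows, cols = 28, 28

# Add a trailing channel dimension: (N, 28, 28) -> (N, 28, 28, 1)
xtrain = xtrain.reshape(xtrain.shape[0], rows, cols, 1)
xtest = xtest.reshape(xtest.shape[0], rows, cols, 1)

# Cast from uint8 to float32, then scale pixel values to [0, 1]
xtrain = xtrain.astype('float32')
xtest = xtest.astype('float32')
xtrain /= 255.0
xtest /= 255.0

print(xtrain.shape)
print(xtest.shape)
```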

MNIST images are already 28 pixels tall and 28 pixels wide; the reshape keeps that size but adds a channel dimension, which Conv2D layers expect. xtrain.reshape() takes the following parameters:

- The number of images, xtrain.shape[0].

- Number of rows

- Number of columns

- Number of channels. Since our images are grayscale, they need only 1 channel. RGB images have 3 channels.

Similarly, images in the xtest array are reshaped.

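The images are cast to the ‘float32’ data type. 32-bit floating-point precision is the standard choice for training neural networks, and casting before dividing matters too: the raw arrays are uint8, and NumPy cannot store the fractional results of an in-place division back into an integer array.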

In an 8-bit grayscale image, each pixel takes a value from 0 to 255 depending on its intensity. Dividing by 255 rescales these values to the range 0 to 1, which keeps the inputs on a small, consistent scale and generally makes training more stable.

Printing the shapes gives us the number of images in the dataset, number of rows, number of columns and number of channels.

For xtrain the shape is (60000, 28, 28, 1), and for xtest it is (10000, 28, 28, 1).
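The reshape-and-scale steps can be tried on a small fake batch without downloading MNIST. In this sketch, `fake_images` is a hypothetical stand-in for xtrain (random uint8 pixels); it checks the resulting shape, dtype and value range, and also shows why the cast to float32 has to happen before the in-place division.

```python
import numpy as np

# Hypothetical stand-in for xtrain: 4 random 28x28 uint8 "images"
rng = np.random.default_rng(0)
fake_images = rng.integers(0, 256, size=(4, 28, 28), dtype=np.uint8)

rows, cols = 28, 28
# Add a trailing channel axis: (N, 28, 28) -> (N, 28, 28, 1)
x = fake_images.reshape(fake_images.shape[0], rows, cols, 1)
x = x.astype("float32")
x /= 255.0

print(x.shape, x.dtype)                # (4, 28, 28, 1) float32
print(x.min() >= 0.0, x.max() <= 1.0)  # True True

# Dividing the raw uint8 array in place fails: the float results cannot be cast back to uint8
try:
    raw = fake_images.copy()
    raw /= 255.0
    inplace_failed = False
except TypeError:  # NumPy raises a UFuncTypeError, which subclasses TypeError
    inplace_failed = True
print(inplace_failed)  # True
```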

6. We now come to the most awaited part: building the neural network!

```python
model = models.Sequential()
# Declare the input shape up front: 28x28 grayscale images with 1 channel
model.add(layers.Input(shape=(rows, cols, 1)))
model.add(layers.Conv2D(128, (3, 3), activation='relu'))
model.add(layers.MaxPooling2D(2, 2))
model.add(layers.Dropout(0.5))
model.add(layers.Conv2D(64, (3, 3), activation='relu'))
model.add(layers.MaxPooling2D(2, 2))
model.add(layers.Dropout(0.5))
model.add(layers.Conv2D(64, (3, 3), activation='relu'))
model.add(layers.Flatten())
model.add(layers.Dense(128, activation='relu'))    # Dense(128) is a fully connected layer
model.add(layers.Dense(10, activation='softmax'))  # one output per digit class
```

We use a Sequential model, which stacks layers so that each layer’s output feeds into the next.

The first layer is a 2D convolutional layer with 128 filters, a kernel size of 3×3, and the Rectified Linear Unit (ReLU) activation function.

In a CNN, filters are used to extract specific features from an image. Each filter learns to respond to a particular pattern: filters in the early layers typically pick up simple features such as edges and corners, while deeper layers combine these into more complex shapes. In a CNN that detects humans, for example, deeper filters might respond to eyes, noses, or whole faces.

Kernel size specifies the height and width of the 2D convolution window.
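To make "filter" and "kernel size" concrete, here is a naive single-channel convolution with a 3×3 kernel, 'valid' padding (the Keras default) and stride 1. It is a simplified sketch: like Keras Conv2D it computes a cross-correlation (the kernel is not flipped), but it omits the bias and activation, and the vertical-edge kernel is hand-made and hypothetical, whereas a real Conv2D layer learns its 128 kernels during training.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Naive single-channel 2D cross-correlation, 'valid' padding, stride 1."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w), dtype=np.float32)
    for r in range(out_h):
        for c in range(out_w):
            # Multiply the window element-wise by the kernel and sum the result
            out[r, c] = np.sum(image[r:r + kh, c:c + kw] * kernel)
    return out

# Hypothetical hand-made filter that responds to vertical edges
vertical_edge = np.array([[1, 0, -1],
                          [1, 0, -1],
                          [1, 0, -1]], dtype=np.float32)

# Toy 28x28 image: dark left half, bright right half
image = np.zeros((28, 28), dtype=np.float32)
image[:, 14:] = 1.0

feature_map = conv2d_valid(image, vertical_edge)
print(feature_map.shape)  # (26, 26): a 3x3 kernel trims one pixel from each border
```

The feature map is non-zero only in the two columns whose windows straddle the dark/bright boundary, which is exactly the "feature" this filter extracts. With 128 filters, the first layer produces 128 such maps per image.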

The Rectified Linear Unit is an activation function that outputs 0 for inputs less than or equal to 0 and returns the input unchanged when it is greater than 0. ReLU is commonly preferred over sigmoid or tanh because it mitigates the vanishing gradient problem: sigmoid and tanh saturate (their outputs flatten out for inputs of large magnitude, for example approaching 1 for very large inputs), so their gradients shrink toward 0, whereas ReLU’s gradient stays 1 for every positive input.
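A short NumPy sketch of ReLU, and of why its gradient holds up better than sigmoid’s for large inputs. The input values are arbitrary examples.

```python
import numpy as np

def relu(x):
    # 0 for x <= 0, x itself for x > 0
    return np.maximum(0.0, x)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

x = np.array([-3.0, 0.0, 2.0, 10.0])
print(relu(x))  # [ 0.  0.  2. 10.]

# Derivatives: sigmoid'(x) = s(x) * (1 - s(x)) shrinks toward 0 as |x| grows,
# while ReLU's derivative stays 1 for every positive input
sigmoid_grad = sigmoid(x) * (1.0 - sigmoid(x))
relu_grad = (x > 0).astype(float)
print(sigmoid_grad)  # largest at x = 0 (0.25), tiny at x = 10
print(relu_grad)     # [0. 0. 1. 1.]
```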

Next we use MaxPooling. Now what exactly is that?

Essentially, pixels in images are just numbers. Max pooling slides a small window over each feature map and keeps only the maximum value inside each window, shrinking the map while preserving its strongest responses.

Consider using a 2×2 pooling window in the above image. The window is first placed over the blue segment, where the maximum value is 9. The stride is the number of pixels we move the window by at each step; here it is 2. So after the blue segment, we move the window 2 pixels to the right and come to the green segment, where the maximum value is 7. Next, we move 2 pixels down to the next row of windows, come to the yellow segment and pick up 8, then shift 2 pixels to the right and pick up 6.
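The walk-through above can be sketched in NumPy. The 4×4 input is made up (the original figure’s values are not reproduced here); it is only chosen so that its four 2×2 windows have the maxima 9, 7, 8 and 6 described in the text. As with Keras MaxPooling2D(2, 2), the stride equals the window size, and a leftover odd row or column is dropped.

```python
import numpy as np

def max_pool_2x2(feature_map):
    """2x2 max pooling with stride 2 on a single 2D feature map."""
    h, w = feature_map.shape
    h2, w2 = h // 2, w // 2  # an odd trailing row/column is dropped, as with 'valid' padding
    trimmed = feature_map[:h2 * 2, :w2 * 2]
    # Split into non-overlapping 2x2 blocks, then take the max inside each block
    blocks = trimmed.reshape(h2, 2, w2, 2)
    return blocks.max(axis=(1, 3))

# Made-up 4x4 input; the maxima of its four 2x2 windows are 9, 7, 8 and 6
x = np.array([[1, 9, 2, 7],
              [3, 4, 5, 0],
              [8, 2, 6, 1],
              [0, 5, 3, 4]])
print(max_pool_2x2(x))
# [[9 7]
#  [8 6]]
```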

Coming back to our code snippet, we apply a dropout of 0.5. Dropout is a strategy used to prevent overfitting (a situation where a model gives accurate results on its training data but fails to give correct results on new, unseen data). In dropout, we randomly deactivate a fraction of the neurons (50% in this case) at each training step; dropout is switched off when the model is evaluated or used for predictions.
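Here is a minimal sketch of dropout in NumPy. It follows the "inverted dropout" scheme that the Keras Dropout layer documents: the surviving values are scaled by 1 / (1 - rate) during training so their expected sum is unchanged, and nothing happens at inference time. The `dropout` function and its arguments are hypothetical names for illustration, not the Keras API.

```python
import numpy as np

def dropout(activations, rate, training, rng):
    """Inverted dropout sketch: zero a random fraction `rate` of values while training."""
    if not training or rate == 0.0:
        # At inference time dropout does nothing
        return activations
    keep_mask = rng.random(activations.shape) >= rate
    # Scale the survivors by 1 / (1 - rate) so the expected sum is unchanged
    return activations * keep_mask / (1.0 - rate)

rng = np.random.default_rng(42)
a = np.ones(10_000)
dropped = dropout(a, rate=0.5, training=True, rng=rng)
print((dropped == 0).mean())  # roughly 0.5
print(dropped.mean())         # roughly 1.0, thanks to the rescaling
print(np.array_equal(dropout(a, 0.5, training=False, rng=rng), a))  # True
```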

We then add a second convolutional layer with 64 filters, a 3×3 kernel, and ReLU activation, followed by MaxPooling and Dropout as before, and then a third Conv2D layer.

The Flatten() layer converts the output of the last convolutional layer (a 3×3×64 stack of feature maps for each image) into a 1D vector of 576 values so that it can be fed into the dense layers.

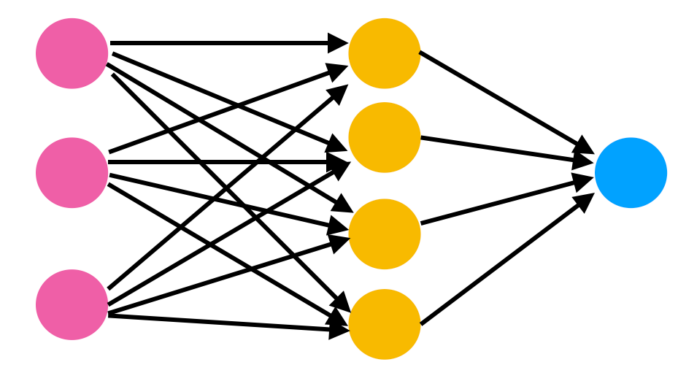
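The 3×3×64 shape that reaches Flatten() can be checked by hand. This plain-Python sketch walks through the layers of our model, assuming the Keras defaults our code relies on ('valid' padding and stride 1 for Conv2D, stride equal to the pool size for MaxPooling2D); the Dropout layers are skipped because they change neither the shape nor the parameter count. The `conv_out`, `pool_out` and `layers_spec` names are hypothetical helpers.

```python
def conv_out(size, kernel=3, stride=1):
    # 'valid' padding (Keras default): no zero-padding around the border
    return (size - kernel) // stride + 1

def pool_out(size, pool=2):
    # MaxPooling2D stride defaults to the pool size; leftover pixels are dropped
    return (size - pool) // pool + 1

h = w = 28
channels = 1
params = 0
layers_spec = [("conv", 128), ("pool", None), ("conv", 64), ("pool", None), ("conv", 64)]
for kind, filters in layers_spec:
    if kind == "conv":
        # Per filter: 3x3 weights for every input channel, plus one bias
        params += (3 * 3 * channels + 1) * filters
        h, w, channels = conv_out(h), conv_out(w), filters
    else:
        h, w = pool_out(h), pool_out(w)
    print(kind, (h, w, channels))

flat = h * w * channels
print("flatten", flat)  # 576
params += (flat + 1) * 128 + (128 + 1) * 10  # the two Dense layers (weights + biases)
print("trainable parameters", params)  # 187146
```

Once the model has been built, model.summary() should report the same output shapes and parameter total.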

Next, we add a Dense layer with 128 neurons. Each neuron in a dense layer receives input from every neuron in the previous layer. This layer also uses the ReLU activation.

The last dense layer is the output layer. It has 10 neurons, one for each class (the digits 0–9), and uses the softmax activation function, which converts the layer’s raw outputs into 10 probabilities that sum to 1.
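A minimal NumPy sketch of softmax for a single image. The logits are hypothetical raw outputs of the 10-unit layer; subtracting the maximum before exponentiating is a common numerical-stability trick that does not change the result.

```python
import numpy as np

def softmax(logits):
    # Subtract the max for numerical stability; it does not change the result
    shifted = logits - np.max(logits)
    exps = np.exp(shifted)
    return exps / exps.sum()

# Hypothetical raw outputs (logits) of the 10-unit output layer for one image
logits = np.array([1.0, 0.5, 0.2, 4.0, 0.1, 0.0, 0.3, 2.5, 0.4, 0.9])
probs = softmax(logits)
print(probs.round(3))
print(probs.sum())     # sums to 1, up to floating-point rounding
print(probs.argmax())  # 3, the class with the largest logit
```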

7. Our pipeline is ready, and it is time to compile our model:

```python
model.compile(optimizer=tf.keras.optimizers.Adam(0.001),  # learning rate of 0.001
              loss=tf.keras.losses.categorical_crossentropy,
              metrics=['accuracy'])
```

Let us talk about the parameters present in this snippet.

- Optimizer: The optimizer updates the network’s weights to minimise the loss. We use the Adam optimizer with a learning rate of 0.001; Adam adapts the step size for each weight individually and is a popular default for CNN models.

- Next we come to the loss function. The loss function compares the predicted values with the actual values, and our aim is to minimise it. We use categorical cross-entropy here since we have multiple categories (10 categories for the digits 0 to 9). This loss expects one-hot encoded labels, which is why we encoded them in step 4.

- Metrics are used to judge the performance of our neural network. They are reported during training and evaluation but are not optimised directly. Here, we measure performance in terms of the accuracy of predictions.
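To see how categorical cross-entropy rewards confident correct predictions and punishes confident wrong ones, here is a small NumPy sketch. It uses 3 hypothetical classes instead of 10 to keep the arrays short, and the clipping constant `eps` is an assumption added to avoid log(0).

```python
import numpy as np

def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean categorical cross-entropy for one-hot targets and predicted probabilities."""
    y_pred = np.clip(y_pred, eps, 1.0 - eps)  # avoid log(0)
    # Multiplying by the one-hot labels keeps only the log-probability of the true class
    per_sample = -np.sum(y_true * np.log(y_pred), axis=1)
    return per_sample.mean()

y_true = np.array([[0, 0, 1],   # hypothetical 3-class one-hot labels
                   [0, 1, 0]])
confident_right = np.array([[0.05, 0.05, 0.90],
                            [0.10, 0.80, 0.10]])
confident_wrong = np.array([[0.90, 0.05, 0.05],
                            [0.80, 0.10, 0.10]])
print(categorical_crossentropy(y_true, confident_right))  # low loss, about 0.164
print(categorical_crossentropy(y_true, confident_wrong))  # high loss, about 2.65
```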

8. Time to train our model!

```python
model.fit(xtrain, ytrain, epochs=10, batch_size=16)
```

- epochs is the number of complete passes the model makes over the training data.

- batch_size is the number of samples propagated through the network before each weight update.
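A quick bit of arithmetic shows how epochs and batch_size combine into weight updates for our run. The 60,000 figure is the number of training images from step 5; the rounding up reflects that a final, smaller batch would still count as a step.

```python
import math

num_train = 60000  # training images in MNIST
batch_size = 16
epochs = 10

# One weight update per batch; a final partial batch would still count as a step
steps_per_epoch = math.ceil(num_train / batch_size)
total_updates = steps_per_epoch * epochs
print(steps_per_epoch)  # 3750
print(total_updates)    # 37500
```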

```python
score = model.evaluate(xtest, ytest)  # returns [loss, accuracy]
print('Test accuracy:', score[1])
```

We get an accuracy of 99.1% with our network.

You may be able to improve the accuracy further by tuning the hyperparameters (learning rate, dropout rate, activation functions, etc.).

9. Making Predictions:

This has to be my favourite part, the part where we make predictions using the testing dataset.

```python
predictions = model.predict(xtest)        # shape (10000, 10): one probability per class
results = np.argmax(predictions, axis=1)  # most likely digit for each image
print(results)
```

- argmax returns the indices of the maximum values along the specified axis.

- In simple terms, softmax returns the probability of each class, as discussed above, and argmax returns the index where that probability is highest, which is the predicted digit.
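The same argmax step also underlies the accuracy metric: with one-hot labels, a prediction counts as correct when its argmax matches the argmax of the label. This sketch uses small hypothetical arrays with 3 classes rather than real model output; it is roughly, not exactly, what Keras computes for ‘accuracy’ with a categorical cross-entropy loss.

```python
import numpy as np

# Hypothetical softmax outputs for 4 test images (3 classes to keep it short)
predictions = np.array([[0.70, 0.20, 0.10],
                        [0.10, 0.30, 0.60],
                        [0.25, 0.50, 0.25],
                        [0.40, 0.35, 0.25]])
# Hypothetical one-hot ground truth, in the same format as ytest after step 4
y_true = np.array([[1, 0, 0],
                   [0, 0, 1],
                   [0, 1, 0],
                   [0, 1, 0]])

predicted_labels = np.argmax(predictions, axis=1)  # index of the highest probability in each row
true_labels = np.argmax(y_true, axis=1)            # undo the one-hot encoding
accuracy = np.mean(predicted_labels == true_labels)
print(predicted_labels)  # [0 2 1 0]
print(accuracy)          # 0.75
```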

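```python
plt.figure(figsize=(10, 10))
for i in range(9):
    ax = plt.subplot(3, 3, i + 1)  # rows, columns, and 1-based subplot index
    # Drop the channel axis added in step 5: (28, 28, 1) -> (28, 28)
    plt.imshow(xtest[i].squeeze(), cmap=plt.get_cmap('gray'))
    plt.title("Predicted: {}".format(results[i]))
    plt.axis('off')
plt.show()
```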

Using the code snippet above, we plot the first 9 test images in a figure 10×10 inches in size (figsize is specified in inches) and title each one with its predicted digit from the results array.

Here’s the link to the repository for reference: https://github.com/SonakshiA/Handwritten-Digit-Classification/tree/main
