Decision trees work by recursively splitting data into subsets based on feature values, producing a tree-shaped model of decisions. Each internal node tests a feature, each branch corresponds to one outcome of that test (a decision rule), and each leaf holds a prediction. This structure is highly interpretable: one can follow the path from the root to a leaf to see exactly which rules led to a given decision.
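To make the root-to-leaf reading concrete, here is a minimal Python sketch of an ordinary (hard, non-differentiable) decision tree that returns both its prediction and the rules it applied along the way. The `Node`/`Leaf` classes, the feature names, and the thresholds are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple, Union

@dataclass
class Leaf:
    prediction: str          # outcome stored at the leaf

@dataclass
class Node:
    feature: str             # feature tested at this node
    threshold: float         # decision rule: go left if value <= threshold
    left: "Tree"
    right: "Tree"

Tree = Union[Node, Leaf]

def predict_with_path(tree: Tree, sample: dict) -> Tuple[str, List[str]]:
    """Follow the tree from root to leaf, recording each rule that was applied."""
    path: List[str] = []
    node = tree
    while isinstance(node, Node):
        if sample[node.feature] <= node.threshold:
            path.append(f"{node.feature} <= {node.threshold}")
            node = node.left
        else:
            path.append(f"{node.feature} > {node.threshold}")
            node = node.right
    return node.prediction, path

# A hypothetical two-level tree; features and thresholds are made up.
tree = Node("income", 50_000,
            left=Leaf("reject"),
            right=Node("debt_ratio", 0.4,
                       left=Leaf("approve"),
                       right=Leaf("reject")))

label, path = predict_with_path(tree, {"income": 72_000, "debt_ratio": 0.25})
print(label)  # approve
print(path)   # ['income > 50000', 'debt_ratio <= 0.4']
```

The returned path is the explanation: every prediction comes with the exact sequence of threshold tests that produced it.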

Neural networks, particularly deep ones, consist of multiple layers of neurons that learn hierarchical representations of data. They are exceptionally good at modeling complex, non-linear relationships, which makes them well suited to tasks such as image recognition and natural language processing. Their downside is their “black-box” nature: it is difficult to interpret how they arrive at a specific decision.

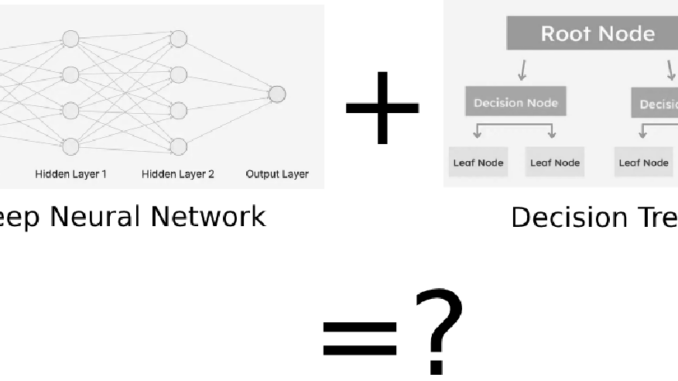

DNDT (Deep Neural Decision Trees) aims to bridge the gap between the interpretability of decision trees and the predictive power of deep neural networks. It does this by integrating decision tree structures within a neural network framework, learning decision boundaries in a hierarchical manner.

The DNDT model consists of two primary components:

- Neural Network Backbone: This part of the model learns representations of the input data through multiple layers, just like a traditional neural network.

- Differentiable Decision Trees: Instead of hard, non-differentiable decision nodes, DNDT uses a differentiable approximation of decision trees, which allows the entire model to be trained end-to-end with gradient descent.
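The two components can be sketched in plain Python as a single forward pass: a one-layer tanh network stands in for the backbone, and its output is routed through a depth-2 soft tree in which every internal node sends a sample left with probability sigmoid(w · h + b). This sigmoid-gated split is one common way to build a differentiable tree and is used here as an assumption; it is not necessarily the exact formulation DNDT uses. All function names, weights, and leaf values are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def dot(w: Sequence[float], v: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(w, v))

def backbone(x: Sequence[float], W: List[List[float]], c: List[float]) -> List[float]:
    """Hypothetical one-layer backbone: h = tanh(W x + c)."""
    return [math.tanh(dot(row, x) + ci) for row, ci in zip(W, c)]

def soft_tree(h: Sequence[float], split_w: List[List[float]], split_b: List[float],
              leaf_values: List[float]) -> Tuple[float, List[float]]:
    """Depth-2 soft tree: node 0 is the root, nodes 1 and 2 are its left/right children."""
    # Probability that each internal node routes the sample to its left child.
    p = [sigmoid(dot(w, h) + b) for w, b in zip(split_w, split_b)]
    # Reaching a leaf requires every decision on its path, so multiply the probabilities.
    leaf_probs = [
        p[0] * p[1],              # left, left
        p[0] * (1 - p[1]),        # left, right
        (1 - p[0]) * p[2],        # right, left
        (1 - p[0]) * (1 - p[2]),  # right, right
    ]
    # The output is the probability-weighted average of the leaf values.
    prediction = sum(q * v for q, v in zip(leaf_probs, leaf_values))
    return prediction, leaf_probs

def forward(x, W, c, split_w, split_b, leaf_values):
    """Backbone followed by the soft tree; every step is differentiable."""
    return soft_tree(backbone(x, W, c), split_w, split_b, leaf_values)

# Hypothetical parameters for a 2-feature input.
W, c = [[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0]
split_w = [[4.0, 0.0], [0.0, 4.0], [0.0, -4.0]]
split_b = [0.0, 0.0, 0.0]
leaves = [1.0, 2.0, 3.0, 4.0]

pred, probs = forward([0.5, -1.0], W, c, split_w, split_b, leaves)
# Scaling the split weights up pushes routing toward 0/1, i.e. toward a hard tree.
sharp_w = [[100 * v for v in w] for w in split_w]
pred_sharp, probs_sharp = forward([0.5, -1.0], W, c, sharp_w, split_b, leaves)
print(max(range(4), key=probs_sharp.__getitem__))  # 1 (left, then right)
```

Because each leaf probability is a product of smooth functions of the weights, the prediction can be differentiated with respect to every parameter. The most probable leaf gives one way to read off a root-to-leaf path for a given input.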

Step-by-Step Process

- Data Input and Neural Representation: The input data is fed into the neural network backbone, which transforms it into a high-dimensional representation.

- Tree Structure Learning: The transformed data is then passed through several layers of differentiable decision trees. Each tree node applies a soft decision function, which can be thought of as a probabilistic split: instead of sending a sample down a single branch, it assigns complementary probabilities to both branches, which keeps the model differentiable.

- End-to-End Training: The entire DNDT model is trained using standard backpropagation techniques. The loss is propagated through both the neural network and the decision tree layers, enabling the model to learn both complex representations and effective decision rules simultaneously.

Advantages of DNDT

- Interpretability: By incorporating decision tree structures, DNDT provides insight into the decision-making process, offering a level of transparency not typically available in standard neural networks.

- Flexibility: Thanks to its neural network backbone, DNDT can handle complex, non-linear relationships in the data, and it can be used for either regression or classification tasks as needed.

- Scalability: The model can be scaled to handle large datasets and deep architectures, benefiting from the advances in neural network training techniques.
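The end-to-end training step described above can be illustrated with a deliberately tiny model: a single tanh unit acting as the "backbone" feeds one soft split with two leaf values, and plain gradient descent updates all parameters at once using hand-derived gradients. Everything here (the parameter names `a`, `b`, `u`, `v`, `leaf_left`, `leaf_right`, the toy step-function data, the learning rate) is a hypothetical illustration, not DNDT's actual architecture; the point is only that the loss gradient flows through the split and on into the backbone.

```python
import math
from typing import Dict, List, Tuple

def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def forward(params: Dict[str, float], x: float) -> Tuple[float, float, float]:
    """Hypothetical model: one tanh unit (backbone) feeding one soft split with two leaves."""
    h = math.tanh(params["a"] * x + params["b"])        # learned representation
    p = sigmoid(params["u"] * h + params["v"])          # probability of routing left
    y = p * params["leaf_left"] + (1 - p) * params["leaf_right"]  # expected leaf value
    return y, p, h

def loss_and_grads(params: Dict[str, float], data: List[Tuple[float, float]]):
    """Mean squared error and its gradient, derived by hand with the chain rule."""
    grads = {k: 0.0 for k in params}
    loss, n = 0.0, len(data)
    for x, t in data:
        y, p, h = forward(params, x)
        loss += (y - t) ** 2 / n
        dy = 2 * (y - t) / n                     # dLoss/dy
        grads["leaf_left"] += dy * p             # leaf parameters
        grads["leaf_right"] += dy * (1 - p)
        dz2 = dy * (params["leaf_left"] - params["leaf_right"]) * p * (1 - p)
        grads["u"] += dz2 * h                    # split parameters
        grads["v"] += dz2
        dz1 = dz2 * params["u"] * (1 - h * h)    # gradient flows on into the backbone
        grads["a"] += dz1 * x
        grads["b"] += dz1
    return loss, grads

def train(data, steps: int = 500, lr: float = 0.1):
    """Plain gradient descent that updates backbone, split, and leaves together."""
    params = {"a": 0.5, "b": 0.0, "u": 1.0, "v": 0.0,
              "leaf_left": 0.6, "leaf_right": 0.4}
    history = []
    for _ in range(steps):
        loss, grads = loss_and_grads(params, data)
        history.append(loss)
        for k in params:
            params[k] -= lr * grads[k]
    return params, history

# Toy data: target is 1 for positive inputs and 0 for negative ones.
data = [(x, 1.0 if x > 0 else 0.0) for x in (-2.0, -1.5, -1.0, -0.5, 0.5, 1.0, 1.5, 2.0)]
params, history = train(data)
print(f"loss: {history[0]:.3f} -> {history[-1]:.3f}")
```

For a classification task, each leaf would typically hold a vector of class scores and the squared error would be replaced by a cross-entropy loss; the path of gradients through the split and into the backbone stays the same. In practice, a framework with automatic differentiation (such as PyTorch or JAX) would compute these gradients instead of deriving them by hand.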

DNDT can be applied to a variety of domains where both interpretability and predictive accuracy are crucial. For instance:

- Healthcare: Understanding how certain features influence medical diagnoses.

- Finance: Interpreting the factors leading to credit approval decisions.

- Customer Relationship Management (CRM): Analyzing customer data to predict churn and understand key drivers.
