This article assumes you already know how standard transformers work. If you are a beginner and would like to learn about transformers first, please take a look at the Transformer for Beginners article.

In Hierarchical Transformer — part 1 we defined what we mean by “hierarchical transformers” and reviewed one of the prominent works in this domain, called Hourglass.

In this article, we will continue the line of work by looking into another well-known work called Hierarchical Attention Transformers (HAT).

Let’s get started.

This method was initially proposed for classifying long documents, typically thousands of words in length. One use case is classifying legal or biomedical documents, which are usually very long.

Tokenization and Segmentation

The HAT method takes an input document and tokenizes it with a Byte-Pair Encoding (BPE) tokenizer, which breaks text into subwords (tokens). BPE tokenizers are used in many well-known language models such as RoBERTa and the GPT family (BERT uses the closely related WordPiece tokenizer).

It then splits the tokenized document into N equally sized chunks: if S denotes the input document, then S = [C_1, …, C_N]. (Throughout this article we sometimes refer to chunks as segments; they are the same concept.) Each chunk is a sequence of k tokens, C_i = [W_i,[CLS], W_i,1, …, W_i,k-1], where the first token, W_i,[CLS], is the CLS token that represents the chunk.

As the image shows, every chunk is a sequence of k tokens, and the first token of each chunk is its CLS token.
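The segmentation step can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the token IDs are made up, `CLS_ID` and `PAD_ID` are hypothetical values, and padding the last chunk to length k is an assumption made here so that all chunks end up equally sized.

```python
from typing import List

CLS_ID = 0  # hypothetical id of the [CLS] token
PAD_ID = 1  # hypothetical id of the padding token (an assumption of this sketch)

def segment(token_ids: List[int], k: int) -> List[List[int]]:
    """Split token ids into chunks of exactly k tokens, each starting with CLS."""
    if k < 2:
        raise ValueError("k must be at least 2 (CLS plus one content token)")
    body = k - 1  # content tokens per chunk; one slot is reserved for CLS
    chunks = []
    for start in range(0, len(token_ids), body):
        piece = token_ids[start:start + body]
        piece = piece + [PAD_ID] * (body - len(piece))  # pad the last chunk
        chunks.append([CLS_ID] + piece)
    return chunks

doc = list(range(100, 110))  # 10 fake token ids standing in for a tokenized document
chunks = segment(doc, k=4)
for c in chunks:
    print(c)
```

With k = 4, each chunk holds one CLS token plus three content tokens, so the ten fake tokens end up in four chunks and the last one is padded.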

Model Architecture

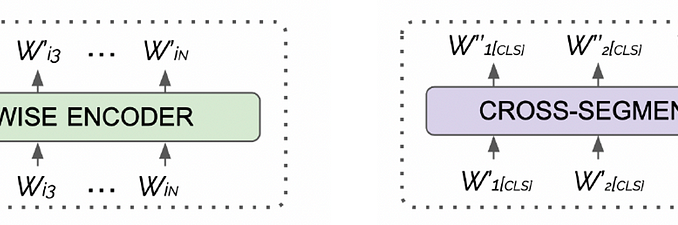

After tokenization and segmentation, the chunks are fed to the HAT model. HAT is an encoder-only transformer that consists of two main components:

- segment-wise encoder (SWE): a shared encoder block that takes the token sequence of a single segment (aka chunk) and processes it independently of the other segments.

- cross-segment encoder (CSE): another encoder block that takes in the CLS tokens of all segments (aka chunks) and processes the relations across segments.
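To make the data flow between the two components concrete, here is a rough pure-Python sketch. It is only an illustration under strong assumptions: `self_attention` is a parameter-free, single-head stand-in for a full encoder block (a real block also has learned projections, a feed-forward layer, residual connections and layer normalization), and `hat_forward` is a hypothetical name that chains one segment-wise step with one cross-segment step.

```python
import math
from typing import List

Vector = List[float]

def self_attention(xs: List[Vector]) -> List[Vector]:
    """Parameter-free single-head self-attention, a stand-in for an encoder block."""
    d = len(xs[0])
    out = []
    for q in xs:
        # Scaled dot-product scores of this position against every position
        scores = [sum(a * b for a, b in zip(q, key)) / math.sqrt(d) for key in xs]
        m = max(scores)
        weights = [math.exp(s - m) for s in scores]  # numerically stable softmax
        total = sum(weights)
        out.append([sum(w / total * v[j] for w, v in zip(weights, xs))
                    for j in range(d)])
    return out

def hat_forward(chunks: List[List[Vector]]) -> List[Vector]:
    # Segment-wise encoder: the same function is applied to each chunk on its own
    encoded = [self_attention(chunk) for chunk in chunks]
    # Position 0 of every chunk holds its CLS representation
    cls_vectors = [chunk[0] for chunk in encoded]
    # Cross-segment encoder: attention over the CLS vectors of all chunks
    return self_attention(cls_vectors)

# 3 chunks, k = 2 tokens per chunk, embedding size 2 (toy numbers)
chunks = [
    [[1.0, 0.0], [0.0, 1.0]],
    [[0.0, 1.0], [1.0, 1.0]],
    [[1.0, 1.0], [0.5, 0.5]],
]
doc_cls = hat_forward(chunks)
print(len(doc_cls), len(doc_cls[0]))
```

The point to notice is the attention scope: inside the segment-wise step a token only sees tokens of its own chunk, while the cross-segment step sees one vector per chunk, so no attention is ever computed over the full document length.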
