If you were to ask GPT how to create a knowledge graph from a given text, it may suggest a process like the following.

- Extract concepts and entities from the body of work. These are the nodes.

- Extract relations between the concepts. These are the edges.

- Populate nodes (concepts) and edges (relations) in a graph data structure or a graph database.

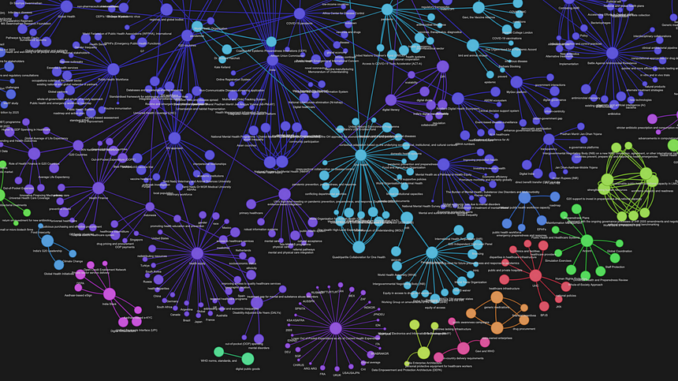

- Visualise, for some artistic gratification if nothing else.
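Populating a graph from extracted relations needs very little machinery. As a minimal sketch, assuming each relation arrives as a dict with `node_1`, `node_2` and `edge` keys (the output format the prompt in this article asks for), a plain adjacency map from the Python standard library can hold the graph; a graph library or graph database would play the same role. The function name `build_graph` is hypothetical.

```python
from collections import defaultdict


def build_graph(triples):
    """Return (nodes, adjacency) for an undirected concept graph.

    `triples` is an iterable of dicts with 'node_1', 'node_2' and 'edge' keys
    (a hypothetical input shape mirroring the LLM output format).
    """
    nodes = set()
    # adjacency[a][b] -> list of relation labels between concepts a and b
    adjacency = defaultdict(lambda: defaultdict(list))
    for t in triples:
        a, b, label = t["node_1"], t["node_2"], t["edge"]
        nodes.update((a, b))
        # Store the edge in both directions so it can be looked up from either end
        adjacency[a][b].append(label)
        adjacency[b][a].append(label)
    return nodes, adjacency


triples = [
    {"node_1": "Mary", "node_2": "lamb", "edge": "owned by"},
    {"node_1": "plate", "node_2": "food", "edge": "contained"},
]
nodes, adjacency = build_graph(triples)
print(sorted(nodes))               # ['Mary', 'food', 'lamb', 'plate']
print(adjacency["lamb"]["Mary"])   # ['owned by']
```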

Steps 3 and 4 sound straightforward. But how do you achieve steps 1 and 2?

Here is a flow diagram of the method I devised to extract a graph of concepts from any given text corpus. It is similar to the method above, except for a few minor differences.

1. Split the corpus of text into chunks. Assign a chunk_id to each of these chunks.

2. For every text chunk, extract concepts and their semantic relationships using an LLM. Let’s assign this relation a weight of W1. There can be multiple relationships between the same pair of concepts. Every such relation is an edge between a pair of concepts.

3. Treat concepts that occur in the same text chunk as also related by their contextual proximity. Let’s assign this relation a weight of W2. Note that the same pair of concepts may occur in multiple chunks.

4. Group the edges between the same pair of concepts, sum their weights, and concatenate their relationships. Now we have only one edge between any distinct pair of concepts. The edge has a certain weight and a list of relations as its name.
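The weighting and grouping steps of this method can be sketched in plain Python. This is a minimal sketch under my own assumptions, not the repository's implementation: each extracted relation is a dict with `node_1`, `node_2`, `edge` and `chunk_id` keys; concept pairs are treated as unordered; the values 1.0 and 0.5 for W1 and W2 are placeholders; and co-occurrence edges are labelled `"contextual proximity"`. The function name `merge_edges` is hypothetical.

```python
from collections import defaultdict
from itertools import combinations

# Placeholder weights: the method leaves W1 and W2 as tunable values
W1 = 1.0  # weight of one LLM-extracted semantic relation
W2 = 0.5  # weight of two concepts co-occurring in the same chunk


def merge_edges(relations, w1=W1, w2=W2):
    """Collapse all relations into one weighted edge per unordered concept pair.

    `relations` is a list of dicts with 'node_1', 'node_2', 'edge' and
    'chunk_id' keys (hypothetical shape). Returns
    {(concept_a, concept_b): (total_weight, "relation, relation, ...")}.
    """
    weights = defaultdict(float)
    labels = defaultdict(list)
    concepts_by_chunk = defaultdict(set)

    # W1: every LLM-extracted relation contributes its own weight and label
    for r in relations:
        pair = tuple(sorted((r["node_1"], r["node_2"])))
        weights[pair] += w1
        labels[pair].append(r["edge"])
        concepts_by_chunk[r["chunk_id"]].update(pair)

    # W2: every pair of concepts found in the same chunk is related by proximity
    for concepts in concepts_by_chunk.values():
        for pair in combinations(sorted(concepts), 2):
            weights[pair] += w2
            labels[pair].append("contextual proximity")

    # One edge per pair: summed weight plus the concatenated relation names
    return {pair: (weights[pair], ", ".join(labels[pair])) for pair in weights}


relations = [
    {"node_1": "Mary", "node_2": "lamb", "edge": "owned by", "chunk_id": "c1"},
    {"node_1": "lamb", "node_2": "school", "edge": "followed to", "chunk_id": "c1"},
    {"node_1": "Mary", "node_2": "lamb", "edge": "loved by", "chunk_id": "c2"},
]
merged = merge_edges(relations)
for pair, (weight, names) in sorted(merged.items()):
    print(pair, weight, names)
```

Keying edges on a sorted tuple makes ("lamb", "Mary") and ("Mary", "lamb") the same edge, which is what lets the grouping step collapse them into one.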

You can find a Python implementation of this method in the GitHub repository I share in this article. Let us briefly walk through the key ideas of the implementation in the next few sections.

To demonstrate the method here, I am using the following review article, published in PubMed/Cureus under the terms of the Creative Commons Attribution License. Credit to the authors is given at the end of this article.

The Mistral and the Prompt

Step 1 in the flow chart above is easy. LangChain provides a plethora of text splitters we can use to split our text into chunks.
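LangChain's splitters (for example `RecursiveCharacterTextSplitter`) try to break text at natural separators such as blank lines and newlines. To show just the idea of step 1, here is a minimal, dependency-free sketch that uses a plain sliding character window with overlap instead, and gives each chunk a random `chunk_id`. The function name `split_into_chunks` and its default sizes are assumptions, not values from the article.

```python
import uuid


def split_into_chunks(text, chunk_size=1500, chunk_overlap=150):
    """Split text into overlapping fixed-size character windows, each with a chunk_id.

    A simplified stand-in for a LangChain text splitter: no paragraph or
    sentence awareness, just a sliding window over characters.
    """
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        piece = text[start:start + chunk_size]
        chunks.append({"chunk_id": uuid.uuid4().hex, "text": piece})
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


# Usage: consecutive chunks share `chunk_overlap` characters
for chunk in split_into_chunks("abcdefghij", chunk_size=4, chunk_overlap=1):
    print(chunk["chunk_id"], chunk["text"])
```

The overlap keeps a concept that straddles a chunk boundary visible, in full, to at least one chunk.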

Step 2 is where the real fun starts. To extract the concepts and their relationships, I am using the Mistral 7B model. Before converging on the variant of the model best suited for our purpose, I experimented with the following:

- Mistral Instruct

- Mistral OpenOrca, and

- Zephyr (Hugging Face version derived from Mistral)

I used the 4-bit quantised versions of these models (so that my Mac doesn’t start hating me), hosted locally with Ollama.

These are all instruction-tuned models that accept a system prompt and a user prompt. They all do a pretty good job of following instructions and formatting the answer neatly as JSON if we tell them to.
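Ollama serves local models over a small HTTP API. As a minimal sketch (not the code from the article's repository), this is how a system prompt and a user prompt can be sent together to Ollama's `/api/generate` endpoint on its default port, 11434, using only the standard library. The model tag `zephyr` and the helper names `build_request_body` and `generate` are my assumptions.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_request_body(model, system_prompt, user_prompt):
    """Assemble a non-streaming /api/generate request with separate system and user prompts."""
    return {
        "model": model,           # e.g. "zephyr" (assumed local model tag)
        "system": system_prompt,  # overrides the model's default system message
        "prompt": user_prompt,
        "stream": False,          # ask for one JSON object instead of a token stream
    }


def generate(model, system_prompt, user_prompt, url=OLLAMA_URL):
    """Send the request to a locally running Ollama server and return the model's text."""
    body = json.dumps(build_request_body(model, system_prompt, user_prompt)).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        # With stream=False the generated text is in the "response" field
        return json.loads(response.read())["response"]
```

Calling `generate("zephyr", system_prompt, user_prompt)` requires the Ollama server to be running and the model to have been pulled locally.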

After a few rounds of trial and error, I finally settled on the Zephyr model with the following prompts.

```python
# System prompt: tells the model what to extract and the exact JSON shape to return
SYS_PROMPT = (
    "You are a network graph maker who extracts terms and their relations from a given context. "
    "You are provided with a context chunk (delimited by ```). Your task is to extract the ontology "
    "of terms mentioned in the given context. These terms should represent the key concepts as per the context. \n"
    "Thought 1: While traversing through each sentence, Think about the key terms mentioned in it.\n"
    "\tTerms may include object, entity, location, organization, person, \n"
    "\tcondition, acronym, documents, service, concept, etc.\n"
    "\tTerms should be as atomistic as possible\n\n"
    "Thought 2: Think about how these terms can have one on one relation with other terms.\n"
    "\tTerms that are mentioned in the same sentence or the same paragraph are typically related to each other.\n"
    "\tTerms can be related to many other terms\n\n"
    "Thought 3: Find out the relation between each such related pair of terms. \n\n"
    "Format your output as a list of json. Each element of the list contains a pair of terms "
    "and the relation between them, like the following: \n"
    "[\n"
    " {\n"
    ' "node_1": "A concept from extracted ontology",\n'
    ' "node_2": "A related concept from extracted ontology",\n'
    ' "edge": "relationship between the two concepts, node_1 and node_2 in one or two sentences"\n'
    " }, {...}\n"
    "]"
)

# User prompt template: the chunk text goes between the ``` delimiters.
# Fill it for each chunk with USER_PROMPT.format(input=chunk_text).
USER_PROMPT = "context: ```{input}``` \n\n output: "
```

If we pass our (not fit for) nursery rhyme through this prompt, here is the result.

```json
[
  {
    "node_1": "Mary",
    "node_2": "lamb",
    "edge": "owned by"
  },
  {
    "node_1": "plate",
    "node_2": "food",
    "edge": "contained"
  },
  ...
]
```

Notice that it even guessed ‘food’ as a concept, which was not explicitly mentioned in the text chunk. Isn’t this wonderful?
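Even instruction-tuned models can occasionally wrap the JSON in a sentence of prose or return an element with a missing key, so it pays to parse the output defensively before using it. A minimal sketch, assuming the response is meant to be a single JSON list in the format the prompt asks for; the function name `parse_triples` and the fallback of slicing out the outermost `[...]` are my own choices, not part of the article's method.

```python
import json

REQUIRED_KEYS = ("node_1", "node_2", "edge")


def parse_triples(raw_output):
    """Turn the model's raw text into a list of {'node_1', 'node_2', 'edge'} dicts.

    Returns an empty list when no JSON list can be recovered.
    """
    try:
        data = json.loads(raw_output)
    except json.JSONDecodeError:
        # Fall back to the outermost [...] span, in case the model added prose around it
        start, end = raw_output.find("["), raw_output.rfind("]")
        if start == -1 or end <= start:
            return []
        try:
            data = json.loads(raw_output[start:end + 1])
        except json.JSONDecodeError:
            return []
    if not isinstance(data, list):
        return []
    # Keep only well-formed elements and strip stray whitespace from their values
    return [
        {k: str(item[k]).strip() for k in REQUIRED_KEYS}
        for item in data
        if isinstance(item, dict) and all(k in item for k in REQUIRED_KEYS)
    ]


raw = (
    'Sure! Here is the list:\n'
    '[{"node_1": "Mary", "node_2": "lamb", "edge": "owned by"}, {"node_1": "plate"}]'
)
print(parse_triples(raw))  # [{'node_1': 'Mary', 'node_2': 'lamb', 'edge': 'owned by'}]
```

Dropping malformed elements, rather than failing the whole chunk, keeps one bad element from discarding the good relations extracted alongside it.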

If we run this through every text chunk of our example article and convert the JSON into a pandas DataFrame, here is what it looks like.
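Before building a data frame, the per-chunk results need to be flattened into one row per relation, each tagged with the chunk it came from. A minimal sketch, assuming the parsed output of each chunk is stored in a dict keyed by chunk_id (a hypothetical layout); the resulting list of dicts can be passed directly to `pandas.DataFrame`, which creates one column per key. The function name `to_records` is hypothetical.

```python
def to_records(results_by_chunk):
    """Flatten {chunk_id: [relation, ...]} into one row per relation.

    Each relation is a dict with 'node_1', 'node_2' and 'edge' keys; the
    chunk_id is copied onto every row so edges can be traced back to the text.
    """
    return [
        {**relation, "chunk_id": chunk_id}
        for chunk_id, relations in results_by_chunk.items()
        for relation in relations
    ]


results = {
    "chunk-a": [
        {"node_1": "Mary", "node_2": "lamb", "edge": "owned by"},
        {"node_1": "plate", "node_2": "food", "edge": "contained"},
    ],
    "chunk-b": [],  # a chunk from which nothing was extracted adds no rows
}
records = to_records(results)
# records[0] == {"node_1": "Mary", "node_2": "lamb", "edge": "owned by", "chunk_id": "chunk-a"}
# With pandas installed, pandas.DataFrame(records) has columns node_1, node_2, edge, chunk_id
```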
