Go to https://docs.nvidia.com/deeplearning/tensorrt/install-guide/index.html#installing-zip and follow the steps to download the zip package for your desired version. You should end up in this section:

For example, place the zip file in your C:\ folder and unzip it.

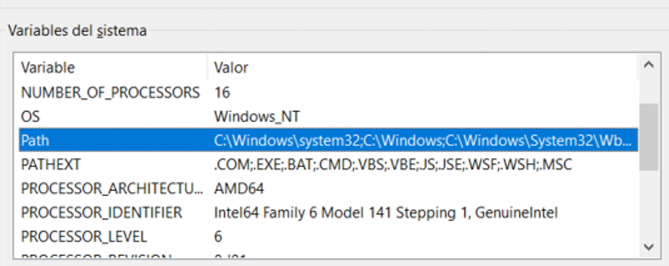
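If you prefer to script the unzip step, Python's standard `zipfile` module can do the same extraction. This is a minimal sketch, assuming the archive holds a single top-level folder; the function name `unzip_tensorrt` is hypothetical and not part of TensorRT.

```python
import zipfile
from pathlib import Path


def unzip_tensorrt(zip_path: str, dest_dir: str) -> Path:
    """Extract the TensorRT zip into dest_dir and return the extracted top-level folder."""
    with zipfile.ZipFile(zip_path) as zf:
        # Zip member names always use "/" as separator, so the first part is the top-level folder
        top_levels = {name.split("/")[0] for name in zf.namelist()}
        if len(top_levels) != 1:
            raise ValueError(f"expected one top-level folder, found {sorted(top_levels)}")
        zf.extractall(dest_dir)
    return Path(dest_dir) / top_levels.pop()
```

For example, `unzip_tensorrt(r"C:\Downloads\TensorRT.zip", "C:\\")`, where both paths are placeholders for your own download location and target folder.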

In Windows, search for “Environment Variables.”

Select the Path variable and click Edit.

Add the full paths of the bin and lib folders located inside your unzipped TensorRT folder.
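To double-check the PATH edit, a small Python helper can report which of the two folders are still missing. This is a hypothetical helper, not part of TensorRT, and it assumes the folders are named bin and lib directly under the TensorRT root. PATH changes are only seen by programs started after the edit, so open a new terminal before running it.

```python
import os
from pathlib import Path
from typing import List, Optional


def missing_path_entries(tensorrt_root: str, path_value: Optional[str] = None) -> List[str]:
    """Return the TensorRT bin/lib folders that do not appear in PATH."""
    if path_value is None:
        path_value = os.environ.get("PATH", "")

    def norm(p: str) -> str:
        # Windows paths are case-insensitive and may carry a trailing backslash
        return os.path.normcase(os.path.normpath(p))

    entries = {norm(p) for p in path_value.split(os.pathsep) if p}
    required = [str(Path(tensorrt_root) / sub) for sub in ("bin", "lib")]
    return [d for d in required if norm(d) not in entries]
```

For example, `print(missing_path_entries(r"C:\TensorRT-x.y.z"))`, with the path replaced by your actual TensorRT folder. An empty list means both folders are on PATH.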

Activate the Python environment where you want to install TensorRT, navigate to the python folder inside your unzipped TensorRT folder, and pip install the TensorRT .whl file that matches your Python version (3.6, 3.7, …).
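Wheel file names encode the Python version as a tag such as `cp37` for CPython 3.7, which is how you tell the files in the python folder apart. The sketch below picks the matching wheel for the running interpreter; `pick_wheel` is a hypothetical helper, and the file names in the usage example are illustrative rather than taken from a specific TensorRT release.

```python
import sys
from pathlib import Path
from typing import Iterable, Optional, Tuple


def pick_wheel(filenames: Iterable[str], version: Tuple[int, int] = sys.version_info[:2]) -> Optional[str]:
    """Return the first .whl whose CPython tag (e.g. cp37) matches the given Python version."""
    tag = f"cp{version[0]}{version[1]}"
    for name in filenames:
        if not name.endswith(".whl"):
            continue
        # Wheel file names: {dist}-{version}[-{build}]-{python tag}-{abi tag}-{platform}.whl
        parts = Path(name).stem.split("-")
        # The python tag may be a dotted set such as "cp37.cp38"
        if len(parts) >= 5 and tag in parts[-3].split("."):
            return name
    return None
```

Passing `os.listdir()` of the python folder returns the wheel to install, which you can then install with `python -m pip install` followed by that file name, run from the same environment.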

You now have the TensorRT library installed!
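A quick way to confirm the installation is to import the package and print its version. The sketch below wraps that in a hypothetical helper so a failed import is reported instead of raising; it assumes the package is importable as `tensorrt`, which exposes `__version__`.

```python
import importlib
from typing import Optional


def installed_version(module_name: str = "tensorrt") -> Optional[str]:
    """Import module_name and return its __version__, or None if the import fails."""
    try:
        module = importlib.import_module(module_name)
    except ImportError:
        # On Windows, missing DLLs (e.g. an incomplete PATH) typically surface as ImportError too
        return None
    return getattr(module, "__version__", "unknown")


if __name__ == "__main__":
    version = installed_version()
    print(f"TensorRT {version}" if version else "TensorRT could not be imported")
```

If the import fails even though pip reported success, the bin and lib entries in PATH are the first thing to check.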

If you want to use PyTorch or TensorFlow models and convert them to TensorRT format, you may want to install these additional packages. Install them the same way as before (go to each package's folder inside the unzipped TensorRT folder and pip install its .whl file):

- uff
- graphsurgeon
- onnx_graphsurgeon
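The same "go to the folder and pip install the .whl" step can be repeated for every extra package with a short script. This is a sketch, assuming the packages sit in folders named uff, graphsurgeon and onnx_graphsurgeon directly under the TensorRT root; `pip_commands` and `install_extras` are hypothetical helpers. Building the commands separately from running them makes it easy to inspect what would be installed first.

```python
import subprocess
import sys
from pathlib import Path
from typing import List

# Assumed folder names inside the unzipped TensorRT folder
EXTRA_FOLDERS = ("uff", "graphsurgeon", "onnx_graphsurgeon")


def pip_commands(tensorrt_root: str) -> List[List[str]]:
    """Build one 'pip install' command per .whl found in the extra-package folders."""
    commands = []
    for folder in EXTRA_FOLDERS:
        # glob() on a missing folder simply yields nothing
        for wheel in sorted((Path(tensorrt_root) / folder).glob("*.whl")):
            # Use the current interpreter's pip so the wheel lands in this environment
            commands.append([sys.executable, "-m", "pip", "install", str(wheel)])
    return commands


def install_extras(tensorrt_root: str) -> None:
    """Run each pip command, stopping at the first failure."""
    for cmd in pip_commands(tensorrt_root):
        subprocess.run(cmd, check=True)
```

Calling `print(pip_commands(r"C:\TensorRT-x.y.z"))` (placeholder path) shows the planned commands; `install_extras` with the same path runs them.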

Now you have all the necessary packages to start exporting models to the .engine format and running inference on them!
