Objective:

We are going to compare three kinds of model for text classification, DNN, CNN, and RNN, and figure out which of them works better.

Along the way, we are going to learn:

How to use DNN, CNN, and RNN models to classify text in Keras.

How to tokenize and integerize a corpus of text for training in Keras.

How to do one-hot encodings in Keras.

How to use embedding layers to represent words in Keras.

How to use the bag-of-words representation for sentences.

Let’s first look at the dataset we are using in this blog. The code illustrated here solves a text classification problem: predicting the source of an article from its title.

Before diving deep, let’s introduce some basic terms that we are going to use throughout the blog.

`VOCAB_SIZE`: how many distinct words we have in our dataset.

`DATASET_SIZE`: how many titles we have.

`MAX_LEN`: the maximum length of the titles we have, counted in words.

To feed the text to a model we need to convert it to numbers, so we are going to use the `Tokenizer` class from `keras.preprocessing.text`.

Let’s see how it works, using the `Tokenizer` that we have imported from Keras.

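    from tensorflow.keras.preprocessing.text import Tokenizer

    # Build the word index from every title, then map each title to integer ids.
    tokenizer = Tokenizer()
    tokenizer.fit_on_texts(titles_df.title)
    integerized_titles = tokenizer.texts_to_sequences(titles_df.title)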

Let’s see a sample output of this `Tokenizer`.
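To make the idea concrete, here is a small pure-Python sketch of what integerizing a corpus means. It only loosely mirrors the Keras `Tokenizer` (lowercasing, splitting on spaces, giving the most frequent words the smallest ids, starting from 1) and is not its implementation. The titles and the helper names `fit_word_index` and `texts_to_ids` are made up for illustration.

```python
from collections import Counter

# Hypothetical toy titles, not taken from the real dataset.
toy_titles = [
    "show hn a new text editor",
    "a new editor for python",
    "python text tools",
]

def fit_word_index(texts):
    """Map each word to an integer id, most frequent word first, ids from 1."""
    counts = Counter(word for text in texts for word in text.lower().split())
    # sorted() is stable, so ties keep their order of first appearance.
    ranked = sorted(counts.items(), key=lambda item: -item[1])
    return {word: idx for idx, (word, _) in enumerate(ranked, start=1)}

def texts_to_ids(texts, word_index):
    """Replace every known word by its id; unknown words are dropped."""
    return [
        [word_index[w] for w in text.lower().split() if w in word_index]
        for text in texts
    ]

word_index = fit_word_index(toy_titles)
toy_sequences = texts_to_ids(toy_titles, word_index)

vocab_size = len(word_index)                      # plays the role of VOCAB_SIZE
dataset_size = len(toy_titles)                    # plays the role of DATASET_SIZE
max_len = max(len(seq) for seq in toy_sequences)  # plays the role of MAX_LEN

print(toy_sequences)  # [[6, 7, 1, 2, 3, 4], [1, 2, 4, 8, 5], [5, 3, 9]]
```

Id 0 is never assigned to a word here, which leaves it free to be used for padding.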

We “pad” the shorter titles in the dataset with zeros up to the maximum length, so that every vector fed into the model has the same size.

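    from tensorflow.keras.preprocessing.sequence import pad_sequences

    def create_sequences(texts, max_len=MAX_LEN):
        # Integerize the texts, then zero-pad at the end ('post') up to max_len.
        sequences = tokenizer.texts_to_sequences(texts)
        padded_sequences = pad_sequences(sequences, maxlen=max_len, padding='post')
        return padded_sequences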

After tokenizing the sentences and padding them to the maximum length, each title comes out as a fixed-length vector of integers.
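As an illustration of “post” padding, here is a minimal pure-Python sketch. The helper `pad_post` and the integer sequences are hypothetical; the truncation rule (keeping the last `max_len` items of an over-long sequence) reflects my understanding of the `pad_sequences` default and should be read as an assumption.

```python
def pad_post(sequences, max_len, pad_value=0):
    """Right-pad each sequence with pad_value up to max_len.

    Sequences longer than max_len keep their last max_len items
    (assumed to match the pad_sequences default, truncating='pre').
    """
    padded = []
    for seq in sequences:
        kept = list(seq)[-max_len:]  # drop items from the front if too long
        padded.append(kept + [pad_value] * (max_len - len(kept)))
    return padded

# Hypothetical integerized titles of different lengths.
toy_sequences = [[6, 7, 1, 2, 3, 4], [1, 2, 4, 8, 5], [5, 3, 9]]
toy_padded = pad_post(toy_sequences, max_len=6)

print(toy_padded)
# [[6, 7, 1, 2, 3, 4], [1, 2, 4, 8, 5, 0], [5, 3, 9, 0, 0, 0]]
```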

Next we convert the source of each article into a categorical variable, using Keras `to_categorical`.

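    from tensorflow.keras.utils import to_categorical

    CLASSES = {
        'github': 0,
        'nytimes': 1,
        'techcrunch': 2
    }
    N_CLASSES = len(CLASSES)

    def encode_labels(sources):
        # Map each source name to its class id, then one-hot encode the ids.
        classes = [CLASSES[source] for source in sources]
        # Fixing num_classes keeps the width at N_CLASSES even if a class is absent.
        one_hots = to_categorical(classes, num_classes=N_CLASSES)
        return one_hots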

Let’s see a sample output of it.
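For a feel of what the one-hot labels look like, here is a small pure-Python sketch. The helper `one_hot` and the list of sources are made up for illustration; it imitates the idea behind `to_categorical`, not its implementation.

```python
CLASSES = {'github': 0, 'nytimes': 1, 'techcrunch': 2}

def one_hot(class_ids, num_classes):
    """Turn each class id into a vector with a single 1.0 at that position."""
    rows = []
    for class_id in class_ids:
        row = [0.0] * num_classes
        row[class_id] = 1.0
        rows.append(row)
    return rows

# Hypothetical sources for three titles.
toy_sources = ['nytimes', 'github', 'techcrunch']
toy_labels = one_hot([CLASSES[s] for s in toy_sources], num_classes=len(CLASSES))

print(toy_labels)
# [[0.0, 1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
```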

We use `create_sequences` and `encode_labels` to build the x and y datasets, i.e., from the titles and the sources respectively.

For all the models in this blog we use a simple embedding layer that transforms the word integers into dense vectors. We import it with `from tensorflow.keras.layers import Embedding`.
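Conceptually, an embedding layer is a lookup table with one row of numbers per word id. The sketch below shows just that lookup in pure Python, with random values standing in for the weights that training would learn. The helper names and the sizes (`vocab_size=9`, `embed_dim=4`) are assumptions chosen for the example.

```python
import random

def make_embedding_table(vocab_size, embed_dim, seed=0):
    """Create one small random vector per id 0..vocab_size (id 0 is padding)."""
    rng = random.Random(seed)
    return [
        [rng.uniform(-0.05, 0.05) for _ in range(embed_dim)]
        for _ in range(vocab_size + 1)  # +1 because word ids start at 1
    ]

def embed(sequence, table):
    """Replace every integer id in the sequence by its row of the table."""
    return [table[token_id] for token_id in sequence]

table = make_embedding_table(vocab_size=9, embed_dim=4)
embedded = embed([5, 3, 9, 0, 0, 0], table)  # a hypothetical padded title

print(len(embedded), len(embedded[0]))  # 6 4
```

This is also why the models in this blog size the layer with `VOCAB_SIZE + 1`: the extra row belongs to id 0, which is used for padding.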

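    import tensorflow as tf
    from tensorflow.keras.layers import Dense, Embedding, Lambda
    from tensorflow.keras.models import Sequential

    def build_dnn_model(embed_dim):
        model = Sequential([
            # Word ids start at 1 and 0 is the padding id, hence VOCAB_SIZE + 1 rows.
            Embedding(VOCAB_SIZE + 1, embed_dim, input_shape=[MAX_LEN]),
            # Average the word vectors over the sequence axis (bag of words).
            Lambda(lambda x: tf.reduce_mean(x, axis=1)),
            Dense(N_CLASSES, activation='softmax')
        ])
        model.compile(
            optimizer='adam',
            loss='categorical_crossentropy',
            metrics=['accuracy']
        )
        return model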

We put a custom Keras `Lambda` layer between the `Embedding` layer and the `Dense` softmax layer to average the word vectors returned by the embedding layer. This average is what gets fed to the dense softmax layer. By doing so, we create a model that is simple but that loses information about the word order: it sees sentences as a “bag of words”.
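A tiny worked example shows why averaging throws the word order away. The two-dimensional word vectors below are invented for illustration (a trained embedding would have learned values), and `mean_vector` is a hypothetical helper that plays the role of `tf.reduce_mean(x, axis=1)` for a single sentence.

```python
def mean_vector(vectors):
    """Average a list of equal-length vectors, dimension by dimension."""
    n = len(vectors)
    dim = len(vectors[0])
    return [sum(vec[d] for vec in vectors) / n for d in range(dim)]

# Made-up 2-dimensional word vectors.
toy_vectors = {'dog': [1.0, 0.0], 'bites': [0.0, 2.0], 'man': [3.0, 1.0]}

sentence_a = ['dog', 'bites', 'man']
sentence_b = ['man', 'bites', 'dog']  # same words, opposite meaning

avg_a = mean_vector([toy_vectors[w] for w in sentence_a])
avg_b = mean_vector([toy_vectors[w] for w in sentence_b])

print(avg_a == avg_b)  # True
```

Both sentences produce exactly the same average, so the softmax layer after it cannot tell them apart.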

While training the model we use the `EarlyStopping` callback together with a `PATIENCE` setting. It stops training as soon as the validation loss has not improved for the number of epochs given by `PATIENCE`.
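The patience rule can be sketched in a few lines of plain Python. This is a simplified illustration of the idea, not the Keras callback itself, and the validation losses are made up.

```python
def early_stopping_epoch(val_losses, patience):
    """Return the 0-based epoch at which training would stop, or None."""
    best = float('inf')
    wait = 0  # epochs since the last improvement
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return None  # the loss kept improving often enough, so no early stop

# Hypothetical validation losses: the best value is reached at epoch 2.
toy_val_losses = [1.00, 0.80, 0.70, 0.71, 0.72, 0.73, 0.60]
stop_epoch = early_stopping_epoch(toy_val_losses, patience=3)

print(stop_epoch)  # 5
```

Note that training stops at epoch 5 and never sees the better loss at epoch 6, which is the trade-off of a small patience value.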

    from tensorflow.keras.callbacks import EarlyStopping, TensorBoard

    BATCH_SIZE = 300
    EPOCHS = 100
    EMBED_DIM = 10
    PATIENCE = 3

    dnn_model = build_dnn_model(embed_dim=EMBED_DIM)

    # Stop once val_loss has not improved for PATIENCE epochs; log to MODEL_DIR.
    dnn_history = dnn_model.fit(
        X_train, Y_train,
        epochs=EPOCHS,
        batch_size=BATCH_SIZE,
        validation_data=(X_valid, Y_valid),
        callbacks=[EarlyStopping(patience=PATIENCE), TensorBoard(MODEL_DIR)],
    )

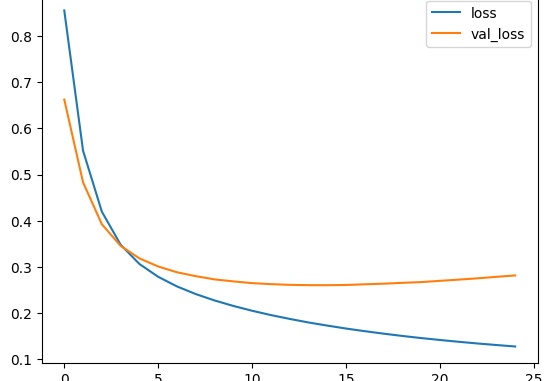

Let’s plot the loss and accuracy over the training history.

We can see that at the end of the 25th epoch we have a training accuracy of 0.95 and a validation accuracy of 0.89, and each step in an epoch took approximately 8 milliseconds.

In the model above, we used `Lambda(lambda x: tf.reduce_mean(x, axis=1))` as an intermediate layer between the embedding layer and the softmax layer. It acts as a bag of words and does not account for the order of words.

In the next model we use a single `GRU` layer instead, which does take the order of words in a sentence into account.

Our intuition says that this should work better than the previous model, since the previous model doesn’t consider the order. Let’s find out whether that intuition is correct.

We also set `mask_zero=True` in the `Embedding` layer so that the padded words (represented by zero) are ignored by this and the subsequent layers.
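To see what masking buys us, here is a toy sketch in pure Python. The recurrence `h = decay * h + x` is a deliberately simple stand-in for a recurrent layer, not a GRU, and the token ids and per-step inputs are invented. The point is only that skipping the steps whose token id is 0 gives the same final state as running on the unpadded sequence.

```python
def toy_recurrence(values, token_ids=None, decay=0.5):
    """Run h = decay * h + x over the sequence, skipping masked steps."""
    h = 0.0
    for step, x in enumerate(values):
        if token_ids is not None and token_ids[step] == 0:
            continue  # padding step: the state is carried over unchanged
        h = decay * h + x
    return h

token_ids = [5, 3, 9, 0, 0, 0]              # a hypothetical padded title
values = [1.0, 2.0, 3.0, 0.25, 0.25, 0.25]  # made-up inputs; padding is not zero

unpadded = toy_recurrence([1.0, 2.0, 3.0])  # 4.25
masked = toy_recurrence(values, token_ids)  # 4.25, padding skipped
unmasked = toy_recurrence(values)           # 0.96875, padding changed the state

print(unpadded, masked, unmasked)
```

Without the mask, the three padding steps drag the final state away from what the real words produced.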

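    from tensorflow.keras.layers import GRU

    def build_rnn_model(embed_dim, units):
        model = Sequential([
            # mask_zero=True marks id 0 (padding) so that later layers can skip it.
            Embedding(VOCAB_SIZE + 1, embed_dim, input_shape=[MAX_LEN], mask_zero=True),
            # A single GRU layer reads the word vectors in order.
            GRU(units),
            Dense(N_CLASSES, activation='softmax')
        ])
        model.compile(
            optimizer='adam',
            loss='categorical_crossentropy',
            metrics=['accuracy']
        )
        return model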

Let’s train this model with early stopping, as above.

    EPOCHS = 100
    BATCH_SIZE = 300
    EMBED_DIM = 10
    UNITS = 16
    PATIENCE = 10

    rnn_model = build_rnn_model(embed_dim=EMBED_DIM, units=UNITS)

    history = rnn_model.fit(
        X_train, Y_train,
        epochs=EPOCHS,
        batch_size=BATCH_SIZE,
        validation_data=(X_valid, Y_valid),
        callbacks=[EarlyStopping(patience=PATIENCE), TensorBoard(MODEL_DIR)],
    )

Let’s plot the loss and accuracy over the history of epochs.

The model reached a val_accuracy of 0.89 and a training accuracy of 0.97 by the 12th epoch, and each step took about 30 milliseconds.

This is a Keras model that uses a single `Conv1D` layer as the intermediate layer between the embedding layer and the softmax layer.

Like the GRU, and unlike the DNN (which acts as a bag of words), the `Conv1D` layer takes the word order in sentences into account.

We also need to flatten the output of the convolution layer before passing it to the softmax layer.

Here as well we set `mask_zero=True` in the `Embedding` layer, as above, so that the zero padding at the end of sentences is marked as padding.
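It helps to work out how big the flattened vector is. With no padding on the convolution (the Keras default, `padding='valid'`), the number of output steps is `(input_len - kernel_size) // stride + 1`. The sketch below plugs in the kernel size, stride, and filter count used in this blog; the title length of 26 is a made-up stand-in for `MAX_LEN`, and `conv1d_output_length` is a hypothetical helper.

```python
def conv1d_output_length(input_len, kernel_size, stride):
    """Number of output steps of a 1D convolution without padding."""
    return (input_len - kernel_size) // stride + 1

MAX_LEN_EXAMPLE = 26  # hypothetical value, only for this illustration
FILTERS = 200
KSIZE = 3
STRIDES = 2

steps = conv1d_output_length(MAX_LEN_EXAMPLE, kernel_size=KSIZE, stride=STRIDES)
flattened_size = steps * FILTERS  # what Flatten() hands to the softmax layer

print(steps, flattened_size)  # 12 2400
```

So under these assumptions the convolution turns each title into 12 steps of 200 features, and the softmax layer sees a single vector of 2400 numbers.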

Let’s define the model.

    from tensorflow.keras.layers import Conv1D, Flatten

    def build_cnn_model(embed_dim, filters, ksize, strides):
        model = Sequential([
            Embedding(
                VOCAB_SIZE + 1,
                embed_dim,
                input_shape=[MAX_LEN],
                mask_zero=True),
            # Slide `filters` kernels of width `ksize` over the word vectors.
            Conv1D(
                filters=filters,
                kernel_size=ksize,
                strides=strides,
                activation='relu',
            ),
            # Flatten the (steps, filters) output into one vector for the softmax.
            Flatten(),
            Dense(N_CLASSES, activation='softmax')
        ])
        model.compile(
            optimizer='adam',
            loss='categorical_crossentropy',
            metrics=['accuracy']
        )
        return model

Let’s train the model.

    EPOCHS = 100
    BATCH_SIZE = 300
    EMBED_DIM = 5
    FILTERS = 200
    STRIDES = 2
    KSIZE = 3
    PATIENCE = 10

    cnn_model = build_cnn_model(
        embed_dim=EMBED_DIM,
        filters=FILTERS,
        strides=STRIDES,
        ksize=KSIZE,
    )

    cnn_history = cnn_model.fit(
        X_train, Y_train,
        epochs=EPOCHS,
        batch_size=BATCH_SIZE,
        validation_data=(X_valid, Y_valid),
        callbacks=[EarlyStopping(patience=PATIENCE), TensorBoard(MODEL_DIR)],
    )

Let’s plot the history of loss and accuracy for all epochs.

We can see that by the 12th epoch we already have a val_accuracy of 0.88 and a training accuracy of 0.98, and each step in an epoch took about 12 milliseconds.

Although the GRU took more time per step in an epoch, it reached a val_accuracy of 0.89 by the 12th epoch, which the bag-of-words (DNN) model only reached at the 25th, and it finished slightly ahead of the CNN (0.88).

So our intuition that a model which takes the order of words in a sentence into account does better than one which ignores it holds up, though on this dataset the gain shows mostly in how quickly the model gets there.

Looking at the time per step, the GRU (30 ms) takes roughly 4 times as long as the DNN (7 to 8 ms) and 2.5 times as long as the CNN (12 ms), and the accuracy at the 12th epoch for both the CNN and the GRU is almost the same as the accuracy at the 25th epoch for the DNN.

For larger, real-world datasets I would expect the GRU to outperform the CNN and the bag-of-words (DNN) model, since it is designed for sequences such as text and captures more detail, so the extra time is worth spending. Hence, if I had to choose a model for a real dataset to get better accuracy, I would choose the GRU and train it for more epochs.

My order would be:

GRU > CNN > bag-of-words (DNN) model

Cheers

If you like the blog, we can connect on LinkedIn.

My GitHub

Code for this blog can be found here.

I have taken inspiration for this blog from Google’s ML repository

Thanks

Kanukollu Goutham Viswa Tej
