In this blog post I am going to compare Dataflow Gen2 vs Notebook in terms of how much it costs for the workload. I will also compare usability as currently the dataflow gen2 has got a lot of built in features which makes it easier to use.

The goal of this blog post is to understand which in my opinion is cheaper and easier to use, which will then be the focus for future blog posts with regards to what I’ve learned along the way, which will hopefully assist you too.

To compare between the two workloads, I am going to be using the same source file as well as do the same transformations which will result in the same result.

For my examples below I am using an F2 Capacity in Australia Southeast which is $0.42/hour. The pricing information can be found here: Microsoft Fabric – Pricing | Microsoft Azure

In both workloads, all that I’m going to do is to add a new column called date num, which will be the date represented in a number format.

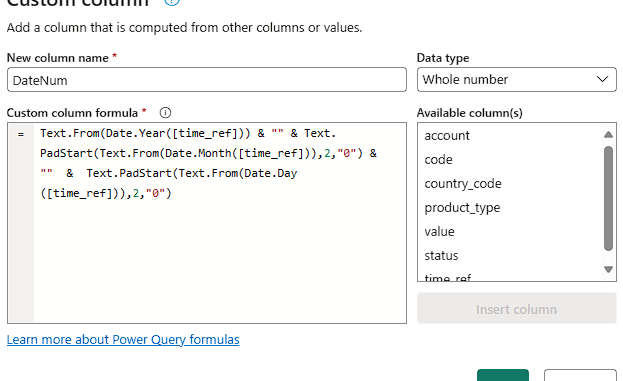

As you can see below, this is how I did it in the dataflow Gen 2.

This is the expected output from the Dataflow Gen2

And this is how I did it when using the notebook to achieve the same results. Please note that the notebook code I did not do this myself. I used the data Wrangler feature to get the desired output I have. Blocked about this previously which you can find the link here:

This is the expected output from the Notebook code.

One of the critical things to validate when using Fabric workloads is how much does this cost.

I am still learning how to calculate the Fabric costs, so this is subject to change. And when this does, I will then update this blog post!

I use the Fabric metrics app to look at both the capacity units consumed and the duration to calculate to calculate the costs of each of the workloads.

As I will explain below, you can see how I determined the cost, for each workload to decide in terms of comparing the costs which workload is cheaper to use.

Below shows the CU(s) and Duration (s) from my two workloads.

I have then calculated the billing costs based on the billing example from the Microsoft documentation: Data warehouse billing and utilization reporting – Microsoft Fabric | Microsoft Learn

Dataflow Gen2 Cost

The duration for the Dataflow Gen2 was 97.18 seconds, so to calculate the cost I did the following below.

Because I am using an F2 capacity the price per CU is $0.42/hour

The cost calculation is then (97.18 x 0.42)/3600 = $0.0113

NOTE: The reason to divide by 3600 is because there are 3600 in an hour. Because our costing is hourly, I must then divide it by 3600 to get the cost per second.

Notebook Cost

The duration for the Notebook was 84.81 seconds, so to calculate the cost I did the following below.

Because I am using an F2 capacity the price per CU is $0.42/hour

The cost calculation is then (84.81 x 0.42)/3600 = $0.0098

Bursting Costs

It is known that bursting can occur when using Fabric workloads. This must potentially be factored in when calculating the costs for workloads when using Fabric.

Thanks to Tristan Malherbe for his assistance to how to calculate the bursting costs.

If I had to make up a bursting example where there was an additional 2000 CU(s) of bursting this is how I would calculate the additional bursting costs.

For an F2 capacity I would calculate the cost for 1 CU to be $0.00005833

This was calculated as: Per Minute CU cost / (CU(s) Capacity * 60 Seconds)

Actual Calculation: $0.007 / (2 * 60) = $0.00005833

The final cost for the additional 2000 CU(s) would be: 2000 * $0.00005833 = $0.1167

Cost Comparison

To compare the costs, I then work out the difference in cost between the Dataflow Gen2 and the Notebook.

This works out to be about 115.14% cheaper to use the notebook compared to the Dataflow Gen2. I understand the cost comparison in my example is very small, but what I have seen on larger workloads is that this becomes quite significant.

Another thing that I must factor in is looking at how many CU(s) are consumed for each of the workloads.

When I compare this there is a significant difference between them, the notebook consumes 340.30% less CU(s). This is certainly something to consider when looking at how many CU(s) you get to consume daily. For an F2 capacity there are 172,800 CU(s) per day to be consumed.

Here is a cost calculator if you want to work out the estimated costs.

Another consideration is which one is easier to use when comparing Dataflows Gen2 or Notebooks.

I personally think that this might vary depending on what changes need to happen with your data.

My example is simple, but I think it still demonstrates how this can be achieved with the different workloads.

Notebook usability

To create the new column using the notebook what I did was to use the Data Wrangler built into the notebook to make the change.

It is certainly more of a challenge to learn a new language such as Python when comparing to using something with a GUI, such as Dataflow Gen2.

With, what I have learnt is that there are a lot of working examples that you can search for and the code very often the code is flexible.

When trying to create the new column using a notebook, I first had to load the data into a data frame. And then I used the Data Wrangler to create my new column with the “New column by example”

This is the code that it created below, the great thing about this is I did not write one line of the actual code!

If you are interested in how this works you can read my blog post An easy way to transform/clean your data using a Notebook in Microsoft Fabric – FourMoo

This made it quite easy to create the column, load it to my data frame and then write the output to a Lakehouse table.

Dataflow Gen2 usability

People will be a lot more familiar with using data flow gen 2 because this is Power Query (as in Power BI Desktop) but on the web.

The Dataflow Gen 2 features a GUI which makes it a lot easier and potentially and potentially quicker to complete tasks.

In my working example, I had to use a custom column to get the data in the format that I wanted It was particularly easy to get this done.

The only additional step I had to do was to enable the data destination, which enabled me to write the data to a Lakehouse table.

Usability Outcome

For me when looking or comparing which one is easier to use between a notebook or the Dataflow Gen 2 my initial reaction is to go with the Dataflow Gen 2 because this is something I am more familiar with.

But to be fair, there is a lot of code examples and functionality with using a notebook. I have also found that when the data sets are larger, a notebook can run things significantly quicker. What I do enjoy with the notebook is that once I have got a piece of code, I can then save this code and reuse it multiple times when needed in other data set requirements.

For me to determine an outcome. I guess it would depend on how much data transformations I need to do, as well as how large the dataset I’m working with is what I would probably do would be to use a combination of both the data flow gen 2 and the notebook.

I could then get the best of both worlds; maybe more complex transformations would be done using a Dataflow Gen 2 and larger datasets would be loaded using notebooks.

With that being said, I am finding that Python is becoming easier the more I use and there is a lot of code out there which can be easily used.

In this blog post I have explained the different costs between the Dataflow Gen and Notebooks.

I also explained some differences on how to transform the data.

In my personal opinion I am going to be using the Notebooks much more in the future. I feel that is where I can learn a lot and share my knowledge with others.

I am certainly going to be focusing on more Fabric content as this is a new area for me and I do hope that it will also be valuable content for you the reader to learn about.

If you have any suggestions about potential future blog posts, please let me know.

Be the first to comment