In the fall of 2023 I had the fantastic opportunity to participate in the KaggleX BIPOC program and learn about MLOps through an in-depth, hands-on project.

KaggleX is a fantastic mentorship program that exists with the goal of diversifying the data space. The organizers match novice and aspiring data scientists with experienced mentors, and each team works on a project together. The program also includes presentations and office hours from diverse data practitioners all over the world. If you are interested, it is a phenomenal way to learn and meet some very cool, smart people.

As my goal for the program was to take a deep dive into the full machine learning lifecycle and productionizing models, my mentor and I decided to work on building out an end-to-end pipeline using TFX. We explored both serving and scaling a prediction service, as well as creating a pipeline for continuous training.

Unsurprisingly, given my previous posts, I wanted to work with book data, and recommender systems were a natural path forward. In the interest of simplicity, instead of a classic end product that takes in a user and their rated books and generates recommendations, we trained the model on user + book data, then pulled out the learned book embeddings to build a recommendation system based on cosine similarity.

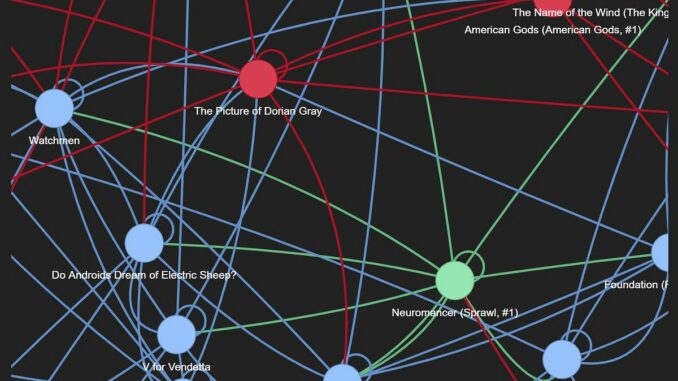

I wanted to be able to create a network graph around a particular book that would let me look at how different books related to each other. If we can graph out the ten most similar books to the book we input, and then add the ten most similar to those, and so on, we can get some interesting recommendations a few layers out from the original. Close to something we enjoy, but also maybe a bit different from what we would normally pick up.
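The layered expansion described above can be sketched in a few lines of plain Python. This is a minimal illustration, not the project's actual code: the function names and the tiny two-dimensional embeddings are made up, and the real application works on the embeddings learned by the model.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def most_similar(title, embeddings, k):
    """Return the k titles whose embeddings are closest to `title`."""
    scores = [
        (cosine_similarity(embeddings[title], vec), other)
        for other, vec in embeddings.items()
        if other != title
    ]
    scores.sort(reverse=True)
    return [other for _, other in scores[:k]]


def build_neighbor_graph(seed, embeddings, k=10, layers=2):
    """Expand outward from `seed`, linking each book to its k nearest books."""
    edges = set()
    visited = {seed}
    frontier = [seed]
    for _ in range(layers):
        next_frontier = []
        for book in frontier:
            for neighbor in most_similar(book, embeddings, k):
                edges.add((book, neighbor))
                if neighbor not in visited:
                    visited.add(neighbor)
                    next_frontier.append(neighbor)
        frontier = next_frontier
    return edges


# Made-up 2-D embeddings, purely for illustration.
toy_embeddings = {
    "A": [1.0, 0.0],
    "B": [0.9, 0.1],
    "C": [0.0, 1.0],
    "D": [0.1, 0.9],
}
graph = build_neighbor_graph("A", toy_embeddings, k=1, layers=2)
print(sorted(graph))
```

With k=10 and a few layers, the edge set grows quickly, which is what makes the outer rings of the graph interesting to browse.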

You can tinker with it yourself in Colab if you are interested!

We leveraged the UCSD Book Graph, a collection of around 100 million user ratings on about 2 million books. After some EDA and early attempts at training the model, we determined that most ratings on Goodreads are for only a few thousand books (with the most ratings going to Harry Potter and the Hunger Games).

We concluded that the entire data set was noisy and overwhelmed our resources, so we narrowed it to about 40 million ratings on the top 3k most rated books for the training.
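The narrowing step is simple to express. Here is a minimal sketch using only the standard library; the row layout (user_id, book_id, rating), the function name, and the sample rows are assumptions for illustration, and at the real data size you would do the same thing with a dataframe or a query engine.

```python
from collections import Counter


def keep_most_rated(ratings, top_n):
    """Keep only ratings for the top_n most frequently rated books."""
    counts = Counter(book_id for _, book_id, _ in ratings)
    popular = {book_id for book_id, _ in counts.most_common(top_n)}
    return [row for row in ratings if row[1] in popular]


# Hypothetical (user_id, book_id, rating) rows.
ratings = [
    ("u1", "b1", 5), ("u2", "b1", 4), ("u3", "b1", 3),
    ("u1", "b2", 4), ("u2", "b2", 2),
    ("u3", "b3", 5),
]
filtered = keep_most_rated(ratings, top_n=2)
print(len(filtered))
```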

The center of the tech stack was TFRS (TensorFlow Recommenders), the TensorFlow library for recommendation models, built on top of Keras to support the full model workflow. The plan was to build the pipeline out in TFX, run the training in Vertex AI, and deploy the application in GKE with a Streamlit UI. (Another plug for KaggleX: they generously provide a $500 GCP credit!) The diagram below is a high-level overview of the stack.

The TFRS package is pretty well documented, and the developers have put together some fantastic tutorials and starter code. However, there were a few gotchas in the process that we had to get around, and I wanted to document them here. I hope that either they help others or, even better, that our workarounds turn out not to be the best strategies and someone in the know comes across this and provides more insight.

As always, the first element is the code! The full trainer module code is here, and the tutorial is also quite thorough, but I’ll touch on some of the more significant elements, as there are some things that we had to tweak.

A key element is of course the model, which, in the case of TFRS, is a two tower retrieval recommendation architecture. TFRS allows us to use a hybrid content and collaborative filtering system (a system based both on the similarities between items and on what users who liked similar items also like). For a much better explanation of the various recommendation system types, Datacamp has a nice overview and set of code examples: Beginner Tutorial: Recommender Systems in Python.

But in brief, we create two models, one for the query element (in our case users) and one for the candidates (books), that compute representations of our data points as embeddings — our two towers. Then the overall model multiplies them together to figure out how closely they match. The training uses our classic gradient descent and tries to maximize the similarity between users and books to determine what to recommend a given user. This post has an excellent deep dive into TFRS and two tower recommender systems if you want more: MovieLens-1M Deep Dive — Part II, Tensorflow Recommenders.
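To make the two tower idea concrete, here is a minimal sketch in plain Python rather than TFRS. It scores each user against each book with a dot product and, as an assumption made purely for illustration, computes a softmax loss in which the other books in the batch act as negatives. The function names and toy vectors are hypothetical; the trainer module is the reference for what the real model does.

```python
import math


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def in_batch_softmax_loss(user_vecs, book_vecs):
    """Mean cross-entropy where row i's true candidate is book i.

    Every other book in the batch is treated as a negative example.
    """
    total = 0.0
    for i, user in enumerate(user_vecs):
        scores = [dot(user, book) for book in book_vecs]
        max_score = max(scores)
        # Numerically stable log-sum-exp over all candidate scores.
        log_sum_exp = max_score + math.log(
            sum(math.exp(s - max_score) for s in scores)
        )
        total += log_sum_exp - scores[i]
    return total / len(user_vecs)


# Toy embeddings: each user vector lines up with its own book.
users = [[1.0, 0.0], [0.0, 1.0]]
books_aligned = [[1.0, 0.0], [0.0, 1.0]]
books_swapped = [[0.0, 1.0], [1.0, 0.0]]

print(in_batch_softmax_loss(users, books_aligned))
print(in_batch_softmax_loss(users, books_swapped))
```

The loss is lower when each user's embedding points the same way as the book they actually rated, which is the behavior gradient descent pushes the two towers toward.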

To start with our UserModel class creates the embedding representation of our users.

We use our UserModel along with some number of stacked dense layers — depending on how deep we want the model — to create the query side of the architecture. A deeper model can learn more complex patterns, but training it can be more difficult.

And finally we combine the two for training, using FactorizedTopK as our metric.

The FactorizedTopK metric evaluates how well the model ranks items. It essentially measures “how often a true candidate is in the top K candidates for a given query”, so a higher value means the model is placing the items users have interacted with above those they have not.
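The idea behind a top K metric is easy to check by hand. The sketch below is a from-scratch illustration in plain Python, not the TFRS implementation; the function name, the dictionary of book vectors, and the toy values are all hypothetical.

```python
def top_k_accuracy(user_vecs, true_book_ids, book_vecs, k):
    """Fraction of queries whose true book scores among the k highest."""
    hits = 0
    for user, true_id in zip(user_vecs, true_book_ids):
        # Rank every candidate book by its dot product with the user vector.
        ranked = sorted(
            book_vecs,
            key=lambda book_id: sum(
                u * b for u, b in zip(user, book_vecs[book_id])
            ),
            reverse=True,
        )
        if true_id in ranked[:k]:
            hits += 1
    return hits / len(user_vecs)


# Toy data: the second user's true book is only their second-best match.
book_vecs = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [0.5, 0.5]}
user_vecs = [[1.0, 0.0], [0.0, 1.0]]
true_book_ids = ["a", "c"]

print(top_k_accuracy(user_vecs, true_book_ids, book_vecs, k=1))
print(top_k_accuracy(user_vecs, true_book_ids, book_vecs, k=2))
```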

Now, you may notice that I make a lot of hardcoded calls to my data directory in the code above (pd.read_csv('gs://kagglx-book-recommender-bucket/data/book-recs-vertex-training/books/book_title.csv', names=['title'])). I do have a reason for that, although I don’t necessarily advise it!

As I mentioned, the retrieval recommender system in TFRS does come with a clear tutorial and example. However, the first problem we run into is that the tutorial is meant to be run within InteractiveContext, not a distributed environment that one would find in production.

This is an issue because in the code where we create the trainer, we set up a custom config that stores a ‘books’ argument pointing to our transformed data artifact.

    trainer = tfx.extensions.google_cloud_ai_platform.Trainer(
        module_file=module_file,
        examples=ratings_transform.outputs['transformed_examples'],
        transform_graph=ratings_transform.outputs['transform_graph'],
        schema=ratings_transform.outputs['post_transform_schema'],
        train_args=tfx.proto.TrainArgs(num_steps=50),
        eval_args=tfx.proto.EvalArgs(num_steps=10),
        custom_config={
            'epochs': 2,
            # Candidate (book) and query (rating) data, read inside the trainer module.
            'books': books_transform.outputs['transformed_examples'],
            'books_schema': books_transform.outputs['post_transform_schema'],
            'ratings': ratings_transform.outputs['transformed_examples'],
            'ratings_schema': ratings_transform.outputs['post_transform_schema'],
            # Settings that send the training job to Vertex AI.
            tfx.extensions.google_cloud_ai_platform.ENABLE_VERTEX_KEY: True,
            tfx.extensions.google_cloud_ai_platform.VERTEX_REGION_KEY: region,
            tfx.extensions.google_cloud_ai_platform.TRAINING_ARGS_KEY: vertex_job_spec,
            'use_gpu': use_gpu,
        },
    )

We then pass that config element into the model code in order to access the training and eval splits of our candidate data, like so:

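    import glob
    import os

    import tensorflow as tf
    from tfx.types import artifact_utils

    # fn_args is the argument object TFX hands to the trainer module.
    books_uri = fn_args.custom_config['books']
    books_artifact = books_uri.get()[0]
    input_dir = artifact_utils.get_split_uri([books_artifact], 'train')
    book_files = glob.glob(os.path.join(input_dir, '*'))
    books = tf.data.TFRecordDataset(book_files, compression_type="GZIP")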

Then we use the book titles to build out a vocabulary that we go on to use in training. Unfortunately, the object returned by books_uri.get()[0] is available in memory when running in a notebook, but crashes the pipeline when it runs in a distributed environment. This is perhaps due to lazy evaluation, or to a distributed context that leaves the object empty by the time the model code asks for its value.

This issue has been documented in the repo, but there isn’t much activity on it: https://github.com/tensorflow/tfx/issues/5885 ; https://discuss.tensorflow.org/t/tfx-custom-config-argument-in-trainer-not-working/13157

Since the block of code is simply building the directory path to our candidate data artifact in our training or eval splits, we can get around the empty data issue by accessing that data from some other storage. As noted in the issue, though, the correct approach seems to be to pass the artifacts through channels instead of through the custom config, which depends on memory.
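One way to think about the workaround is that a plain string location survives any hand-off between processes, while a live pipeline object may not. The sketch below is only an illustration of that idea: it uses JSON as a stand-in for whatever serialization happens between the pipeline definition and the training job (an assumption, not something confirmed here), and the config keys, helper name, and bucket paths are all hypothetical.

```python
import json


def load_data_locations(custom_config):
    """Read plain-string data locations out of a trainer config dict."""
    return {
        "books": custom_config["books_uri"],
        "ratings": custom_config["ratings_uri"],
    }


# Hypothetical config: only strings and numbers, no live pipeline objects.
config = {
    "epochs": 2,
    "books_uri": "gs://example-bucket/books/train",
    "ratings_uri": "gs://example-bucket/ratings/train",
}

# Stand-in for the trip from the pipeline definition to the training job.
round_tripped = json.loads(json.dumps(config))
print(load_data_locations(round_tripped))
```

The trade-off is the one described in this post: a fixed path is simple and robust, but it bypasses the artifact tracking that the pipeline would otherwise provide.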

A warning, however: the method I am using (just pointing to the same data in both instances) works for this project only because, as described above, the goal is to get the embeddings from the trained model, not to actually predict user + book pairs. We want the embeddings for all of the book titles, so we aren’t worried about keeping training and eval data separated.

I do hope to explore what the actual fix could be in a future post!

The next section of the code is setting up the actual pipeline.

We are using the Trainer component from the GCP extension module, which will create the job run in Vertex AI.

The Vertex job spec establishes things like machine type, and also passes in the registry path for the custom Docker image that we use to run the pipeline. We’ll get much more into this in a bit!

    vertex_job_spec = {
        'project': project_id,
        'worker_pool_specs': [{
            'machine_spec': {
                'machine_type': 'n1-standard-4',
            },
            'replica_count': 1,
            'container_spec': {
                'image_uri': 'gcr.io/kagglx-book-recommender/cb-tfx:latest',
            },
        }],
    }

We use the Kubeflow runner for our pipeline.

I won’t get terribly into the weeds of the pipeline code itself. You can go through it here, but it’s built on typical components such as ExampleGen, SchemaGen, and a couple of transforms.

As with many a pipeline, this one starts by setting up a bucket. We’ll move our input data and trainer modules there as well as provide a spot where the pipeline can dump artifacts and the trained model.

Then of course, the custom image we mentioned above for the machines that are running the pipeline!

    FROM us-docker.pkg.dev/vertex-ai/training/tf-cpu.2-12.py310:latest
    RUN apt-get update
    RUN DEBIAN_FRONTEND=noninteractive TZ=Etc/UTC apt-get -y install tzdata
    RUN python -m pip install -q tensorflow_recommenders==0.7.3 tfx==1.14.0 kfp==1.8.22

The image starts from one of the GCP TensorFlow deep learning images. There are quite a few available for different purposes; this one is basic TensorFlow on CPU.

But the next couple of lines overcome a pretty annoying bit of configuration. See, if you try and run the pipeline without installing tzdata you will see the error

    Check failed: absl::LoadTimeZone("America/Los_Angeles", &default_timezone)

The post “How to Deal with Docker Containers and Timezones using tzdata” breaks it down really well and was a life saver. Long story short, the light versions of various OS distros that we use as base images in Docker leave out extraneous things like time zones, and if our code has a dependency on them somewhere, it’ll fail. So we have to install tzdata to make up for this gap.

Another note: the tzdata install is interactive (it asks for a couple of configs during setup), but we can’t accommodate that in a Docker image build, so we run the install as noninteractive.
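If you want a quick sanity check that an environment can resolve time zones before kicking off a long pipeline run, something like the following works. It is a rough proxy only: the failing check above lives in C++ code, while this sketch uses Python's standard zoneinfo module (Python 3.9+), which usually reads the same system time zone database but can also fall back to the tzdata package from pip. The helper name is made up.

```python
from zoneinfo import ZoneInfo, ZoneInfoNotFoundError


def has_time_zone(name):
    """Return True if the named time zone can be loaded in this environment."""
    try:
        ZoneInfo(name)
    except (ZoneInfoNotFoundError, ValueError):
        return False
    return True


print(has_time_zone("America/Los_Angeles"))
```

Running this inside the built image is a cheap way to confirm the tzdata install took effect.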

Then we throw in the packages we need in order for our pipeline to run, most importantly of course, tensorflow_recommenders.

Finally, we build the image and push to our image registry

    gcloud builds submit --tag $TFX_IMAGE_URI . --timeout=15m --machine-type=e2-highcpu-8

And there we go! We have what we need to compile and run our training pipeline, which we do in the following few lines.

    !/home/jupyter/.local/bin/tfx pipeline compile --pipeline_path=kubeflow_v2_runner.py --engine=vertex
    !/home/jupyter/.local/bin/tfx pipeline create --pipeline_path=kubeflow_v2_runner.py --engine=vertex --build_image
    !/home/jupyter/.local/bin/tfx run create --pipeline_name=book-recs-vertex-training --engine=vertex --project=kagglx-book-recommender

Note that I ran this in a Jupyter notebook, which is why we have all of the /home/jupyter/.local/bin/tfx business in the CLI call instead of just tfx. This is just to point the CLI to the right version of tfx in the environment, and it could be different depending on how you make the call.

We can now go to the Vertex Pipeline UI to see the pipeline running! It takes a bit of time, but in the end, you should see a trained model in the bucket that you pointed the pipeline to as the serving directory.

Let’s jump back to the trainer code for one quick minute to talk about the serving signature. As discussed in the introduction, the goal of the UI is to input the name of a book, and get the most similar books, as learned by the embedding layer. So instead of inputting a data point and getting a prediction, we want to input the index of a book in our vocabulary and get the embeddings.

We wanted to use TensorFlow Serving, basically just to try it out (there are absolutely better setups for dealing with embeddings in ML systems). This odd use case requires us to build a custom serving signature. The first part is a function that takes the input data and determines what to return from the model (if not a prediction).

Then after training we register this method as the serving signature

Now we can use our model the way we had planned!
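For a sense of what calling the served model looks like, here is a minimal sketch that builds a request for TensorFlow Serving's REST predict endpoint without sending it. The host, model name, signature name, and book index are all hypothetical placeholders, 8501 is assumed only because it is TensorFlow Serving's usual REST port, and the exact shape of "instances" depends on how the signature's input is defined.

```python
import json


def build_predict_request(host, model_name, signature_name, book_index, port=8501):
    """Build the URL and JSON body for a TensorFlow Serving REST predict call."""
    url = f"http://{host}:{port}/v1/models/{model_name}:predict"
    body = json.dumps({
        "signature_name": signature_name,
        "instances": [book_index],
    })
    return url, body


# Hypothetical values; substitute your own service address and names.
url, body = build_predict_request("localhost", "book-recs", "get_embedding", 42)
print(url)
print(body)
```

The application would POST that body to the URL and read the embedding out of the JSON response.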

The application and model service setups are pretty boring to look at, mostly a bunch of Kubernetes YAML files. There is a pretty straightforward tutorial on the topic from GCP.

At a high level, we point our service to where our model lives in our bucket, set up TensorFlow Serving via its official Docker image, and launch the service itself with a LoadBalancer. The primary idea is that we set up GKE to automatically scale our service as traffic increases or decreases, so we don’t have to worry about it.

The actual application is deployed using Cloud Build. We create a build that turns our application into a Docker image and launches it in GKE straight from our repository when we push a change. The post MLOps End-To-End Machine Learning Pipeline-CICD is a phenomenal breakdown of that entire process.

Once all of that is set up, we can use the application! The original application ran in Streamlit, but I’ve moved it to Colab as it’s a bit easier to keep up and running there.

As I mentioned above, the results are fun to explore. One thing I found interesting was the graph produced by different books.

Some, like Neuromancer, create pretty spread out graphs, with a fairly varied set of books including things one would expect, like Dune, and a lot of Neil Gaiman, all the way to Lolita and The Adventures of Huckleberry Finn on the outskirts of the graph. Books become much less similar than the original input just a few layers out.

Whereas books like A Court of Thorns and Roses create very inter-networked graphs, with few edge nodes, indicating that readers stick pretty closely within this genre.

Not sure if anyone would find this surprising, but it is fun to see laid out!

All in all the project was a lot of fun, and I can’t recommend the KaggleX program enough.
