Overfitting is one of the most common challenges in machine learning. In this article I’ll explain what it is, why it happens, and how to overcome it.

To overcome a problem, we first need to understand it well.

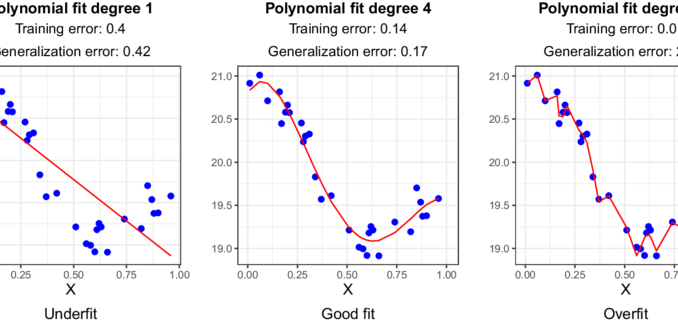

Overfitting is a modeling error.

It happens when a machine learning algorithm learns the training data too well, picking up not only the underlying patterns but also the noise and random fluctuations in the data. In other words, an overfit model is so complex, and fits the training data so closely, that it struggles to generalize to new, unseen data.

Imagine learning a language by memorizing a specific set of sentences without understanding the basic grammar and vocabulary. When you encounter sentences you have never seen before, you will be lost. Overfitting in machine learning is like this scenario: the model has memorized the training data but fails to understand the broader language of the data domain.

Several factors commonly lead to overfitting:

Feature engineering: Including too many features, especially irrelevant or redundant ones, can lead to overfitting because the model tries to find meaning in all of them.

Complex models: Models with too many parameters or layers can pick up noise in the data, leading to overfitting. Deep neural networks are particularly vulnerable when they are too complex for the given data set.

Limited data: When you have a small data set, the model may fit the noise in the data because there are too few examples to learn the underlying patterns.

Noisy data: Data sets that contain inaccurate information, outliers, or inconsistencies can mislead the model into fitting noise instead of actual patterns.

Training for too long: If you train a model for too many epochs or iterations, it may start to fit the training data too closely.

Detecting overfitting is a critical step in managing the problem. Here’s an in-depth look at common overfitting detection techniques:

Validation sets: Split your data set into three parts: the training set, the validation set, and the test set. The training set is used to train the model, the validation set is used to tune the hyperparameters, and the test set is kept separate to evaluate the performance of the final model. If the model performs significantly better on the training set compared to the validation set, this is a clear sign of overfitting.
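The split and the train-versus-validation comparison can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the sine-plus-noise data, the 60/20/20 ratio, and the 1-nearest-neighbour model are choices made for the example, not part of any particular workflow. The 1-nearest-neighbour model is picked because it literally memorizes the training set, so its training error is zero by construction.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data (an assumption for illustration): a sine curve plus noise.
x = rng.uniform(0.0, 1.0, size=100)
y = np.sin(2 * np.pi * x) + rng.normal(0.0, 0.3, size=100)

# Shuffle once, then cut into 60% training, 20% validation, 20% test.
idx = rng.permutation(len(x))
train_idx, val_idx, test_idx = idx[:60], idx[60:80], idx[80:]

def predict_1nn(x_train, y_train, x_query):
    """Predict each query point with the target of its nearest training point."""
    nearest = np.abs(x_query[:, None] - x_train[None, :]).argmin(axis=1)
    return y_train[nearest]

def mse(indices):
    """Mean squared error of the 1-NN model on the rows selected by `indices`."""
    pred = predict_1nn(x[train_idx], y[train_idx], x[indices])
    return float(np.mean((pred - y[indices]) ** 2))

train_mse, val_mse = mse(train_idx), mse(val_idx)
print(f"train MSE: {train_mse:.3f}  validation MSE: {val_mse:.3f}")
print(f"gap (validation - train): {val_mse - train_mse:.3f}")
# test_idx stays untouched until the final model has been chosen.
```

A training error of zero next to a clearly non-zero validation error is the pattern to look for. With real models the gap is usually less extreme, but it is read the same way.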

Cross-validation: Cross-validation is a technique for evaluating a model’s performance on different subsets of the data. In k-fold cross-validation, the data is split into ‘k’ subsets (folds), and the model is trained and validated ‘k’ times, each time holding out a different fold for validation and training on the rest. This helps identify consistent discrepancies between training and validation performance.
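As a rough sketch of k-fold cross-validation, the loop below holds out one fold at a time and records both errors. The helper names `make_folds` and `k_fold_scores`, the synthetic data, and the degree-9 polynomial model are assumptions made for this example.

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(0)

# Synthetic data (an assumption for illustration).
x = rng.uniform(0.0, 1.0, size=60)
y = np.sin(2 * np.pi * x) + rng.normal(0.0, 0.3, size=60)

def make_folds(n_samples, k, rng):
    """Shuffle the row indices and split them into k roughly equal folds."""
    return np.array_split(rng.permutation(n_samples), k)

def k_fold_scores(x, y, folds, deg):
    """Return one (train_mse, val_mse) pair per fold for a degree-`deg` polynomial."""
    scores = []
    for i, val_idx in enumerate(folds):
        # Every fold except the i-th one is used for training.
        train_idx = np.concatenate([f for j, f in enumerate(folds) if j != i])
        model = Polynomial.fit(x[train_idx], y[train_idx], deg)
        train_mse = np.mean((model(x[train_idx]) - y[train_idx]) ** 2)
        val_mse = np.mean((model(x[val_idx]) - y[val_idx]) ** 2)
        scores.append((float(train_mse), float(val_mse)))
    return scores

folds = make_folds(len(x), k=5, rng=rng)
scores = k_fold_scores(x, y, folds, deg=9)
for fold, (tr, va) in enumerate(scores):
    print(f"fold {fold}: train MSE {tr:.3f}, validation MSE {va:.3f}")
```

If the validation error is noticeably higher than the training error in most folds, the discrepancy is unlikely to be an accident of one particular split.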

Learning curves: Learning curves are visual representations of a model’s performance on both training and validation datasets as the amount of training data (or the number of training iterations) increases. By plotting these curves, you can observe how the model’s performance evolves. Overfit models show a large, persistent gap: training performance stays high or keeps improving while validation performance plateaus or deteriorates.
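The numbers behind a learning curve can be computed with a simple loop over growing training-set sizes. The sketch below makes several assumptions for illustration: synthetic sine data, a degree-8 polynomial as the model, and the hypothetical helper `make_data`. It prints the values rather than plotting them to stay dependency-free.

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(0)

def make_data(n):
    """Hypothetical data generator: noisy samples of a sine curve."""
    x = rng.uniform(0.0, 1.0, size=n)
    return x, np.sin(2 * np.pi * x) + rng.normal(0.0, 0.3, size=n)

x_train, y_train = make_data(200)
x_val, y_val = make_data(200)

sizes = [10, 20, 40, 80, 160]
curve = []  # one (n, train_mse, val_mse) row per training-set size
for n in sizes:
    # Fit on the first n training points only; always score on the full validation set.
    model = Polynomial.fit(x_train[:n], y_train[:n], deg=8)
    train_mse = float(np.mean((model(x_train[:n]) - y_train[:n]) ** 2))
    val_mse = float(np.mean((model(x_val) - y_val) ** 2))
    curve.append((n, train_mse, val_mse))
    print(f"n={n:3d}  train MSE {train_mse:.3f}  validation MSE {val_mse:.3f}")
```

Plotting the second and third columns against `n` gives the two curves. A gap that stays wide as `n` grows points to a model that is too flexible for the data, while a gap that closes suggests more data is helping.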

Regularization techniques: Regularization techniques such as L1 (Lasso) and L2 (Ridge) add penalties to model parameters to discourage overly complex solutions. By monitoring the behavior of these penalties and their impact on model performance, you can detect signs of overfitting.

Preventing and mitigating overfitting is a crucial aspect of building reliable machine learning models. Below is an in-depth exploration of strategies to address overfitting:

Feature selection and engineering: Thoughtful feature selection and engineering play a crucial role in mitigating overfitting. Feature selection involves keeping the most relevant features and dropping irrelevant or redundant ones. Feature engineering entails creating new features that better capture underlying patterns in the data.
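One simple way to do feature selection is to rank features by how strongly they correlate with the target and keep the top few. The sketch below is only one of many possible filters. The data set is synthetic (by construction only features 0 and 3 matter), and `top_k_by_correlation` is a hypothetical helper written for this example.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical data set: 10 features, of which only features 0 and 3 drive the target.
X = rng.normal(size=(500, 10))
y = 3.0 * X[:, 0] - 2.0 * X[:, 3] + rng.normal(0.0, 0.5, size=500)

def top_k_by_correlation(X, y, k):
    """Indices of the k features with the largest absolute Pearson correlation with y."""
    Xc = X - X.mean(axis=0)
    yc = y - y.mean()
    corr = (Xc.T @ yc) / (np.linalg.norm(Xc, axis=0) * np.linalg.norm(yc))
    return np.sort(np.argsort(-np.abs(corr))[:k])

selected = top_k_by_correlation(X, y, k=2)
X_reduced = X[:, selected]  # train the model on these columns only
print("selected feature indices:", selected)
```

A correlation filter only sees linear, one-feature-at-a-time relationships, so it can miss features that matter through interactions. It is a cheap first pass rather than a complete method.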

More data: In many cases, the best defense against overfitting is more data. A larger data set helps the model generalize better because it has more examples to learn from. When collecting additional data is feasible, it is often one of the most effective ways to improve model performance.

Simpler models: The principle of Occam’s razor suggests that simpler models should be preferred over complex models when they perform equally well. Simpler models with fewer parameters, such as linear regression or shallow decision trees, are less susceptible to overfitting. Choose a model complexity that matches the complexity of your data.

Regularization: Regularization techniques impose penalties on model parameters, discouraging them from taking extreme values. Two common types are L1 (Lasso) and L2 (Ridge) regularization. L1 regularization encourages sparsity (some parameters become exactly zero), which may aid in feature selection. L2 regularization prevents parameters from becoming too large.
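The two effects can be seen in a small NumPy sketch. For L2, ridge regression has a closed-form solution, and its weights end up with a smaller norm than the unpenalized ones. For L1, the sketch shows the soft-thresholding step that L1-penalized solvers are built around; it equals the full Lasso solution only in the special case of orthonormal features, so treat it as an illustration of where the zeros come from rather than a Lasso implementation. The data and the function names `ridge_fit` and `soft_threshold` are assumptions for this example.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic regression problem (an assumption): only the first two weights are non-zero.
X = rng.normal(size=(30, 10))
true_w = np.array([2.0, -1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0])
y = X @ true_w + rng.normal(0.0, 0.5, size=30)

def ridge_fit(X, y, lam):
    """Closed-form L2-regularized least squares: solve (X^T X + lam * I) w = X^T y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(n_features), X.T @ y)

def soft_threshold(w, lam):
    """Shrink every weight toward zero by lam; weights with |w| <= lam become exactly zero."""
    return np.sign(w) * np.maximum(np.abs(w) - lam, 0.0)

w_ols = ridge_fit(X, y, lam=0.0)     # no penalty: ordinary least squares
w_ridge = ridge_fit(X, y, lam=10.0)  # L2 penalty shrinks the weights
print("weight norm without penalty:", np.linalg.norm(w_ols))
print("weight norm with L2 penalty:", np.linalg.norm(w_ridge))

w_sparse = soft_threshold(np.array([3.0, -0.5, 0.2, -2.0]), lam=1.0)
print("after soft-thresholding:", w_sparse)  # the two small weights are now zero
```

The value of `lam` controls the strength of the penalty and is itself a hyperparameter, usually chosen on the validation set.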

Early stopping: Monitor the model’s performance on the validation set during training. When you notice that model performance on the validation set starts to decline or plateau while training performance continues to improve, this is a sign of overfitting. Stop training at this point to prevent further overfitting.
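A common way to automate this is a "patience" rule: stop once the validation loss has not improved for a fixed number of epochs, then roll back to the best epoch. The function below is a minimal sketch of that rule. The name `early_stopping_epoch`, the `patience` and `min_delta` parameters, and the list of losses are all made up for the example. In a real training loop the check would run after each epoch instead of over a precomputed list.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (stop_epoch, best_epoch) for a sequence of per-epoch validation losses.

    Training stops once the loss has failed to improve on the best value
    by more than `min_delta` for `patience` consecutive epochs.
    """
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch
    # Patience never ran out: training went through every epoch.
    return len(val_losses) - 1, best_epoch

# Made-up validation losses: improving until epoch 3, then drifting upward.
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.51, 0.56, 0.60, 0.65]
stop_epoch, best_epoch = early_stopping_epoch(val_losses, patience=3)
print(f"stop at epoch {stop_epoch}, restore weights from epoch {best_epoch}")
# prints: stop at epoch 6, restore weights from epoch 3
```

Saving a checkpoint whenever the validation loss improves makes the rollback to `best_epoch` possible.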

Ensemble methods: Ensemble methods such as Random Forests and Gradient Boosting combine multiple models to make predictions. These models can be less susceptible to overfitting because they combine the decisions of several simpler models. Ensuring diversity among component models is fundamental to the success of ensemble methods.
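To give a feel for why averaging helps, here is a small sketch of bagging (bootstrap aggregating), the idea that Random Forests build on. It is not a Random Forest and not Gradient Boosting: the component models are plain polynomials, and the data, the helper `bagged_predictions`, and all parameter values are assumptions for the example. Diversity comes from fitting each model on a different bootstrap resample.

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(0)

# Synthetic data (an assumption for illustration).
x = rng.uniform(0.0, 1.0, size=80)
y = np.sin(2 * np.pi * x) + rng.normal(0.0, 0.3, size=80)
x_grid = np.linspace(0.05, 0.95, 50)
target = np.sin(2 * np.pi * x_grid)  # noise-free curve, known only because the data is synthetic

def bagged_predictions(x, y, x_query, n_models=25, deg=6):
    """Fit one polynomial per bootstrap resample; return predictions of shape (n_models, n_query)."""
    preds = []
    for _ in range(n_models):
        sample = rng.integers(0, len(x), size=len(x))  # draw rows with replacement
        model = Polynomial.fit(x[sample], y[sample], deg)
        preds.append(model(x_query))
    return np.array(preds)

all_preds = bagged_predictions(x, y, x_grid)
ensemble_pred = all_preds.mean(axis=0)  # the ensemble simply averages its members

individual_mse = np.mean((all_preds - target) ** 2, axis=1)
ensemble_mse = float(np.mean((ensemble_pred - target) ** 2))
print(f"average MSE of single models: {individual_mse.mean():.4f}")
print(f"MSE of the averaged ensemble: {ensemble_mse:.4f}")
```

For squared error, the averaged prediction can never be worse than the average of the individual errors, and the more the members disagree with each other, the bigger the improvement.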

Data augmentation: Data augmentation techniques increase the effective size of your dataset by applying transformations or introducing variations to the data. This is especially useful for image and text data, where simple transformations such as cropping, rotating, or replacing words with synonyms can create new examples.
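For images, two of the simplest transformations are a random crop and a random horizontal flip. The sketch below applies both to a NumPy array. The `augment` function, the 32x32 random "image", and the crop size are placeholders chosen for the example, and real pipelines typically use a dedicated library for this.

```python
import numpy as np

rng = np.random.default_rng(0)

def augment(image, crop_size):
    """Return a randomly cropped and randomly mirrored copy of an (H, W, C) image array."""
    h, w = image.shape[:2]
    top = rng.integers(0, h - crop_size + 1)
    left = rng.integers(0, w - crop_size + 1)
    patch = image[top:top + crop_size, left:left + crop_size]
    if rng.random() < 0.5:
        patch = patch[:, ::-1]  # horizontal flip
    return patch.copy()

# Placeholder image: random pixel values standing in for a real 32x32 RGB picture.
image = rng.integers(0, 256, size=(32, 32, 3), dtype=np.uint8)

# Each call yields a slightly different training example from the same source image.
augmented = [augment(image, crop_size=28) for _ in range(4)]
print([a.shape for a in augmented])
```

Only use transformations that keep the label valid. A horizontal flip is fine for most photos, for example, but it would change the meaning of an image containing text.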

Hyperparameter tuning: Experiment with different hyperparameters to find the right balance between model complexity and generalizability. The main hyperparameters include learning rate, batch size, dropout rates, and number of layers in the neural network. Hyperparameter tuning can be performed using techniques such as grid search or random search.
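A grid search is just a loop over every combination of candidate values, scoring each one on the validation set. The sketch below tunes two stand-in hyperparameters, polynomial degree and L2 penalty strength, because they are easy to demonstrate with NumPy alone. The grid values, the data, and the helper names are assumptions for the example. The same loop applies to learning rate, batch size, or dropout rate.

```python
import itertools
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data (an assumption), split into training and validation parts.
x = rng.uniform(-1.0, 1.0, size=120)
y = np.sin(np.pi * x) + rng.normal(0.0, 0.3, size=120)
x_train, y_train = x[:80], y[:80]
x_val, y_val = x[80:], y[80:]

def fit_ridge_poly(x, y, degree, lam):
    """Ridge regression on the polynomial features 1, x, ..., x^degree."""
    A = np.vander(x, degree + 1, increasing=True)
    return np.linalg.solve(A.T @ A + lam * np.eye(degree + 1), A.T @ y)

def mse(w, x, y):
    """Mean squared error of the polynomial with coefficients w."""
    A = np.vander(x, len(w), increasing=True)
    return float(np.mean((A @ w - y) ** 2))

grid = {"degree": [1, 3, 5, 9], "lam": [1e-3, 1e-1, 10.0]}
results = []  # one (validation_mse, degree, lam) row per combination
for degree, lam in itertools.product(grid["degree"], grid["lam"]):
    w = fit_ridge_poly(x_train, y_train, degree, lam)
    results.append((mse(w, x_val, y_val), degree, lam))

best_mse, best_degree, best_lam = min(results)
print(f"best: degree={best_degree}, lam={best_lam}, validation MSE={best_mse:.3f}")
```

Random search replaces `itertools.product` with random draws from each range, which often covers large search spaces more efficiently. Either way, report final performance on the held-out test set, not on the validation set used for tuning.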
