Demo: Predicting Fashion Clothes

In this demo, we will go step by step through the different aspects of classification with a neural network. First, let's recall the typical architecture of a neural network:

- An input layer

- One or more hidden layers

- An output layer

We already saw this in the previous post, but I did not talk about the hyperparameters.

When we talk about training a neural network, we often encounter two types of parameters: weights and biases, which the network learns during training, and hyperparameters, which guide the learning process. Hyperparameters are external configuration settings that you, as the developer, choose before training your neural network.

Input Layer Shape: The input layer is the initial layer of the neural network, and its shape is determined by the number of features or variables in your dataset. Each feature corresponds to a node in the input layer.

Hidden Layer(s): The hidden layers are the intermediate layers between the input and output layers. The number of hidden layers and the arrangement of neurons within these layers are hyperparameters that you can configure based on the complexity of the problem.

Neurons per Hidden Layer: The number of neurons in each hidden layer is a crucial hyperparameter. It determines the capacity of the network to learn intricate patterns. Increasing the number of neurons can enhance the model’s ability to capture complex relationships but may also lead to longer training times.

Output Layer Shape: The output layer shape is determined by the nature of the problem. For regression tasks, where the goal is to predict a continuous value, the output layer usually has one neuron. For classification tasks with multiple classes, the output layer may have multiple neurons, each representing a class.

Hidden Activation: The activation function applied to the neurons in the hidden layers. Common choices include Rectified Linear Unit (ReLU), Sigmoid, or Hyperbolic Tangent (tanh). The activation function introduces non-linearity into the model, allowing it to learn complex relationships. We will look at the differences between them later.

Output Activation: The activation function applied to the neurons in the output layer. The choice depends on the nature of the problem. For binary classification, a Sigmoid function is often used, while for multi-class classification, a Softmax function is common. For regression tasks, a linear activation function might be appropriate.

Loss Function: The loss function quantifies how well the model is performing. It measures the difference between the predicted values and the actual values. The choice of the loss function depends on the type of problem: Mean Squared Error (MSE) for regression and Cross-Entropy for classification.
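To make the two losses concrete, here is a minimal pure-Python sketch. The function names are ours, not Keras's. For classification with integer labels, cross-entropy only looks at the probability the model gave to the true class and takes -log of it. MSE averages the squared errors. Keras's `SparseCategoricalCrossentropy` and `MeanSquaredError` compute the same quantities averaged over a batch, with extra numerical safeguards (for example against log(0)).

```python
import math


def sparse_categorical_crossentropy(probs, label):
    """Cross-entropy for one example whose true class is given as an integer."""
    # Only the probability assigned to the true class matters: loss = -log(p_true)
    return -math.log(probs[label])


def mean_squared_error(y_true, y_pred):
    """Average of the squared differences, the usual regression loss."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# A confident, correct prediction gives a small loss...
print(sparse_categorical_crossentropy([0.1, 0.7, 0.2], label=1))  # about 0.357
# ...while a confident, wrong one is penalized heavily
print(sparse_categorical_crossentropy([0.1, 0.7, 0.2], label=0))  # about 2.303
print(mean_squared_error([1.0, 2.0], [1.0, 4.0]))                  # 2.0
```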

Optimizer: The optimizer determines how the neural network updates its weights during training to minimize the loss. Common optimizers include Stochastic Gradient Descent (SGD), Adam, and RMSprop. The choice of optimizer and its parameters (learning rate, momentum) can significantly impact the training process.
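Putting the output-related choices above side by side, a small lookup like the following is one way to remember them. `output_layer_config` is a hypothetical helper of ours, and the mapping is a rule of thumb rather than anything Keras enforces. The strings are standard Keras identifiers that you could pass to `Dense(..., activation=...)` and `compile(loss=...)`.

```python
def output_layer_config(task, n_classes=None):
    """Typical output units, output activation and loss for a given task type."""
    if task == "regression":
        # One continuous value, no squashing, squared-error loss
        return {"units": 1, "activation": "linear", "loss": "mse"}
    if task == "binary":
        # One probability for the positive class
        return {"units": 1, "activation": "sigmoid", "loss": "binary_crossentropy"}
    if task == "multiclass":
        if n_classes is None:
            raise ValueError("multiclass needs n_classes")
        # One probability per class; integer labels (0..n_classes-1) use the sparse loss
        return {"units": n_classes, "activation": "softmax",
                "loss": "sparse_categorical_crossentropy"}
    raise ValueError(f"unknown task: {task!r}")


# Fashion-MNIST: 10 classes with integer labels
print(output_layer_config("multiclass", n_classes=10))
```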

The book Hands-On Machine Learning with Scikit-Learn, Keras & TensorFlow by Aurélien Géron (page 295) describes the typical architecture of a classification network.

Knowing this, let's create our neural network classification model!

First we need our data! I am using Fashion-MNIST (a benchmark dataset that ships with TensorFlow/Keras):

Fashion-MNIST is a dataset of Zalando’s article images consisting of a training set of 60,000 examples and a test set of 10,000 examples. Each example is a 28×28 grayscale image, associated with a label from 10 classes.

More details here: fashion_mnist | TensorFlow Datasets

To load the data, we use the following:

```python
import tensorflow as tf
from tensorflow.keras.datasets import fashion_mnist

(train_data, train_labels), (test_data, test_labels) = fashion_mnist.load_data()
```

But before diving into the creation of our model, we need to understand the data! IT IS CRUCIAL TO UNDERSTAND WHAT YOU ARE WORKING ON!

A reminder: the input data and the outputs must be in numerical form!

So, what should train_data and train_labels contain? Numbers!

Let's look at the shapes first:

```python
print("My train data shape:", train_data.shape)      # (60000, 28, 28)
print("My train labels shape:", train_labels.shape)  # (60000,)
print("Maximum label:", train_labels.max())          # 9
```

Understanding the shape of each layer is essential for configuring the architecture of your neural network. It helps in determining the number of parameters, connections, and the overall flow of information through the network. The shape of the layers also influences the interpretation of the network’s output, especially in multi-dimensional tasks.

For example, if you have an image classification task and the output layer has a shape of (10,), it implies that your model is designed to classify input images into 10 different classes. Each element in the output corresponds to the probability or confidence score assigned to a particular class.
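In code, reading such an output vector comes down to an argmax: the index of the largest probability is the predicted class. Here is a minimal NumPy sketch. The 10 probabilities are made up for illustration. The class names are the Fashion-MNIST ones.

```python
import numpy as np

class_names = ['T-shirt/top', 'Trouser', 'Pullover', 'Dress', 'Coat',
               'Sandal', 'Shirt', 'Sneaker', 'Bag', 'Ankle boot']

# A made-up softmax output for one image: 10 probabilities, one per class
output = np.array([0.01, 0.00, 0.02, 0.01, 0.03, 0.00, 0.02, 0.01, 0.88, 0.02])

predicted_index = int(np.argmax(output))  # position of the highest probability
print(predicted_index, class_names[predicted_index])  # 8 Bag
print(f"confidence: {output[predicted_index]:.0%}")    # confidence: 88%
```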

How can we interpret this?

It means that our training data contains 60,000 images of clothes, each 28 pixels wide and 28 pixels high (28×28).

It also means that we have 10 different types of clothes: train_labels has shape (60000,), i.e. one integer label per image. Since the labels start at 0 and the maximum is 9, there are 10 possible values.

What are these types (called classes)? They are listed here: zalandoresearch/fashion-mnist: A MNIST-like fashion product database. Benchmark (github.com)

Alright, now we have our data. Let's create the list of class names and visualize an example:

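```python
import matplotlib.pyplot as plt

class_names = ['T-shirt/top', 'Trouser', 'Pullover', 'Dress', 'Coat',
               'Sandal', 'Shirt', 'Sneaker', 'Bag', 'Ankle boot']

# Pick one training image and show it with its class name as the title
index_of_choice = 100
plt.imshow(train_data[index_of_choice], cmap=plt.cm.binary)
plt.title(class_names[train_labels[index_of_choice]])
plt.show()
```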

Amazing, we have a bag! Okay, let's start modelling! In TensorFlow, the process of creating and training a model generally involves three fundamental steps:

Model Creation: Assemble the layers of a neural network either by constructing it from scratch using the functional or sequential API, or by importing a pre-built model, a technique known as transfer learning.

Model Compilation: Define how the model's performance is measured by specifying a loss function and metrics. Also define how the model should improve (update its weights) by choosing an optimizer.

Model Fitting: Allow the model to discern patterns within the data, uncovering the relationship between input (X) and output (y). This step involves the model’s learning process as it strives to map input data to the corresponding output.

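```python
# Model creation: flatten each 28x28 image into 784 values,
# two small hidden layers, and one output neuron per class
model_1 = tf.keras.Sequential([
    tf.keras.layers.Flatten(input_shape=train_data[0].shape),
    tf.keras.layers.Dense(4, activation=tf.keras.activations.relu),
    tf.keras.layers.Dense(4, activation=tf.keras.activations.relu),
    tf.keras.layers.Dense(10, activation=tf.keras.activations.softmax)
])

# Model compilation: integer labels (0-9) -> SparseCategoricalCrossentropy.
# The lowercase "accuracy" string lets Keras pick the accuracy metric matching this loss.
model_1.compile(loss=tf.keras.losses.SparseCategoricalCrossentropy(),
                optimizer=tf.keras.optimizers.Adam(),
                metrics=["accuracy"])

# Model fitting on the raw (non-normalized) pixel values
non_norm_history = model_1.fit(train_data, train_labels, epochs=10,
                               validation_data=(test_data, test_labels))
```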

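Here we create our model with an input layer (Flatten, which turns each 28×28 image into a vector of 784 values), two hidden layers of 4 neurons each, and an output layer of 10 neurons (remember, we have 10 classes).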
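To see what this architecture means in terms of size, we can count the trainable parameters by hand. A Dense layer with n inputs and m neurons has n×m weights plus m biases. The helper names below are ours. `model_1.summary()` should report the same totals. Notice that the first hidden layer squeezes 784 pixel values into just 4 numbers, which is a very narrow bottleneck.

```python
def dense_param_count(n_inputs, n_units):
    """One weight per input-unit connection plus one bias per unit."""
    return n_inputs * n_units + n_units


def mlp_param_counts(input_size, layer_sizes):
    """Parameter count of each Dense layer in a simple stack of Dense layers."""
    counts = []
    n_in = input_size
    for n_units in layer_sizes:
        counts.append(dense_param_count(n_in, n_units))
        n_in = n_units  # the next layer receives this layer's outputs
    return counts


# model_1: Flatten (28*28 = 784 values, no parameters) -> Dense(4) -> Dense(4) -> Dense(10)
counts = mlp_param_counts(28 * 28, [4, 4, 10])
print(counts)       # [3140, 20, 50]
print(sum(counts))  # 3210
```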

But what do the ReLU and softmax activations mean?

In the context of neural networks, activations in the hidden layers refer to the application of activation functions to the outputs of neurons within these layers. Each neuron in a hidden layer receives input, processes it, and then applies an activation function to determine its output.

Activation functions introduce non-linearities to the neural network, allowing it to learn and understand intricate patterns in the data.

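Put simply, without an activation function, stacking layers would only ever produce a linear function of the input, no matter how many layers you add. The non-linear activation is what lets the network fit more complex patterns.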
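Here is a quick NumPy check of why the activation matters. It is a sketch with random weights, and the shapes just mimic our 784 → 4 → 10 network. Two Dense layers without an activation give exactly the same output as a single linear layer. ReLU breaks this, because it is not additive.

```python
import numpy as np

rng = np.random.default_rng(0)

# Two "layers" with no activation function: y = W2 @ (W1 @ x + b1) + b2
W1, b1 = rng.normal(size=(4, 784)), rng.normal(size=4)
W2, b2 = rng.normal(size=(10, 4)), rng.normal(size=10)
x = rng.normal(size=784)
two_linear_layers = W2 @ (W1 @ x + b1) + b2

# The same result from ONE linear layer with W = W2 @ W1 and b = W2 @ b1 + b2
W, b = W2 @ W1, W2 @ b1 + b2
one_linear_layer = W @ x + b
print(np.allclose(two_linear_layers, one_linear_layer))  # True


def relu(z):
    return np.maximum(0, z)


# ReLU is not linear: relu(a + b) differs from relu(a) + relu(b) here
print(relu(-1.0 + 2.0), relu(-1.0) + relu(2.0))  # 1.0 2.0
```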

Common activation functions used in hidden layers include:

ReLU (Rectified Linear Unit):

- f(x)=max(0,x)

- Outputs the input if it is positive; otherwise, it outputs zero.

- Widely used due to its simplicity and effectiveness in capturing non-linearities.

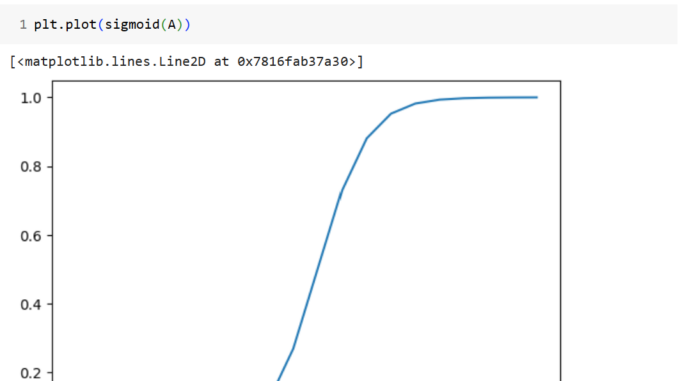

Sigmoid:

- f(x)=1/(1+exp(-x))

- Squashes input values between 0 and 1.

- Mostly used in the output layer for binary classification problems.

Softmax (for Output Layer in Multi-class Classification):

- Converts a vector of arbitrary real values into a probability distribution.

- Commonly used in the output layer for multi-class classification problems.
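The three functions above are short enough to write directly in NumPy. This is a minimal sketch for illustration; Keras has its own versions in `tf.keras.activations`. Softmax computes softmax(x)_i = exp(x_i) / Σ_j exp(x_j). Subtracting the maximum first is a common trick to avoid overflow and does not change the result.

```python
import numpy as np


def relu(x):
    """max(0, x), element-wise: negative values become 0."""
    return np.maximum(0, x)


def sigmoid(x):
    """1 / (1 + exp(-x)): squashes any real number into (0, 1)."""
    return 1 / (1 + np.exp(-x))


def softmax(logits):
    """Turn a vector of real scores into probabilities that sum to 1."""
    # Subtracting the max leaves the result unchanged but keeps exp() from overflowing
    shifted = logits - np.max(logits)
    exps = np.exp(shifted)
    return exps / exps.sum()


print(relu(np.array([-3.0, 0.0, 2.5])))
print(sigmoid(0.0))  # 0.5
probs = softmax(np.array([2.0, 1.0, 0.1]))
print(probs, probs.sum())  # the largest score gets the largest probability
```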

Let's get back to our model. After 10 epochs, the accuracy is 0.09, i.e. 9%! That is even worse than guessing at random among 10 classes (10%), so we need to improve our model!

Reminder: to improve our model, we can modify various aspects within the three key steps discussed earlier.

Creating a Model:

- Consider adding more layers to increase the model’s complexity.

- Boost the number of hidden units (neurons) within each layer for increased capacity.

- Experiment with different activation functions for each layer to capture diverse patterns.

Compiling a Model:

- Try different optimizers; Adam (the one we already use) is known to work well across a wide range of problems.

- Adjust the learning rate within the optimization function to fine-tune the model’s training process.

Fitting a Model:

- Extend the training duration by fitting the model for more epochs, providing additional opportunities for learning and pattern recognition.
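To get a feel for the learning-rate setting, here is a toy sketch of plain gradient descent (not Adam) on the one-parameter loss (w - 3)², whose minimum is at w = 3. The `gradient_descent` function is hypothetical. It only illustrates the basic update rule w ← w - learning_rate × gradient.

```python
def gradient_descent(learning_rate, steps=100, w=0.0):
    """Minimize the toy loss (w - 3)**2, whose gradient is 2 * (w - 3)."""
    for _ in range(steps):
        grad = 2 * (w - 3)
        w = w - learning_rate * grad  # the basic update that optimizers build on
    return w


print(gradient_descent(0.1))    # very close to 3, the minimum
print(gradient_descent(0.001))  # still far from 3: too small, learning is too slow
print(gradient_descent(1.1))    # huge magnitude: too large, the updates diverge
```

A learning rate that is too small wastes epochs, and one that is too large never settles. This is why the learning rate is usually one of the first hyperparameters worth tuning.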

These adjustments let us tailor the model's architecture and training process to the problem. Experimenting with them is how we end up with a more effective model.

We should also normalize our data! Let's start with that, scaling every value so that 0 ≤ data ≤ 1:

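```python
# Pixel values are integers from 0 to 255: dividing by the maximum scales them to [0, 1].
# Dividing both sets by the same (training) value guarantees identical scaling.
train_data_norm = train_data / train_data.max()
test_data_norm = test_data / train_data.max()
```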
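A slightly more general form of this idea is min-max scaling. You compute the statistics on the training set, then apply them unchanged to the test set. Fashion-MNIST pixels start at 0, so this is equivalent to dividing by the maximum. Here is a minimal NumPy sketch on made-up 2×2 "images" (the function names are ours). Casting to float32 also matches the default float type Keras works with.

```python
import numpy as np


def fit_min_max(train):
    """Compute the scaling statistics from the training set only."""
    return float(train.min()), float(train.max())


def apply_min_max(data, lo, hi):
    """Scale data to [0, 1] using statistics computed on the training set."""
    return (data.astype(np.float32) - lo) / (hi - lo)


# Hypothetical tiny "images" with uint8 pixels, like Fashion-MNIST
train = np.array([[0, 128], [64, 255]], dtype=np.uint8)
test = np.array([[10, 200]], dtype=np.uint8)

lo, hi = fit_min_max(train)
train_norm = apply_min_max(train, lo, hi)
test_norm = apply_min_max(test, lo, hi)  # reuse the TRAIN statistics, never refit on test
print(train_norm.min(), train_norm.max())  # 0.0 1.0
```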

Let's rebuild and retrain the same model on the normalized data:

```python
# Same architecture as model_1; only the input data changes (normalized pixels)
model = tf.keras.Sequential([
    tf.keras.layers.Flatten(input_shape=train_data[0].shape),
    tf.keras.layers.Dense(4, activation=tf.keras.activations.relu),
    tf.keras.layers.Dense(4, activation=tf.keras.activations.relu),
    tf.keras.layers.Dense(10, activation=tf.keras.activations.softmax)
])

model.compile(loss=tf.keras.losses.SparseCategoricalCrossentropy(),
              optimizer=tf.keras.optimizers.Adam(learning_rate=0.001),  # 0.001 is also Adam's default
              metrics=["accuracy"])

norm_history = model.fit(train_data_norm, train_labels, epochs=10,
                         validation_data=(test_data_norm, test_labels))
```

81% accuracy! Only the scaling of the inputs changed, and the accuracy jumped from 9% to 81%.

Let's create a confusion matrix! What is a confusion matrix?

A confusion matrix is a table used in machine learning to evaluate the performance of a classification model. It provides a comprehensive summary of how well the model is performing by breaking down the predictions into various categories.

For a binary classification problem, the confusion matrix consists of four counts:

True Positives (TP):

- The number of instances where the model correctly predicts the positive class.

True Negatives (TN):

- The number of instances where the model correctly predicts the negative class.

False Positives (FP):

- The number of instances where the model incorrectly predicts the positive class when the actual class is negative (Type I error).

False Negatives (FN):

- The number of instances where the model incorrectly predicts the negative class when the actual class is positive (Type II error).
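With more than two classes, these four counts are computed per class. One class is treated as "positive" and all the others as "negative" (one-vs-rest). The sketch below builds a confusion matrix by hand with the same layout as scikit-learn's `confusion_matrix` (rows = true classes, columns = predicted classes). It then extracts TP/FP/FN/TN for one class and divides each row by its total to get per-class percentages. The labels are made up and the function names are ours.

```python
import numpy as np


def confusion_matrix_np(y_true, y_pred, n_classes):
    """Rows are true classes, columns are predicted classes."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    for t, p in zip(y_true, y_pred):
        cm[t, p] += 1
    return cm


def one_vs_rest_counts(cm, k):
    """TP, FP, FN, TN for class k against all the other classes."""
    tp = cm[k, k]
    fp = cm[:, k].sum() - tp  # predicted k, but actually another class
    fn = cm[k, :].sum() - tp  # actually k, but predicted as another class
    tn = cm.sum() - tp - fp - fn
    return int(tp), int(fp), int(fn), int(tn)


# Hypothetical labels for 3 classes
y_true = [0, 0, 1, 1, 2, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0, 2]
cm = confusion_matrix_np(y_true, y_pred, n_classes=3)
print(cm)
print(one_vs_rest_counts(cm, 1))  # (2, 1, 0, 4)

# Row percentages: "of all images of true class i, how many % went to class j"
row_percent = cm / cm.sum(axis=1, keepdims=True) * 100
print(row_percent.round(1))
```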

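Let's do the same with our demo. With 10 classes, the matrix is 10×10: each row is a true class, each column a predicted class, and the diagonal holds the correct predictions.

```python
from sklearn.metrics import confusion_matrix

y_pred = model.predict(test_data_norm).argmax(axis=1)  # most likely class for each test image
cm = confusion_matrix(test_labels, y_pred)
```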

Looks like we are doing well: 69.3% are correctly predicted compared to the true values. However, there are some imperfections. For example, a pullover is correctly predicted 65.1% of the time, but 22.8% of the time it is predicted as a coat!

Is that normal? Pullovers and coats look alike, especially as small 28×28 grayscale images, so it is not surprising that the model confuses them.

Finally, let's try predicting random images:

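```python
import random

import matplotlib.pyplot as plt
import tensorflow as tf


def plot_random_image(model, images, true_labels, classes):
    """Pick a random image, predict its class and plot it with the prediction."""
    # randint includes both ends, so the last valid index is len(images) - 1
    i = random.randint(0, len(images) - 1)
    image_to_predict = images[i]
    true_label = classes[true_labels[i]]

    # The model expects a batch, so add a batch dimension: (28, 28) -> (1, 28, 28)
    pred_probs = model.predict(tf.expand_dims(image_to_predict, axis=0))
    predicted_label = classes[pred_probs.argmax()]

    plt.imshow(image_to_predict, cmap=plt.cm.binary)

    # Green label for a correct prediction, red for a wrong one
    color = "green" if predicted_label == true_label else "red"

    plt.xlabel("Pred: {} {:2.0f}% (True: {})".format(predicted_label,
                                                    100 * pred_probs.max(),
                                                    true_label),
               color=color)
    plt.show()


plot_random_image(model, test_data_norm, test_labels, class_names)
```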

Youhou, it works!
