Two years ago, I was working on a customer project on text summarization and paraphrasing for Turkish. Going through open-source models and papers, I realized that there was, in fact, no pre-trained text generation model for Turkish. I was specifically looking for a sequence-to-sequence model. I found BART, T5 and PEGASUS, all of which were pre-trained for conditional text generation in English. I also found the multilingual versions of the first two, mBART and mT5. Because they were pre-trained in multilingual settings, they support Turkish. However, they saw very little Turkish text, since most of the models' training time and learning capacity went to other languages. Consequently, even though fine-tuning these models worked most of the time, the results were not optimal.

To obtain a well-performing, 100% native Turkish LLM, I decided to pre-train one from scratch. Thankfully, my proposal to pre-train the first sequence-to-sequence Turkish LLM was well received and supported by the company I worked for, VNGRS.

My idea was to implement everything from scratch without any high-level framework such as Hugging Face. This way, I would have complete control over every detail and learn it along the way.

Model Architecture and Pre-training Task

Although the project I was working on back then involved text summarization and paraphrasing, I wanted a model that could be fine-tuned for any text generation task without being tailored to a specific one.

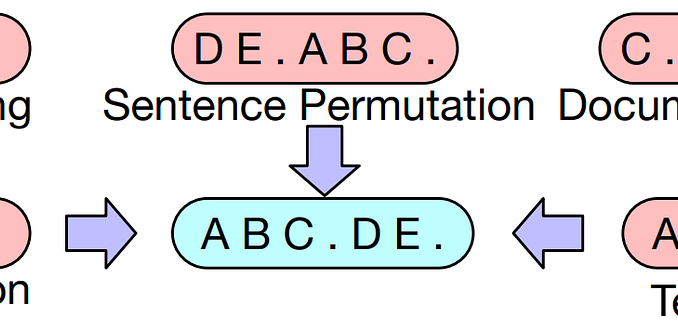

Going through pre-trained sequence-to-sequence language models, it was clear that there were three alternatives: T5, BART and PEGASUS. PEGASUS' pre-training task is optimized for text summarization, so I ruled it out. Even though T5 and BART are similar in model size and architecture, I chose the BART pre-training task (sentence shuffling and span masking) because it is more complex and could therefore lead to better learning.
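To make the two noising operations concrete, here is a minimal pure-Python sketch of BART-style noising, assuming whitespace tokens instead of subword tokens. The span-length distribution (Poisson with λ = 3) and the 30% masking ratio are the values reported in the BART paper, not necessarily the ones used for VBART, and the helper names (`permute_sentences`, `text_infilling`, `bart_noise`) are hypothetical.

```python
import math
import random

MASK = "<mask>"

def permute_sentences(sentences, rng):
    """Sentence permutation: shuffle the order of a document's sentences."""
    shuffled = list(sentences)
    rng.shuffle(shuffled)
    return shuffled

def poisson(lam, rng):
    """Draw from Poisson(lam) with Knuth's method (adequate for small lam)."""
    threshold, k, p = math.exp(-lam), 0, 1.0
    while True:
        p *= rng.random()
        if p <= threshold:
            return k
        k += 1

def text_infilling(tokens, rng, mask_ratio=0.3, lam=3.0):
    """Mask ~mask_ratio of the tokens in Poisson-length spans; each masked run becomes one <mask>."""
    n = len(tokens)
    budget = round(n * mask_ratio)
    is_masked = [False] * n
    masked, attempts = 0, 0
    while masked < budget and attempts < 10 * n:  # attempt cap guarantees termination
        attempts += 1
        span = poisson(lam, rng)
        if span == 0:
            continue  # this sketch skips BART's zero-length (pure insertion) spans
        start = rng.randrange(n)
        for i in range(start, min(start + span, n)):
            if not is_masked[i] and masked < budget:
                is_masked[i] = True
                masked += 1
    noisy = []
    for token, m in zip(tokens, is_masked):
        if not m:
            noisy.append(token)
        elif not noisy or noisy[-1] != MASK:
            noisy.append(MASK)  # collapse a contiguous masked run into a single <mask>
    return noisy

def bart_noise(sentences, seed=0):
    rng = random.Random(seed)
    tokens = " ".join(permute_sentences(sentences, rng)).split()
    return text_infilling(tokens, rng)

doc = ["Bugün hava çok güzel.", "Parkta yürüyüş yaptık.", "Akşam eve döndük."]
print(bart_noise(doc, seed=1))
```

For simplicity, the sketch skips zero-length spans, and adjacent masked spans merge into a single `<mask>`. The model is then trained to reconstruct the original, unshuffled document from this noisy input.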

For the model architecture, I chose mBART because its authors found that the additional LayerNorm layers on top of the encoder and the decoder (post-encoder and post-decoder LayerNorm) stabilize mixed precision training, which was especially crucial given my limited budget.
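The placement of that extra LayerNorm can be sketched in NumPy. This is an illustration under simplifying assumptions, not the VBART code: each sublayer is a random linear map standing in for attention or a feed-forward network, and the residual blocks apply LayerNorm before each sublayer (pre-LN), as mBART does. Without the final LayerNorm, the residual stream keeps growing with depth; with it, every token vector leaving the stack is rescaled to zero mean and unit variance, which is one intuition for why it helps keep FP16 activations in range.

```python
import numpy as np

def layer_norm(x, eps=1e-5):
    """Normalize each token vector (last axis) to zero mean and unit variance."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def pre_ln_stack(x, sublayers, final_norm=True):
    """Pre-LN residual stack: x <- x + f(LN(x)) per sublayer, then an optional final LayerNorm."""
    for f in sublayers:
        x = x + f(layer_norm(x))
    return layer_norm(x) if final_norm else x

rng = np.random.default_rng(0)
d_model, n_tokens, n_layers = 64, 8, 12
# Random linear maps stand in for the attention and feed-forward sublayers.
weights = [rng.normal(0.0, d_model ** -0.5, size=(d_model, d_model)) for _ in range(n_layers)]
sublayers = [lambda h, w=w: h @ w for w in weights]

x = rng.normal(size=(n_tokens, d_model))
raw = pre_ln_stack(x, sublayers, final_norm=False)  # residual stream grows with depth
out = pre_ln_stack(x, sublayers, final_norm=True)   # rescaled by the final LayerNorm
print(f"std without final LN: {raw.std():.2f}, with final LN: {out.std():.2f}")
```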

Tokenizer

First, I needed to train a subword tokenizer for Turkish. I chose Google's SentencePiece Unigram model and trained it on 10 GB of text with a vocabulary size of 32,000. We call this tokenizer VBARTTokenizer.
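A Unigram model treats a word's segmentation as a choice among candidate pieces and picks the most probable one. The pure-Python sketch below shows only that inference step (Viterbi decoding); the training procedure that learns the vocabulary and piece probabilities (EM estimation with vocabulary pruning) is not shown. The pieces and log-probabilities are made up for illustration and are not taken from VBARTTokenizer, and real SentencePiece models also mark word boundaries with `▁`, which the sketch omits.

```python
import math

def viterbi_segment(word, log_probs):
    """Return the most probable split of `word` into pieces from `log_probs`, or None."""
    n = len(word)
    best = [-math.inf] * (n + 1)  # best[i]: highest log-prob of any split of word[:i]
    back = [0] * (n + 1)          # back[i]: start index of the last piece in that split
    best[0] = 0.0
    for end in range(1, n + 1):
        for start in range(end):
            piece = word[start:end]
            if piece in log_probs:
                score = best[start] + log_probs[piece]
                if score > best[end]:
                    best[end], back[end] = score, start
    if best[n] == -math.inf:
        return None  # the word cannot be covered by the vocabulary
    pieces, end = [], n
    while end > 0:  # walk the back-pointers from the end of the word
        start = back[end]
        pieces.append(word[start:end])
        end = start
    return pieces[::-1]

# Toy vocabulary: a few Turkish morphemes plus single-character fallbacks.
TOY_LOG_PROBS = {"ev": -3.0, "ler": -3.5, "imiz": -4.0, "de": -3.0}
TOY_LOG_PROBS.update({ch: -6.0 for ch in "evlrimzd"})

print(viterbi_segment("evlerimizde", TOY_LOG_PROBS))  # ['ev', 'ler', 'imiz', 'de']
```

With the `sentencepiece` Python package, training a model of this type looks like `SentencePieceTrainer.train(input=..., model_prefix=..., vocab_size=32000, model_type="unigram")`; the post does not specify the remaining training options.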

On average, the OpenAI BPE tokenizer uses 3.10 tokens to encode a Turkish word, while our tokenizer uses 1.33. This is because our tokenizer is trained on, and optimized for, Turkish exclusively. Consequently, a 500-word text takes 1,550 tokens with the OpenAI BPE tokenizer but only 665 tokens with ours, a 2.33 times more compact representation.
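These figures follow from simple arithmetic on the per-word averages, often called tokenizer fertility. The sketch below reproduces them. `fertility` assumes the average is taken by tokenizing each whitespace-separated word on its own, which the post does not specify, and `chunk3` is a hypothetical stand-in for a real tokenizer.

```python
def fertility(tokenize, words):
    """Average number of tokens per word; `tokenize` maps a word to a list of tokens."""
    return sum(len(tokenize(w)) for w in words) / len(words)

def chunk3(word):
    """Hypothetical stand-in tokenizer: split a word into 3-character chunks."""
    return [word[i:i + 3] for i in range(0, len(word), 3)]

print(fertility(chunk3, ["ev", "evlerimizde"]))  # (1 + 4) / 2 = 2.5

n_words = 500
openai_tokens = round(n_words * 3.10)  # 1,550 tokens
vbart_tokens = round(n_words * 1.33)   # 665 tokens
print(openai_tokens, vbart_tokens, round(openai_tokens / vbart_tokens, 2))  # 1550 665 2.33
```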

We have made VBARTTokenizer public. It can be accessed here as part of our open-source Turkish NLP library VNLP.

Dataset

Like many before me, I wanted to use a large web corpus as pre-training data. The OSCAR-2201 and mC4 corpora provide an abundance of crawled web content. However, they are very noisy and full of SEO-targeted keywords, titles and so on, so they must be cleaned before they can serve as a denoised corpus. We conducted a thorough analysis at the document and sentence levels, developed rule-based and machine learning-based heuristics, and obtained a final, cleaned corpus of 135 GB of Turkish text containing documents from both OSCAR-2201 and mC4.
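The post does not list the actual heuristics, so the following is only a hypothetical, rule-based sketch of what sentence- and document-level filters of this kind can look like. The thresholds (`min_words`, `max_repeat_ratio`, `min_sentences`) are illustrative, and the machine learning-based filters are not covered.

```python
import re

TERMINAL_PUNCTUATION = (".", "!", "?")

def keep_sentence(sentence, min_words=3, max_repeat_ratio=0.5):
    """Sentence-level filter with illustrative thresholds."""
    words = re.findall(r"\w+", sentence.lower())
    if len(words) < min_words:
        return False  # too short: titles, menu items, lone keywords
    if not sentence.rstrip().endswith(TERMINAL_PUNCTUATION):
        return False  # headings and keyword lists rarely end with punctuation
    top_count = max(words.count(w) for w in set(words))
    return top_count / len(words) <= max_repeat_ratio  # heavy repetition suggests SEO stuffing

def clean_document(text, min_sentences=2):
    """Document-level filter: keep clean sentences, drop documents with too few of them."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    kept = [s for s in sentences if keep_sentence(s)]
    return " ".join(kept) if len(kept) >= min_sentences else None

def clean_corpus(documents):
    """Apply the document filter and drop exact duplicates."""
    seen, cleaned = set(), []
    for doc in documents:
        result = clean_document(doc)
        if result is not None and result not in seen:
            seen.add(result)
            cleaned.append(result)
    return cleaned

spam_doc = ("Ucuz uçak bileti ucuz ucuz bileti ucuz ucuz. "
            "Bugün Ankara'da hava yağmurlu olacak. Akşam saatlerinde fırtına bekleniyor.")
menu = "Ana Sayfa Hakkımızda İletişim"
print(clean_corpus([spam_doc, menu, spam_doc]))
```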

Implementation

I implemented the model in TensorFlow, working from the relevant papers and from GitHub and Hugging Face source code. I also wrote a converter that turns my implementation into the corresponding Hugging Face model so that I could use its generation functions, such as beam search and top-p and top-k sampling.
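Hugging Face's `generate` exposes these strategies through arguments such as `num_beams`, `do_sample`, `top_k` and `top_p`. To show what the last two do, here is a minimal pure-Python sketch of top-k and nucleus (top-p) filtering over one decoding step's logits. It follows the usual order (top-k first, then top-p on the renormalized distribution) but is an illustration, not the library's code.

```python
import math
import random

def filter_top_k_top_p(logits, top_k=0, top_p=1.0):
    """Return {token_id: probability} after top-k, then top-p (nucleus) filtering."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]  # subtract the max for numerical stability
    total = sum(exps)
    ranked = sorted(((e / total, i) for i, e in enumerate(exps)), reverse=True)
    if top_k > 0:
        ranked = ranked[:top_k]                   # keep the k most probable tokens
        z = sum(p for p, _ in ranked)
        ranked = [(p / z, i) for p, i in ranked]  # renormalize before the nucleus step
    nucleus, cumulative = [], 0.0
    for p, i in ranked:
        nucleus.append((p, i))
        cumulative += p
        if cumulative >= top_p:  # smallest prefix whose probability mass reaches top_p
            break
    z = sum(p for p, _ in nucleus)
    return {i: p / z for p, i in nucleus}

def sample_next_token(logits, rng, top_k=0, top_p=1.0):
    """Sample one token id from the filtered distribution."""
    dist = filter_top_k_top_p(logits, top_k, top_p)
    token_ids = list(dist)
    return rng.choices(token_ids, weights=[dist[i] for i in token_ids], k=1)[0]

logits = [math.log(p) for p in (0.5, 0.3, 0.15, 0.05)]
print(filter_top_k_top_p(logits, top_p=0.75))  # keeps tokens 0 and 1, renormalized to ~0.625 / ~0.375
print(sample_next_token(logits, random.Random(0), top_k=1))  # 0
```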

We wrote two data generators to implement the BART pre-training task: one in Python and the other in pure TensorFlow. The reason is that Python's GIL prevents a Python-based data generator from running in parallel, even when it is wrapped by tf.data, whereas TensorFlow ops can be executed in parallel.
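The difference can be sketched as follows: a noising function written only with TensorFlow ops can be traced into a graph and run by `tf.data` on several threads via `num_parallel_calls`, whereas a Python generator fed through `tf.data.Dataset.from_generator` still executes under the GIL. For brevity, the sketch uses simple token-level masking rather than the full BART noising, and `MASK_ID` is a hypothetical token id.

```python
import tensorflow as tf

MASK_ID = 4  # hypothetical id of the <mask> token

def mask_tokens(token_ids, mask_prob=0.15):
    """Replace each token with MASK_ID with probability mask_prob, using TensorFlow ops only."""
    draws = tf.random.uniform(tf.shape(token_ids))
    return tf.where(draws < mask_prob, tf.ones_like(token_ids) * MASK_ID, token_ids)

def build_dataset(sequences, batch_size=2):
    """Yield (noisy input, original target) batches; the map runs in parallel in the TF runtime."""
    ds = tf.data.Dataset.from_tensor_slices(sequences)
    ds = ds.map(lambda ids: (mask_tokens(ids), ids), num_parallel_calls=tf.data.AUTOTUNE)
    return ds.batch(batch_size).prefetch(tf.data.AUTOTUNE)

data = tf.constant([[10, 11, 12, 13], [20, 21, 22, 23], [30, 31, 32, 33]], dtype=tf.int32)
for noisy, target in build_dataset(data):
    print(noisy.numpy(), target.numpy())
```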

Training

During development, I occasionally compared the project to our own James Webb Space Telescope: we had a limited training budget and, consequently, a single shot to succeed. Once everything was in place, we ran several tests before starting the final training.

The training took around 40 days on 8 × Nvidia A100 80 GB GPUs, on a single AWS p4de.24xlarge instance, and cost about $40,000.
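As a rough sanity check, the reported figures translate into GPU-hours and hourly cost as follows. Because both the duration and the cost are approximate, so are the derived numbers.

```python
days, gpus, cost_usd = 40, 8, 40_000

instance_hours = days * 24         # hours on the single instance
gpu_hours = instance_hours * gpus  # A100 GPU-hours
print(instance_hours, gpu_hours)            # 960 7680
print(round(cost_usd / instance_hours, 2))  # 41.67 USD per instance-hour
print(round(cost_usd / gpu_hours, 2))       # 5.21 USD per GPU-hour
```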

First, we trained a model with the Large configuration, which has 374M parameters. We call this model VBART-Large.

We then enlarged this model by doubling the number of its encoder and decoder blocks, initializing the enlarged model from VBART-Large's pre-trained weights, and continued training from there. The result is a 740M-parameter model, which we call VBART-XLarge.
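The post does not say how the pre-trained blocks were mapped onto the deeper stack, so the sketch below shows two plausible layouts under that caveat: duplicating each block in place (`[b0, b0, b1, b1, ...]`) or stacking a copy of the whole stack on top (`[b0, b1, ..., b0, b1, ...]`). Weights are plain nested lists here, and `grow_model` and the dictionary keys are hypothetical; embeddings and every other non-block parameter are carried over unchanged.

```python
import copy

def double_depth(blocks, interleave=True):
    """Return a stack twice as deep whose blocks are independent copies of the pre-trained ones."""
    if interleave:
        return [copy.deepcopy(b) for b in blocks for _ in range(2)]  # [b0, b0, b1, b1, ...]
    return [copy.deepcopy(b) for b in blocks + blocks]               # [b0, b1, ..., b0, b1, ...]

def grow_model(model, interleave=True):
    """Copy the whole model, then double its encoder and decoder stacks."""
    grown = copy.deepcopy(model)
    grown["encoder"] = double_depth(model["encoder"], interleave)
    grown["decoder"] = double_depth(model["decoder"], interleave)
    return grown

# Toy "pre-trained" model: one weight per block is enough to see the layout.
model = {
    "embed": [[0.1, 0.2]],
    "encoder": [{"w": [1.0]}, {"w": [2.0]}],
    "decoder": [{"w": [3.0]}, {"w": [4.0]}],
}
grown = grow_model(model)
print([b["w"][0] for b in grown["encoder"]])  # [1.0, 1.0, 2.0, 2.0]
```

Deep copies matter here: once training resumes, each duplicated block must be free to diverge from its twin.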

Both models have a context length of 1,024 tokens. However, this is flexible: it can be shrunk or extended for shorter or longer sequences, respectively.

Fine-tuning Results

After pre-training, we fine-tuned our models on tasks such as text summarization, text paraphrasing, question answering and question generation.

Comparing our models to mT5 and mBART, we observed that our models significantly outperform the multilingual ones, even when the latter are much bigger. For instance, VBART-Large (374M) is on par with mT5-Large (1.2B), more than three times its size, in question generation and question answering.

Overall, both of our models outperform open-source mT5 and mBART models when fine-tuned for Turkish.

You can try our news summarization and news paraphrasing models on the demo page.

Paper

Lastly, we have written a paper that elaborates on this work. It is currently under double-blind review at a conference, which prevents us from sharing the details. Once the review is over, we will add a link to it and provide details on every phase of the project.
