Today I finally fixed the bug that has haunted me for the last two months, and my model is now complete. After countless hours of debugging with scikit-learn and trying methods like balancing class weights, I have concluded that TensorFlow’s evaluate() function in v2.12 is not working correctly on Ubuntu systems.

When I asked TensorFlow to evaluate my model, it gave me the following metrics:

loss: 0.3311 - binary_accuracy: 0.8877 - precision: 0.0000e+00 - recall: 0.0000e+00 - auc: 0.7139 - val_loss: 0.3415 - val_binary_accuracy: 0.8979 - val_precision: 0.0000e+00 - val_recall: 0.0000e+00 - val_auc: 0.7410 - 445s/epoch - 2s/step

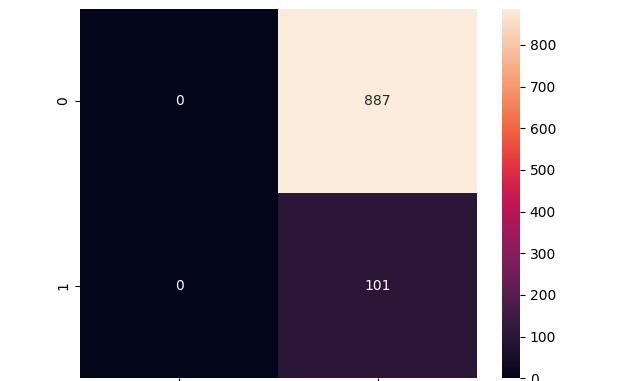

But when I asked scikit-learn for my confusion matrix, it gave me the image above, which explains why my model cannot predict correctly.

For some context, I am building a skin cancer detection app and used the well-known HAM10000 dataset, which is hosted on Harvard Dataverse. The dataset is extremely imbalanced, with far more lesions labeled benign than malignant.
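A minimal sketch of what balancing class weights can look like for an imbalanced dataset. It assumes the inverse-frequency heuristic (the formula scikit-learn documents for `class_weight='balanced'`); the label counts are made up for illustration and are not the real HAM10000 proportions, and `balanced_class_weights` is a hypothetical helper.

```python
import numpy as np

def balanced_class_weights(labels):
    """Inverse-frequency weights: n_samples / (n_classes * count_per_class)."""
    labels = np.asarray(labels)
    counts = np.bincount(labels)          # samples per class, indexed by class id
    n_classes = len(counts)
    weights = len(labels) / (n_classes * counts)
    return {cls: float(w) for cls, w in enumerate(weights)}

# Hypothetical toy labels: 90 benign (0) and 10 malignant (1).
toy_labels = np.array([0] * 90 + [1] * 10)
class_weights = balanced_class_weights(toy_labels)
print(class_weights)
```

A dictionary of this shape is what Keras accepts as the `class_weight` argument of `fit()`, so the rare class contributes more to the loss.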

This means that my model’s precision and recall, which are the most important metrics for a biotech AI model, are close or equal to 0. After validating and testing the model, I got the following metrics (in the same order as above: loss, binary accuracy, precision, recall, AUC):
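To illustrate how accuracy can look fine while precision and recall sit at 0, here is a small sketch with made-up labels (not my real data). It assumes a model that always predicts "benign" and uses scikit-learn's `confusion_matrix`, `precision_score`, and `recall_score`.

```python
import numpy as np
from sklearn.metrics import confusion_matrix, precision_score, recall_score

# Hypothetical toy data: 90 benign (0) and 10 malignant (1) samples.
y_true = np.array([0] * 90 + [1] * 10)
# A model that always predicts "benign".
y_pred = np.zeros_like(y_true)

# Rows are true classes, columns are predicted classes.
cm = confusion_matrix(y_true, y_pred, labels=[0, 1])
tn, fp, fn, tp = cm.ravel()

accuracy = (tp + tn) / cm.sum()
# zero_division=0 avoids a warning when no positive predictions exist.
precision = precision_score(y_true, y_pred, zero_division=0)
recall = recall_score(y_true, y_pred, zero_division=0)

print(cm)
print(f"accuracy={accuracy:.2f} precision={precision:.2f} recall={recall:.2f}")
```

In this toy case every malignant sample ends up as a false negative, so accuracy simply mirrors the share of benign samples.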

Validation Results: [0.31469643115997314, 0.8958333134651184, 0.0, 0.0, 0.7172790765762329]

Test Results: [0.3305717408657074, 0.890625, 0.0, 0.0, 0.6953222751617432]

But the picture changed after I ran the following commands:

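    from sklearn.metrics import precision_score, recall_score

    # evaluate() returns the loss followed by the compiled metrics
    evaluation_results = loaded_model.evaluate(test_dataset, steps=test_size // batch_size, verbose=0)

    # true_labels and predicted_labels are assumed to be collected from test_dataset beforehand
    # average='weighted' averages the per-class scores, weighted by class support
    precision = precision_score(true_labels, predicted_labels, average='weighted')
    recall = recall_score(true_labels, predicted_labels, average='weighted')
    accuracy = evaluation_results[1]  # index 1 is binary accuracy here

    print(f"Accuracy: {accuracy}")
    print(f"Precision: {precision}")
    print(f"Recall: {recall}")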

Accuracy: 0.8896484375

Precision: 0.7933743999940139

Recall: 0.8907156673114119
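One thing worth checking when comparing these numbers: `average='weighted'` in scikit-learn averages the per-class scores weighted by class support, while Keras's `Precision` and `Recall` metrics, as far as I understand their defaults, score only the positive class at a 0.5 threshold. The sketch below uses made-up labels (an assumption, not my test set) to show how far the two definitions can diverge for a model that never predicts the positive class.

```python
import numpy as np
from sklearn.metrics import precision_score, recall_score

# Hypothetical toy data: 90 benign (0), 10 malignant (1), all predicted benign.
y_true = np.array([0] * 90 + [1] * 10)
y_pred = np.zeros_like(y_true)

# Positive-class scores (scikit-learn's default, average='binary').
binary_precision = precision_score(y_true, y_pred, average="binary", zero_division=0)
binary_recall = recall_score(y_true, y_pred, average="binary", zero_division=0)

# Support-weighted average over both classes.
weighted_precision = precision_score(y_true, y_pred, average="weighted", zero_division=0)
weighted_recall = recall_score(y_true, y_pred, average="weighted", zero_division=0)

print(f"binary:   precision={binary_precision:.2f} recall={binary_recall:.2f}")
print(f"weighted: precision={weighted_precision:.2f} recall={weighted_recall:.2f}")
```

With these toy labels the weighted scores stay high while the positive-class scores are zero, and weighted recall is always equal to plain accuracy. Passing `average='binary'` would be the closer comparison to the positive-class metrics.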

When I tested the model manually with some samples, I got fine results; I just needed to re-adjust the threshold. Because I had only trained for 10 epochs, I thought more training could increase the precision and recall. After more than 8 epochs, though, training was stopped by the EarlyStopping callback I used to prevent overfitting.
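Re-adjusting the threshold can be sketched as a simple sweep over candidate cut-offs. The probabilities and labels below are made up for illustration, and `metrics_at_threshold` is a hypothetical helper, not part of TensorFlow or scikit-learn.

```python
import numpy as np

def metrics_at_threshold(y_true, y_prob, threshold):
    """Return (precision, recall) for the positive class at a given cut-off."""
    y_true = np.asarray(y_true)
    y_pred = (np.asarray(y_prob) >= threshold).astype(int)
    tp = int(np.sum((y_pred == 1) & (y_true == 1)))
    fp = int(np.sum((y_pred == 1) & (y_true == 0)))
    fn = int(np.sum((y_pred == 0) & (y_true == 1)))
    # Fall back to 0.0 when a denominator is empty.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall

# Hypothetical predicted probabilities: no malignant sample reaches 0.5.
y_true = np.array([0, 0, 0, 0, 0, 0, 1, 1])
y_prob = np.array([0.05, 0.10, 0.12, 0.20, 0.25, 0.35, 0.30, 0.45])

for threshold in (0.5, 0.3, 0.2):
    p, r = metrics_at_threshold(y_true, y_prob, threshold)
    print(f"threshold={threshold:.1f} precision={p:.2f} recall={r:.2f}")
```

In this toy data the default 0.5 cut-off yields no positive predictions at all, while lower cut-offs trade precision for recall.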

After stopping, my model had higher performance metrics according to the evaluate function, but if you tested it manually you would see that it was a mess. For this model, scikit-learn gave the following values:

Accuracy: 0.693414

Precision: 0.5

Recall: 0.0430215166739

Why? This could be the result of a rushed update from TF’s team to get v2.12 out before the holidays. It could also be that evaluating on an imbalanced dataset distorts the performance metrics.

I compared scikit-learn’s numbers to TF’s multiple times and noticed that scikit-learn was always closer to what I experienced through manual testing. The moral of the story: don’t trust libraries to do everything for you, stay flexible, and use other libraries if needed.
