Missing values are very common in real datasets. Over time, many methods have been proposed to deal with this issue. Usually, they consist of either removing the data that contain missing values or imputing the missing values with some technique.

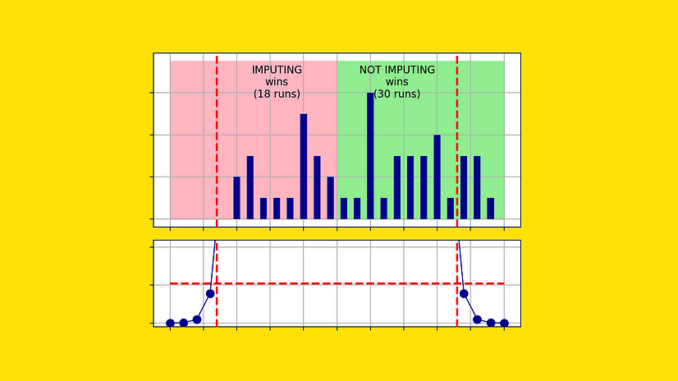

In this article, I will test a third alternative:

Doing nothing.

Indeed, the best models for tabular datasets (namely, XGBoost, LightGBM, and CatBoost) can natively handle missing values. So, the question I will try to answer is:

Can these models handle missing values effectively, or would we obtain a better result with a preliminary imputation?

There seems to be a widespread belief that we must do something about missing values. For instance, I asked ChatGPT what I should do if my dataset contains missing values, and it suggested 10 different ways to get rid of them (you can read the full answer here).

But where does this belief come from?

Usually, these kinds of opinions originate from historical models, particularly linear regression. That is also the case here. Let’s see why.

Suppose that we have this dataset:

If we tried to train a linear regression on these features, we would get an error. The reason is that, to make a prediction, linear regression needs to multiply each feature by a numeric coefficient. If one or more features are missing, it’s impossible to make a prediction for that row.
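To make this concrete, here is a minimal sketch in plain Python. The coefficients, intercept, and feature values are made up for illustration (they do not come from the dataset above), and `predict` is a hypothetical helper, not a library function. It shows that a single missing feature, represented as NaN, turns the whole weighted sum into NaN.

```python
import math

# Hypothetical coefficients and intercept, chosen only for illustration.
coefficients = [2.0, -0.5, 1.5]
intercept = 10.0


def predict(row):
    """Linear prediction: intercept + sum of coefficient * feature."""
    return intercept + sum(c * x for c, x in zip(coefficients, row))


complete_row = [1.0, 4.0, 2.0]
row_with_missing = [1.0, math.nan, 2.0]  # the second feature is missing

complete_prediction = predict(complete_row)
missing_prediction = predict(row_with_missing)

print(complete_prediction)  # a regular number
print(missing_prediction)   # NaN: the missing value contaminates the sum
```

Rather than silently returning NaN, libraries usually reject such input up front. scikit-learn's `LinearRegression`, for example, raises a `ValueError` when the input contains NaN.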

This is why many imputation methods have been proposed. For instance, one of the simplest possibilities is to replace the nulls with the feature’s mean.
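As a rough sketch of mean imputation, the snippet below fills the missing entries of one feature with the mean of its observed values. The feature values and the helper name `impute_with_mean` are assumptions made for this example.

```python
import math
from statistics import mean

# Hypothetical feature column; math.nan marks the missing entries.
feature = [3.0, math.nan, 5.0, 4.0, math.nan]


def impute_with_mean(values):
    """Replace NaN entries with the mean of the observed (non-NaN) values."""
    observed = [v for v in values if not math.isnan(v)]
    fill_value = mean(observed)
    return [fill_value if math.isnan(v) else v for v in values]


imputed = impute_with_mean(feature)
print(imputed)
```

With pandas, the same idea is commonly written as `df.fillna(df.mean())` for a numeric DataFrame `df`.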
