Eye tracking technology has evolved from a niche scientific tool into a key component of products in accessibility, gaming, and augmented reality. Devices like the Apple Vision Pro, which features advanced eye and gesture tracking, have expanded what people expect from this technology. With that in mind, I started a personal project to explore eye tracking by building HUE Vision, a web-based application that uses machine learning and computer vision to detect and track eye movements in real time.

HUE Vision is a web application that demonstrates what eye tracking can do with just a webcam and a few open-source libraries. It captures video through the user’s webcam, tracks eye movement, and predicts gaze direction, displaying the result interactively on the screen. You can try out the demo of the application.

TensorFlow.js: This library brings machine learning directly to the browser, letting you run pre-trained models or train a new model from scratch in JavaScript. HUE Vision uses TensorFlow.js to create and train a neural network that predicts the user’s gaze direction from eye images captured by the webcam.

clmtrackr: An open-source JavaScript library for fitting facial models to faces in videos or images. It tracks the face in real time and reports the positions of facial features such as the eyes, which HUE Vision needs for gaze detection. More about clmtrackr can be found on its GitHub page.

Web technologies: HTML5, CSS3, and JavaScript form the backbone of the project, providing the structure, styling, and interactive elements of the application.

jQuery: Used for DOM manipulation, making it easier to handle events, perform animations, and manage asynchronous requests.

Web APIs: Standard browser APIs handle webcam capture (getUserMedia), canvas rendering, and other browser features, so the application can work directly with the user’s hardware.

Setting Up the Environment

The first step was setting up the HTML and JavaScript environment to stream the webcam video and overlay a canvas where the eye tracking would be visualized. This setup required handling cross-browser compatibility and ensuring that the video stream could be accessed smoothly across different devices.
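As a rough illustration of this step, here is a minimal sketch of starting the webcam and sizing an overlay canvas to match it. The function name `startWebcam`, the requested resolution, and the injectable `mediaDevices` parameter are my assumptions for the example, not necessarily how HUE Vision does it.

```javascript
// Minimal sketch: stream the webcam into a <video> element and size an
// overlay <canvas> to match. `mediaDevices` is injectable so the function
// can be exercised outside a browser; it defaults to the browser's own.
async function startWebcam(video, overlay, mediaDevices = globalThis.navigator?.mediaDevices) {
  if (!mediaDevices || typeof mediaDevices.getUserMedia !== "function") {
    throw new Error("getUserMedia is not available in this browser");
  }

  // Ask for the front-facing camera only; the resolution is a hint
  // (an assumed value), not a hard requirement.
  const stream = await mediaDevices.getUserMedia({
    video: { facingMode: "user", width: { ideal: 640 }, height: { ideal: 480 } },
    audio: false,
  });

  video.srcObject = stream;
  await video.play();

  // Match the overlay to the actual video size so drawn landmarks line up.
  overlay.width = video.videoWidth;
  overlay.height = video.videoHeight;
  return stream;
}
```

In a page, this could be called as `startWebcam(document.getElementById("webcam"), document.getElementById("overlay"))`, where both element ids are placeholders. The promise rejects if the user denies camera access, so the caller should handle that case.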

Integrating TensorFlow.js and clmtrackr

After setting up the basic environment, the next step was integrating TensorFlow.js and clmtrackr. clmtrackr is used to detect facial features and track them continuously. The positions of the eyes detected by clmtrackr are then used to extract the eye regions, which are fed into a TensorFlow.js model.
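To make the eye-region step concrete, here is a small sketch that turns a list of tracked landmark points into a padded bounding box. clmtrackr's `getCurrentPosition()` returns an array of `[x, y]` points; the specific landmark indices and the padding below are assumptions for illustration and should be checked against the face model in use.

```javascript
// Assumed indices of the landmarks around both eyes in clmtrackr's
// default face model. Verify these against the model before relying on them.
const EYE_LANDMARKS = [23, 24, 25, 26, 28, 29, 30, 31];

// Compute a padded rectangle that encloses the chosen landmarks.
// `positions` is an array of [x, y] points in video pixel coordinates.
function eyeBoundingBox(positions, indices = EYE_LANDMARKS, padding = 5) {
  const xs = indices.map((i) => positions[i][0]);
  const ys = indices.map((i) => positions[i][1]);
  const x = Math.min(...xs) - padding;
  const y = Math.min(...ys) - padding;
  return {
    x,
    y,
    width: Math.max(...xs) + padding - x,
    height: Math.max(...ys) + padding - y,
  };
}
```

The resulting box can then be copied into a small, fixed-size canvas with the nine-argument form of `drawImage`, for example `ctx.drawImage(video, box.x, box.y, box.width, box.height, 0, 0, eyeCanvas.width, eyeCanvas.height)`, so the model always receives an image of the same size.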

The model itself consists of convolutional layers that take an eye image as input and predict the screen coordinates where the user is looking. The network is trained directly in the browser.
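A network of this kind can be sketched with the TensorFlow.js layers API. The layer sizes, the 25 by 50 pixel input shape, the learning rate, and the `tanh` output (which assumes targets scaled to the range -1 to 1) are all illustrative assumptions rather than the exact architecture used in HUE Vision. The `tf` object is passed in so the sketch does not depend on how TensorFlow.js is loaded.

```javascript
// Sketch of a small convolutional regressor: eye image in, (x, y) out.
// `tf` is the TensorFlow.js namespace; all hyperparameters are assumed values.
function buildGazeModel(tf, inputShape = [25, 50, 3]) {
  const model = tf.sequential();

  // Convolution + pooling extract local features such as the iris position.
  model.add(tf.layers.conv2d({
    inputShape,
    kernelSize: 5,
    filters: 20,
    strides: 1,
    activation: "relu",
  }));
  model.add(tf.layers.maxPooling2d({ poolSize: [2, 2], strides: [2, 2] }));

  // Flatten the feature maps and regularize before the output layer.
  model.add(tf.layers.flatten());
  model.add(tf.layers.dropout({ rate: 0.2 }));

  // Two outputs: horizontal and vertical gaze, each squashed into [-1, 1].
  model.add(tf.layers.dense({ units: 2, activation: "tanh" }));

  model.compile({ optimizer: tf.train.adam(0.0005), loss: "meanSquaredError" });
  return model;
}
```

Mean squared error is a natural loss here because the task is regression onto two continuous coordinates. Training would then go through `model.fit` with a batch of eye images and their matching targets.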

Training and Interaction

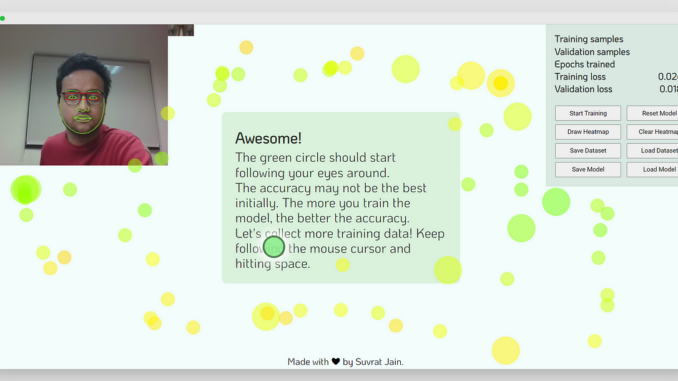

Users train the model in real time by looking at different parts of the screen and pressing a key to record each gaze sample. In general, the more samples are gathered, the more accurate the predictions become. This interactive approach makes the application engaging and lets users watch the model learn as data is collected.
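One way to organize this data collection is sketched below. It assumes each sample pairs an eye image with the screen point the user was looking at when the key was pressed, and that the point is rescaled to the range -1 to 1 so it does not depend on screen size. Both helper names are hypothetical.

```javascript
// Map a pixel position on the screen to [-1, 1] on both axes, so that
// (0, 0) is the center of the screen regardless of its resolution.
function normalizeTarget(x, y, width, height) {
  return [(x / width) * 2 - 1, (y / height) * 2 - 1];
}

// Minimal in-memory dataset of (eye image, gaze target) pairs.
function createGazeDataset() {
  const samples = [];
  return {
    samples,
    // Store one sample and return how many have been collected so far.
    add(eyeImage, x, y, width, height) {
      samples.push({ eyeImage, target: normalizeTarget(x, y, width, height) });
      return samples.length;
    },
  };
}
```

In the browser, a `keydown` listener could call `dataset.add(...)` with the current eye image, the position of whatever marker the user is looking at, and `window.innerWidth` and `window.innerHeight` as the screen size.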

Heatmap Visualization

To understand the model’s effectiveness and areas of improvement, a heatmap visualization was implemented. This feature shows where the model predicts the gaze correctly and where it does not, helping to visualize the model’s performance across different screen areas.
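A heatmap like this can be built by dividing the screen into a grid and averaging the prediction error per cell. The sketch below is one possible approach, assuming targets and predictions are both expressed in the range -1 to 1. The grid helper and the green-to-red color scale are my own illustrative choices.

```javascript
// Accumulate prediction error per screen cell. Coordinates are assumed
// to be normalized to [-1, 1] on both axes.
function createErrorGrid(cols, rows) {
  const errorSum = new Float64Array(cols * rows);
  const sampleCount = new Uint32Array(cols * rows);

  // Convert a normalized coordinate to a cell index, clamped to the grid.
  const toCell = (value, cells) =>
    Math.min(cells - 1, Math.max(0, Math.floor(((value + 1) / 2) * cells)));

  return {
    // Record the Euclidean distance between the true and predicted gaze,
    // filed under the cell that contains the true target.
    add(target, predicted) {
      const index = toCell(target[1], rows) * cols + toCell(target[0], cols);
      errorSum[index] += Math.hypot(predicted[0] - target[0], predicted[1] - target[1]);
      sampleCount[index] += 1;
    },
    // Mean error for a cell, or null if no samples landed there.
    meanError(col, row) {
      const index = row * cols + col;
      return sampleCount[index] === 0 ? null : errorSum[index] / sampleCount[index];
    },
  };
}

// Map an error to a color: green for accurate cells, red for poor ones.
function errorToColor(error, maxError = 1) {
  const t = Math.min(1, Math.max(0, error / maxError));
  return `hsl(${Math.round((1 - t) * 120)}, 100%, 50%)`;
}
```

Each cell can then be painted on a canvas by setting `ctx.fillStyle` to the cell's color and calling `ctx.fillRect` for its rectangle. Cells with a `null` mean error have no data yet and can be left transparent.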

One of the main challenges was ensuring that the model trained quickly and effectively without needing extensive computational resources, which are often limited in browser environments. Optimizing the model to run efficiently in real-time was crucial.

Additionally, feeding video frames into the machine learning model required careful handling of asynchronous JavaScript operations and of tensor lifetimes. Tensors that are never disposed leak memory, which is a common pitfall in applications like this.
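For the memory side of this, TensorFlow.js provides `tf.tidy`, which disposes every intermediate tensor created inside its callback. The sketch below shows one way a per-frame prediction could use it. The function name, the pixel scaling, and the assumption that the model outputs an `(x, y)` pair are illustrative. The `tf` object is passed in explicitly.

```javascript
// Predict a gaze point from an eye image without leaking tensors.
// `tf` is the TensorFlow.js namespace, `model` a trained layers model,
// and `eyeCanvas` a canvas (or image/video element) holding the eye crop.
function predictGaze(tf, model, eyeCanvas) {
  // Everything allocated inside tidy is freed on return, except the
  // tensor that the callback returns.
  const prediction = tf.tidy(() => {
    const image = tf.browser.fromPixels(eyeCanvas);
    // Add a batch dimension and scale pixel values from [0, 255] to [-1, 1].
    const batched = image.expandDims(0).toFloat().div(127.5).sub(1);
    return model.predict(batched);
  });

  // Copy the two output values to plain numbers, then free the result too.
  const [x, y] = prediction.dataSync();
  prediction.dispose();
  return { x, y };
}
```

While developing, logging `tf.memory().numTensors` every few frames is a simple way to confirm that the tensor count stays flat instead of growing.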

HUE Vision shows what can be achieved by combining simple tools with a solid understanding of machine learning and web development. Projects like this pave the way for more interactive and intuitive applications that echo the capabilities of cutting-edge devices like the Apple Vision Pro. They let hobbyists and developers explore computer vision and machine learning without access to advanced, specialized hardware, and they serve as an educational tool and a stepping stone for anyone interested in eye tracking and human-computer interaction.

This project is open to anyone interested in learning about eye tracking, and I encourage you to explore it and perhaps even contribute to its development. The complete codebase and more details can be found on my GitHub.

Connect with me on LinkedIn: https://www.linkedin.com/in/simplysuvi/
