I ran a neural network training experiment that makes a case for using average images as a pretraining step. Applied to the MNIST dataset, this method reduced the compute needed to reach 90% accuracy by about 30%. The idea is to use one aggregated representation per class to give the model an initial “feel” for the data it will encounter, rather than exposing it to the entire dataset right away.

Traditional neural network training on a dataset like MNIST processes every image in the training set, which means 60,000 forward passes per epoch. This experiment challenged that norm by reducing the initial training phase to just ten forward passes per epoch, one for the average image of each digit class.

The key to this approach is that an average image captures the general characteristics of its class. Averaging all the examples of a digit smooths out the quirks of individual samples and keeps the features most of them share, so a single image per class is enough for the model to grasp the basic shape of each digit.

The setup involved creating an average image for each of the ten digits in the MNIST dataset. Each image encapsulates the collective features of its class. The neural network was first trained on these ten images alone, cutting the number of forward passes per epoch from 60,000 to just 10 in the initial phase. It took 28 epochs of this pretraining to bring the model to ~80% accuracy on the test set.
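
As a rough illustration of the averaging step, here is a minimal NumPy sketch. It assumes the average image is the pixel-wise mean of a class, and it uses random stand-in data with MNIST's 28×28 shape rather than the real dataset. The function name `class_average_images` and the variable names are hypothetical, not taken from the experiment's code.

```python
import numpy as np

def class_average_images(images, labels, num_classes=10):
    """Return an array of shape (num_classes, H, W) with the pixel-wise mean image per class."""
    images = np.asarray(images, dtype=np.float32)
    labels = np.asarray(labels)
    averages = np.zeros((num_classes,) + images.shape[1:], dtype=np.float32)
    for c in range(num_classes):
        mask = labels == c
        if mask.any():  # a class with no examples keeps an all-zero image
            averages[c] = images[mask].mean(axis=0)
    return averages

# Stand-in data with MNIST's image shape; swap in the real training images and labels.
rng = np.random.default_rng(0)
demo_images = rng.random((200, 28, 28), dtype=np.float32)
demo_labels = np.arange(200) % 10  # 20 examples of each digit

avg_images = class_average_images(demo_images, demo_labels)
avg_labels = np.arange(10)  # the label of avg_images[c] is simply c
print(avg_images.shape)  # (10, 28, 28)
```

The resulting ten images and their labels form the whole pretraining set, so one epoch is a single batch of ten.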

The experiment was structured in three phases, the third being a baseline for comparison:

- Pretraining on Average Images: The model was exposed to the average images, which provided it with a preliminary understanding of each digit class. (28 epochs, 10 forward passes per epoch, one backward pass per epoch)

- Subsequent Training on the Full Dataset: After this initial phase, the model was further trained on the full MNIST dataset up to 90% accuracy. This step was crucial for the model to learn the finer details and variations within each class. (145 steps, 64 forward passes per step, one backward pass per step)

- Comparison to the Traditional Method: I then trained a second model with the same architecture from scratch on the full dataset, again up to 90% accuracy. This took significantly more compute than the first two phases combined. (214 steps, 64 forward passes per step, one backward pass per step)
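
The first two phases can be sketched roughly as follows. This is only an illustration under loud assumptions: it uses a linear softmax classifier and synthetic data in place of the experiment's architecture and MNIST, and the learning rates, function names, and variable names are all hypothetical. Only the schedule (28 epochs on ten averages, then 145 mini-batch steps of 64) comes from the description above.

```python
import numpy as np

def softmax(logits):
    shifted = logits - logits.max(axis=1, keepdims=True)  # for numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)

def train_step(W, b, x, y, lr):
    """One forward pass per row of x, then one backward pass; updates W and b in place."""
    grad_logits = softmax(x @ W + b)
    grad_logits[np.arange(len(y)), y] -= 1.0  # gradient of cross-entropy w.r.t. logits
    grad_logits /= len(y)
    W -= lr * (x.T @ grad_logits)
    b -= lr * grad_logits.sum(axis=0)

def accuracy(W, b, x, y):
    return float(((x @ W + b).argmax(axis=1) == y).mean())

# Synthetic stand-in for MNIST (assumption): each class is a random prototype plus noise.
rng = np.random.default_rng(0)
prototypes = rng.normal(size=(10, 784))
y_train = rng.integers(0, 10, size=2000)
x_train = prototypes[y_train] + rng.normal(scale=2.0, size=(2000, 784))

# Phase 1: pretrain on the ten class averages (10 forward passes, 1 backward pass per epoch).
averages = np.stack([x_train[y_train == c].mean(axis=0) for c in range(10)])
W, b = np.zeros((784, 10)), np.zeros(10)
for epoch in range(28):
    train_step(W, b, averages, np.arange(10), lr=0.1)
acc_after_pretrain = accuracy(W, b, x_train, y_train)

# Phase 2: continue from the pretrained weights on mini-batches of 64 from the full set.
for step in range(145):
    idx = rng.integers(0, len(x_train), size=64)
    train_step(W, b, x_train[idx], y_train[idx], lr=0.01)
acc_after_finetune = accuracy(W, b, x_train, y_train)

print(acc_after_pretrain, acc_after_finetune)
```

A baseline in the spirit of the third phase would run only the second loop, starting from freshly initialized weights. The synthetic data here is far easier than MNIST, so the printed accuracies say nothing about the real experiment.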

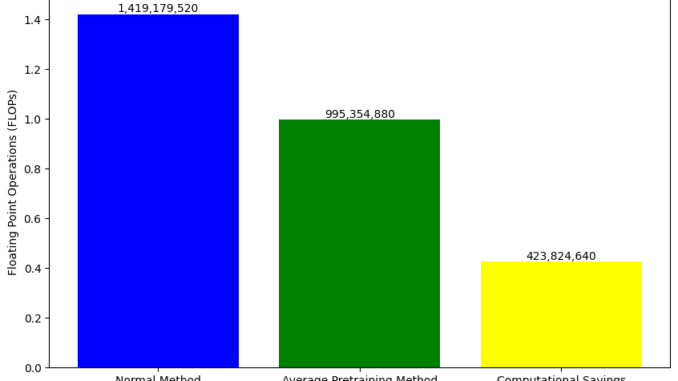

- Computational Efficiency: The average-image pretraining approach required 995,354,880 floating-point operations (FLOPs), compared to 1,419,179,520 FLOPs for the traditional method of training from scratch on the full dataset. This is a 30% reduction in computational load. The saving comes largely from the drop in forward passes, from 13,696 (214 steps × 64) in traditional training to 9,560 (28 × 10 + 145 × 64) in the experimental training.

- Accuracy: The final accuracy was almost identical between the two methods: 90.0% for the new approach and 90.1% for the traditional one. I had expected that pretraining on the averages might cause the model to overfit to the average images rather than the real data, and so make the later training take longer, but it actually sped training up.
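
The arithmetic behind these figures can be checked in a few lines. The step counts, batch size, and FLOP totals are the ones reported in this post. The forward-pass counts assume that every step processes a full batch of 64, and the variable names are only illustrative.

```python
# Step counts and batch size as stated in the three phases.
BATCH_SIZE = 64
pretrain_passes = 28 * 10            # 28 epochs x 10 average images
finetune_passes = 145 * BATCH_SIZE   # 145 steps on the full dataset
baseline_passes = 214 * BATCH_SIZE   # 214 steps from scratch

experimental_passes = pretrain_passes + finetune_passes
print(experimental_passes)  # 9560
print(baseline_passes)      # 13696

# FLOP totals as reported above.
experimental_flops = 995_354_880
baseline_flops = 1_419_179_520

flop_reduction = 1 - experimental_flops / baseline_flops
pass_reduction = 1 - experimental_passes / baseline_passes
print(f"{flop_reduction:.1%}")  # 29.9%
print(f"{pass_reduction:.1%}")  # 30.2%
```

The two reductions are close because forward passes dominate the cost, and the pretraining phase adds only 280 of them.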

Pretraining on average images allows the network to establish a basic understanding of the data before delving into more complex and varied examples. This “big picture” learning phase sets the stage for more detailed and specific learning later on. It’s a more computationally efficient way to introduce the network to the dataset, laying a foundation that is then built upon in the subsequent training phase with the full dataset.

By beginning with a condensed, average representation of classes, we can make the training process more efficient without sacrificing the model’s ability to learn effectively. This method could be especially valuable in situations where computational resources are limited, or when scaling to larger and more complex datasets.
