Introduction

Deploying a machine learning (ML) model is only worthwhile if the model is reliable. TensorFlow Extended (TFX) provides a suite of pipeline components that cover the end-to-end process of ML model development and deployment. Among these, the Evaluator component decides whether a newly trained model is good enough to deploy. This essay examines the Evaluator component within TFX: what it does, why it matters, and how it affects the ML lifecycle.

Quality in deployment begins with rigorous evaluation; for in the realm of machine learning, a model untested is a decision untrusted.

Background

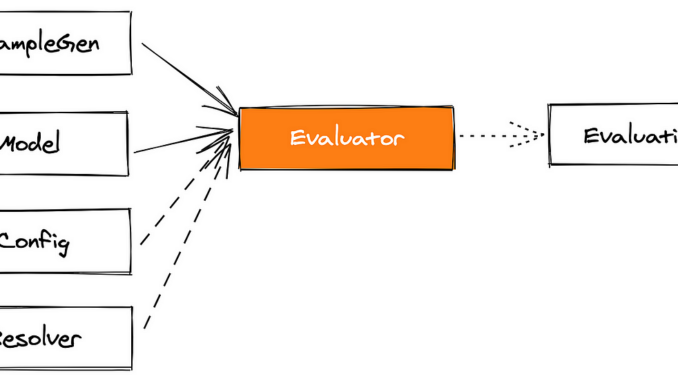

The Evaluator component in TensorFlow Extended (TFX) is a critical part of the machine learning (ML) pipeline. It plays a key role in ensuring the quality and effectiveness of ML models before they are deployed. Here are some key aspects of the Evaluator component:

- Purpose: The primary function of the Evaluator is to provide a way to assess and compare the performance of ML models based on specified metrics. This component is used to determine whether a newly trained model is good enough to replace an existing production model.

- Model Evaluation and Validation: The Evaluator uses TensorFlow Model Analysis (TFMA) to perform detailed model evaluations. It can compare the new model against a baseline model (typically the current production model) using various metrics and validation rules. This comparison is vital for safe and effective model updates.

- Metrics and Evaluation Configurations: Users can specify the metrics they want to use for evaluation. Common metrics include accuracy, precision, recall, and AUC (Area Under the Curve). Custom metrics can also be defined. Evaluation configurations can be set to determine how the evaluations are performed.

- Blessing the Model: One of the unique features of the Evaluator is that it can “bless” a model if it meets the specified criteria. A blessed model is one that passes the evaluation checks and is deemed suitable for production. This blessing mechanism acts as a gatekeeper, ensuring only quality models are deployed.

- Integration in TFX Pipelines: The Evaluator is typically used after the training phase (handled by the Trainer component) and before the deployment phase (handled by the Pusher component). Its position in the TFX pipeline underscores its role as a checkpoint for model quality assurance.

- Customization and Flexibility: TFX’s Evaluator is highly customizable. Users can set up different evaluation strategies, custom metrics, and validation rules depending on their specific needs and the nature of their ML problems.

- Visualization and Analysis Tools: The output of the Evaluator can be visualized and analyzed with TFMA's notebook visualizations, and Fairness Indicators results can also be viewed through a TensorBoard plugin, providing insights into model performance and areas for improvement.

- Importance in Continuous Integration/Continuous Deployment (CI/CD): In a CI/CD setup for machine learning models, the Evaluator plays a crucial role in automating the process of model validation and ensuring that only models that meet certain quality standards are pushed to production.

In summary, the Evaluator component in TFX is essential for assessing the performance of ML models, ensuring quality control, and facilitating the safe and effective deployment of models in production environments. Its integration into TFX pipelines helps automate and streamline the process of model evaluation and validation.

The Role of Evaluator in TFX

At its core, the Evaluator serves as the gatekeeper of model quality. Positioned after the model training phase and before model deployment, it is tasked with the crucial role of assessing and validating model performance. Utilizing TensorFlow Model Analysis (TFMA), the Evaluator applies a series of metrics and validation rules to gauge the efficacy of a model, comparing it against established benchmarks or previous models. This comparison is vital for ensuring continuous improvement in deployed models.

Metrics and Evaluation Strategies

One of the strengths of the Evaluator lies in its flexibility and customization. Users can define a range of metrics, such as accuracy, precision, recall, and Area Under the Curve (AUC), tailored to the specific needs of their application. Custom metrics can also be introduced, allowing for bespoke evaluation strategies that align with unique business objectives or data characteristics. This versatility ensures that the Evaluator can adapt to a wide array of ML scenarios.
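To make the metrics named above concrete, here is a minimal pure-Python sketch of how accuracy, precision, recall, and AUC can be computed for a binary classifier. It is an illustration only, not how TFMA computes them internally; the function names and the sample data are assumptions made for this example.

```python
from typing import Sequence, Tuple


def confusion_counts(labels: Sequence[int], scores: Sequence[float],
                     threshold: float = 0.5) -> Tuple[int, int, int, int]:
    """Return (tp, fp, tn, fn) for binary labels and predicted scores."""
    tp = fp = tn = fn = 0
    for y, s in zip(labels, scores):
        predicted = 1 if s >= threshold else 0
        if predicted == 1 and y == 1:
            tp += 1
        elif predicted == 1 and y == 0:
            fp += 1
        elif predicted == 0 and y == 0:
            tn += 1
        else:
            fn += 1
    return tp, fp, tn, fn


def accuracy(labels, scores, threshold=0.5) -> float:
    tp, fp, tn, fn = confusion_counts(labels, scores, threshold)
    return (tp + tn) / (tp + fp + tn + fn)


def precision(labels, scores, threshold=0.5) -> float:
    tp, fp, _, _ = confusion_counts(labels, scores, threshold)
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(labels, scores, threshold=0.5) -> float:
    tp, _, _, fn = confusion_counts(labels, scores, threshold)
    return tp / (tp + fn) if (tp + fn) else 0.0


def auc(labels, scores) -> float:
    """Probability that a random positive outscores a random negative.

    Ties count as half a win. This pairwise form is quadratic in the number
    of examples, so it is only suitable for small illustrations.
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Made-up labels and scores, for illustration only.
labels = [1, 0, 1, 1, 0, 0]
scores = [0.9, 0.4, 0.6, 0.3, 0.7, 0.1]
for name, fn in [("accuracy", accuracy), ("precision", precision),
                 ("recall", recall), ("auc", auc)]:
    print(name, round(fn(labels, scores), 3))
```

Accuracy, precision, and recall depend on the decision threshold, while AUC summarizes ranking quality across all thresholds, which is one reason evaluation configs often track more than one metric.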

The Model ‘Blessing’ Process

A distinctive feature of the Evaluator is its ability to ‘bless’ a model. This process involves determining whether a new model meets or exceeds the performance of its predecessor based on the defined criteria. If a model is ‘blessed’, it is deemed suitable for deployment; otherwise, it is withheld, prompting further refinement. This mechanism provides a systematic and automated approach to maintaining high standards in model performance.
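The blessing decision can be sketched as a small rule check. The code below is a simplified illustration, not the Evaluator's actual implementation: `MetricRule` and `is_blessed` are hypothetical names, every metric is assumed to be "higher is better", and the comparison against the baseline is simply skipped when no baseline exists.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class MetricRule:
    """Hypothetical validation rule (not a TFX or TFMA class)."""
    lower_bound: Optional[float] = None      # absolute floor for the metric
    min_improvement: Optional[float] = None  # required (candidate - baseline)


def is_blessed(candidate: Dict[str, float],
               baseline: Optional[Dict[str, float]],
               rules: Dict[str, MetricRule]) -> bool:
    """Return True only if the candidate passes every rule."""
    for name, rule in rules.items():
        value = candidate[name]
        # Absolute check: the metric must clear a fixed floor.
        if rule.lower_bound is not None and value < rule.lower_bound:
            return False
        # Relative check: the metric must not regress against the baseline.
        # Skipped when there is no baseline (for example, the first model).
        if rule.min_improvement is not None and baseline is not None:
            if value - baseline[name] < rule.min_improvement:
                return False
    return True


rules = {"auc": MetricRule(lower_bound=0.7, min_improvement=0.0)}

print(is_blessed({"auc": 0.82}, {"auc": 0.80}, rules))  # better than baseline
print(is_blessed({"auc": 0.78}, {"auc": 0.80}, rules))  # regression
print(is_blessed({"auc": 0.65}, None, rules))           # below the floor
```

Combining an absolute floor with a relative check covers two different failure modes: a model that is simply not good enough, and a model that is acceptable in isolation but worse than what is already in production.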

Integrating Evaluator in ML Workflows

The integration of the Evaluator in TFX pipelines highlights its role as a critical checkpoint. It bridges the gap between model training and deployment, embedding a layer of quality assurance in the ML workflow. This integration is especially beneficial in Continuous Integration/Continuous Deployment (CI/CD) environments, where automated pipelines facilitate rapid and frequent model updates.
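The checkpoint role described above can be illustrated with a toy simulation of repeated pipeline runs. This is a sketch under simplifying assumptions: each number stands in for the AUC of one freshly trained model, `evaluate` and `run_pipeline` are hypothetical stand-ins for the Evaluator and for the Trainer-Evaluator-Pusher sequence, and no TFX code is involved.

```python
from typing import List, Optional


def evaluate(candidate_auc: float, production_auc: Optional[float],
             floor: float = 0.7) -> bool:
    """Stand-in for the Evaluator: return True to bless the candidate."""
    if candidate_auc < floor:
        return False
    # With no production model yet, clearing the floor is enough.
    return production_auc is None or candidate_auc >= production_auc


def run_pipeline(candidate_aucs: List[float]) -> List[float]:
    """Simulate successive runs; return the AUCs of models that were pushed."""
    pushed: List[float] = []
    production_auc: Optional[float] = None
    for auc in candidate_aucs:       # each value stands in for one Trainer run
        if evaluate(auc, production_auc):
            production_auc = auc     # stand-in for the Pusher deploying it
            pushed.append(auc)
    return pushed


history = run_pipeline([0.65, 0.74, 0.72, 0.81])
print(history)  # [0.74, 0.81]
```

In this run the first candidate is rejected for missing the floor and the third for regressing against production, so frequent automated retraining never makes the deployed model worse by this measure.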

Visualization and Analytical Tools

The output generated by the Evaluator can be visualized and analyzed with TFMA's notebook visualizations, and Fairness Indicators results can also be viewed through a TensorBoard plugin. These insights are instrumental in identifying areas for model improvement and understanding performance nuances. Such analytical capabilities empower data scientists and engineers to make informed decisions about model adjustments and enhancements.
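One performance nuance that this kind of analysis can surface is a metric that looks fine overall but differs across subsets (slices) of the data. The sketch below computes accuracy per slice in plain Python. It is illustrative only: `accuracy_by_slice`, the row layout, and the sample rows are assumptions, and the keys use an empty tuple for the overall slice and `((feature, value),)` for a single-feature slice.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

SliceKey = Tuple[Tuple[str, str], ...]


def accuracy_by_slice(rows: List[dict], slice_feature: str,
                      threshold: float = 0.5) -> Dict[SliceKey, float]:
    """Compute accuracy overall and for each value of `slice_feature`.

    Each row is expected to have "label" (0 or 1), "score" (float), and the
    slicing feature.
    """
    correct: Dict[SliceKey, int] = defaultdict(int)
    total: Dict[SliceKey, int] = defaultdict(int)
    for row in rows:
        predicted = 1 if row["score"] >= threshold else 0
        hit = int(predicted == row["label"])
        # Every row counts toward the overall slice and its own feature slice.
        for key in ((), ((slice_feature, row[slice_feature]),)):
            correct[key] += hit
            total[key] += 1
    return {key: correct[key] / total[key] for key in total}


# Made-up rows, for illustration only.
rows = [
    {"group": "a", "label": 1, "score": 0.9},
    {"group": "a", "label": 0, "score": 0.2},
    {"group": "b", "label": 1, "score": 0.4},
    {"group": "b", "label": 0, "score": 0.3},
]

result = accuracy_by_slice(rows, "group")
for key, value in result.items():
    print(key, value)
```

Here the overall accuracy hides the fact that one group is served noticeably worse than the other, which is exactly the kind of gap a sliced view is meant to expose.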

The Evaluator’s Impact on ML Best Practices

The inclusion of the Evaluator in TFX pipelines fosters a culture of quality and reliability in ML model deployment. It encourages the adoption of best practices, such as rigorous model validation and performance monitoring. By automating these processes, the Evaluator significantly reduces the risk of deploying subpar models, thereby increasing trust in ML solutions.

Code

A complete Evaluator example requires a full TFX pipeline with data ingestion, training, and a fair amount of environment setup. The example below is deliberately simpler: it runs TensorFlow Model Analysis (TFMA), the library the Evaluator uses for its evaluations, directly on a pandas DataFrame. It should still give you a good starting point.

First, we download the law school bar passage (LSAT) dataset. It already contains a column of model predictions (dnn_bar_pass_prediction), so no model is trained in this example.

Then, we keep only the columns needed for the analysis and convert the categorical columns to strings.

After that, we define an evaluation config that requests the AUC and Fairness Indicators metrics, sliced by the race1 feature as well as over the whole dataset, and run the analysis with tfma.analyze_raw_data.

Finally, we render the Fairness Indicators widget and print the metric values for the overall slice and for one group.

Please note that this example is a basic illustration and might need adjustments based on your specific environment and requirements. In particular, the Fairness Indicators widget is designed to render inside a notebook environment.

import os

import tempfile

import pandas as pd

import urllib.request

import pprint

import tensorflow_model_analysis as tfma

from google.protobuf import text_format

import tensorflow as tf

tf.compat.v1.enable_v2_behavior()

# Download the LSAT dataset and set up the required filepaths.

_DATA_ROOT = tempfile.mkdtemp(prefix='lsat-data')

_DATA_PATH = 'https://storage.googleapis.com/lawschool_dataset/bar_pass_prediction.csv'

_DATA_FILEPATH = os.path.join(_DATA_ROOT, 'bar_pass_prediction.csv')

data = urllib.request.urlopen(_DATA_PATH)

_LSAT_DF = pd.read_csv(data)

# To simplify the case study, we will only use the columns that will be used for

# our model.

_COLUMN_NAMES = [

'dnn_bar_pass_prediction',

'gender',

'lsat',

'pass_bar',

'race1',

'ugpa',

]

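# dropna() returns a new DataFrame, so the result must be assigned back.

# Only rows with missing values in the columns we use are dropped.

_LSAT_DF = _LSAT_DF.dropna(subset=_COLUMN_NAMES)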

_LSAT_DF['gender'] = _LSAT_DF['gender'].astype(str)

_LSAT_DF['race1'] = _LSAT_DF['race1'].astype(str)

_LSAT_DF = _LSAT_DF[_COLUMN_NAMES]

_LSAT_DF.head()

# Specify Fairness Indicators in eval_config.

eval_config = text_format.Parse("""

model_specs {

prediction_key: 'dnn_bar_pass_prediction',

label_key: 'pass_bar'

}

metrics_specs {

metrics {class_name: "AUC"}

metrics {

class_name: "FairnessIndicators"

config: '{"thresholds": [0.50, 0.90]}'

}

}

slicing_specs {

feature_keys: 'race1'

}

slicing_specs {}

""", tfma.EvalConfig())

# Run TensorFlow Model Analysis.

eval_result = tfma.analyze_raw_data(

data=_LSAT_DF,

eval_config=eval_config,

output_path=_DATA_ROOT)

# Render Fairness Indicators.

tfma.addons.fairness.view.widget_view.render_fairness_indicator(eval_result)

pp = pprint.PrettyPrinter()

print("Slices:")

pp.pprint(eval_result.get_slice_names())

print("\nMetrics:")

pp.pprint(eval_result.get_metric_names())

baseline_slice = ()

black_slice = (('race1', 'black'),)

print("Baseline metric values:")

pp.pprint(eval_result.get_metrics_for_slice(baseline_slice))

print("Black metric values:")

pp.pprint(eval_result.get_metrics_for_slice(black_slice))

This code runs TensorFlow Model Analysis end to end on the LSAT dataset, using the predictions that ship with the data rather than a newly trained model. The final steps render the Fairness Indicators visualization and print the metric values for the overall dataset and for one slice. You can extend this with your own models, larger datasets, and more detailed analysis as needed.

Conclusion

In conclusion, the Evaluator component of TFX is more than just a tool; it is a cornerstone in the reliable and efficient deployment of ML models. Its ability to rigorously assess model performance, coupled with its flexibility and integration into automated workflows, makes it an indispensable asset in the ML ecosystem. As ML continues to grow in complexity and application, the Evaluator stands as a testament to the importance of quality and precision in the field of machine learning.
